Posts: 784

Days Won: 10

PJM_labview last won the day on July 25 2018

PJM_labview had the most liked content!

Profile Information

Gender: Male

LabVIEW Information

Version: LabVIEW 2020

Since: 1998

Contact Methods

- Company Website


PJM_labview's Achievements


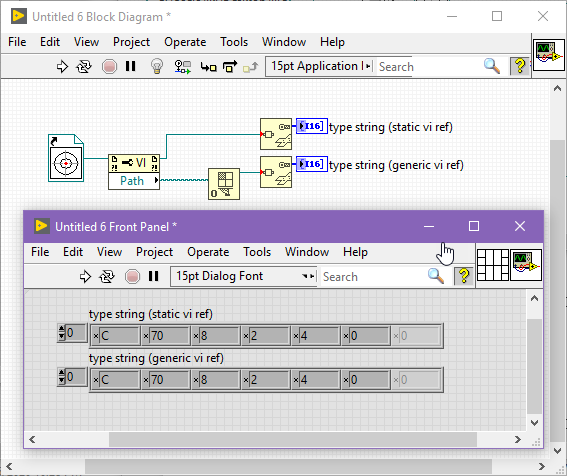

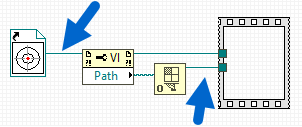

Thanks for all the answers. While both types are identical (a non-strict static VI reference and a non-strict generic VI reference), they do not behave identically. For instance, invoking the Run VI method on the first one (the static VI reference) with the Auto Dispose Ref flag set to TRUE will not dispose of the reference (which makes sense to me), whereas doing the same thing on the latter (a generic VI reference obtained with Open VI Reference) will dispose of the reference (which again makes sense to me). In conclusion, knowing which kind of reference is passed in (to a subVI) has some benefit that could be leveraged.


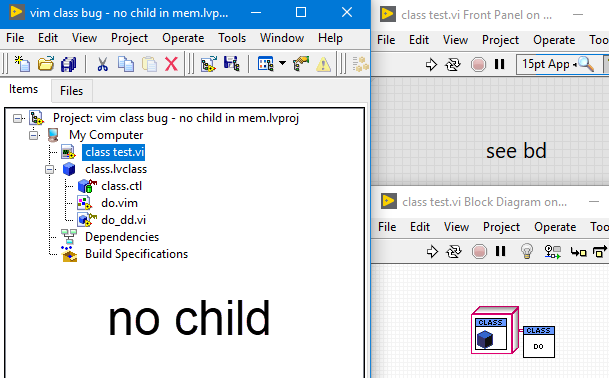

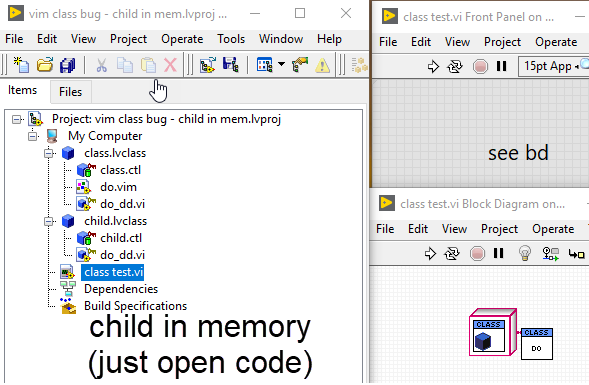

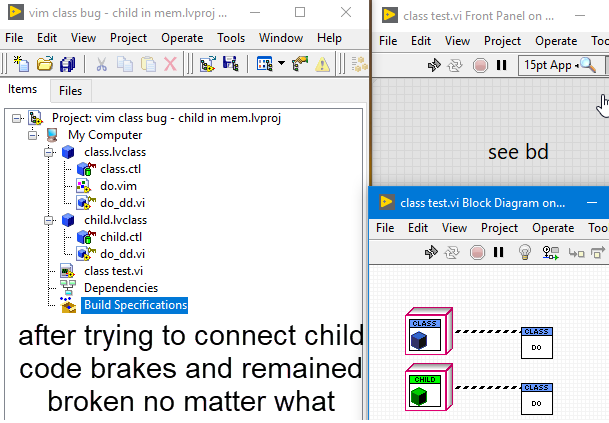

LabVIEW 2020: I am seeing inconsistent behavior with a public VIM calling a protected dynamic dispatch member. The call is not broken as long as no child class has been created or loaded into memory. Once a child has been created (and the override created), trying to reconnect the call to use the child breaks the wire (at that point I am unclear what the desired behavior is).

The call is not broken (but the converted VIM is broken with a scope error). Also, the code above runs fine (and so does the protected dynamic dispatch VI).

Everything seems fine (but the converted VI is still broken).

The code is broken and cannot be "unbroken".

class vim bug.zip


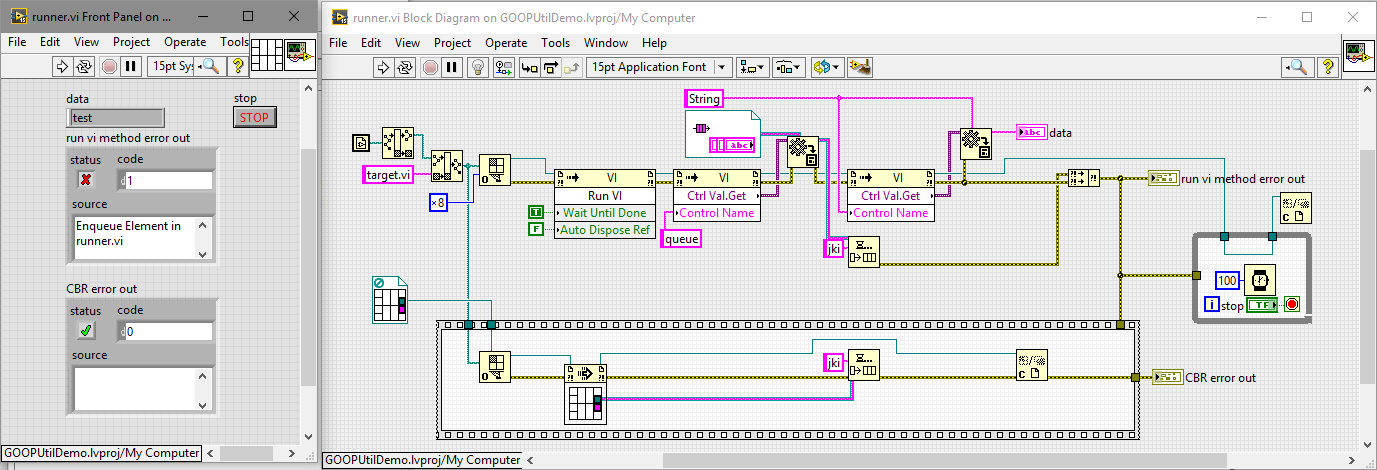

Use case: The selection of the target VI will not be under my control, so I have no knowledge of it ahead of time (which means I can't use a CBR). I can detect other potential issues with the Run VI method (e.g., the VI being reserved for execution) through the "Open VI Reference" primitive, but I cannot detect lifetime-related issues (shown in the example). I could script a wrapper (at edit time) around the target to make a static call (somewhat similar to what the Actor Framework does with message classes), but I would rather avoid this if I can, as it introduces various complications.


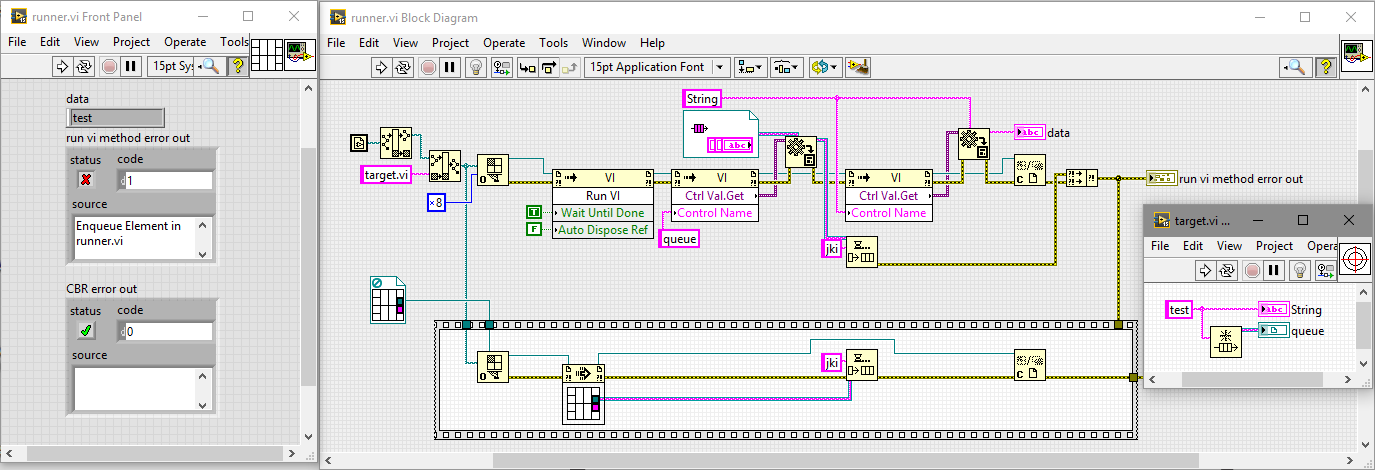

The lifetime of a VI launched by the Run VI method is managed by LabVIEW (meaning the caller does not own the lifetime). In practice, as soon as the target VI terminates, LabVIEW disposes of the resources created by the target, which, among other things, causes every reference created within the target's scope to become invalid (see attached example). I have a use case where this is undesirable, and I would love to have the flexibility of the Run VI method (generic access to the controls on the target VI) while keeping ownership of the VI call chain in the launcher (as a CBR does). Note: as far as I know, what I am asking is not possible, but I would love to be told otherwise. Thanks, PJM. Cross-posted on the NI forum. Attached: rvm.zip


Allocating Host (PC) Memory to do a DMA Transfer

PJM_labview replied to PJM_labview's topic in Hardware

Thanks RolfK. I was actually hoping that you would chime in on this topic. We were suspecting something along the lines of what you described (a virtual memory address not being appropriate for the DMA transfer). We will move forward and find out how to lock the memory. Thanks again.

Tagged with: labview memory manager, dma (and 2 more)

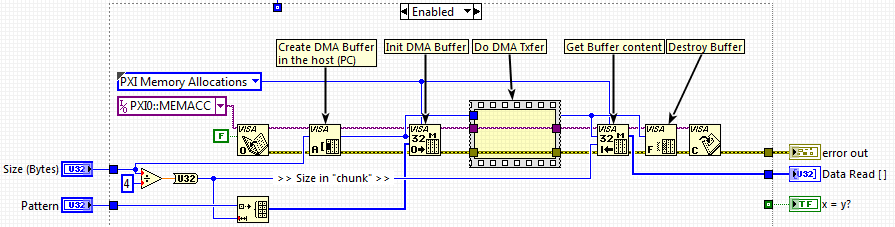

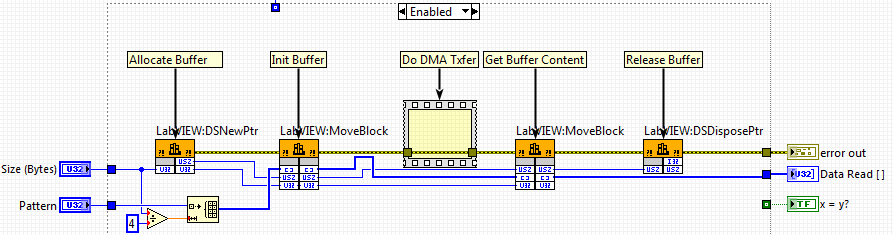

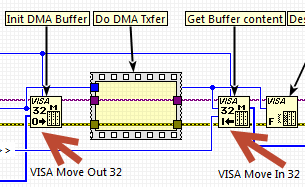

Hi,

We have an application where we need a custom PCIe board to transfer data to the PC using DMA. We are able to get this to work using the NI-VISA Driver Wizard for PXI/PCI boards. The recommended approach is to have VISA allocate memory on the host (PC) and have the PCIe board write to it (as seen below). While this approach works well, the memory VISA can allocate for this task is quite limited (around 1-2 MB), and we would like to expand it to tens of MB.

Note: The documentation (and the help available on the web) regarding these advanced VISA functions (for instance "VISA Move Out 32" and "VISA Move In 32") is sparse. If someone has deep knowledge of these, please feel free to share how we could allocate more memory.

Since we are not able to allocate more memory using the VISA functions at this time, we investigated doing the same operation using the LabVIEW Memory Manager functions, which allow us to allocate much larger memory blocks. Below is the resulting code. Unfortunately, while we can validate that reading from and writing to this memory works well outside the context of a DMA transfer, the DMA transfer itself does NOT work (although the board thinks it did and the computer does not crash). We are wondering why this is not working and would welcome any feedback.

Note: The DMA transfer implemented on the board requires contiguous memory to succeed. I believe that the LabVIEW Memory Manager functions do allocate contiguous memory, but correct me if I am wrong.

To troubleshoot this, I allocated memory using the LabVIEW Memory Manager functions and tried to read it back using VISA, and I got a "wrong offset" error (note: this test may not be significant). Another data point: while the documentation for DSNewPtr does not talk about DMA transfers, the one for DSNewAlignedHandle does. Experiments using LabVIEW Memory Manager handles have not gotten us anywhere either.

We would welcome any feedback regarding either approach and about the capabilities of the LabVIEW Memory Manager functions in this use case. Thanks in advance.

PJM

Note: We are using LabVIEW 2014, if that matters at all.
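For context on why a block that reads and writes correctly from LabVIEW can still fail as a DMA target: a large user-mode allocation (such as one from DSNewPtr) is contiguous in the process's virtual address space, but the operating system backs it with individual pages that are generally not physically contiguous and can be paged out unless locked. A bus-master device works with physical (bus) addresses, so it cannot treat such a buffer as one contiguous region. The Python sketch below only does the page arithmetic, assuming a 4 KiB page size (typical on x86/x64); the function name `pages_spanned` and the example addresses are hypothetical and not part of any LabVIEW or VISA API.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages, typical on x86/x64


def pages_spanned(addr: int, length: int, page_size: int = PAGE_SIZE) -> int:
    """Count the pages touched by the virtual byte range [addr, addr + length)."""
    if length <= 0:
        return 0
    first_page = addr // page_size
    last_page = (addr + length - 1) // page_size
    return last_page - first_page + 1


if __name__ == "__main__":
    MB = 1024 * 1024
    # Hypothetical start addresses: one page-aligned, one offset by 16 bytes.
    print(pages_spanned(0x10000000, 32 * MB))  # 8192
    print(pages_spanned(0x10000010, 32 * MB))  # 8193
```

A 32 MB buffer therefore covers roughly 8192 separate pages, and nothing guarantees that their physical frames are adjacent. Drivers typically handle this either with a physically contiguous buffer allocated on the kernel side or with a scatter-gather list built from locked pages.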


I have faced the same issue in the past and did not find a solution. It just occurred to me, though: could you subpanel the background monitoring asynchronous VI into your probe and see whether the subpaneled VI's UI updates (even though the probe's does not)? I am not sure this will work, but it might be worth a quick try.


Efficient (Semi) Large Array Data Set Manipulation

PJM_labview replied to PJM_labview's topic in LabVIEW General

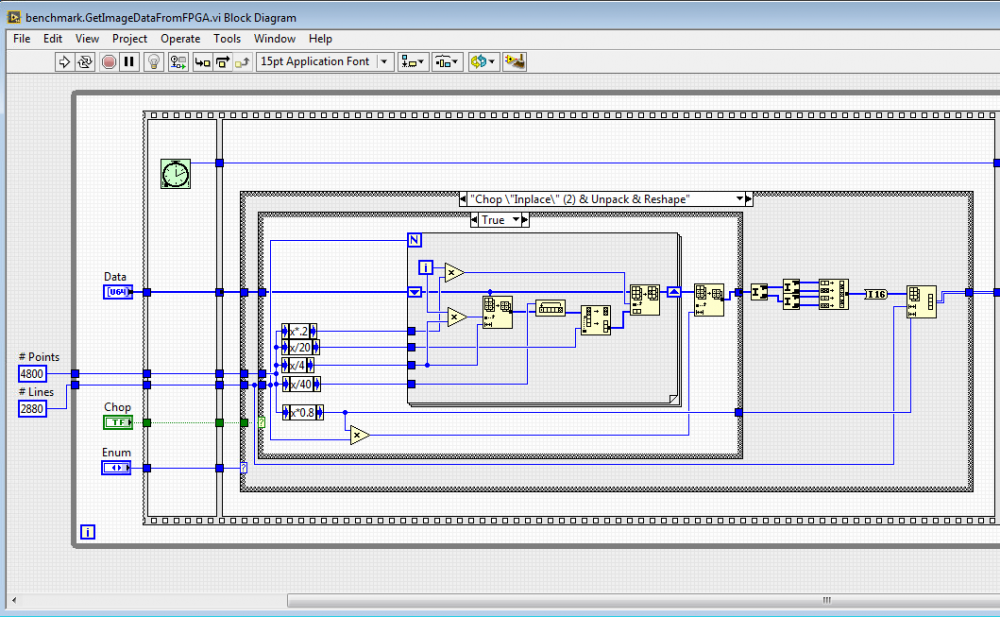

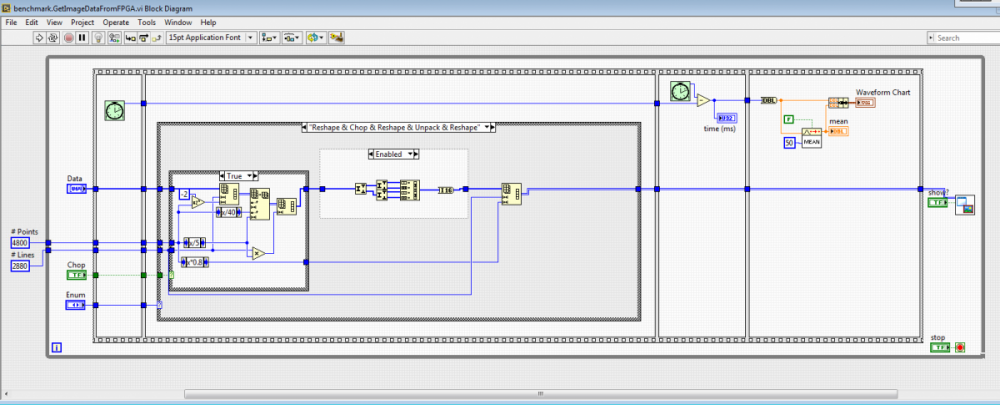

Just closing the loop on this topic. Below is a screenshot of the fastest solution to date, which includes the buffer allocation (array creation) as part of the code being benchmarked. Thanks for everyone's help. PJM

Efficient (Semi) Large Array Data Set Manipulation

PJM_labview replied to PJM_labview's topic in LabVIEW General

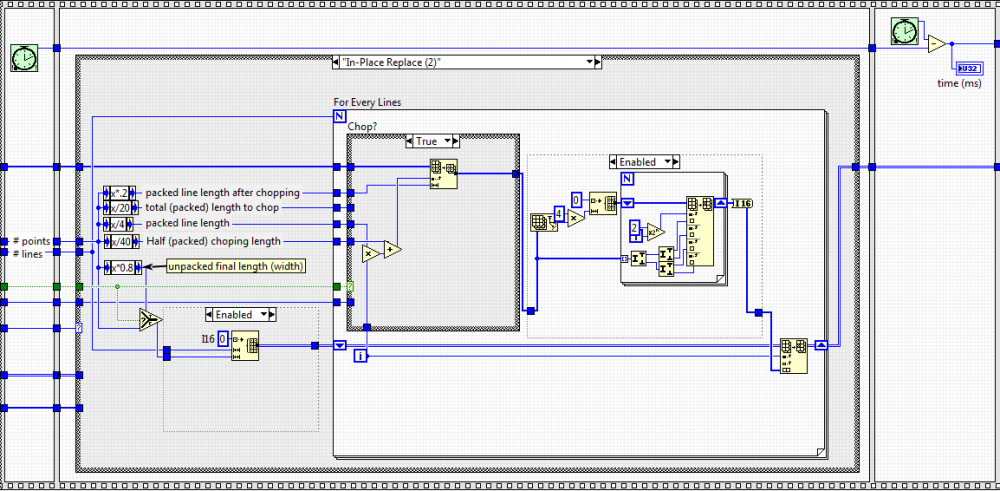

I down-converted it to 2013 and 2011. Since that post yesterday, I have a slightly faster version that operates on each line (as you suggested) [see image below]. Also, typecasting is not faster than split and interleave. This is an interesting suggestion; I will have to give it more thought. Thanks

Hi everyone. I am trying to figure out the most efficient way to manipulate a somewhat large array of data (up to about 120 MB) that I am currently getting from an FPGA. This data represents an image, and it needs to be manipulated before it can be displayed to the user. The data needs to be unpacked from U64 to I16, and some of it needs to be chopped (essentially chopping off 10% on each side of the image, so an 800 x 480 image becomes 640 x 480). I have tried several approaches, and the image below shows the quickest one, but there might be further optimizations that could be done. I am looking forward to seeing what others can come up with.

Note 01: I am including a link to the benchmark VI, which has quite a large image in it, so this VI is about 40 MB.

Note 02: This is cross-posted on the NI forum. Thanks
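The unpack-and-crop operation described above can be sketched in Python to pin down the index arithmetic (this is not the LabVIEW diagram from the post, and pure Python is far too slow for 120 MB of data). It assumes row-major pixel order, four I16 pixels per U64 word with the least-significant 16 bits holding the first pixel, and an image width divisible by 4; the real packing order depends on the FPGA design. It preallocates the output once and processes one line at a time. The function name `unpack_and_crop` is hypothetical.

```python
from array import array


def unpack_and_crop(words, width, height, margin_percent=10):
    """Unpack U64 words into I16 pixels and crop margin_percent of the
    columns from each side of every row.

    Assumptions (hypothetical layout): row-major pixels, four I16 pixels per
    U64 word, least-significant 16 bits first, width divisible by 4.
    """
    if width % 4 or len(words) * 4 != width * height:
        raise ValueError("word count does not match a width x height image")
    margin = width * margin_percent // 100            # columns dropped per side
    new_width = width - 2 * margin
    out = array("h", bytes(2 * new_width * height))  # preallocated I16 buffer
    row = [0] * width                                 # scratch line, reused
    words_per_row = width // 4
    for r in range(height):
        base = r * words_per_row
        for i in range(words_per_row):
            w = words[base + i]
            for k in range(4):
                v = (w >> (16 * k)) & 0xFFFF
                # Reinterpret the 16-bit field as a signed I16 value.
                row[4 * i + k] = v - 0x10000 if v & 0x8000 else v
        # Copy only the kept columns of this line into the output buffer.
        out[r * new_width:(r + 1) * new_width] = array(
            "h", row[margin:margin + new_width])
    return out, new_width


if __name__ == "__main__":
    words = [0] * (800 * 480 // 4)
    pixels, w = unpack_and_crop(words, 800, 480)
    print(w, len(pixels) // w)  # 640 480
```

For an 800 x 480 image with `margin_percent=10`, the margin is 80 columns per side and the result is 640 x 480. Word `r * width // 4 + i` holds pixels `4*i` to `4*i + 3` of row `r`, which is the mapping any faster implementation has to reproduce.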


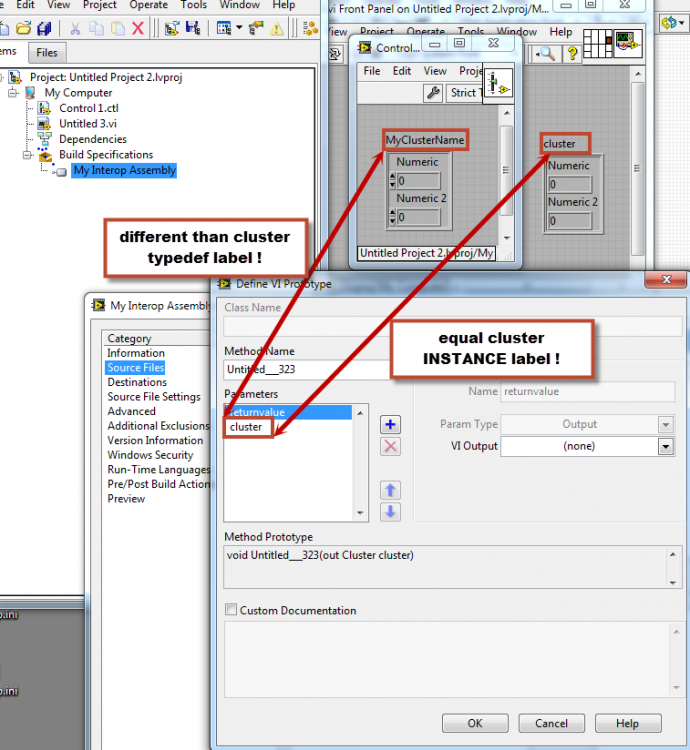

1st oddity: The documentation states the following: "Clusters and enumerations defined as type definitions or strict type definitions—LabVIEW uses the label of the type definition or strict type definition as the name of the .NET structure". In reality, I find this to be incorrect: LabVIEW uses the typedef instance name instead. Am I missing something? Note: I know I can rename it, but there are very good reasons why I would love to have it work as described in the documentation.

2nd oddity: When a typedef is part of a class, it does not show up as such when called from a .NET app. For instance, suppose I have the following in my LabVIEW project:

- A class called shape
- A method in the class called getBound
- A cluster typedef in the class called Bound
- An interop build spec where the assembly namespace is LVInterop

Then, when I call the resulting DLL from C#, I see LVInterop.shape.getBound (the getBound method is part of the shape class, as expected), but I also see LVInterop.Bound for the Bound typedef. How come the Bound type is not part of the class? I would expect LVInterop.shape.Bound.

Any feedback on these two oddities will be very welcome.


Not at all, thanks for doing that!