Posts posted by ShaunR

-

-

Hi,

I connected an instrument (a power meter) through GPIB.

I can see the instrument in MAX, i.e. MAX is showing the GPIB address.

But at the same time it says that the device is not responding to the *IDE? command.

Based on the instrument manual I tried various commands, but it does not respond to any of them.

Any idea what went wrong?

Isn't it "*IDN?" (without quotes)?

You may also be forgetting the termination character, e.g. "*IDN?\r", "*IDN?\n" or "*IDN?\r\n".
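As a rough sketch of the three variants (in Python rather than a LabVIEW diagram, purely for illustration): `with_terminator` is a made-up helper, not part of any VISA or GPIB library, and which terminator the power meter actually expects is something only its manual can confirm.

```python
def with_terminator(command: str, terminator: str = "\n") -> str:
    """Return the command with exactly one terminator appended.

    Hypothetical helper: strips any CR/LF already on the end so the
    terminator is never doubled up, then appends the requested one.
    """
    return command.rstrip("\r\n") + terminator


# Show the exact characters that would be written for each candidate.
for term in ("\r", "\n", "\r\n"):
    print(repr(with_terminator("*IDN?", term)))
```

Printing with `repr` makes the otherwise invisible termination characters visible, which is usually the quickest way to check what is really being sent.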

-

And, hey, Shaun has an encryption package,

I do?

-

Hello,

Shaun, stop reading, this is getting OOP-y

Ok I'll shut up then. Obviously completely over my head

-

I don't use this (yet?) but I feel like it would be better to have your Enqueue VI be polymorphic and have an instance which takes either a 1D array or cluster of strings to facilitate arguments. No precarious parsing and your dequeue always returns two strings, one case and one argument. It seems cleaner. If you need more complex parameters than a string, you could go off the deep end and have the parameter be a variant which is VTD'd by small-footprint helper VIs in specific cases. No muss, no fuss.

Anyone ever tried using explicit parameterization like this?

For reconstitution, I have a separate vi that is basically just a split string with a smaller footprint. This means that I can just cascade the splits to whatever depth is required. I found trying to do it in the actual queue vi meant that you end up either limiting the depth (n outputs), or with array indexing which basically is the same, but with a bigger footprint.

For creating the message, I have just stuck to a string input (the ultimate variant). This has one major benefit over strictly typing and making a polymorphic "translator" in that you can load commands directly from a file (instant scripting). You can create easily maintainable test harnesses (load file then squirt to module) that works for all modules by simply selecting the appropriate file.

My view on polymorphic VIs is that in most cases they basically replicate code, so the "user ease" benefit must be far in excess of the effort, and in my case I don't think it is. I leave that to others to "inherit" from my VIs if they want that feature.

Having arrays for inputs is fine on paper, but in reality it is no different. The user still has to create the array and use concatenate array instead of concatenate string. Arguably he/she doesn't have to add the delimiter, but it's one of those "six of one, half a dozen of the other" cases, since if you just have simple, straight commands, you then have to build an array rather than just type in a string.
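For anyone who prefers text to diagrams, the cascaded split and the "load commands from a file" idea might look roughly like this in Python. It is only a sketch: the `->` delimiter, the command names and the helpers `split_once` and `load_script` are all assumptions made up for the example, not what the actual VIs do.

```python
import io

DELIMITER = "->"  # assumed delimiter, chosen only for this example


def split_once(message, delimiter=DELIMITER):
    """Split off the first field; the remainder can be split again later."""
    head, _, rest = message.partition(delimiter)
    return head, rest


def load_script(lines):
    """Yield non-empty command strings from an iterable of lines (e.g. a file)."""
    for line in lines:
        line = line.strip()
        if line:
            yield line


# A stand-in for a command file on disk (hypothetical commands).
script = io.StringIO("DVM->RANGE->10\nDVM->READ\n")

for command in load_script(script):
    target, rest = split_once(command)      # first split: who is it for
    action, argument = split_once(rest)     # second split: what to do
    print(target, action, argument)
```

Each split only peels off one field, so the depth is decided by whoever consumes the message rather than by the queue code, and the same loop works for any module once a different file is selected.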

-

Top down and bottom ....

<snip>

Ugh. I hate that term. It's meaningless without clarification. What context are you using it in?

Perhaps start a new thread so as not to derail this one? (Although I think we will just end up arguing about semantics.)

-

It sounds like you do something similar to JKI's state machine. Personally I don't like that technique. I think parsing the message name makes the code harder to read. I don't ever include data, arguments, or compose multiple messages as part of the message name. It's only an identifier; it could be replaced with an integer or enum. The path would be in the data part of the message.

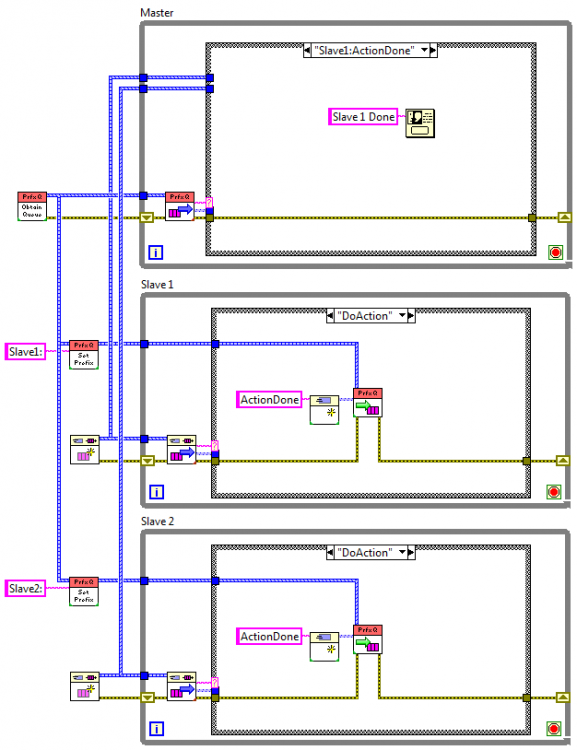

I see no difference in terms of features or function, only in realisation and semantics. You've identified that it is useful to identify the target (and consequently the origin), and whilst you have used "Slave1", "Slave2" etc., I would think that later on you would probably realise that a VI name is far more flexible and transparent (with one caveat).

By the way, I don't consider copy and paste reuse. I consider it "replication" and an "anti-pattern" (if the term can be applied in that sense). If I remember correctly, didn't you suggest (incorrectly) that I would have to copy and paste to extend transport.lib, and use that as an argument against it?

If copy and paste reuse works for you, it's certainly an easier way to go about it. The tradeoffs aren't workable for everybody.

-

I disagree. Patterns are largely independent of the language you're using. Whether or not you need them depends on what you're trying to accomplish. Saying they're not as important in Labview is like saying formal development methodologies aren't important in Labview. Sure, many developers don't need them because the project scopes are relatively small, but their importance increases with project complexity. I think it's more accurate to say patterns aren't as important to most Labview developers rather than they aren't as important to Labview as a language.

I have already explained this in my original post.

Why do you say that? I don't think Labview is more inherently hierarchical than other languages.

Top down design (and to a lesser extent bottom up) are the recommended design architectures. Why would that be?

Maybe because "the diamond" isn't a design pattern? I believe you're describing your app's static dependency tree or perhaps the communication hierarchy, not a design pattern. Design patterns are smaller in scope--they address specific problems people frequently encounter while working with code. Have two components that need to communicate but use incompatible interfaces? Use an adapter. Need to capture the state of a component without exposing its internal details? Use a memento. Stuff like that.

I believe I did say "architecture". Incompatible interfaces are generally an admission that you haven't fully thought about the design. If it's third party code, then you simply wrap it in a subVI which acts as the "adapter", and I use subVIs to capture state without exposing internal details. They are "problems" specific to LVPOOP.

"Diamond" might be considered an architectural pattern, but even then it feels too ambiguous. What aspect of the application is arranged in a diamond? I think it needs more descriptors.

Indeed. I vaguely remember describing it in another thread a while ago whilst I was investigating it. Now it's a de facto consideration in my designs. It's just a way of designing the architecture to maximise modularity and minimise points of interconnectedness (is that a word?).

(Incidentally, adapter code can be written with or without oop. I can't think of a good way to do mementos without objects... maybe encode the component's state as an unreadable bit stream?)

Indicators, shift registers, wires, sub-vis. They all externalise "state". There is (generally, not exclusively) no need for "special" components or design patterns to capture state in labview, unless (of course) you break dataflow. Even then, they are merely idioms.

-

And here's an example of how it would be used:

(I'll have to think about releasing a LapDog.Messaging minor update that includes protected accessors. That would allow you to only override the enqueue methods instead of all of them, but I need to ponder it for a bit.)

------------

It's hard to follow what you're describing. Can you post code?

Uncanny. I haven't really been following the thread (so I may end up trying to eat both feet) but I saw your master/slave post... this looks virtually identical to my standard app designs.

I found that using a colon separator (I started by trying to emulate SCPI command composition) means you have to write more decomposing/error checking code when you start sending paths around. (I eventually settled on "->"). May not be an issue with your stuff, but thought I'd mention it.
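A quick illustration of the problem, sketched in Python (the message layout and the `split_message` helper are invented for the example): a Windows path carries its own colon, so a colon-separated message splits in the wrong place, whereas "->" does not collide with it.

```python
def split_message(message, separator):
    """Naive decomposition: split on every occurrence of the separator."""
    return message.split(separator)


# Hypothetical messages carrying a Windows path as the last field.
message_colon = "FILE:OPEN:C:\\data\\log.txt"
message_arrow = "FILE->OPEN->C:\\data\\log.txt"

print(split_message(message_colon, ":"))   # drive letter is torn off the path
print(split_message(message_arrow, "->"))  # path survives as one field
```

With the colon you end up writing extra code to glue the drive letter back on or to limit the number of splits; with "->" the naive split is enough.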

-

Well, Shaun, I threw you a soft ball and you lobbed it out of the park.

It's called "rounders" over here and it's played exclusively by girls

Reading about Architecture Astronauts made me wonder if that's not part of my problem. I know I need to spend more time on upfront design, but at some point my attempts to keep my software generic and reusable have led me off into spending a lot of time getting more and more abstract in my design. And whenever I sit down to code it up, all that I can think is how overly complicated it all is.

Patterns aren't as important in Labview as they are in other languages. Modularity is far more of a consideration IMHO. With modularity comes re-usability and those patterns relevant to Labview are generally the glue that binds the modules.

However, labview is hierarchical in nature, and in terms of architecture I only use one pattern (now): the diamond. But you won't find it in any books.

As I've said in the past, I don't sling the computer science lingo. So your point that I'm already using designs is a good one. I just don't generally say to myself, "I think I'll use a Producer/Consumer design pattern here." I say, "I ought to feed this loop that's doing a bunch of stuff on command with a queue in another loop." So thanks for all the links to the right names and algorithms. That's important to know.

I use the terms "doodah" and "oojamaflip" quite a lot ("we'll use the messaging doodah for that DVM oojamaflip").

Don't get too hung up on terminology. It's a graphical language after all.

-

-

as the US being the only place I've lived long enough to comment on.

Interesting considering your handle (Asbo).

-

Shamar Thomas

-

Raw refnum data? Does the cluster also have an enum or some such that identifies what the refnum specifically is? That you use to decide internally what bit of code to execute? You're right, that's nothing like an object; it certainly doesn't need an identifying icon. I'm feeling calmer already!

-- James

PS> I just noticed the 3D raised effect in your subVI icons; nice!

No enum. The refnum is converted using the "Variant To Flattened String" to give a string and an array (which are clustered together). The type of refnum is encoded in the array as a type descriptor. The descriptor then decides which piece of code to execute and the flattened data is reconstituted for the particular primitive (TCP, UDP etc). It's just a way of removing the type dependency so that the refnum can be passed from one VI to another independent of the interface.

It's all in the Transport.lib if you want to see how it works.
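As a loose text-language analogy only (this is not how Transport.lib is written, and none of these names come from it): a container holding a type tag plus raw bytes, with the tag selecting the code to run. `OpaqueRef`, `flatten`, `write` and the two writer functions are hypothetical stand-ins for the clustered string/array and the TCP/UDP primitives.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class OpaqueRef:
    """Plain container: a type tag plus raw data, nothing more."""
    type_tag: str   # stand-in for the type descriptor
    payload: bytes  # stand-in for the flattened refnum data


def flatten(type_tag: str, handle: int) -> OpaqueRef:
    """Hide a typed handle behind a type-independent container."""
    return OpaqueRef(type_tag, handle.to_bytes(4, "big"))


def _write_tcp(handle: int, data: bytes) -> str:
    return f"TCP write of {len(data)} bytes on handle {handle}"


def _write_udp(handle: int, data: bytes) -> str:
    return f"UDP write of {len(data)} bytes on handle {handle}"


# The tag decides which piece of code executes.
WRITERS: Dict[str, Callable[[int, bytes], str]] = {
    "TCP": _write_tcp,
    "UDP": _write_udp,
}


def write(ref: OpaqueRef, data: bytes) -> str:
    """Reconstitute the handle and dispatch on the stored type tag."""
    try:
        writer = WRITERS[ref.type_tag]
    except KeyError:
        raise ValueError(f"unsupported reference type: {ref.type_tag!r}") from None
    handle = int.from_bytes(ref.payload, "big")
    return writer(handle, data)


print(write(flatten("TCP", 7), b"hello"))
```

The caller only ever sees `OpaqueRef`, so the same wire can carry either kind of reference without the interface changing.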

After you disagree with me, you can add your kudos to the idea exchange entry. :-)

What's the point? It has been declined with 322 kudos.

-

Well, I certainly can't pretend that isn't a very clear diagram. But that "refnum", that's actually a cluster, is really making my OCOOD act up! It's an Object! It's an Object! Quick, quick, open an icon editor! Aaarrrggghhhhhhhh...

No, it's not (an object). It's just a container like a string, int etc. that contains raw refnum data. Why complicate it by adding 15 new VIs just to read/write to it?

-

I vaguely recall a native implementation (non-DLL) of MySQL developed by ShaunR (I think), if that'd save you from moving to Postgre. Nope, it's SQLite.

The SQLite binaries can be compiled for VxWorks. It's something I looked at (and successfully compiled), but since I don't have a VxWorks device for testing, I couldn't go much further.

-

If you have OCOOD (obsessive compulsive OO disorder), then it is likely you also suffer from I-have-two-primitives-wired-together-here-better-create-a-subVI syndrome.

Nope. That's ARCLD (anally retentive classical labview disorder). Those with OCOOD have to create a class project, sub-vis for all the methods and fill out a multitude of dialogues.

-

If I count right that's 9.5 to 1.5 (2.5 if you count AQ).

Let's look at one of my more recent subVIs:

<snip>

To be devil's advocate, if you're fighting for space to fit yet another not-very-important indicator nested somewhere deep in a tight diagram, then you're not coding as clearly as you could.

-- James

Yup. But my style is to have control labels to the left, indicators to the right and for them to be stacked closely to lessen wire bends. Of course, I don't suffer as much from the "obj" problem.

-


+1 for hating large terminals: a waste of space.

-

Would you care to take a stab at enumerating your understanding of those differences?

Off the top of my head....

P/S: Single server to many clients, single client to many servers (an example of many-to-many).

P/C: Single server to single client (an example of 1-1).

M/S: Single server to many clients, single client to single server (an example of 1-many).

Aggregator: Many servers to single client, single client to single server (an example of many-1).

The list goes on, but the rest are variations on something-to-something, and these too can be broken down further.

That's my understanding at least.

(Oxygen is starting to get a bit thin up here.)

-


Not the same as the template, event structures are lossless transmission types.

Actually, thinking more (briefly), that is not a requirement of a Master/Slave. It is more an implementation of the example. In the same way that Pub/Sub is a subset of client/server, so are M/S and P/C. The differences are in the way that they realise the client/server relationship.

-


I do agree the M/S template doesn't seem to map very well to code I've encountered in the wild.

That's because we generally use the event structure to achieve this.

-

Producer/consumer is 1-1; master/slave is 1-many. Also, a producer/consumer is lossless; master/slave isn't.
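The lossless versus lossy distinction can be sketched in Python (an analogy, not the LabVIEW templates themselves): a queue keeps every element, while a single-slot "latest value" holder, used here as an assumed stand-in for the master/slave hand-off, overwrites whatever has not been read yet. `LatestValue` is a made-up class, and everything runs in one thread to keep the example short.

```python
import queue


class LatestValue:
    """Single-slot holder: a new write overwrites any unread value."""

    def __init__(self):
        self._value = None

    def send(self, value):
        self._value = value

    def read(self):
        return self._value


work_queue = queue.Queue()  # producer/consumer style: every item is kept
latest = LatestValue()      # lossy style: only the newest item survives

# The sender outpaces the receiver: three values arrive before any read.
for sample in (1, 2, 3):
    work_queue.put(sample)
    latest.send(sample)

queued = []
while not work_queue.empty():
    queued.append(work_queue.get())

print(queued)         # every sample, in order
print(latest.read())  # only the most recent sample
```

Which behaviour you want depends on whether the receiver needs every message or just the current state.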

-

You ever used DAQmx? Ever read keys out of an ini file using the VIs in vi.lib? I'm guessing you do do* OOP, just without knowing about it.

* wow - "POOP" and "do do" in the same thread - real mature LAVA!

Just because you use a class, doesn't make your program an object oriented design any more than passing a value from one function to another makes it dataflow.

-


Feelings on Icons for Terminals? (in LabVIEW General)

Nope. It's obsolescence and having to upgrade to get bug fixes that keeps people buying Labview.