Posts: 5,001 · Days Won: 311


Posts posted by ShaunR


2 hours ago, ManuBzh said:

For sure, this must have been debated here over and over... but what are the reasons why X-Controls are banned?

Is it because:

- it's buggy,

- people do not understand their purpose, philosophy or how to code them, and so code them incorrectly, leading to problems?

They aren't banned. They are just very hard to debug when they don't work, and they have some unintuitive behaviours.

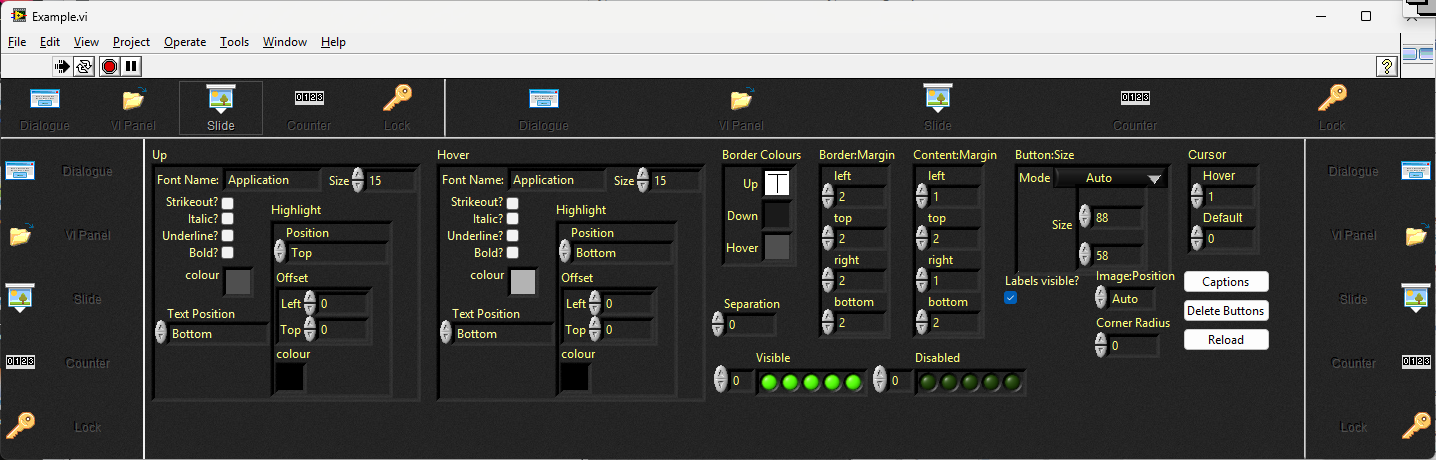

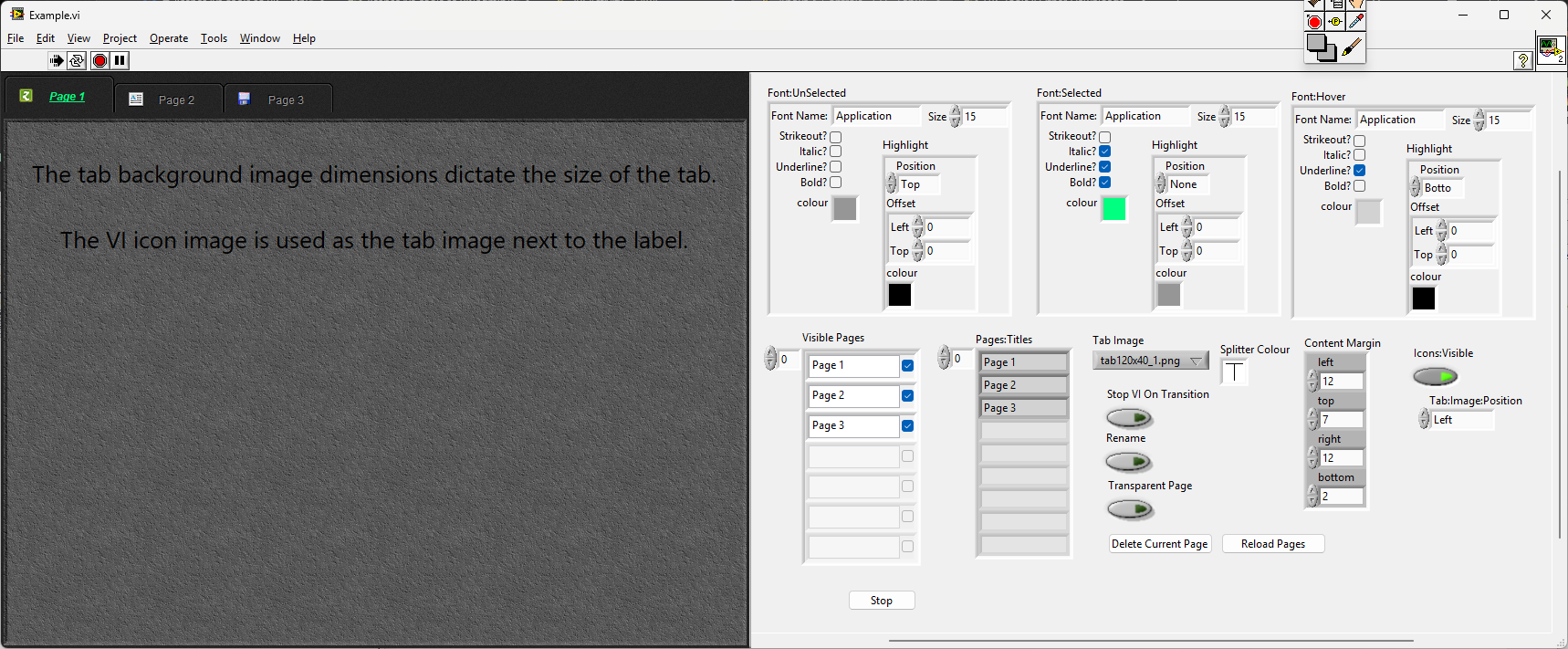

I have Toolbar and Tab Pages XControls that I use all the time, and there is a markup string XControl here. If you are a real glutton for punishment, you can play with XNodes too.

-


What's with the text centering?

I have LabVIEW 2012 (and 2009) running on a Windows 11 machine. I think I had to turn off App & browser control in Windows Security.

-

21 hours ago, ensegre said:

why? why? why?

-

Triggers fall into two categories: software and hardware. Which one you use depends on the trigger accuracy required, with hardware being the most accurate. For your use case I would highly recommend hardware triggering, and I think those devices support two types: digital IO and TSP-Link. Both are well documented in the manuals, and I would further recommend digital IO as it is the simplest to configure.

The way to do this is first to get it all working through the devices' front panels. Connect them up (including the digital IO), configure them using the menus, then run a test. That will tell you what commands (and in what order) you need to configure for a successful test, as SCPI commands map directly to menus for most manufacturers. The advantage here is that if your software fails for whatever reason, you can still run tests with a precision digit (a.k.a. finger). Once you are getting good results, it will just be a case of sending a single command to trigger the tests, and the devices will do the rest. After they have finished, you can read all the data out.
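The configure, trigger, read sequence above can be sketched in Python. This is a minimal illustration under stated assumptions, not instrument-specific code: `ScpiTransport` is a hypothetical interface with `write()` and `query()` (in practice a VISA or raw-socket session), the setup commands stand for whatever you recorded from the front-panel menus, and only the IEEE 488.2 common commands `*RST`, `*TRG` and `*OPC?` are assumed. Check the manuals for your devices' actual trigger and fetch commands; `FakeInstrument` exists only so the sequence can be exercised without hardware.

```python
from typing import Iterable, List, Protocol


class ScpiTransport(Protocol):
    """Hypothetical interface: anything that can send a SCPI command and read a reply."""

    def write(self, command: str) -> None: ...

    def query(self, command: str) -> str: ...


def run_triggered_test(
    transport: ScpiTransport,
    setup_commands: Iterable[str],
    fetch_query: str,
    trigger_command: str = "*TRG",
) -> List[float]:
    """Configure, fire one trigger, wait, then read all the data out."""
    transport.write("*RST")  # start from a known state
    for command in setup_commands:  # replay the front-panel configuration in order
        transport.write(command)
    transport.write(trigger_command)  # the single command that starts the test
    # Waits for pending operations; whether that covers the whole triggered
    # run is instrument-dependent, so check the manual.
    transport.query("*OPC?")
    raw = transport.query(fetch_query)  # assumed to be comma-separated readings
    return [float(value) for value in raw.split(",") if value.strip()]


class FakeInstrument:
    """Stand-in that records commands so the sequence can be checked offline."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def write(self, command: str) -> None:
        self.log.append(command)

    def query(self, command: str) -> str:
        self.log.append(command)
        return "1" if command == "*OPC?" else "1.5,2.5,3.5"
```

Keeping the command list as data means the sequence you proved on the front panel is replayed unchanged; swapping the fake for a real session is the only change needed.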

-

1 hour ago, hooovahh said:

I'm unsure of your tone and intent with text,

The intent was to tell you the software was free (as in BSD licence) and that, if you had downloaded it, you would have found it contains a zlib binary that isn't wrapped.

-

23 minutes ago, hooovahh said:

Do you guys also disagree philosophically about sharing your work online? That link doesn't appear to be to a download but a login page, it isn't clear to me if the code is free (as in beer). I assume it isn't free (as in speech). In this situation I think Rolf's implementation meets my needs. I initially thought I needed more low level control, but I really don't.

I've never understood the "free (as in beer)" or "free (as in speech)" internet vocabulary. Beer costs money and speech has a cost. It's BSD-3 and cost me time and effort, so it definitely wasn't "free".

Rolf's works on other platforms, so you should definitely use that. But if you want to play around with functions that aren't exported in Rolf's, there is a zlib distribution with a vanilla zlib binary to play with while you wait for a new OpenG release.

-

On 1/13/2026 at 7:09 PM, hooovahh said:

Thanks, but for the OpenG lvzlib I only see lvzlib_compress used for the Deflate function. Rolf, I might be interested in these functions being exposed if that isn't too much to ask.

Edit: I need to test more. My space improvements with lower level control might have been a bug. Need to unit test.

Yes. Rolf likes to wrap DLLs in his own DLL (a philosophy we disagree on). I use the vanilla zlib and minizip in the Zlib Library for LabVIEW, which has all the functions exposed.

-

29 minutes ago, hooovahh said:

Just each unique frame ID would get its own Deflate, with a single block in it, instead of the Deflate containing multiple blocks for multiple frames. Is that level of control something zlib will expose? I also noticed limitations like it deciding to use the fixed or dynamic table on its own. For testing I was hoping I could pick what to do.

With zlib you just call deflateInit, then call deflate over and over, feeding in chunks, and then call deflateEnd when you are finished. The size of the chunks you feed in is pretty much up to you. There is also a compress function (and its counterpart, uncompress) that does it all in one shot, which you could feed each frame to.
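For experimenting before touching the DLL, Python's standard `zlib` module is a thin layer over the same library, so the two approaches can be compared in a few lines. This is a sketch: `compressobj()` plus `flush()` stand in for deflateInit/deflate/deflateEnd, and `zlib.compress()` for the one-shot compress; the function names are made up for illustration.

```python
import zlib
from typing import Iterable, List


def deflate_stream(chunks: Iterable[bytes], level: int = 6) -> bytes:
    """One stream for everything: roughly deflateInit, deflate per chunk, then finish."""
    compressor = zlib.compressobj(level)  # CPython calls deflateInit2 here
    out = [compressor.compress(chunk) for chunk in chunks]  # chunks can be any size
    out.append(compressor.flush())  # Z_FINISH: emit what is left and end the stream
    return b"".join(out)


def deflate_per_frame(frames: Iterable[bytes], level: int = 6) -> List[bytes]:
    """One independent stream per frame, like calling one-shot compress() each time."""
    return [zlib.compress(frame, level) for frame in frames]
```

Each per-frame stream decompresses on its own, which is what one Deflate per frame ID buys you; the cost is a header and checksum per frame and no back-references across frames.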

If by fixed/dynamic you are referring to the Huffman table, then there are certain "strategies" you can pass to deflateInit2 (Z_DEFAULT_STRATEGY, Z_FILTERED, Z_HUFFMAN_ONLY, Z_RLE, Z_FIXED). Z_FIXED uses a predefined Huffman code table.
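A small Python sketch for comparing the strategies on your own data. The strategy is the last argument of deflateInit2, and Python's `zlib.compressobj()` exposes it as the `strategy` keyword; the helper name and the choice of level 9 are assumptions for the experiment.

```python
import zlib
from typing import Dict

# zlib's strategy constants, as exposed by Python's zlib module
STRATEGIES = {
    "Z_DEFAULT_STRATEGY": zlib.Z_DEFAULT_STRATEGY,
    "Z_FILTERED": zlib.Z_FILTERED,
    "Z_HUFFMAN_ONLY": zlib.Z_HUFFMAN_ONLY,
    "Z_RLE": zlib.Z_RLE,
    "Z_FIXED": zlib.Z_FIXED,
}


def compressed_sizes(data: bytes, level: int = 9) -> Dict[str, int]:
    """Deflate the same data with each strategy and report the compressed size."""
    sizes = {}
    for name, strategy in STRATEGIES.items():
        compressor = zlib.compressobj(level=level, strategy=strategy)
        sizes[name] = len(compressor.compress(data) + compressor.flush())
    return sizes
```

As far as I know, there is no strategy that forces dynamic tables: with anything other than Z_FIXED, zlib picks stored, fixed or dynamic coding per block according to which comes out smallest.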

-


55 minutes ago, ensegre said:

Uhm.

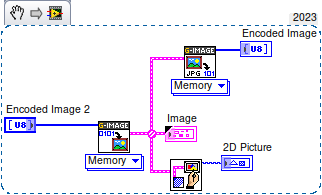

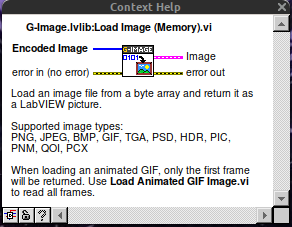

This is G-image. Am I missing something?

OP is using LV2019.

Nice tool, though. Shame they don't ship the C source for the DLL, but they do have it on their GitHub repository.

-

19 minutes ago, Rolf Kalbermatter said:

You seem to have done all the pre-research already. Are you really not wanting to volunteer? 😁

Nope. It needs someone better than I.

-

28 minutes ago, Rolf Kalbermatter said:

You make it sound trivial when you list it like that. 😁

While we are waiting for Hooovahh to give us a Huffman decoder

...

...

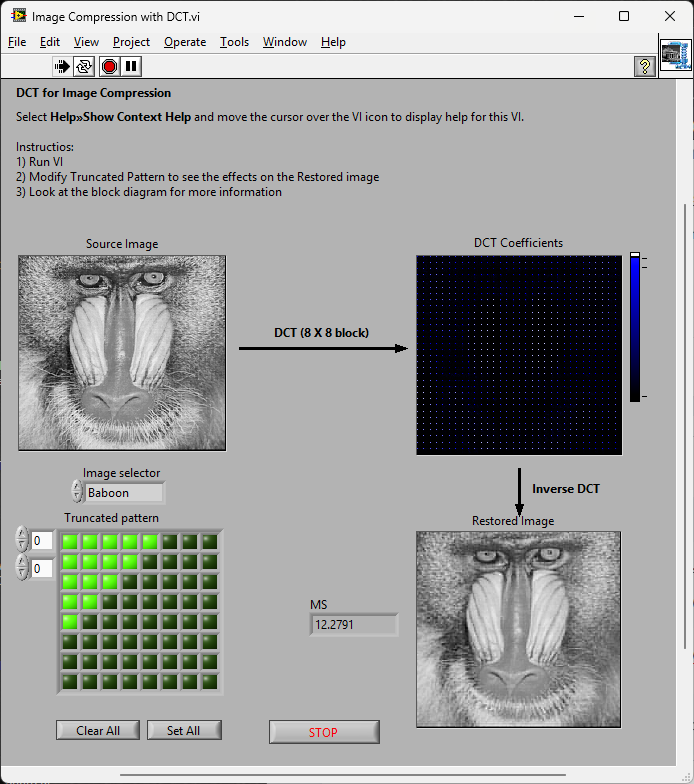

most of the rest seem to be here: Cosine Transform (DCT), sample quantization, and Huffman coding and here: LabVIEW Colour Lab

-

On 1/8/2026 at 3:17 PM, ensegre said:

You could also check https://github.com/ISISSynchGroup/mjpeg-reader which provides a .Net solution (not tried).

So, who volunteers for something working on Linux?

There is an example shipped with LabVIEW called "Image Compression with DCT". If one added the colour-space conversion and quantization, changed the coefficient ordering for the entropy encoding, and added the Huffman/RLE coding, you'd have a JPG [En/De]coder.

That'd work on all platforms.

Not volunteering; just saying.
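A rough Python sketch of the per-block stages listed above (colour-space conversion, DCT, quantization, zig-zag reordering and the run-length step that feeds the Huffman coder), for a single 8x8 block. It assumes the JFIF YCbCr equations and the example luminance quantization table from Annex K of the JPEG standard, and it stops before the Huffman stage. It is not the shipped LabVIEW example and not a complete encoder; the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Block = List[List[float]]

# Example luminance quantization table from Annex K of the JPEG standard.
LUMA_Q = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Colour-space conversion using the JFIF equations."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = -0.168736 * r - 0.331264 * g + 0.5 * b + 128
    cr = 0.5 * r - 0.418688 * g - 0.081312 * b + 128
    return y, cb, cr


def dct_8x8(block: Block) -> Block:
    """Naive 2-D DCT-II of one 8x8 block, after the JPEG level shift of -128."""
    def scale(k: int) -> float:
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            total = 0.0
            for x in range(8):
                for y in range(8):
                    total += ((block[x][y] - 128)
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[u][v] = 0.25 * scale(u) * scale(v) * total
    return out


def quantize(coeffs: Block, table: List[List[int]]) -> List[List[int]]:
    """Divide each coefficient by its table entry and round to the nearest integer."""
    return [[round(coeffs[u][v] / table[u][v]) for v in range(8)] for u in range(8)]


# Zig-zag scan order: walk the anti-diagonals, alternating direction on each one.
ZIGZAG = sorted(
    ((i, j) for i in range(8) for j in range(8)),
    key=lambda p: (p[0] + p[1], p[0] if (p[0] + p[1]) % 2 else -p[0]),
)


def zigzag(block: List[List[int]]) -> List[int]:
    return [block[i][j] for i, j in ZIGZAG]


def run_length_ac(coeffs: Sequence[int]) -> List[Tuple[int, int]]:
    """Simplified (zero_run, value) pairs for the AC terms, ending with (0, 0) as EOB.

    Real JPEG splits runs longer than 15 with ZRL symbols, omits EOB when the
    last coefficient is non-zero, and then Huffman-codes the pairs.
    """
    pairs: List[Tuple[int, int]] = []
    run = 0
    for value in coeffs[1:]:  # coeffs[0] is the DC term, which JPEG codes separately
        if value == 0:
            run += 1
        else:
            pairs.append((run, value))
            run = 0
    pairs.append((0, 0))
    return pairs
```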

-


2 hours ago, Harris Hu said:

I received some image data from a web API in Base64 format. Is there a way to load it into LabVIEW without generating a local file?

There are many images to display, and converting them to local files would be cumbersome.

base64.txt is a simple example of the returned data.

image/jpeg

LabVIEW can only draw PNGs from a binary string, using the PNG Data to LV Image VI (you'd need to Base64-decode the string first).
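A minimal Python sketch of the decode step, plus a check of the magic bytes so you know whether the result is a PNG (usable with PNG Data to LV Image) or, as the `image/jpeg` in the question suggests, a JPEG that would need another route. Handling of a `data:...;base64,` prefix is an assumption about how the web API formats its payload, and the function names are illustrative.

```python
import base64

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file
JPEG_SOI = b"\xff\xd8\xff"  # JPEG start-of-image marker plus the next marker's 0xFF


def decode_image_payload(payload: str) -> bytes:
    """Base64-decode an image payload, tolerating an optional data-URI prefix."""
    if payload.startswith("data:") and "," in payload:
        payload = payload.split(",", 1)[1]  # drop e.g. "data:image/jpeg;base64,"
    return base64.b64decode(payload)  # non-alphabet characters such as newlines are discarded


def image_kind(data: bytes) -> str:
    """Identify the image format from its leading magic bytes."""
    if data.startswith(PNG_SIGNATURE):
        return "png"
    if data.startswith(JPEG_SOI):
        return "jpeg"
    return "unknown"
```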

I think there are some hacky .NET solutions kicking around that should be able to do JPG if you are using Windows.

-

15 hours ago, Rolf Kalbermatter said:

What I could not wrap my head around was your claim that LabVIEW never would crash.

It just didn't. Like I said, I only got out-of-memory errors when I was trying to load large amounts of data. I suppose you could consider that a crash, but there was never any instance of LabVIEW just disappearing like it does nowadays. I only saw the "insane object" two or three times in my whole Quality Engineering career, and LabVIEW certainly didn't take down the Windows OS like some of the C programs regularly did.

But I can understand you having different experiences. I've come to the conclusion, over the years, that my unorthodox workflows and refusal to be on the bleeding edge of technology shield me from a lot of the issues people raise.

-

2 hours ago, Rolf Kalbermatter said:

From a certain system size it is getting very difficult to maintain and extend it for any normal human, even the original developer after a short period.

Gotta disagree here (surprise!)

Take a look at the modern 20k-VI monstrosities people are trying to maintain now, so as to be in with the cool cats of POOP. I had a VI for every system device type, and if they bought another DVM, I'd modify that exe to cater for it. It was perfect modularisation at the device level, and modifying the device made no difference to any of the other exes. Now THAT was encapsulation, and the whole test system was about 100 VIs.

They are called VIs because the name stood for "Virtual Instruments", and that's exactly what they were; I would assemble a virtual test bench from them.

2 hours ago, Rolf Kalbermatter said:

When I read this I was wondering what might cause the clear misalignment of experience here with my memory.

1) It was ironically meant and you forgot the smiley

2) A case of rosy retrospection

3) Or are we living in different universes with different physical laws for computers

Definitely 3).

LabVIEW was the first programming language I ever learned, when I was a quality engineer, so as to automate environmental and specification testing. While I (Quality Engineering) was building up our test capabilities, we would use the Design Engineering test harnesses to validate the specifications. I was tasked with replacing the Design Engineering white-box tests with our own black-box ones (the philosophy was to use tools dissimilar to the Design Engineers' and to validate code paths rather than functions, which their white-box testing didn't do). Ours were written in LabVIEW and theirs were written in C. I can tell you now that their test harnesses had more faults than the Pacific Ocean. I spent 80% of my time trying to get their software to work and another 10% getting it to work reliably over weekends. The last 10% was spent arguing with Engineering when I didn't get the same results as their specification.

That all changed when moving to LabVIEW. It was stable, reliable and predictable. I could knock up a prototype in a couple of hours on Friday and come back after the weekend and look at the results. By first break I could wander down to the design team and tell them it wasn't going on the production line. That prototype would then be refined, improved and added to the test suite. I forget the actual version I started with, but it was on about 30 floppy disks (maybe 2.3 or around there).

If you have seen desktop gadgets in Windows 7, 10 or 11, then imagine those, but as VIs. That was my desktop in the 1990s: DVM, power supply and graphing desktop gadgets that ran continuously, and I'd launch "tests" to sequence the device configurations and log the data.

I will maintain my view that the software industry has not improved in decades and that any and all perceived improvements are parasitic on hardware improvements. When I see what people were able to do in software with 1960s and 80s hardware, I feel humbled. When I see what they are able to do with software in the 2020s, I feel despair.

2 hours ago, Rolf Kalbermatter said:

One BBF (Big Beautiful F*cking) Global Namespace

I had a global called the "BFG" (Big F#*king Global). It was great. It was when I was going through my "Data Pool" philosophy period.

-

16 hours ago, hooovahh said:

A11A11111, or any such alpha-numeric serial from that era, worked. For a while at the company I was working at, we would enter A11A11111 as a key, then not activate, then go through the process of activating offline by sending NI the PC's unique 20 (25?) digit code. This would then activate like it should, but with the added benefit of not putting the serial you activated with on the splash screen. We would go to a conference or user group to present, and if we launched LabVIEW, it would pop up with the key we used to activate all the software we had access to. Since then there is an INI key, I think, that hides it, but here is an idea exchange I saw on it. LabVIEW 5 EXEs also ran without needing to install the runtime engine. LabVIEW 6 and 7 EXEs could run without installing the runtime engine if you put files in special locations. Here is a thread where the PDF that explains it is missing, but the important information remains.

The thing I loved about the original LabVIEW was that it was not namespaced or partitioned. You could run an executable and share variables without having to use things like memory maps. I used to have a toolbox of executables (DVM, power supplies, oscilloscopes, logging etc.) and each test system was just launching the appropriate executable[s] at the appropriate times. It was like OOP composition for an entire test system, but with executable modules.

Additionally, crashes were unheard of. In the 1990's I think I had 1 insane object in 18 months and didn't know what a GPF fault was until I started looking at other languages. We could run out of memory if we weren't careful though (remember the Bulldozer?).

Progress!

-


17 hours ago, Rolf Kalbermatter said:

What someone installs on his own computer is his own business

Tell that to Microsoft.

17 hours ago, Rolf Kalbermatter said:

they are also easily able to add some extra payload into the executable that does things you rather would not want done on your computer.

Again. Tell that to Microsoft.

I'm afraid the days of preaching from a higher moral ground on behalf of corporations are very much a historical artifact right now.

-

18 hours ago, Rolf Kalbermatter said:

Actually it can be, but it requires undocumented features. Using things like EDVR or Variants directly in the C code can immensely reduce the number of DLL wrappers you need to make. Yes, it makes the C code wrapper more complicated and is a serious effort to develop, but that is a one-time effort. The main concern is that since it is undocumented it may break in future LabVIEW versions for a number of reasons, including NI trying to sabotage your toolkit (which I have no reason to believe they would want to do, but it is a risk nevertheless).

I think, maybe, we are talking about different things.

Exposing the myriad of OpenSSL library interfaces using CLFNs is not the same thing as what you are describing. Multiple individual calls can be wrapped into a single wrapper function to be called by a CLFN (say, create a CTX, set it to client mode, add the certificate store, add the BIOs, then expose that as "InitClient" in a wrapper), but that is different to what you are describing, and I would make a different argument for it.
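Python's `ssl` module sits on top of OpenSSL, so it is a convenient way to sketch what such an "InitClient" wrapper collapses into one call. The name `init_client` and its parameters are hypothetical, and the correspondence to the underlying C calls noted in the comments is approximate.

```python
import ssl
from typing import Optional, Tuple


def init_client(
    server_hostname: str, cafile: Optional[str] = None
) -> Tuple[ssl.SSLObject, ssl.MemoryBIO, ssl.MemoryBIO]:
    """Hypothetical "InitClient": several OpenSSL set-up steps behind one call."""
    # Create the CTX in client mode (verification and hostname checks on by default).
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Add the certificate store: a specific CA file if given, else the system defaults.
    if cafile:
        context.load_verify_locations(cafile=cafile)
    else:
        context.load_default_certs()
    # Add the BIOs: memory buffers the caller pumps to and from its own socket.
    incoming = ssl.MemoryBIO()
    outgoing = ssl.MemoryBIO()
    tls = context.wrap_bio(incoming, outgoing, server_hostname=server_hostname)
    return tls, incoming, outgoing
```

The caller then only deals with one handle and two buffers, which is the kind of reduced surface a single CLFN would see.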

I would, maybe, agree that a wrapper dynamic library would be useful for Linux, but on Windows it's not really warranted. The issue I found with Linux was that the LabVIEW CLFN could not reliably load local libraries in the application directory in preference to global ones, and global/local CTX instances were often sticky and confused. A C wrapper should be able to overcome that, but I'm not relisting all the OpenSSL function calls in a wrapper.

However, the biggest issue with the number of VIs overall isn't whether to wrap or not; it's build times and package creation times. It takes VIPM 2 hours to create an ECL package, and I had to hack the underlying VIPM oglib library to do it that quickly. Once the package is built, however, it's not a problem. Installation with mass compile only takes a couple of minutes, and the impact on the users' build times is minimal.

-

2 hours ago, Rolf Kalbermatter said:

That's one more reason why I usually have a wrapper shared library that adapts the original shared library interface impedance to the LabVIEW Call Library Interface impedance

Yes. But not a good enough reason.

-

39 minutes ago, Rolf Kalbermatter said:

A 18k VI project! That's definitely a project having grown out into a mega pronto dinosaur monster.

You may say that, but ECL alone is about 1,400 VIs. If each DLL export is a VI, then realising the entire export table of some DLLs can create hundreds of VIs on its own.

However, I think the OP's project is probably OOP. Inheritance and composition exponentially balloon the number of VIs, especially if you stick to strict OOP principles.

-

19 hours ago, crossrulz said:

Yes, decoupling is a long process. I have been trying to do that with the Icon Editor. Not trying right now as other priorities with that project are more important. Do the process slowly and make deliberate efforts. You will eventually see the benefits.

Ooooh. What have you been doing with the icon editor?

-

58 minutes ago, Softball said:

Hi

NI has always tried to 'optimize' the compiler so the code runs faster.

In LabVIEW 2009 they introduced a version where the compiler would do extra work to try to inline whatever could be inlined.

2009 was a catastrophe, with the compiler running out of memory with my complex code, and NI only saved their reputation by introducing the hybrid compiler in 2010 SP1. Overall, smooth sailing thereafter, up to and including 2018 SP1.

NI changed something in 2015, but its effect could be ignored if this token was included in the LabVIEW.ini file: EnableLegacyCompilerFallback=TRUE.

In LabVIEW 2019 NI again decided to do something new. They ditched the hybrid compiler. It was too complex to maintain, they argued.

2019 reminded me somewhat of the 2009 version, except that the compiler now did not run out of memory, but editing code was so slow and sometimes LabVIEW simply crashed. NI improved things in the following versions, but they have yet to be snappy (~ useful) with my complex code.

Regards

I still use 2009: by far the best version. Fast, stable and quick to compile. 2011 was the worst and 2012 not much better. If they had implemented a benevolent JSON primitive instead of the strict one we got, I would have upgraded to 2013.

-


1 hour ago, Phillip Brooks said:

--insecure is a curl argument. You don't need to include that in the headers.

You can do the equivalent of --insecure by setting verify server to False when creating the HTTP session.

You should never do this. Anyone can sit between you and the server and decode all your traffic. The proper way is to add the certificate (public key) to a trusted list after manually verifying and checking it.

What's the point of using HTTPS if you are going to ignore the security?
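As a contrast to turning verification off, here is a sketch of the safer route using Python's standard `ssl` and `hashlib` modules: compare the certificate's SHA-256 fingerprint with the one you checked out-of-band, then add it to the context's trusted locations so normal verification and hostname checks stay on. The function names are hypothetical, and the server's certificate must still carry a name matching the host you connect to.

```python
import hashlib
import ssl


def sha256_fingerprint(der_cert: bytes) -> str:
    """Colon-separated, upper-case SHA-256 fingerprint of a DER-encoded certificate."""
    digest = hashlib.sha256(der_cert).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def pinned_context(pem_cert: str, expected_fingerprint: str) -> ssl.SSLContext:
    """Trust one manually checked certificate instead of disabling verification."""
    der = ssl.PEM_cert_to_DER_cert(pem_cert)
    if sha256_fingerprint(der) != expected_fingerprint.upper():
        raise ValueError("certificate fingerprint does not match the one you verified")
    context = ssl.create_default_context()  # verification and hostname checks stay on
    context.load_verify_locations(cadata=pem_cert)  # add the checked cert to the trusted list
    return context
```

Nothing here loosens verification; the only change from the default context is one extra, explicitly checked entry in the trust store.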

-

About X-Controls... :-D (in LabVIEW General)

Never used them or had a need for them.