jdunham

Posts posted by jdunham

-

So with my LabVIEW restarted and my application having the following settings in its INI file

QUOTE

server.tcp.enabled=True
server.tcp.port=3364 (or whatever you like)

It's all working.

Other settings might be advisable for slightly better security, but this was enough to make it work. Just like in the development environment, latching booleans won't accept a Value (Signaling) property, but I can fix that for the next build.

Thanks.

-

QUOTE (dblk22vball @ Dec 11 2008, 08:38 AM)

I started by copying the relevant options from LabVIEW.ini to my own application's ini.

QUOTE (Neville D @ Dec 11 2008, 09:34 AM)

Under your Project, right-click on the My Computer and enable VI Server from there. Then try the build. Also, just to trouble-shoot Firewall problems, try to access the exe on your desktop host itself and see if that works.

I didn't realize there were target-specific settings, so thanks (though it would be interesting to know whether this does anything more than alter the INI file).

I'm trying everything on localhost for now.

QUOTE (Dan DeFriese @ Dec 11 2008, 10:10 AM)

No I wasn't trying to use 3363.

QUOTE (Dan DeFriese @ Dec 11 2008, 11:21 AM)

I threw together a simple example (LV8.5):

I'm going to try this next, thanks a lot!

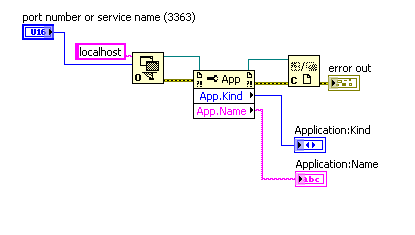

My own simple example is not working, though I got farther with some of these tips. If the built app is running, then calls to Open App Reference on that port start succeeding, but all of the App Properties like App:Name and App:Kind refer to the LabVIEW development system rather than the exe. I will try your example and see if the same thing happens.

QUOTE (Yair @ Dec 11 2008, 11:41 AM)

I will try some more stuff and then post what I have.

Thanks all for the assistance.

I'll post again if I can reproduce any of that.

Thanks again, especially to Dan :beer: for the example!

-

I thought this would be easy, but so far not. I'd like to test my built application by using VI server to cause a button to be pressed.

This web page implies that it should be no problem, as long as you open the Application Reference of the built executable, but it points out that VI server needs to be enabled during the build, which is eminently reasonable.

In that web page, it says

QUOTE

Also, this change still permits you to open application references to an EXE, and subsequent VIs referenced by name, through VI Server when it is enabled for the EXE.

with a tantalizing but unhelpful link on the last bit.

So how can I get my built exe to accept VI server connections? I'm using Vista (ick) and LV 8.6. No matter what I put in the INI file, I just get error 63 (server refused connection) when attempting to connect. I haven't even tried worrying about exported VIs, since I can't even connect to the app. I have checked the Windows Firewall, and poked around in the Application Builder options to no avail. Am I missing something?

-

QUOTE (Justin Goeres @ Nov 26 2008, 06:22 AM)

It probably is. I just don't want to implement it myself if there's a better way. However, posting/Googling/yelling/crying haven't yet produced a solution.

Why would it need to be recursive? The OS doesn't recurse down those paths. Just test all the paths in the environment variable and you're done.

If you're trying to run the EXE, you can always just run it and if it works, great, if not, you handle the error from System Exec and branch off into whatever you had planned to do if "which" had returned nothing. (I assume you already thought of this, but you never know).
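A minimal sketch of that non-recursive search, written in Python because LabVIEW diagrams can't be shown as text. The function name `find_on_path` and its parameters are made up for illustration; Python's standard library already offers `shutil.which` for real use, so this only shows how little logic is involved.

```python
import os


def find_on_path(name, path=None, extensions=("",)):
    """Return the first executable called `name` found in the PATH directories.

    Only the directories listed in PATH are tested; nothing is searched
    recursively. Returns None when there is no match.
    """
    if path is None:
        path = os.environ.get("PATH", "")
    for directory in path.split(os.pathsep):
        if not directory:
            continue  # skip empty PATH entries
        for ext in extensions:
            candidate = os.path.join(directory, name + ext)
            if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
                return candidate
    return None
```

On Windows you would probably pass something like `extensions=(".exe", ".bat", ".cmd")`, since the file name on disk carries an extension that the command name usually omits.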

-

QUOTE (dblk22vball @ Nov 25 2008, 11:19 AM)

Why don't you use a cluster of two 1-D arrays, one for each control?

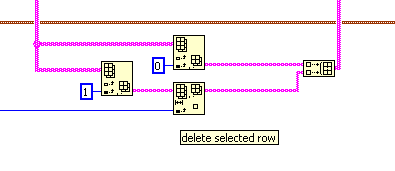

-

QUOTE (BrokenArrow @ Nov 25 2008, 09:48 AM)

Good idea, the trial license. I've also been thinking of picking up an old version on eBay. The 6.1 usually goes for cheap.

This is a great community here and I know someone would upsave for me, but I don't want to give people my busy work, but more importantly, I wanted to know a good solution going forward. I could conceivably have hundreds of these to convert.

Doesn't seem like eBay is necessary. Your local NI sales guy/tech support team wants to keep you happy. If you have a paid license, it seems like they would just give you a copy of the old LabVIEW if you explain the problem.

-

QUOTE (vultac @ Nov 25 2008, 04:19 AM)

okay.....thx...im a little lost tho....well i was able to set D0 and D1 to go high and low...but its the timing now thats giving me a headache...well ive attached a jpeg of my progress so far~ struggling with the wait(ms) vi :/!!Well the pic ive just attached basically shows me making 00000001(D0) go active high then making it go active low...thinking about how i could make D1 go active high when D0 is halfway through being active high....

I would work on the bitmasks first, so you can set any desired bit without setting any others (see earlier post).

Then I would make that into a subvi so that it's easy to reuse.

Only after that would I worry about the timing. How do you want to store the timing pattern? Are you going to make a pattern of ones and zeros at some regular interval, or are you going to have a list of durations and the desired state for each one? There is no single right way to describe your waveforms; just try to pick the easiest way that is still useful.
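To make the two storage options concrete, here is a rough Python sketch (text code standing in for a LabVIEW diagram). The function name, the 5 ms tick, and the example waveform are all invented for illustration; the point is only that a list of (duration, state) pairs can always be expanded into a regularly sampled pattern if you need one.

```python
def expand_to_samples(segments, tick_ms):
    """Convert (duration_ms, port_value) pairs into one port value per tick."""
    samples = []
    for duration_ms, value in segments:
        ticks, remainder = divmod(duration_ms, tick_ms)
        if remainder:
            raise ValueError("each duration must be a multiple of tick_ms")
        samples.extend([value] * ticks)
    return samples


# Hypothetical waveform: D0 goes high, D1 goes high halfway through,
# then D0 drops, then everything goes low.
segments = [(10, 0b01), (10, 0b11), (10, 0b10), (10, 0b00)]
samples = expand_to_samples(segments, tick_ms=5)
```

The duration list is shorter to write by hand; the sampled form is easier to play back from a loop with a fixed wait.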

-

QUOTE (vultac @ Nov 24 2008, 11:22 AM)

DAQmx can control one line at a time with different tasks, but it wouldn't surprise me if mccdaq can only read and write the 8-bit port in one shot. In that case you'll need to read up on bitmasks (http://en.wikipedia.org/wiki/Mask_(computing)).

The basic operation is to read the port (the byte), pick the mask which will change the state as desired, and operate on the byte value, and then write the result back to the port.

In LabVIEW you don't have to do this with an array of booleans. The Boolean operators work just fine on integers and work like they would in C, that is, they operate on each bit independently.
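In text form the mask operations look roughly like this. It is a Python sketch rather than LabVIEW, and the names `D0`, `D1`, `set_bits`, `clear_bits` and `toggle_bits` are just illustrative; in LabVIEW the same thing is done by wiring the integers into the OR, AND, NOT and XOR primitives.

```python
D0 = 0b00000001  # mask selecting bit 0 of the port
D1 = 0b00000010  # mask selecting bit 1 of the port


def set_bits(port_value, mask):
    """Force the masked bits high, leaving all other bits alone."""
    return port_value | mask


def clear_bits(port_value, mask):
    """Force the masked bits low, leaving all other bits alone."""
    return port_value & ~mask & 0xFF  # & 0xFF keeps the result to 8 bits


def toggle_bits(port_value, mask):
    """Invert the masked bits, leaving all other bits alone."""
    return port_value ^ mask
```

For example, `set_bits(current, D1)` raises D1 without disturbing whatever D0 is doing.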

To avoid race conditions, you need to protect the read-modify-write operation with a mutex. You can do that either by wrapping the change in just one subVI (you can perform the operation in multiple places, but it has to be wrapped in a common subVI) or else by using LabVIEW semaphores. If your whole application is a big state machine or a big sequence you may be able to guarantee that only one operation can be tried at a time, but relying on that is not recommended.
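Here is a sketch of the "one common subVI" idea in Python, with a lock playing the role of the blocking subVI call. The `Port` class is a stand-in I made up; a real version would call the vendor's driver where this one just stores a byte.

```python
import threading


class Port:
    """Stand-in for an 8-bit digital port (a real one would call the driver)."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def update(self, set_mask=0, clear_mask=0):
        """Read-modify-write the port as a single protected operation."""
        with self._lock:
            value = self._value                              # read
            value = (value | set_mask) & ~clear_mask & 0xFF  # modify
            self._value = value                              # write
            return value

    def read(self):
        with self._lock:
            return self._value
```

Every caller goes through `update`, so two loops changing different bits can never overwrite each other's work with a stale copy of the byte.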

-

QUOTE (Darren @ Nov 21 2008, 10:30 AM)

How are you specifying which VI you want to drop off disk? If it's with a File Dialog, you can just use Quick Drop to "Select a VI...". In fact, I have a shortcut ('sav') for Select a VI to make it even faster.

We hates file dialogs, we does. When you have several thousand VIs, many with similar names, it's very hard to find them using Windows Explorer or file dialog boxes. QD is neat, but much more often I want to find other VIs in my hierarchy which may not belong in a reuse palette, but I need to find them and sometimes I want a new copy.

So we have a tool which is kind of like quick drop but searches the open projects (BTW it would be awesome to have this feature as part of QD). It works great for opening VIs, but often it would be cool to have the VI it finds available for dropping on a diagram. Normally it's not too bad because you can open the VI and grab its icon, but if the VI is in run mode, you may have to go to another VI and change the tool, etc. Also, you can't grab the icon of a polymorphic VI and drag it onto a diagram. I want to drag items from the listbox and have the VIs drop onto my block diagram.

-

QUOTE (EJW @ Nov 21 2008, 06:43 AM)

Actually, i am replacing the third element with new data, same size. I keep the first two indexes and input a new third array to replace the original.

asbo has it right. You aren't telling the LV compiler any more than you were telling us, and we didn't understand either. You have to throw it a bone.

Put another way, you may know that the array will stay the same size, but the LabVIEW compiler has to generate code which will always work, no matter what you may have dropped into the local variable at some point.

Once you do the right thing and use replace subset, you don't need the inplace compiler hint anymore, because the Replace function always operates inplace.

-

QUOTE (vugie @ Nov 20 2008, 12:53 AM)

Here (http://forums.lavag.org/Diagram-shortcuts-t10208.html) is a simple tool I wrote some time ago, where I used copy-paste mechanism to drop something on BD.

That seems pretty interesting, though I don't want to copy pre-existing code, just a VI from disk. Maybe scripting could drop it in a new VI and your tool could grab it from there (seems like a Rube Goldberg approach). I was hoping someone who knows more about quickdrop (Darren?!?) could tell me how to do the very last bit of putting something on the cursor for dropping.

Also, I have SuperPrivateScriptingFeatureVisible=True but I am still not able to access all those methods. Is that stuff that was only in LV 7.1 and you have to save those nodes in 7.1 to get access in later versions, or is my setup still wrong?

-

Is there a way to drop a specific VI on a diagram a la quick-drop? I see there is a special invoke node App:User Interaction:Drop Control or Function, but it doesn't seem to work with VIs.

-

So if it's a camera, you would typically acquire your analog-to-digital value in a double (nested) FOR loop, with one loop for rows and one loop for columns. Wiring the voltage out will give you a 2-D array of pixel intensities. You would run that once for each frame you capture. Then you can use lots of other LabVIEW functions to process the 2-D image array. If you wire it to a 2-D intensity graph, you will see your image (you may need to transpose the array and/or reverse the axes).
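As a text-language sketch of that nested loop (Python here, since a LabVIEW diagram can't be pasted as text): `read_pixel` is a hypothetical callable standing in for whatever single-point analog read your DAQ driver provides, and the function names are mine.

```python
def acquire_frame(read_pixel, rows, cols):
    """Build a 2-D list of pixel intensities, one reading per (row, col)."""
    frame = []
    for r in range(rows):          # outer loop: rows
        row = []
        for c in range(cols):      # inner loop: columns
            row.append(read_pixel(r, c))
        frame.append(row)
    return frame


def transpose(frame):
    """Swap rows and columns, in case the image comes out sideways."""
    return [list(column) for column in zip(*frame)]
```

You would call `acquire_frame` once per captured frame and hand the result to whatever display or processing step comes next.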

Ok, so most people who want to acquire data from an array sensor like a camera will use frame grabbing hardware which does this all for you. Billions of dollars have been spent making this easier and many orders of magnitude faster than what you can do with a Measurement Computing DAQ board one pixel at a time.

So vultac, I think you need to tell us some more about what you are trying to do and why you might need to reinvent the wheel. If it's a school project, well that's cool, but you still need to give some more information so that an appropriate level of help can be provided. If it is a paid job or a project for fun, then giving some more information will still help you arrive at a reasonable solution at a much faster rate. I'm sorry if I'm totally misinterpreting the situation, but you haven't given us much to go on.

-

QUOTE (san26141 @ Nov 18 2008, 02:49 PM)

Nope. Class_A contains some instances of ClassB. Containing is a "... has a ..." relationship, while inheritance is an "... is a ... " relationship. Stick those in a sentence to see which relationship makes sense.

For example, a truck is a vehicle, so it inherits the properties of vehicles (has wheels, uses gas, moves on roads). A truck has a gas tank (or two), but that doesn't mean anything about shared properties. Methods you run on the gas tank (fill its storage area with gasoline) would not also work on a truck (ok, forget about gas tanker trucks because LV does not support multiple inheritance).
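The truck example can be written out in a text language to show the difference. This is Python, not LabVOOP, and the class and attribute names are invented for the illustration.

```python
class GasTank:
    def __init__(self, capacity_liters):
        self.capacity_liters = capacity_liters
        self.level_liters = 0.0

    def fill(self):
        """Fill the tank's storage area with gasoline."""
        self.level_liters = self.capacity_liters


class Vehicle:
    wheels = 4

    def drive(self):
        return "moving on roads"


class Truck(Vehicle):                  # "is a": Truck inherits from Vehicle
    def __init__(self):
        self.tank = GasTank(100.0)     # "has a": Truck contains a GasTank
```

`Truck().drive()` works because of inheritance, while filling up has to go through the contained object: `truck.tank.fill()`. There is no `truck.fill()`.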

QUOTE (san26141 @ Nov 18 2008, 02:49 PM)

Problem:

1) When I have to "INIT" Class_B from within Class_A:Init how does each instance of Class_B get its own unique parameters?

2) How does Class_B:Init know to initialize Class_B.lvclass different from Class_B.lvclass 2 different from Class_B.lvclass 3? For example each instance of Class_B is initialized with a different COM port.

3) Can the input lines (blue arrows below) be used to tell the method which parameters to initialize the calling instance?

LabVIEW OOP is not like many other by-reference OOP implementations. Even if you don't call your init function, the existence of your wires on the diagram allocates memory and instantiates a copy of every class. You have 3 classB wires, so you already have 3 different objects. However, they don't contain anything until you fill their private data with the values. Somewhere on your diagram you have to get the COM port values from somewhere (front panel and/or a config file) and pass them into your classB init routines. Actually they don't have to be init routines, they can just be an accessor method to update the COMport element of ClassB.

Usually a LabVOOP Initialize method does not take a copy of the class in, and therefore is an initializer because the new wire out is the first time that object's wire and the data it's holding come into existence. The wires pointed to by your blue arrows could be deleted, since they are probably empty anyway. If they are not empty, you probably are not initializing. Similarly, since this is the diagram of ClassA:Init, you don't need to input class A (typically you would put a constant copy of it on your diagram which is all empty, and then bundle in the values from calls to ClassB:init).
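A loose text analogy of that structure, in Python with frozen dataclasses standing in for by-value LabVOOP objects. All names here (`class_b_init`, `class_b_set_port`, `class_a_init`, `com_port`, `devices`) are made up; the only point is that each ClassB gets its own COM port because the caller passes one in.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class ClassB:
    com_port: str = ""   # empty until someone fills in the private data


@dataclass(frozen=True)
class ClassA:
    devices: Tuple[ClassB, ...] = ()


def class_b_init(com_port):
    """Initializer: no object comes in, a filled-in object goes out."""
    return ClassB(com_port=com_port)


def class_b_set_port(obj, com_port):
    """Accessor alternative: object in, updated copy out."""
    return replace(obj, com_port=com_port)


def class_a_init(com_ports):
    """Build ClassA by bundling in the results of the ClassB initializers."""
    return ClassA(devices=tuple(class_b_init(port) for port in com_ports))
```

So `class_a_init(["COM1", "COM2", "COM3"])` yields one ClassA holding three distinct ClassB values, and the port names would come from the front panel or a config file.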

hope that helps.

-

This is just a race condition, but I wanted to give a more concrete example. Let's say you have some kind of cluster or array which is modified in different threads of your application. You may have to unbundle or index it, replace the element, rebundle it, and wire it back into the global (or notifier or lv2 global or whatever). Between the unbundle and the rebundle, you have to make sure that no other thread can start the same process of unbundling, or else one of the copies will be stale and be victim of a race condition.

The normal way to fix this is to wrap the operation in a subvi so you can take advantage of the blocking behavior of subvi calls. However, this means the subvi has to know about every type of data you might want to replace in this global thing, which may lead to running out of terminal pane connectors, and generally ugly code. The other alternative is protecting the updates with a semaphore.
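A sketch of the semaphore alternative in Python (standing in for LabVIEW semaphores). `GuardedValue` and `modify` are hypothetical names. Passing the change in as a function means the guarded wrapper does not need to know what kind of element is being replaced.

```python
import threading


class GuardedValue:
    """Holds shared data; all updates happen while holding a semaphore."""

    def __init__(self, initial):
        self._value = initial
        self._sem = threading.Semaphore(1)   # binary semaphore

    def modify(self, func):
        """Unbundle, change, and rebundle as one uninterruptible step."""
        with self._sem:
            self._value = func(self._value)
            return self._value

    def get(self):
        with self._sem:
            return self._value
```

Any thread that wants to change one field calls `modify` with a small function that returns the updated copy, so no one can start a second unbundle in the middle of the first.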

-

QUOTE (bmoyer @ Nov 14 2008, 01:38 PM)

You may also be interested in this post (http://forums.lavag.org/Finding-Suspend-When-Called-t11898.html&p=51578). You may not use that kind of suspend very often, but if you do and forget about it, there's no other way to find it without running the code.

-

QUOTE (vultac @ Nov 11 2008, 05:19 PM)

actually im trying to acquire a signal from the analogue inputs and convert this signal(analogue form) into digital form....Im in the UK now..it would be expensive to call to US from here! ><!

Well they have email too (http://www.measurementcomputing.com/tech.html), and there's always skype.

If your card is working, then the signal is already digital once you do any kind of acquisition. The vendor should have some example programs you can test out. If the examples are not working, then your best bet is to contact MC. I don't mean to drive you away, but honestly you are going to get up and running faster with them.

There could also be some confusion about terminology. Your computer can only measure an analog signal by digitizing it, but it will still have an "analog" appearance on your computer screen. You'll still see a sine wave if you have a sine input (though if you zoom in hard enough, you will see that it is digitized). If you are trying to express your input data in some other digital way, you may need to write some more software. You can also post your work so far and some screen shots and people could give you more help. But you should really be working with your vendor's tech support if you don't know how to use the hardware at all.

-

QUOTE (vultac @ Nov 11 2008, 04:29 PM)

thanks mate. but do u have any idea how could i convert my analogue signal into digital form?

I have to point out the hardware vendors pay their tech support staff to help you get through the initial start-up with their equipment. It's noble of Dan to be helping you out, but you really should call your vendor to get basic help like this. Even if you got the card second-hand, you can frequently get them to help you out because they want you to buy your next card from them too.

When you get to something tricky where the vendor support technicians may not know the finer points of advanced use or application-specific concepts, then you might find an experienced user here who is able to help.

Good luck.

-

QUOTE (shoneill @ Nov 11 2008, 02:12 PM)

I actually used to wonder about the warning from a VISA read to the tune of "The amount of Bytes read was equal to the amount requested, there may be more to read". I used to think Duh!, stupid computer but now (After all these years doing RS-232 interfacing) I actually understand why that warning is there. I've always had to deal with Instruments returning either fixed-length replies or short replies, so I never ran into the real-world case that a reply needed more than one VISA read to accomplish.

Well I think it's a lame way for VISA to give you that information. It would be better to have a boolean output for "TermChar found?", but that's what you get with an API developed by an industry committee. I guess it's fine if you use RS-232 just like GPIB (which is the intention behind VISA) but if you use RS-232 for other devices, you frequently don't get termination characters, and you have to jump through some hoops to get that working with VISA (NI: If you don't believe me just look at the forum topics on this).

-

QUOTE (shoneill @ Nov 11 2008, 05:42 AM)

It would also be possible to check the received string for

1. Size (if it's 999 Bytes long, chances are there's more data to be read)

2. If the last character in the received string is the termination character

VISA will return different error/warning codes in the error cluster for the different situations you are discussing.
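For comparison, the two string checks from the quote look like this in a text language. This is a plain-Python sketch that never touches VISA; the function name, the labels it returns, and the default newline terminator are all my assumptions. In practice the status VISA puts in the error cluster tells you the same thing without inspecting the string.

```python
def classify_reply(reply, requested_count, term_char="\n"):
    """Guess why a serial read returned, using only the received string."""
    if reply.endswith(term_char):
        return "complete"                      # check 2: terminator seen
    if len(reply) >= requested_count:
        return "more data likely"              # check 1: buffer was filled
    return "short read without terminator"     # e.g. the read timed out
```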

-

QUOTE (horatius @ Nov 4 2008, 05:19 PM)

I have loops with long waiting periods. If I press a button the VI should stop the current waiting cycle and continue without passing of whole waiting time. I think it's possible with Event Structure but there must be an easier way to do this.

We use queues and notifiers for this (they act the same way). You can have the notifier wait as long as you want (or forever), and then you can close or destroy it from another loop and it will stop waiting. We call this "scuttling" and we use it everywhere. You will want to filter error 1 and error 1122.
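The same idea (a long wait that another loop can cut short) can be sketched in a text language. This Python version uses `threading.Event` as a rough analogue of waiting on a notifier; it is not how LabVIEW implements it, there is nothing to destroy and no error codes to filter, and the function name is made up.

```python
import threading


def wait_or_scuttle(stop_event, timeout_s):
    """Wait up to timeout_s seconds.

    Returns True if another thread set stop_event and cut the wait short,
    False if the full timeout elapsed.
    """
    return stop_event.wait(timeout_s)
```

The waiting loop calls `wait_or_scuttle(stop, 60.0)` instead of a plain sleep, and the button handler calls `stop.set()` to release it immediately.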

-

QUOTE (Variant @ Nov 4 2008, 02:00 PM)

http://www.regular-expressions.info/ is a popular site, but there are many others.

Does emulating God suffice to pass the Turing Test?

QUOTE (Jim Kring @ Dec 8 2008, 07:11 PM)

I would say no, since you could say that schizophrenia is a type of damage or disability affecting one's humanity. So if one's humanity is a bit broken, then it may be easier to emulate (whether posing as God or any other delusion). Succeeding at this seems like an easier task than emulating real fully-functional humanity.

fun stuff!