jdunham

-

Posts

625 -

Joined

-

Last visited

-

Days Won

6


Posts posted by jdunham

-

-

Your VI is fine. I don't think there is any simpler way. People don't do this very much because many functions that handle a single character also accept it as a U8 integer. For example, the IsDigit? function and its siblings can handle strings or U8s.

It seems like it would be better to focus on your real problem. I use LabVIEW serial all the time and I have never had that issue. Is your box expecting serial flow control? If you dig into all of the HyperTerminal settings and make sure you set the exact same settings in NI-VISA (using attribute nodes on your VISA refnum), then they will perform the exact same way. AFAIK VISA is just wrapping the same Microsoft OS serial port functions as HyperTerminal.

-

QUOTE (jcarmody @ Jan 29 2009, 03:27 AM)

I don't think that this will work if the Pause control is handled in an Event Structure because setting the value of a control doesn't trigger an event. I discovered this yesterday and ended up putting a Value (Signaling) property node in the Timeout case. Jim

You can set the Value (Signaling) property remotely. You have to get the control reference from the VI reference and the control name, but once you have the reference to the specific control, you can call any of the control's properties or methods.

-

QUOTE (NeilA @ Jan 28 2009, 09:39 AM)

There has been a decision by people who don't necessarily know what they are talking about...

You can always try the Dear Abby ploy: Go back and tell them, "Well I wasn't sure the best way to proceed, so I asked the experts on some of the LabVIEW bulletin boards, and the unanimous reply was that my company is flushing money down the toilet by not using TestStand for this application. Many people find it is working great for this application and several people said that rolling our own would be foolhardy because it would take so much longer to write and then still need to be debugged."

Sometimes people get religious and this won't work, but other times the advice finds its way through the cobwebbed crania when it originates from outside the group.

Good luck!

-

-

QUOTE (Callahan @ Jan 26 2009, 09:38 AM)

...The image is clickable...

So is the pict ring. But I see the drop-down is not as attractive as the VIPM version. Like Yair mentioned, they also have to do some trickery to only drop down a subset of the images in the control. Pretty slick. But you only asked about a clickable picture, and the pict ring should be enough. Are you using the operate tool to click it?

-

QUOTE (hfettig @ Jan 26 2009, 08:41 AM)

I have 240 records (cluster of data), which I want to randomly access and modify. The accessing part is easy but every time I try to change one of the records a new record is added to the end of the file. I do set the file position before calling the write function, but I just noticed in the help for the write function that it says that the file pointer is always set to the end of the file before writing.

Is there a way to avoid that or will I have to go with my own custom binary format?

I think the datalog format is just a cluster of two elements: a DBL timestamp (old-fashioned) and a cluster of all the front panel data in tab order. I don't know if there's also a header at the beginning of the file. It shouldn't be too hard to read all the records, modify the one you want, and write everything back to a new file.

That being said, the datalog format is a terrible format, and I really wish they would revamp that system, since the datalog feature is really great. You might be better off with your own format or TDMS format.
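If you do go with your own format, fixed-size records make the in-place update easy. Here is a minimal Python sketch of the idea, not the datalog layout (which I have not verified). The record layout (one timestamp plus three readings, all float64) and the function names are made up for illustration.

```python
import struct

# Hypothetical record layout: timestamp + three readings, little-endian float64.
RECORD = struct.Struct("<4d")


def write_records(path, records):
    """Create the file from an iterable of 4-tuples of floats."""
    with open(path, "wb") as f:
        for rec in records:
            f.write(RECORD.pack(*rec))


def read_record(path, index):
    """Random-access read of one record by its zero-based index."""
    with open(path, "rb") as f:
        f.seek(index * RECORD.size)
        return RECORD.unpack(f.read(RECORD.size))


def update_record(path, index, rec):
    """Overwrite one record in place; mode "r+b" does not truncate or append."""
    with open(path, "r+b") as f:
        f.seek(index * RECORD.size)
        f.write(RECORD.pack(*rec))
```

Because every record is the same size, the byte offset is just index times record size, so nothing gets tacked onto the end of the file when you modify record 100 of 240.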

-

It's most likely a "Pict Ring". It's on the same palette as the regular ring and enum (but not on the System palette).

-

QUOTE (jlokanis @ Jan 21 2009, 01:21 PM)

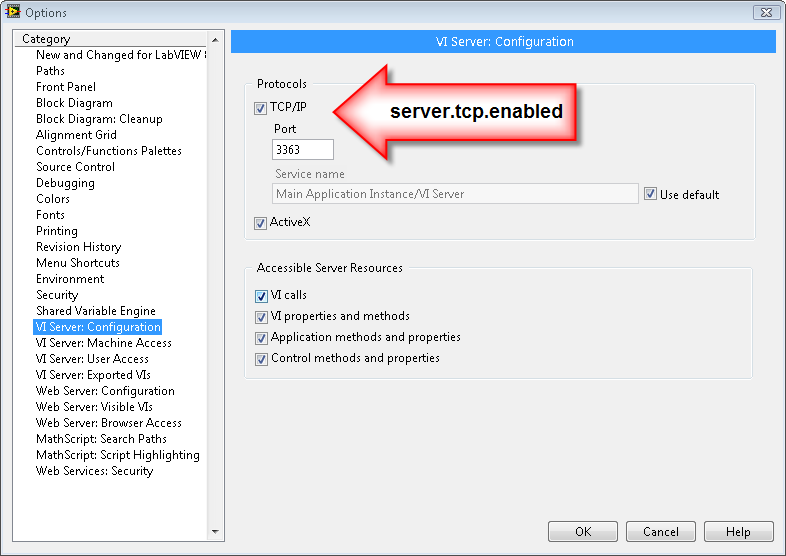

Can't telnet in. So, the server does not seem to be running.

server.tcp.access="+127.0.0.1"

server.tcp.acl="290000000A000000010000001D00000003000000010000002A10000000030000000000010000000000"

server.tcp.enabled=True

Well, first telnet in from a command prompt on the same computer. If the server is listening, it will connect (telnet can't get you past the connection, but you should get a healthy black screen, and it will kick you out when you start typing).

The most likely thing is Options -> VI Server: Machine Access. The default is localhost only. Try adding your other machine's IP address to the list with access allowed. You can also allow "*" (everyone) but only if you are secure from inbound internet connections (or you don't care about security).

If that doesn't work, verify that Windows firewall is open on port 3363, and then look at the other LV VI Server settings.

-

It should work fine, even over the network. First I would telnet to that machine on port 3363 (unless you changed VI Server's port) to make sure it is accepting connections. If that works, then the error out from each VI server call should tell you what's not working.
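If telnet isn't installed, the same connectivity check can be scripted. This is a rough Python sketch; the function name is made up, and 3363 is just the default VI Server port mentioned above. It only tells you whether something accepts a TCP connection, not whether it speaks VI Server.

```python
import socket


def port_is_open(host, port=3363, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out: nothing usable is listening.
        return False
```

Run it first against 127.0.0.1 on the LabVIEW machine, then from the remote machine. If the local check passes and the remote one fails, look at the Machine Access list and the firewall.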

-

QUOTE (mross @ Jan 21 2009, 09:50 AM)

Our differences on preferred resume style demonstrate the near impossibility to please every resume reviewer.

I think your point is well taken for non-technical resumes, and people with limited relevant experience to the job description. On the other hand, I have read many resumes (though only for engineering positions), and I can't recall an interesting resume that was NOT two pages, or more. I can envision a person with five to ten years work experience that can fill one and a half or two easy-to-read pages with relevant information; relevant for me includes a meaningful objective or summary section, education, and so on. I tend to view graduate work and some undergraduate project work as work experience. Obviously, I am pretty forgiving about resume length.

Mike is right, the length is not a deal-killer. But relating back to the original resume, zmarcoz, your "courses" include math, EE theory, electromagnetics, circuit design. Holy smoke, you've got two degrees in EE. Of course you took those classes, so why are they cluttering up your resume and obscuring all the interesting stuff? I think you should take out the "Courses" section, and then under each Education item, put the 2 or 3 most interesting/unusual classes from your list.

I would consider the same thing with the skills, though some people like to see them listed separately. For me it's more interesting to see the context in which you used them, like when you say you used LabVIEW in your research project. The middlemen are just going to grep your resume for the right words with automated software, so they couldn't care less where you list them. No one cares if you know Microsoft Office. If you can't learn it in 30 minutes, then there's a serious problem. If you're great at Excel Macros and VB for Applications scripting, you should mention that.

On to the Experience section: Prepared Course Materials, Supervised students, Evaluated and graded... - again all those are obvious, since you were a TA and all, and at the very least could be removed or combined into one item. I think each experience of yours could be condensed to 3 items, maybe 4.

With all that, you should be able to get your resume down to one page and still tell Mike an interesting enough story about yourself.

-

I think you should get your resume onto one page. Others may disagree, but I think one only needs a multi-page resume with 10-20 years of experience AND several different jobs or skills. If someone could fit their professional 'snapshot' onto one page, but they choose not to, then I get several negative reactions:

- they don't care about saving me time/effort

- they can't tell the difference between important/useful stuff and the rest, so they've stuffed it all in.

- they can't present information in such a way that I can absorb it in a hurry (Remember humans only absorb 7±2 pieces of information at a time. The resume is your chance to get me to remember those about you)

It's not that I get insulted by those things, but I will suspect that those same things will happen on the job, should the candidate be hired.

I'm not trying to be negative on you. This is a very common problem with resumes.

I wouldn't put any 'negative' things down, like that your English is not as good as your Chinese. If your resume is in English, everyone will assume you have an adequate ability to use it in the workplace, so you don't need to clutter up the resume with it. Fluency in Chinese could be a real asset, so I would keep that, as long as you realize the reader will then assume that English is your second language.

Good luck!

-

I was having a related problem in that I had an lvlib window open, and I still got the _ProjectWindowX value as the LaunchVI and I couldn't figure out how to distinguish this window from its project. Any ideas?

-

QUOTE (Ton @ Jan 14 2009, 09:22 PM)

Yes but only to VI server connections (with Open Application, http://zone.ni.com/reference/en-XX/help/371361E-01/glang/open_application_reference/), it is not some magic token to set up a random data server connection.

Well, you can connect to it with Telnet (this is useful for testing your connectivity and checking your firewalls), but after you send it a few keystrokes and it realizes you don't speak VI Server, it will disconnect.

If you want a server like Ton is mentioning, you have to write code using the TCP routines in vi.lib, and it has nothing to do with the INI file. You can listen on any port you want, but you have to write all the code to receive data and send responses. The LabVIEW Examples are a good place to start.
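For anyone who hasn't written one before, the shape of a hand-rolled data server is the same in any language: listen, accept, read, reply. The Python sketch below only illustrates that shape and is not LabVIEW code; the function names and the one-request-per-connection protocol are assumptions made up for the example.

```python
import socket


def make_listener(host="127.0.0.1", port=0):
    """Listen on any port you choose; port 0 lets the OS pick a free one."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(1)
    return listener


def serve_once(listener, handler):
    """Accept one client, read one request, send handler's reply, then close."""
    conn, _addr = listener.accept()
    with conn:
        request = conn.recv(4096)
        if request:
            conn.sendall(handler(request))
```

Everything beyond this (message framing, multiple clients, error handling) is the code you have to write yourself, which is the point of the paragraph above.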

-

-

-

QUOTE (Variant @ Jan 13 2009, 03:38 AM)

I need to know the internal details like, whether we are making the exe as a server or VI server opens an option for the exe to be accessed by the other applications.

Rolf is right, it would be helpful to know more about what you are asking. If you are asking if this is a security vulnerability, then yes. If you enable the VI Server then LabVIEW will listen on port 3363. If your firewall lets that port through, then other machines can detect that your computer is listening. If another computer connects to VI Server, and that computer can speak the VI Server protocol, then it can run any VI on your system if it knows the name.

I don't think LV scripting will work through this connection. I don't think you could use this connection to build new VIs on the target system which could do very bad things like list or read or delete the contents of your hard drive without specific advance knowledge of VIs existing on the remote system. (That's an interesting discussion on its own, especially if I am wrong).

If you build a separate EXE, you would have to enable VI Server for that application in its own INI file in order for that to be exposed or vulnerable.

If that wasn't the thrust of your question, then you could try again.

-

QUOTE (OlivierL @ Jan 13 2009, 09:16 AM)

About the Build Array and Concat Strings, isn't LabVIEW supposed to release the memory automatically by itself after the loop is over? I can see that as taking huge amount of time in a long loop process but can memory leaks really happen from that?

You're basically right, depending on your definition of memory leak. LabVIEW itself isn't leaking memory if it's just doing what you ask. But if performance slows to unacceptable levels while the loop is running, and if that's what the OP is experiencing, then he would be advised to look for these situations.

-

We commit all our installers, but we don't build them with LabVIEW for various reasons we are trying to eliminate. Keeping all your successful builds is well worth the server disk space.

Why don't you just build into a parallel folder, and then when you are finished, copy the whole thing into your version-controlled folder. It's a one-line command with Windows ROBOCOPY (the replacement for the venerable XCOPY). If you are doing a manual build, you can just drag and drop one folder onto the other one and replace everything old.
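If you'd rather script the copy step than use ROBOCOPY or drag and drop, here is a minimal Python sketch of the same idea. The function name and folder arguments are hypothetical, and `dirs_exist_ok` needs Python 3.8 or later.

```python
import shutil
from pathlib import Path


def publish_build(build_dir, versioned_dir):
    """Copy a finished build over the version-controlled folder.

    Same-named files are replaced. Files that exist only in
    versioned_dir (including source-control metadata) are left alone.
    """
    shutil.copytree(Path(build_dir), Path(versioned_dir), dirs_exist_ok=True)
```

Note that this copies on top of the old build and does not delete files that have disappeared from the new one, so stale files still need to be removed through your version control client.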

Jim is right though, programmatic builds are the way to go.

Jason

-

-

QUOTE (zmarcoz @ Jan 10 2009, 07:05 AM)

- Functions»All Functions»File I/O»Advanced File Functions>>New File vi. I read a book which said this vi has an input of datalog type. Then I read the VI examples, I see an example which write the data into file with this VI. The datalog input is a bundle with string and DBLs. I also read the VI which read the output file generated by the previous VI. This VI also have the bundle (I can understand this part), but it also need me to open a datalog file (I don't understand this part). What is that datalog file? Doesn't the bundle contain all the information to read the data file??

The datalog type can be any type you want (your strings and DBLs were probably just an example), but files don't have to be datalog type. If you don't wire the datalog type, you get a normal (binary or text) file which can be understood by other programs. Datalog files are LabVIEW-specific, but have pretty much been abandoned by NI, which now recommends using TDMS or LVM files. The problem is that datalog files are not self-describing, so if you don't have the original program which created the file, you can't read the file.

-

-

QUOTE (SimonH @ Jan 8 2009, 02:11 PM)

You could also use the VI Profiler that can be found at Tools » Profile » Performance and Memory…. If you check "Profile memory usage" it will show you the memory being used by each running VI as your program runs.

No, there are plenty of things the profiler won't report. I don't believe it shows global memory like queues and notifiers or stuff allocated by ActiveX nodes that you've invoked.

-

QUOTE (VoltVision @ Jan 7 2009, 01:57 PM)

Hello all,

I have an application where a variable (DBL scalar) is shown in a graph to be bouncing above and below the target setpoint @ approx 1-5sec intervals. In other words, if the setpoint is 1500lbs, the variable bounces between 1250 and 1750lbs, which is +/-250lbs and it does this bouncing @ approx 0.2 to 1 Hz. The customer wants me to show the average instead of the real-time.... so the graph would show a horizontal line @ 1500lbs in the above example. They don't want to see the ripple. So, I pulled out the "Mean PtbyPt.vi" function and as long as I increase the sample length adequately it does exactly what my customer wants. Here's my problem... When the process starts or ends and the weight returns to zero lbs, the mean is very, very slow to respond.

Is there a way to make it "come into" and "out of" the target zone quickly, but filter the heck out of it when it is in the zone? I am thinking of making my own function that would look @ the slope. If the slope is less than a certain threshold, then the "Mean PtbyPt" sample length is long, but if the slope is greater than a certain threshold, then the "Mean PtbyPt" sample length is short. Since I am just learning labview now and I have never used any of the filters or signal processing functions before, I feel like I might be recreating something that already exists? Would any of these help me? FIR, IIR, Chebyshev, Butterworth? I am glad to learn all of these things, but to accomplish my task efficiently without chasing my tail, can anyone point me in a helpful direction?

What is your sample rate? Or more specifically, how wide are the excursions in samples? Not that I really want to know, but if they are narrow, you could probably use a Median Filter, and if they are wide, you probably need some other kind. Butterworth is a very basic and popular IIR filter.

The other thing I would recommend is to store a waveform for analysis. You can just write it to a Waveform Graph, copy the graph to a new VI, make it into a control (instead of an indicator), and save the waveform as the graph's default value. Then make another graph for the filtered results. Get slightly fancy and drop the original data in there too, and you can see the filter effect plotted against the original data. Now you are freed from the pt-by-pt world, and from having to sit by the machine and you can find something that works well. You could even post it here for suggestions. Once you have a good filter, then worry about getting it to run in real time with some kind of point-by-point function.

I think it's better (but certainly not required) to use a real filter that does what you want than to make something up.
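To show what "narrow" buys you, here is a small offline comparison in Python. It is a sketch on made-up data, not the LabVIEW point-by-point VIs; the function names and the trailing-window choice are assumptions for the example.

```python
from statistics import mean, median


def sliding(values, width, reducer):
    """Apply reducer over a trailing window of up to `width` samples."""
    out = []
    for i in range(len(values)):
        window = values[max(0, i - width + 1): i + 1]
        out.append(reducer(window))
    return out


def moving_mean(values, width):
    """Trailing moving average; smears a spike across `width` samples."""
    return sliding(values, width, mean)


def median_filter(values, width):
    """Trailing median; drops spikes narrower than half the window."""
    return sliding(values, width, median)
```

Feed both the same stored waveform and plot the results next to the raw data. A one-sample excursion vanishes from the median output but shows up as a lump in the mean. Once the excursions are wider than about half the window, the median passes them through, and that is where a real low-pass filter is the better tool.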

-

QUOTE (Thang Nguyen @ Jan 7 2009, 07:10 AM)

I don't sure if Window allows two software programs write to the same TCP/IP port at the same time or not, but currently my system has two programs (1 LabView and 1 C#) having to write data to the same device by a same port. I would like to know which VI I should use to check if that TCP/IP port is busy or not and how I should do to wait on that port.

You should be able to use TCP Open Connection with a reasonable timeout. If the connection is successfully established, you can send or receive data. If the device is not ready for LabVIEW, then the Open Connection function should wait until it is. If the device is fancy enough, it may be able to handle simultaneous connections.

Since the messages are coming from the same system, you may also need to make sure that the local port (which is usually auto-numbering) is not conflicting, but LabVIEW's default is to choose an unused local port.
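The open-with-timeout idea looks roughly like this in Python. This is a sketch of the pattern, not of how TCP Open Connection behaves internally; the function name, retry delay, and timeout values are made up. The local port is left for the OS to choose, which avoids the conflict mentioned above.

```python
import socket
import time


def connect_when_ready(host, port, total_timeout=10.0, retry_delay=0.5):
    """Keep trying to connect until the device accepts or time runs out."""
    deadline = time.monotonic() + total_timeout
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError(f"{host}:{port} did not accept a connection")
        try:
            # No source address given, so the OS picks an unused local port.
            return socket.create_connection((host, port), timeout=remaining)
        except OSError:
            # Device busy or not listening yet: wait briefly and retry.
            time.sleep(min(retry_delay, max(0.0, deadline - time.monotonic())))
```

Whether the device queues, refuses, or accepts a second simultaneous connection is up to the device, so test with both programs running.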

Topic about LabVIEW just started on slashdot.org

in LAVA Lounge

Posted

My take on this is that LV has two natures. It is more accessible than most other languages for getting up and running and getting something useful done with the investment of one or two afternoons.

And yet there is a richness and elegance in the language and its advanced features for accomplishing some truly amazing things. In other words, it's a totally awesome programming environment (both the language and the execution/task scheduler).

The problem is that not many people care that both of those are true, including the other posters on /.

I'm not sure how to make an argument that would affect anyone's opinion about it.

Even NI Marketing knows that this dual nature of LabVIEW is mostly just a problem for them.