jdunham


Posts posted by jdunham

-

QUOTE (Minh Pham @ Nov 2 2008, 04:15 PM)

You don't need any PCI card for this, as serial communication is enough to control and receive messages between the PC and the device. RS232 to USB should be fine, but if you can, use the straight serial-to-serial cable, as it is simpler and cheaper that way, I reckon.

I think the OP meant that his computer doesn't have any serial ports. An RS232-to-USB adapter should work fine, and so should a PCI card. Often your motherboard will have an RS232 header on it, so you just need the extender cable to the back of your chassis, which you could find at a surplus store. Either way, you should be able to get an RS232 port for US$20-25 (unless you buy it from NI).

-

QUOTE (bodacious @ Oct 29 2008, 01:14 PM)

jdunham - maybe I'm a bit too optimistic, but my understanding was that a COTS mesh-radio architecture will take care of dynamic routing and maintaining connectivity in the network, hence it should be transparent to me as a user that "only" deals with the individual nodes. Since I don't have the mesh-radio hardware on hand yet, I'm trying to emulate the network with a simple ethernet subnet, hence my questions about the TCP/UDP broadcasts.

Ok, that makes some sense. I presume you are ready for the fact that the mesh networking will consume a lot of the bandwidth of your radios, and you are accounting for that in your traffic analysis.

To answer more of your questions, I need more information about your design considerations. If your network is reliable and you don't need a hard guarantee of delivery, then you should just use UDP for broadcasts. If either of those isn't true, can you make one of the nodes a master node? Then each node sends its updates to the master, which can deliver the broadcasts efficiently to each subscribed node. Is your network dynamic; that is, will nodes connect and disconnect at unpredictable times?
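To make the master-node idea concrete, here is a minimal in-memory sketch in Python rather than LabVIEW. The `MasterNode` class and its method names are hypothetical, and plain callbacks stand in for network sends. Each node sends one update to the master, and the master fans it out to every other subscriber; `unsubscribe` is there because a dynamic network has nodes coming and going.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class MasterNode:
    """Hypothetical relay: nodes send updates here, and the master forwards
    each update to every subscribed node except the sender."""

    def __init__(self) -> None:
        self.subscribers: Dict[str, Callable[[str, str], None]] = {}

    def subscribe(self, node_id: str, deliver: Callable[[str, str], None]) -> None:
        # 'deliver' stands in for a network send to that node.
        self.subscribers[node_id] = deliver

    def unsubscribe(self, node_id: str) -> None:
        # Nodes in a dynamic network may disappear at any time.
        self.subscribers.pop(node_id, None)

    def publish(self, sender: str, update: str) -> int:
        """Forward an update to all other subscribers; return the delivery count."""
        count = 0
        for node_id, deliver in list(self.subscribers.items()):
            if node_id != sender:
                deliver(sender, update)
                count += 1
        return count


# Usage: three nodes, each with its own inbox.
inboxes: Dict[str, List[tuple]] = defaultdict(list)
master = MasterNode()
for name in ("A", "B", "C"):
    master.subscribe(name, lambda s, u, name=name: inboxes[name].append((s, u)))

master.publish("A", "temp=21.5")
print(dict(inboxes))  # {'B': [('A', 'temp=21.5')], 'C': [('A', 'temp=21.5')]}
```

Delivery guarantees (acknowledgements, retries) are deliberately left out; if you need them, the master is the natural place to add them.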

-

QUOTE (Kyle Hughes @ Oct 29 2008, 02:22 PM)

I would love to hear some comments on PushOK CVS as well. Attempting to find a decent WEB-based utility to help a team of remote users work on the same LabVIEW project.

Is your team already using CVS? If not, it's hard to imagine a reason not to use SVN instead.

-

QUOTE (bodacious @ Oct 29 2008, 10:36 AM)

A server architecture would certainly solve a lot of problems, but I'm not 100% certain that all nodes will be able to see the server. In the final implementation we will employ a mesh radio to extend the wireless range, but I don't have the hardware yet, so I can't tell if that's an option or not. Another concern is data traffic volume - if we have a large number of nodes and all nodes need to exchange data with all nodes across the network, the traffic scales with N^2, instead of just N if the nodes can broadcast their information.

At the risk of being a jerk, I will point out that you are designing a mesh network (whether or not you get mesh radios), and that mesh networks are hard; a lot of people are doing PhDs and starting companies about them. If you are really going to reinvent all of this, and since you have some pretty basic questions, I think you have a lot of struggle ahead. I don't want to say you couldn't do it, but I hope you are reading a lot of the literature and looking for other forums where people have more relevant expertise (nothing personal intended to you or Dan).
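The N^2-versus-N scaling in the quoted question is easy to check with a quick count. This sketch assumes every node must get every other node's update once per cycle. Point-to-point delivery then needs N(N-1) messages, while an ideal broadcast needs one transmission per node. The broadcast figure ignores relaying and retransmissions, which is exactly the overhead a mesh network adds back.

```python
def unicast_messages(n: int) -> int:
    """Point-to-point: each of n nodes sends its update to the other n - 1."""
    return n * (n - 1)


def broadcast_messages(n: int) -> int:
    """Ideal broadcast: each node transmits once and every node hears it.
    Ignores mesh relays and retries, which a real network adds."""
    return n


for n in (5, 20, 100):
    print(n, unicast_messages(n), broadcast_messages(n))
# 5 20 5
# 20 380 20
# 100 9900 100
```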

-

QUOTE (Thang Nguyen @ Oct 28 2008, 10:58 AM)

What do you think of my event structure with the solution of using the register node? Is it fine?

I'm a big fan of notifiers. To me they seem easier than dynamic events, but if you already need the event structure, it's probably a good solution. I don't really like to mix the GUI code with the process messaging code.
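For readers who haven't used notifiers, the key property is that a notifier holds only the latest value, so a slow listener sees the most recent message instead of a backlog. A queue, by contrast, keeps every message. The Python class below is a hypothetical analogue built on `threading.Condition`, not NI's implementation. The `seq` counter is an assumption added here so that a waiter can ask for "anything newer than what I last saw".

```python
import threading
from typing import Any, Optional, Tuple


class Notifier:
    """Rough analogue of a LabVIEW notifier: stores only the most recent
    value and wakes every waiter when a new one is sent."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._value: Any = None
        self._seq = 0  # incremented on every send

    def send(self, value: Any) -> None:
        with self._cond:
            self._value = value  # overwrite: older values are lost
            self._seq += 1
            self._cond.notify_all()

    def wait(self, last_seq: int, timeout: Optional[float] = None) -> Optional[Tuple[Any, int]]:
        """Block until a notification newer than last_seq arrives.
        Returns (value, seq), or None if the timeout expires first."""
        with self._cond:
            if not self._cond.wait_for(lambda: self._seq > last_seq, timeout):
                return None
            return self._value, self._seq


notifier = Notifier()
notifier.send("start")
notifier.send("stop")                         # both sent before anyone listened
print(notifier.wait(0))                       # ('stop', 2): only the latest survives
print(notifier.wait(2, timeout=0.05))         # None: nothing newer arrived
```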

-

QUOTE (BrokenArrow @ Oct 28 2008, 10:30 AM)

Hello all,

Once LabVIEW takes control of the serial port (sets it up, starts reading and writing to it), it doesn't want to let go, even if you've closed VISA, etc.

In my experience, when your hardware freezes up (let's say due to a bad command sent to a device), you have to quit LabVIEW and restart. No number of serial port stops, breaks, or closes will make it re-open the port successfully. You have to close LV. On a similar note, if you've started LabVIEW and had any kind of serial comm going on, you have to quit LabVIEW in order to use HyperTerminal (or anything else). These two scenarios are related in that I don't know why LabVIEW holds the port hostage.

Is there a way, while LV is still up and running, to simulate whatever is happening when LV closes and opens?

VISA Close works just fine for me, but other VISA calls will auto-open the port. Therefore you have to make sure that after closing, you don't call any other VISA functions on the port, even in other loops. I just tried it again.

If I write some characters to COM1, I can't open the port in my terminal (PuTTY). Once I run VISA Close, the terminal opens it OK. Can you post some sample code?
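The auto-open trap is easier to see in a toy model. The Python below is a simulation only, not the VISA API. `AutoOpenSession`, `port_owner`, and `terminal_can_open` are hypothetical names. The model encodes the behavior described above: a port has one owner at a time, and any I/O call on a closed session silently reopens it. A single stray call in another loop after VISA Close is enough to lock the terminal out again.

```python
from typing import Dict, Optional

# Hypothetical stand-in for the OS: which application holds each COM port.
port_owner: Dict[str, Optional[str]] = {}


class AutoOpenSession:
    """Toy session: I/O on a closed session re-acquires the port."""

    def __init__(self, app: str, port: str) -> None:
        self.app, self.port, self.is_open = app, port, False

    def _ensure_open(self) -> None:
        if not self.is_open:
            holder = port_owner.get(self.port)
            if holder not in (None, self.app):
                raise RuntimeError(f"{self.port} is busy (held by {holder})")
            port_owner[self.port] = self.app
            self.is_open = True

    def write(self, data: bytes) -> int:
        self._ensure_open()  # the "auto-open" step
        return len(data)

    def close(self) -> None:
        if self.is_open:
            port_owner[self.port] = None
            self.is_open = False


def terminal_can_open(port: str) -> bool:
    """Another program (e.g. PuTTY) can open the port only if nobody holds it."""
    return port_owner.get(port) is None


lv = AutoOpenSession("LabVIEW", "COM1")
lv.write(b"hello")
print(terminal_can_open("COM1"))  # False: LabVIEW holds the port
lv.close()
print(terminal_can_open("COM1"))  # True
lv.write(b"stray call from another loop")
print(terminal_can_open("COM1"))  # False again: the port was reopened
```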

-

QUOTE (Thang Nguyen @ Oct 28 2008, 09:43 AM)

With the queue I have to wait for the timeout to dequeue. In my dynamic VI B, I have an event structure. They may affect each other. It will be the same with a notifier. Maybe I don't know how to manage them well.

You shouldn't need to wait for a timeout. Main VI A should open the queue and drop your values in. Dynamic VI B should open the queue and start listening, and there should always be something in the queue. You can use a zero timeout and throw an error if the queue is empty, because your queue should always have the parameters waiting in it. We start all of our cloned dynamic VIs this way. The dynamic VI should force-destroy the queue, because if you let the main VI close the queue, then it may clean up the data before the dynamic VI can receive it.
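Here is the same start-up handshake sketched in Python, with a thread standing in for the dynamically launched VI. The function names are hypothetical. The caller enqueues the parameters before launching the worker, so the worker can dequeue with no wait at all and treat an empty queue as an error. Python has no counterpart to force-destroying a queue, because garbage collection reclaims it, so that part of the advice doesn't carry over.

```python
import queue
import threading


def dynamic_vi_b(params_q: "queue.Queue[dict]", results: list) -> None:
    """Stands in for dynamic VI B: read its start-up parameters immediately."""
    try:
        # Zero timeout: the parameters must already be waiting in the queue.
        params = params_q.get_nowait()
    except queue.Empty:
        raise RuntimeError("start-up parameters missing from queue")
    results.append(params["channel"])


def main_vi_a() -> list:
    """Stands in for main VI A: enqueue the parameters first, then launch B."""
    params_q: "queue.Queue[dict]" = queue.Queue()
    params_q.put({"channel": 3})  # values go in before B starts
    results: list = []
    worker = threading.Thread(target=dynamic_vi_b, args=(params_q, results))
    worker.start()
    worker.join()
    return results


print(main_vi_a())  # [3]
```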

-

QUOTE (TobyD @ Oct 27 2008, 08:44 AM)

EDIT: I found this article on ni.com (http://digital.ni.com/public.nsf/allkb/F02B2BF8943A31D786257393005D16F1), but I'm running Vista and the application data folder no longer exists - still looking

On my Vista computer, visaconf.ini is located in C:\ProgramData\National Instruments\NIvisa.

I don't know the best way to replicate settings between computers, but it seems likely that your app will need elevated privileges if you want to write a program that modifies the file.

-

QUOTE (Aristos Queue @ Oct 25 2008, 07:12 PM)

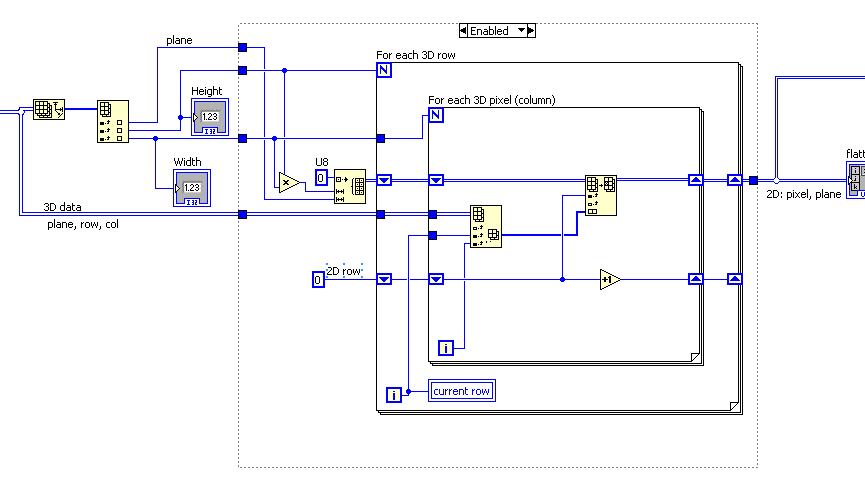

http://lavag.org/old_files/monthly_10_2008/post-1764-1225093225.png

-

The problem is that you are using Build Array inside the loop. This requires LabVIEW to reallocate memory on every iteration (I think they try some optimization, but there is only so much they can do). Instead of using shift registers that start empty and hold an ever-growing array, you should initialize them with a full-size array of zeros, and then use Replace Array Subset, which is an in-place operation, to drop the correct values in at each location.

Not only is Build Array slow, but it gets slower as the array grows.

On my computer, the original VI ran too slowly to measure. After about 15 minutes it had done more than half of the rows, but it was getting slower.

The rewritten version ran in 47ms.
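The difference between growing an array and preallocating it shows up in any language, so here is a rough Python analogy. It is not LabVIEW code. Python's `list.append` is amortized and would hide the effect, so the "Build Array" version uses explicit concatenation, which allocates a new list and copies everything on every pass. That copying is the O(n^2) behavior described above. Absolute timings will vary by machine.

```python
import time


def build_by_concatenation(n: int) -> list:
    """Like Build Array on a shift register: each iteration allocates a new,
    one-element-longer list and copies the old contents into it (O(n^2))."""
    arr: list = []
    for i in range(n):
        arr = arr + [i * i]  # new allocation plus a full copy every time
    return arr


def build_preallocated(n: int) -> list:
    """Like Initialize Array + Replace Array Subset: allocate once, then
    overwrite elements in place (O(n))."""
    arr = [0] * n
    for i in range(n):
        arr[i] = i * i  # in-place replacement, no reallocation
    return arr


if __name__ == "__main__":
    for fn in (build_by_concatenation, build_preallocated):
        start = time.perf_counter()
        fn(10_000)
        print(f"{fn.__name__}: {time.perf_counter() - start:.4f} s")
```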

-

QUOTE (Phil Duncan @ Oct 23 2008, 08:37 AM)

The start trigger for the acquisition needs to be either an analog rising edge or falling edge on ANY of the 16 channels. I am currently using a simulated M series PCI 6250 to try this out, but I can't seem to set up the triggering. It seems that a DAQmx task can only have one single trigger. I could try to set up 16 tasks, each with their own trigger, then try to acquire data on all tasks if any task triggers, but this seems ludicrous.

Setting up 16 different tasks will not work, as there is only one circuit for the analog trigger. As others have said, you can acquire continuously and perform the "triggering" in software. Our product (a gunshot detection system) has been doing this with NI-DAQ since 1995 and it works fine. You should have no problem with 20kS/s.

I would recommend that you learn about the DAQmx Read property node. The Read system is very flexible and powerful. Rather than making you write your own circular buffer, it lets you use the circular buffer built into DAQmx. You keep reading all of your channels to detect the trigger condition, and when you have found it, you call DAQmx Read with the desired range of samples and get all the data in one coherent chunk. You can also do things like looking for trigger conditions in a 30 ms window, but grabbing a new window every 1 ms. Because the windows overlap, you don't have to write extra code to handle events that straddle the boundary between two successive reads.
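The software-trigger idea can be sketched in Python with plain lists standing in for the multi-channel data that DAQmx returns. The function names and the simple level-crossing test are assumptions for illustration. `find_trigger` looks for an edge on any channel. `scan` slides a window forward by a step smaller than the window, so an edge that falls between two successive reads is still caught. The usage lines show a non-overlapping scan missing an edge that the overlapping scan finds.

```python
from typing import Optional, Sequence


def find_trigger(block: Sequence[Sequence[float]], level: float,
                 rising: bool = True) -> Optional[int]:
    """Return the first sample index at which ANY channel crosses `level`
    (rising or falling edge), or None. `block` is a list of channels,
    each a list of samples."""
    n = min(len(ch) for ch in block)
    for i in range(1, n):
        for ch in block:
            prev, cur = ch[i - 1], ch[i]
            if rising and prev < level <= cur:
                return i
            if not rising and prev > level >= cur:
                return i
    return None


def scan(stream: Sequence[Sequence[float]], window: int, step: int,
         level: float) -> Optional[int]:
    """Slide a `window`-sample view forward by `step` samples. With
    window > step, consecutive views overlap, so no edge falls between them.
    Returns the absolute sample index of the first trigger, or None.
    (Samples after the last full window would wait for the next read.)"""
    total = min(len(ch) for ch in stream)
    start = 0
    while start + window <= total:
        view = [ch[start:start + window] for ch in stream]
        hit = find_trigger(view, level)
        if hit is not None:
            return start + hit
        start += step
    return None


stream = [[0.0] * 8, [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]]
print(scan(stream, window=2, step=2, level=0.5))  # None: edge straddles two reads
print(scan(stream, window=3, step=2, level=0.5))  # 4: the overlap catches it
```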

-

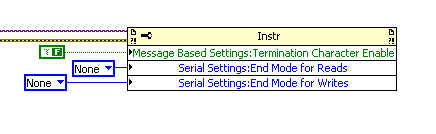

I think VISA serial defaults to a text-oriented mode, where EOL chars are sent automatically at the end of each write. You should set more of the VISA serial properties explicitly to make sure all those features are turned off.

-

QUOTE (jgcode @ Oct 16 2008, 05:28 PM)

Would running the above VI and forking data to the subvi in an inplaceness structure guarantee that a copy will not be made?

I don't think so, though I was hoping someone who knows more than me would have chimed in by now.

It helps to think about the requirements on LabVIEW. When you fork a wire, LabVIEW can only continue sharing the data between the branches if it knows that no change on either side will violate the integrity of the data on the other side of the fork. If you fork a wire and send one tine into a subVI, then that subVI might modify the array, so the caller is going to have to make a copy to protect the other tine(s) on the calling diagram. If you wire the array into the subVI and back out, then you can avoid the fork, and the caller won't have to make copies to protect the data.

Also, if one side of the fork doesn't change the data (for example, it just gets the array size), then there should be no extra buffer allocation. Even if you send the other tine into some mysterious subVI, the compiler is smart enough to take the size first, before it allows the subVI call to have its way with the data. Use the Show Buffer Allocations tool to see the actual situation for a particular diagram.

I think the In Place Element structure is just a hint, since you can't force the compiler to violate dataflow.

This would all make sense with pictures and circles and arrows, and I just don't have the time or knowledge to do that right.

-

If you care about memory usage, you need to make sure the subset array wire is a true subarray, and you need to scrutinize the buffer allocations nearby on the original array wire. AFAIK, the only way to know about subarrays is to look at the wire's context help.

Make sure you read this thread about sub-arrays

Even if the min-max is performed on a subarray, you need to check the array going into everything. If you run jgcode's fine VI, I think the caller will often have to make a copy of the entire source array, which you can detect by viewing the buffer allocations. If you took that VI and wired the array in and passed it back out, and did not fork the wire in the caller, you can probably avoid any array copies. Notice I am hedging here because it's hard to find clear documentation on this complex subject.
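LabVIEW's subarrays have no exact textual equivalent, but Python's standard library offers a close analogy for the view-versus-copy distinction. Slicing an `array.array` copies the data, while slicing a `memoryview` of it shares the original buffer, much like a true subarray wire. This illustrates the concept only. It says nothing about how LabVIEW decides when to copy, which is still best checked with Show Buffer Allocations.

```python
from array import array

samples = array("d", [3.0, -1.0, 7.5, 2.0, 9.0, -4.0])

copy_slice = samples[1:4]              # slicing array.array copies the data
view_slice = memoryview(samples)[1:4]  # a memoryview slice shares the buffer

# Min/max over the "subarray" without copying the elements:
print(min(view_slice), max(view_slice))  # -1.0 7.5

samples[2] = 100.0     # modify the original array
print(copy_slice[1])   # 7.5   -> the copy kept the old value
print(view_slice[1])   # 100.0 -> the view sees the change
```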

-

QUOTE (d_nikolaos @ Oct 13 2008, 07:51 AM)

Hi,

I want to join 2 bytes that I read from the serial port. Is that possible?

Apart from that, I want to have something like start and stop bytes.

I want to send some numbers in decimal form from a PIC through the RS232, like '#19*'

# = start byte

* = stop byte

Is that possible?

Everything is possible, but what kind of inputs and outputs are you looking for?

Check out "Concatenate String", "Format Into String", the String/Number Conversion palette, and possibly the Data Manipulation palette.

It would also help if you post some code (or a block diagram screen capture) of what you are trying to do.
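As a language-neutral illustration of both requests, here is a Python sketch. It is not a LabVIEW answer, and the function names are hypothetical. `parse_frames` pulls every complete `#<digits>*` frame out of the bytes read from the port, with '#' as the start byte and '*' as the stop byte. `join_bytes` combines two bytes into a 16-bit value. Which byte the PIC sends first is an assumption, so swap the arguments if it sends the low byte first.

```python
import re
from typing import List

# '#' start byte, one or more ASCII digits, '*' stop byte.
FRAME = re.compile(rb"#([0-9]+)\*")


def parse_frames(buffer: bytes) -> List[int]:
    """Extract the numbers from all complete '#<digits>*' frames.
    An incomplete frame at the end is ignored here; a real reader would
    keep those bytes and prepend them to the next read."""
    return [int(m.group(1)) for m in FRAME.finditer(buffer)]


def join_bytes(high: int, low: int) -> int:
    """Combine two bytes into one 16-bit value, high byte first (assumed)."""
    return (high << 8) | low


print(parse_frames(b"xx#19*#204*#7"))       # [19, 204]; the trailing '#7' is incomplete
print(join_bytes(0x01, 0x2C))               # 300
print(int.from_bytes(b"\x01\x2c", "big"))   # 300, the same join using the stdlib
```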

-

QUOTE (Jo-Jo @ Oct 10 2008, 01:25 PM)

I'm sure I'm not the only one that has run up against this. Does anyone know of a way to keep references alive after closing, or just stopping, the top level VI of the hierarchy that opened them? I'm trying to implement a more distributed architecture (not really distributed in the networking sense, everything is on one PC), in which the different application instances must know what the other instances are doing. In particular when a panel closes, it needs to check to see if any others are open in order to determine what to write to an XML file I am using. I know I can just reacquire new references whenever I dynamically open a new top-level VI, but I feel this is sloppy, and resource intensive. I feel a much better way of doing business is to acquire all the references I need when I initialize the application, and then keep them alive in a global, or something else that allows communication between threads, and close them when I'm finished with them. Unfortunately LabVIEW doesn't like this sort of architecture, and I can't find a way to stop the garbage collection.

The other replies are right about ways to keep a VI's reference open, but it sounds like you want to keep the caller's reference open. I read your post a few times, but I still don't quite get it. Let's say you have a loader VI which kicks off the real app panel with VI Server. Why do you want to keep a ref to the caller, anyway? My first thought is that you should keep a list of all the spawned panels themselves, so that your manager can check whether those are still open or valid. But maybe I'm misconstruing what you really need. What good is a ref to a VI which is closed and stopped?

To put it another way, if you have to make a special effort to defeat the LabVIEW garbage collection, maybe your design is not quite right. How are you using different "application instances"? Are you running separate built EXEs? You can't share a global between application instances, whether in EXEs or LV source code. A VI ref is dependent on the application instance, so how do you deliver the correct app instance to your top level VIs now? Even if you open the VI ref by name, you have to supply the app instance.

Maybe it's just Friday afternoon and I am wiped out, so ignore my babbling if you have solved it already.

-

QUOTE (Mark Yedinak @ Oct 9 2008, 08:53 AM)

This approach will not work if you are using TCP connections though. If you use this on a TCP connection you will establish two independent connections to the device. The device should be smart enough that it returns the response on the same connection that it received the data on. It would be nice if VISA allowed true asynchronous communication. In addition, it would be nice if VISA allowed independent control of the various settings on the resource. In particular, it should allow different timeout values for the transmit and receive.

Thanks, I should have stated more clearly that I was talking about serial ports. For TCP, our app uses the native TCP calls, which work fine for full-duplex communication. I suppose we could have used VISA for that too, but your post makes me glad we didn't.

-

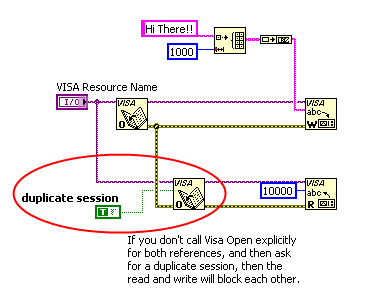

QUOTE (skof @ Oct 8 2008, 10:02 PM)

What I expected is that in asynchronous mode my transmit and receive processes would be independent, i.e. they would not block each other. What I see in fact, in both synchronous and asynchronous modes, is that a receive process may wait for a response and block a transmit process from sending the data that would produce that response.

Looks like Transmit and Receive still work via one door even in asynchronous mode.

As I understand from this article http://digital.ni.com/public.nsf/allkb/ECC...6256F0B005EEEF7, locking takes place in asynchronous mode anyway.

Yes, it seems like asynchronous should help.

But, yes, you can get what you want.

http://lavag.org/old_files/monthly_10_2008/post-1764-1223532516.png

I did suggest to Dan that this useful nugget be put on the NI Developers' Zone, but a quick search turns up nothing.

Let me know if it works for you.

-

QUOTE (jlokanis @ Oct 8 2008, 10:56 AM)

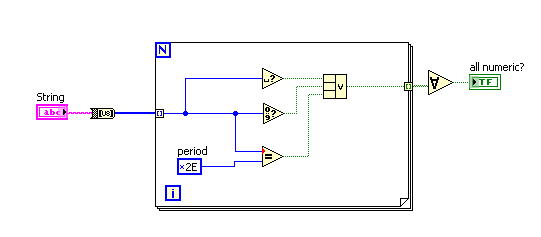

Well, it's pretty easy to write in LabVIEW without regular expressions.

But make sure that you don't need to support scientific notation or NaN, and think about whether to allow a + or - sign, but only at the beginning.

You should also use Trim Whitespace.vi to strip leading and trailing whitespace rather than testing for whitespace characters yourself, if you don't want to allow whitespace in the middle.

If you really want to do it right, a regular expression may be better. Check out http://www.regular-expressions.info/floatingpoint.html
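As a sketch of the regular-expression route, here is a Python version modeled on the floating-point pattern discussed on that page, with `strip()` playing the role of Trim Whitespace.vi. The `allow_exponent` switch is a hypothetical addition for the "do you want scientific notation?" question. Note that this pattern rejects NaN, and it also rejects a trailing decimal point such as "5.". Adjust it if your inputs need either.

```python
import re

# Optional sign, digits with an optional decimal point, optional exponent.
FLOAT_RE = re.compile(r"[-+]?[0-9]*\.?[0-9]+(?:[eE][-+]?[0-9]+)?")


def is_number(text: str, allow_exponent: bool = True) -> bool:
    """True if `text` is a plain decimal number, ignoring outer whitespace."""
    s = text.strip()  # like Trim Whitespace: leading/trailing only
    if not FLOAT_RE.fullmatch(s):
        return False  # also rejects whitespace left in the middle
    return allow_exponent or not any(c in "eE" for c in s)


for candidate in (" 3.14 ", "+.5", "1e-3", "1 2", "NaN", "5."):
    print(repr(candidate), is_number(candidate))
```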

-

QUOTE (jgcode @ Oct 7 2008, 02:52 PM)

Hi MJE,

Cheers, I did read about the file location transparency.

However, in Vista - Read/Write File Functions return an error in LV if you try to read/write a file you do not have admin access to.

So the transparency only comes into play after you enable admin access.

Other programs don't seem to have this problem - i.e. admin rights is not selected - so there must be a way around this?

The transparency is also called virtualization, because you are allowed to write to a virtual C:\Program Files\ which is not the real thing.

When you install a program, the installer runs under admin privs, and I think at that time you can declare that your app's virtualized folders have lesser privilege. I'm too lazy to look up whether this is the exact truth, but that's what I remember from a bunch of previous googling.

-

QUOTE (bmoyer @ Oct 7 2008, 05:12 AM)

http://lavag.org/old_files/monthly_10_2008/post-1764-1223401468.png

-

QUOTE (jgcode @ Oct 5 2008, 11:18 PM)

Thinking out loud, however, if the installer installs the data files (whether in Program Files or elsewhere), wouldn't the installer know to remove them as well on an uninstall? Or is there a built-in proxy in the installer that does not allow such behaviour?

Well, what has worked for us is that the configuration INI file is not installed by the installer (actually we do install one to fix the fonts, and that has to be in the program files folder, and it never changes).

For the real INI file with our application settings, the one which will go in the user directory, the software checks for it on every startup and creates it if not found (you may want to prompt the user to set the settings at that point too). I suppose your uninstaller ought to remove that file, which would be the polite thing to do, but I haven't tried that.
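The "create it on first startup" approach looks roughly like this in Python with the standard `configparser` module. The section name, keys, and default values are hypothetical placeholders. The demo writes into a temporary folder standing in for the user's directory, so it leaves nothing behind. On a real system you would pick a per-user location instead.

```python
import configparser
import tempfile
from pathlib import Path

# Hypothetical default settings; a real application would have its own.
DEFAULTS = {"Settings": {"port": "COM1", "baud": "9600"}}


def load_or_create_config(path: Path) -> configparser.ConfigParser:
    """Read the per-user INI file, creating it with defaults if it is missing.

    The installer never installs this file, so reinstalling or upgrading
    the program cannot overwrite the user's settings.
    """
    config = configparser.ConfigParser()
    if path.exists():
        config.read(path)
    else:
        path.parent.mkdir(parents=True, exist_ok=True)
        config.read_dict(DEFAULTS)
        with path.open("w") as f:
            config.write(f)
        # First run: this is where you might prompt the user for settings.
    return config


if __name__ == "__main__":
    # Demo in a throwaway folder standing in for the user's profile directory.
    with tempfile.TemporaryDirectory() as tmp:
        ini = Path(tmp) / "MyApp" / "settings.ini"
        print(ini.exists())                                    # False
        print(load_or_create_config(ini)["Settings"]["baud"])  # 9600
        print(ini.exists())                                    # True
```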

-

Our project has lots of typedefs and interconnected functionality. We (4 developers) use SVN and we don't use locking, but we sure don't look forward to merging. We have some conventions and some custom tools which help with the merging, but it annoys me that they need to exist. Sometime soon, I am going to try locking to see whether it improves our workflow.

-

QUOTE (jgcode @ Oct 4 2008, 05:42 PM)

Thanks for the responses guys :beer: Cheers for the links as well.

I also wanted to point out that there is a big benefit to getting your config data out of c:\Program Files\.... If you want to deliver an upgrade to your program, you can let the installer blow away everything in that folder without losing any user settings or configuration state.

String Parsing

in LabVIEW General


QUOTE (Minh Pham @ Nov 3 2008, 07:19 PM)

I don't think it's safe to assume that the base file name doesn't have any numbers in it, so I think you need a more sophisticated regexp than [0-9]+

If you use

\s[0-9]+(?=\.vi$)

then it will find the number right before the extension, including the leading space. This only works with the Match Regular Expression function, not the older and simpler Match Pattern function.
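The same pattern can be tried outside LabVIEW. Python's `re` module uses the same lookahead syntax, so here is a small sketch. The `trailing_number` helper is a hypothetical name. Digits elsewhere in the base name are ignored, and a name that doesn't end exactly in ".vi" gives no match.

```python
import re

# A whitespace char, one or more digits, then a lookahead requiring ".vi" at the end.
CLONE_NUM = re.compile(r"\s[0-9]+(?=\.vi$)")


def trailing_number(filename: str):
    """Return the number just before '.vi' as an int, or None if absent."""
    m = CLONE_NUM.search(filename)
    return int(m.group().strip()) if m else None


print(trailing_number("Motor 2 Controller 15.vi"))  # 15 (the '2' is ignored)
print(trailing_number("Motor 2 Controller.vi"))     # None
print(trailing_number("Panel 3.vit"))               # None: must end in '.vi'
```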

However, I gotta ask: what are you trying to do? Cloning VIs is way easier than making your own copies of templates. That's how we spawn off multiple processes and multiple instances of a pop-up GUI. You can search LAVA for more details (that's how I learned about them).