jdunham

Posts posted by jdunham

-

My goal is to find the best (fastest) way to update a graph on the main VI's front panel from other subVIs.

They are a bit weird to work with, but you might consider subpanels. Then you can have your graph in the subvi, which is great for debugging, and show the panel in the main VI with no extra programming.

However if your data is coming from different acquisition VIs and aggregated in the graph it may be the right solution.

-

Wow! (feel free to substitute other words to shout here) This is much more formidable than the CLA I remember. I'd rather take the four hour exam any day rather than this one. Unless the CLA has also changed, it would seem that the CLA and the CLA-R are oddly incongruent.

Hi Jim, I'm sorry you have some hassles ahead of you to get re-certified, but I have to confess to feeling a bit vindicated, so thanks for sharing your experience. I totally agree with all of your points. That test is just a wee bit too hard, and I hate that saying that makes me into a whiner too.

Jason

-

Couldn't you kill the process via the command line?

This VI from the dark side shows some of the commands.

Functions in a DLL execute within your process. You can't kill a DLL separately from the app.

-

I believe the NI replacement would be VariantType.lvlib:GetTypeInfo.vi and its associated typedef control.

I'll also point out that it is pretty trivial to implement your own Format Anything method once you start using the GetTypeInfo call. A simple case structure on the returned type and you're off to the races. Here's some code I've been using for the last year or so:

Wouldn't be too hard to extend that to duplicate all the functionality of the OpenG VI.

Thanks! We use some other custom "format anything" VIs and we were handling the timestamps with custom code. Thanks for pointing me at the new VI.

-

I believe all of these issues arise from the OpenG library not being able to distinguish between timestamp and waveform types. In my opinion the legacy OpenG VIs which now have NI equivalents should be retired to avoid errors like this.

What's the NI Equivalent for Format Variant Into String__ogtk.vi?

-

So it seems there are a few ways of doing this (this = high-speed imaging at 3000-5000 Hz, then writing to disk and post-processing later):

1. Use the Grab VI, attach a time stamp to each image (for the filename, for example), write this combination to a queue, and dequeue later to post-process.

2. Use "LL Sequence.VI".

3. Use "LL Ring.VI" or "Buffered Ring".

With the Grab VI, the timestamps are the way to check FOR SURE that the frame rate is what I set it to be (frame rate = #frames / total time, and timestamp(N) - timestamp(N-1) = exposure time, which should be constant).

However, with the "Sequence" or "Ring" option, is there a way to get accurate time stamps of the acquired frames? It seems like I can only set the total number of frames (N) and maybe measure the total acquisition time to get the average frame rate. That is not very good for me, since I can't be sure that every frame was acquired with deltaT = (1/framerate).

So what's the final word on the "best way" to do high-speed image acquisition and writing to disk?
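The timing check described in the question can be sketched in a few lines of Python (used here only for illustration, since LabVIEW diagrams can't be shown as text). The function name, the timestamps-in-seconds convention, and the 5% tolerance are all assumptions, and the average rate is computed from the number of intervals (frames minus one) rather than the number of frames.

```python
def check_frame_timing(timestamps_s, expected_rate_hz, tolerance=0.05):
    """Check a list of per-frame timestamps (seconds) against an expected rate.

    Returns (average_rate_hz, worst_interval_s, ok), where ok is True only if
    every frame-to-frame interval is within `tolerance` (a fraction, assumed
    5% here) of the expected interval.
    """
    if len(timestamps_s) < 2:
        raise ValueError("need at least two timestamps")

    # Frame-to-frame intervals: timestamp(N) - timestamp(N-1).
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]

    # Average rate over the whole acquisition.
    total_time = timestamps_s[-1] - timestamps_s[0]
    average_rate = len(intervals) / total_time

    expected_interval = 1.0 / expected_rate_hz
    # The interval that deviates most from the expected one.
    worst = max(intervals, key=lambda dt: abs(dt - expected_interval))
    ok = all(abs(dt - expected_interval) <= tolerance * expected_interval
             for dt in intervals)
    return average_rate, worst, ok
```

A dropped frame shows up as one interval roughly twice the expected length, which the average rate alone would hide.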

Sorry I don't have IMAQ loaded on my current computer, but I can give some general observations.

If at all possible you should try for a hardware timed acquisition with frame buffer handling done at the driver level. With a really high frame rate like you have, it seems way too risky to attempt any kind of queue-based software buffering at the LabVIEW level.

I would try to use LL Ring.vi or Buffered Ring since your goal is continuous acquisition. I don't know how your frames are synced and timed on the hardware side, and so that could affect the system, but it seems like the Ring grab should be able to give you an accurate timing of frames. It shouldn't just be dropping frames, or if it does, you should try to fix that somehow so that you can grab without any dropped frames.

If you get to that point, then the frame timestamp should be a simple multiplication of the frame number and your frame period (1 / frame rate), added to the start time.
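As a rough illustration of that arithmetic (in Python, since I can't paste a diagram here), assuming zero-based frame numbers and a perfectly regular hardware-timed frame rate; the function name is made up for the example:

```python
from datetime import datetime, timedelta


def frame_timestamp(start_time, frame_number, frame_rate_hz):
    """Timestamp of a frame from its number, assuming no dropped frames.

    Frame 0 is assumed to coincide with start_time; each later frame is
    one frame period (1 / frame_rate_hz seconds) after the previous one.
    """
    frame_period_s = 1.0 / frame_rate_hz
    return start_time + timedelta(seconds=frame_number * frame_period_s)


# Example: at 4000 Hz, frame 4000 lands one second after the start.
start = datetime(2011, 1, 1, 12, 0, 0)
one_second_in = frame_timestamp(start, 4000, 4000.0)
```

This only holds if the ring acquisition really delivers every frame; a single dropped frame shifts every later timestamp by one period.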

If your framesync isn't regular, for example if it is based on some detection sensor, then you have a bigger problem. In that case I would get an NI multifunction or counter/timer card and use the RTSI bus to share the framesync with a hardware timer, and then you can build up a buffer of timestamps associated with framesyncs.

If you can get that hardware-timed ring grab working, then you should be able to set up a parallel thread to monitor the current frame number and write any new frames to disk, hopefully without converting them to 2D arrays first (which is really slow); instead using some kind of IMAQ function to save the file.

At any rate, my suggestion would be to get to the point where you can rely on every frame being acquired with a known delta time, because I don't think you'll be successful measuring it in software at those high rates.

Good luck,

Jason

P.S. It probably would have been good to start a new thread since it's a new problem.

-

In my first attempt I am trying to acquire a set number of frames N (say N = 4000). So I used queues (of size N+1000) and the Grab Acquire VI. As soon as I grab the image, I assign a time stamp to it, and I write this cluster of (image + time stamp) to a queue. Once all N frames are acquired, I dequeue and post-process.

Aren't there native IMAQ functions for writing images to disk? Why don't you stream your input straight to disk, and keep a list of frame numbers and/or file names and timestamps in your queue? That gives you an index into your collection of disk image files, and you can access them in your post-processing step.
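Here is the shape of that idea as a small Python sketch, not LabVIEW or IMAQ code. The frames are placeholder byte strings, the file naming is invented, and plain file writes stand in for whatever native image-saving function you end up using. The point is only that the queue carries a small index record per frame instead of the image itself.

```python
import os
import queue
import time


def acquire_to_disk(frames, out_dir, index_queue):
    """Write each frame straight to disk and enqueue only a small index record."""
    for frame_number, frame_bytes in enumerate(frames):
        timestamp = time.time()  # software timestamp, for illustration only
        path = os.path.join(out_dir, f"frame_{frame_number:06d}.bin")
        with open(path, "wb") as f:
            f.write(frame_bytes)
        # The queue holds (frame number, file name, timestamp), not the image.
        index_queue.put((frame_number, path, timestamp))


def post_process(index_queue):
    """Walk the index after acquisition and load each frame back from disk."""
    results = []
    while True:
        try:
            frame_number, path, timestamp = index_queue.get_nowait()
        except queue.Empty:
            break
        with open(path, "rb") as f:
            data = f.read()
        results.append((frame_number, timestamp, len(data)))
    return results
```

Memory use then stays flat no matter how many frames you acquire, as long as the disk can keep up with the frame rate.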

-

I am mucking around with the ADODB (7.0.3300.0) .NET library and was wondering if anyone else had tried it. I tried to write a simple snippet of code to return a list of tables in an Access database using the ConnectionClass constructor, without much luck. I have attached a png of the diagram and an error message. The Open method executes just fine, but the OpenSchema method, which is used to return a record set reference for the list of tables, fails. The OpenSchema method expects the Restrictions and SchemaID arguments as .NET object references, whereas in the ADO ActiveX library they are variants and can be left unwired without errors. After researching the OpenSchema method a bit, it seems that the newer .NET version possibly requires a SchemaID other than NULL, but I have no idea where to obtain it. There is a CreateObjRef method in the ConnectionClass, but it too requires an Object ref as an input, which seems like a strange catch-22.

We use a .NET constructor for System.Data.SqlClient.SqlConnection. All our database transactions use that.

Jason

-

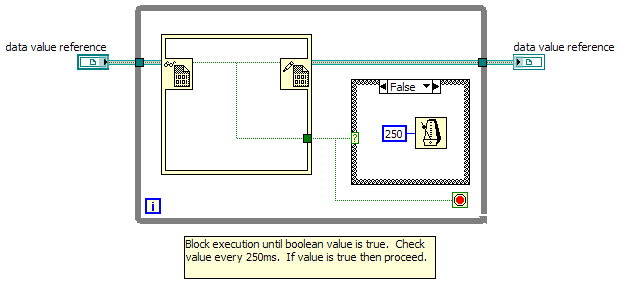

I thought this was an interesting comment. Suppose I created a sub vi like this and used it in an app. Is this polling or is it waiting for a TRUE event to occur?

Well if I can't seem to be right, I'll take interesting as a consolation prize.

Yes it's polling. However, I don't see that you've abstracted it away. 'Abstracting' implies you've hidden the implementation details, which you haven't. Now if you put that in an lvlib or an lvclass, make it a private method, and remove the diagrams, then I would say my code is not polling, even if it calls yours. If I instrument the code and discover that it really is polling underneath the abstraction, I could rewrite your lvlib, maintaining the same API, and get rid of your pesky polling. Since the system can go from polling to not polling without any change in my highest-level code, it's not useful to say that my code is polling.

Furthermore if you truly believe the OS and/or LabVIEW queue functions are polling, then you could pay NI 10 billion dollars or perhaps less, to furnish a new operating system with a different architecture and get rid of the polling and your queue-based code would not have to change (unless you were polling the queue status which I don't recommend). Now I don't believe this investment is necessary since I've already laid out arguments that queues are not polled, and no compelling evidence to the contrary has been presented. Didn't you already back me up on this by making some code to wait on dozens of queues and observing that the CPU usage does not increase?

I think the bottom line is that this is a question of perspective. Yes, in the end (or is it the beginning?) ALL computer operations are based on polling, and can't not be.

I don't agree. Like most CPUs, 80x86 chips have interrupt handlers, which relieves the CPU of any need to poll the I/O input. If the interrupt line does not change state, none of the instructions cascading from that I/O change are ever executed. The ability to execute those instructions based on a hardware signal is built into the CPU instruction set. I guess you could call that "hardware polling", but it doesn't consume any of the CPU time, which is what we are trying to conserve when we try not to use software polling.

If you put a computer in your server rack, and don't plug in a keyboard, do you really think the CPU is wasting cycles polling the non-existent keyboard? Is the Windows Message handler really sending empty WM_KEYDOWN events to whichever app has focus? Well the answer, which Daklu mentioned in a previous post, is a little surprising. In the old days, there was a keyboard interrupt, but the newer USB system doesn't do interrupts and is polled, so at some point in time, at a level way beneath my primary abstractions, the vendors decided to change the architecture of keyboard event processing from event-driven to polled. While it's now polled at the driver level, an even newer peripheral handling system could easily come along which restores a true interrupt-driven design. And this polling is surely filtered at a low level, so while the device driver is in fact polling, the OS is probably not sending any spurious WM_KEYDOWN events to LabVIEW.

So I suppose you could say that all my software changed from event-driven to polled at that moment I bought a USB keyboard, but I don't think that's a useful way to think about it. Maybe my next keyboard will go back to interrupt-handled. (see a related discussion at: http://stackoverflow...d-input-generic)

-

I have an application where I have to call a third party dll to do some calculations. Sometimes these calculation can take a long time and I would like to be able to provide the user with a stop button so they can abort the calculation. So far I haven't been able to figure out how I can abort the dll before it is finished. I thought I might be able to use the taskkill command (this is on a windows XP machine). I can use the taskkill command to shut down any exe that is running but either it doesn't work for stopping a dll or maybe I don't have the syntax right. I also tried using the VI server to abort the vi that calls the dll but that didn't work either. Any ideas on how I can abort the dll?

I don't think you can abort the DLL easily, since it's running inside your own app. Killing it would be literally suicidal. You could try to break up the calculations, so that you call the DLL more often (this is easier if you could modify the DLL code). You could write another LabVIEW app to run the DLL, and use TCP or network queues to communicate between the apps. That would make the DLL app killable, but it's a lot of work considering that your stated purpose is a slight improvement of the user experience.
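To make the "separate killable app" idea concrete, here is a minimal Python sketch rather than a LabVIEW one. A throwaway child process stands in for the second app that would host the DLL call, and the function and parameter names are invented for the example. It leaves out the TCP or network-queue plumbing you would need to get results back.

```python
import subprocess
import sys
import threading


def run_killable(child_code, stop_event, check_interval_s=0.05):
    """Run child_code in a separate process and kill it if stop_event is set.

    child_code is a Python one-liner standing in for the long calculation;
    in a real system the child would be the helper app that calls the DLL.
    Returns "finished" if the child exited on its own, "aborted" otherwise.
    """
    proc = subprocess.Popen([sys.executable, "-c", child_code])
    while proc.poll() is None:
        # Wait for the stop button (the event) for a short interval,
        # then look again at whether the child is still running.
        if stop_event.wait(check_interval_s):
            proc.kill()  # safe: only the helper process dies, not this app
            proc.wait()
            return "aborted"
    return "finished"


# Usage: simulate the user pressing Stop 0.2 s into a 30 s calculation.
stop = threading.Event()
threading.Timer(0.2, stop.set).start()
outcome = run_killable("import time; time.sleep(30)", stop)
```

Killing the helper is harmless to the main application precisely because the calculation no longer shares its process.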

-

If your VI stops at some breakpoint, and then you abort the VI, the "pause" button will often be left on. Then when you run that VI again, it will be paused and will stop at the first node to execute. So check whether the pause button in the toolbar is pressed in.

-

When did you take the recert? There is a one year grace period where your status is changed to "suspended" or "inactive" or something like that. You can still take the CLAR during this time and do not have to start at the beginning.

Yeah, I hadn't really been paying attention and was already suspended. NI was very helpful, and I think they even tacked on another month so I could schedule the test, but after failing the exam, it didn't seem worthwhile to keep pushing and asking for favors.

I have to admit that I'm somewhat peeved that I now ostensibly have to go and shell out thousands so that I know the magic NI-scoped terms with which to pass the exam.

...

Admittedly I speak too soon, as I have not taken the test yet. You just happen to be the second person I've talked to with a nearly identical response to my question.

Well at any rate I would recommend sitting for the exam once, and then taking the course only if you fail. You don't have much to lose (except $200 and an hour of your time) by trying.

-

Sure thing - I was planning on saying something about it afterward. I'm scheduled for the end of this month, so I'll know after that.

As far as the manuals go, it's funny - when I used to work for an alliance partner I took for granted the extra course manuals we had laying around!

Should I call up NI training and certification to see if I can purchase manuals, or is there another way? I've never gone about trying that.

Hi. Mr. Jim:

I wish I had better news for you. I've been programming LabVIEW since 1994, and I freelanced on many different systems when I was a consultant. For several years now I have been working on a large project with a few co-developers and thousands of VIs. I use projects and libraries, have made a few XControls, and have used OOP classes a bunch of times. I took the CLA-R last August to renew my CLA certification, but I didn't take any courses.

I took the practice exam from NI's website and was disappointed to get a score slightly under the passing grade. There wasn't a lot of time before my deadline for the exam, but I figured I would just be more careful. I reviewed all the questions I got wrong, got a good night's sleep and ate a good breakfast. During the test, I felt I had plenty of time to review the questions which I was not certain about, and overall I would say they were similar to the sample exam.

After all that I got almost the same grade as I got on the practice exam, failing by one or two questions. Since I'm not actively seeking consulting work, I figured I don't need the CLA, though when I want it again, I will have to start again from the bottom.

I have to give NI credit for being able to design a test that is very difficult to pass unless you have taken their training courses. Aside from generating revenue, it fulfills its purpose of showing the people interested that the certified person has been trained and is not just a good test taker and LabVIEW hacker like me.

Well good luck with the exam, and let us know how it goes.

Jason

-

Where in the manual did you see the stuff about variable size elements?

I'm just talking about your link to the manual.

You can tell whether your data is flat by trying to wire it into a Type Cast function. It will only accept flat data types (though it doesn't seem to accept arrays with more than one dimension, even if they are flat).

If an array of strings were stored contiguously, and you changed the size of any of the strings, LV would have to copy the entire array to a new memory location, so it wouldn't really make sense to store them that way.

-

Everything you wrote seems correct. Did you have a question or an observation?

Like the manual says, arrays of flat data are stored contiguously. Arrays of variably-sized data are not stored contiguously, though the array of handles to those elements is stored contiguously.

If you ask LabVIEW to flatten the data, say to write it to a file in one pass, it will have to allocate a string large enough to copy the array into. There are other, more clever, ways to write a large dataset to a file.
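To picture why flattening needs one big allocation, here is a small Python sketch. The length-prefixed layout (a 4-byte big-endian count, then each string as a 4-byte length plus its bytes) is an assumption chosen for illustration, not a statement of LabVIEW's exact flattened format, and the function name is made up.

```python
import struct


def flatten_strings(strings):
    """Copy a list of separately stored strings into one contiguous buffer."""
    encoded = [s.encode("utf-8") for s in strings]

    # The total size must be known up front so a single buffer can be allocated:
    # 4 bytes for the element count, plus 4 bytes of length per string, plus data.
    total = 4 + sum(4 + len(b) for b in encoded)
    buf = bytearray(total)

    struct.pack_into(">i", buf, 0, len(encoded))
    offset = 4
    for b in encoded:
        struct.pack_into(">i", buf, offset, len(b))
        offset += 4
        buf[offset:offset + len(b)] = b  # every element is copied once
        offset += len(b)
    return bytes(buf)
```

For a large dataset that extra copy is the cost you avoid by writing the elements to the file piece by piece.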

Welcome to LAVA!

-

In your opinion, what is polling? What characteristics are required to call something polling? Based on your comments I'm guessing that it needs to be based on a real-world time interval instead of an arbitrary event that may or may not happen regularly?

I would say polling is testing something in software repeatedly to detect state change rather than executing code (possibly including the same test) as a result of a state change (or the change of something else strongly coupled to it). Time is not relevant.

I would also say that if polling is going on beneath some layer of abstraction (like an OS kernel), then it's not fair to say that everything built on top of that (like LabVIEW queues) is also polled.

Lastly, I would say that there could exist an implementation of LabVIEW such that one could create a queue and then the LV execution engine could wait on it without ever having to blindly test whether the queue is empty in order to decide whether to dequeue an element. That test would only need to be run once whenever the dequeue element node was called, and then once more whenever something was inserted into the queue, assuming the node was forced to wait.
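A sketch of such a queue in Python, purely to show the idea is implementable; it says nothing about how LabVIEW actually does it. The class name and the `checks` counter are invented for the example, and Python's `threading.Condition` plays the role of whatever the execution engine would use to park the waiting node.

```python
import threading
from collections import deque


class CountingQueue:
    """Blocking queue that counts how often it tests for emptiness."""

    def __init__(self):
        self._items = deque()
        self._cond = threading.Condition()
        self.checks = 0                      # number of "is it empty?" tests
        self.waiting = threading.Event()     # set once a reader has to wait

    def put(self, item):
        with self._cond:
            self._items.append(item)
            self._cond.notify()              # wake a waiting reader, if any

    def get(self):
        with self._cond:
            while True:
                self.checks += 1
                if self._items:
                    return self._items.popleft()
                self.waiting.set()
                self._cond.wait()            # sleeps until put() notifies


def demo():
    q = CountingQueue()
    result = []
    reader = threading.Thread(target=lambda: result.append(q.get()))
    reader.start()
    q.waiting.wait()     # make sure the reader found the queue empty first
    q.put("data")
    reader.join()
    return result[0], q.checks
```

In this run the emptiness test executes twice: once when the reader arrives and once after the enqueue wakes it, no matter how long the wait in between lasts.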

Given that an ignoramus like me could sketch out a possible architecture, and given that AQ has previously posted that a waiting queue consumes absolutely no CPU time, I believe that such an implementation does exist in the copy of LabVIEW I own.

Pretty much all of those statements have been denied by various posters in this thread and others, though I'm sure that's because it has taken me a long time to express those ideas succinctly. I'm sort of hoping it worked this time, but extrapolating from the past doesn't give me too much confidence.

Thanks for indulging me!

Jason

-

How about whenever you move more than two nodes or wire segments without adding or deleting anything, a little animated broom comes up and says "It looks like you're trying to clean up your diagram..." Or maybe it could be a paper clip; I know one who's out of work right now.

-

I think you are right - it is all about definitions. My definition is that if Windows is checking something every time an ISR is fired it is still polling regardless of the fact that the check was fired by a hardware interrupt. I think what you are saying is that since the code that does the check is automatically fired directly or indirectly by the ISR it is not polling. I can kind of understand that definition.

Sure. And it depends on what you are checking. If you are looking for new keypresses every time the timer ISR is fired, that's certainly polling. But if you only check for keypresses when the keyboard ISR fires, that's not polling. I don't think Windows polls the keyboard.

-

Well. I'm coming in a bit late but I'm a bit surprised that there's no discussion of tagged-token/static dataflow or even actors and graphs. Yet this whole thread is about dataflow ?

Apologies for not being sufficiently buzzword-compliant. And it's about time you got here!

-

I don't think you are understanding what I am trying to say. I'm talking about the entire software stack... user application, Labview runtime, operating system, etc., whereas you are (I think) just talking about the user app and LV runtime. Unless the procedure is directly invoked by the hardware interrupt, there must be some form of polling going on in software. Take the timer object in the multimedia timer link you provided. How do those work?

"When the clock interrupt fires, Windows performs two main actions; it updates the timer tick count if a full tick has elapsed, and checks to see if a scheduled timer object has expired." (Emphasis mine.)

There's either a list of timer objects the OS iterates through to see if any have expired, or each timer object registers itself on a list of expired timer objects that the OS then checks. Either way that checking is a kind of polling. Something--a flag, a list, a register--needs to be checked to see if it's time to trigger the event handling routine. The operating system encapsulates the polling so developers don't have to worry about it, but it's still there.

Well I do understand, but I don't agree. We'll probably never converge, but I'll give it one more go.

Repeating, "When the clock interrupt fires, ... it checks to see if a scheduled timer object has expired". This seems to you like polling, but I don't think it is. If the interrupt never fires, the timer objects will never be checked. Now since the clock is repetitive, it seems like polling, but if you cut the clock's motherboard trace, that check will never occur again, since it's not polled. If app-level code has registered some callback functions with the OS's timer objects, then those callbacks will never be called, since there is no polling, only a cascade of callbacks from an ISR (I suppose you could call that interrupt circuitry 'hardware polling', but it doesn't load the CPU at all).
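A toy model of that cascade, in Python and with invented names, just to make the argument concrete. The `on_tick` method stands in for what runs when the clock interrupt fires; nothing else in the model ever looks at the timer list.

```python
class TimerService:
    """Toy model: timer objects are examined only when a tick arrives."""

    def __init__(self):
        self.now_ticks = 0
        self.checks = 0       # how many times the timer list was examined
        self._timers = []     # (expiry_tick, callback) pairs

    def register(self, delay_ticks, callback):
        self._timers.append((self.now_ticks + delay_ticks, callback))

    def on_tick(self):
        """Stand-in for the handler invoked by the clock interrupt."""
        self.now_ticks += 1
        self.checks += 1
        # Take the current list so callbacks may register new timers safely.
        pending, self._timers = self._timers, []
        for expiry, callback in pending:
            if expiry <= self.now_ticks:
                callback()    # cascade: interrupt -> check -> callback
            else:
                self._timers.append((expiry, callback))
```

If `on_tick` is never called (the cut motherboard trace), `checks` stays at zero and no callback ever runs, which is the distinction I am drawing between this and a software loop that keeps testing on its own.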

According to that discussion LV8.6 apparently uses a strategy similar to what I described above in wild speculation #2. The dequeue prim defines the start of a new clump. That clump stays in the waiting area until all its inputs are satisfied. Assuming all the clump's other inputs have been satisfied, when the enqueue function executes the queued data is mapped to the dequeue clump's input, which is then moved to the run queue. What happens if the clump's other inputs have not been satisfied? That clump stays in the waiting room. How does LV know if the clump's inputs have been satisfied? It has to maintain a list or something that keeps track of which inputs are satisfied and which inputs are not, and then check that list (poll) to see if it's okay to move it to the run queue.

Again, polling is one way to do it, but is not required. Not all testing is polling! You might only test the list of a clump's inputs when a new input comes in (which is the only sensible way to do it). So if a clump has 10 inputs, you would only have to test it 10 times, whether it took a millisecond or a day to accumulate the input data.

I guess that's back to definitions, but if you're not running any tests, not consuming any CPU while waiting for an event (each of our 10 inputs = 1 event in this example), then you're not polling the way I would define polling. You don't have to run the test after every clump, because LV should be able to associate each clump output with all the other clump inputs to which it is connected. You only have to run the test when one of the clumps feeding your clump terminates.
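Here is the bookkeeping I have in mind, as a Python sketch with invented class names. It is my guess at one workable design, not a description of LabVIEW's real scheduler. Each clump keeps a count of missing inputs, and the readiness test runs only at the moment an upstream clump delivers one.

```python
from collections import deque


class Clump:
    """A unit of work that becomes runnable once all its inputs have arrived."""

    def __init__(self, name, input_count):
        self.name = name
        self.missing = input_count   # inputs not yet satisfied
        self.tests = 0               # how many times readiness was tested


class Scheduler:
    def __init__(self):
        self.run_queue = deque()     # clumps that are ready to execute

    def deliver_input(self, clump):
        """Called when an upstream clump finishes and feeds this clump."""
        clump.missing -= 1
        clump.tests += 1             # the only place readiness is ever tested
        if clump.missing == 0:
            self.run_queue.append(clump)
```

With 10 inputs the test runs exactly 10 times, whether the inputs arrive within a millisecond or over a day, and nothing executes on behalf of the clump in between.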

It makes sense that LV wouldn't poll the queues in the example because it wouldn't really help with anything. That's the watched pot that never boils. As long as you design the execution system to be able to work on a list of other clumps which are ready to run, and you can flag a clump as ready based on the results of the previous clumps, then you don't need to poll. It's sort of like... dataflow!

If the LV execution thread has exhausted all the clumps, I suppose it could poll the empty list of clumps, but by the same logic it doesn't need to. The minimum process load you see LV using at idle may be entirely dedicated to performing Windows System Timer event callbacks, all driven from the hardware ISR (which seems like polling but I already tried to show that it might not be polled).

If Microsoft or Apple or GNU/Linux engineers chose to simulate event behavior with a polled loop within the OS, then yes it could be, but it doesn't have to be polled. And as Steve pointed out, if there are no functions to run at all the processor will do something, but I don't think you have to call that polling, since the behavior will go away when there is real code to run.

Having said all that, whether or not the dequeue function polls (and what definition we should use for "poll") isn't bearing any fruit and seems to have become a sideshow to more productive topics. (You are of course free to respond to any of the above. Please don't be offended if I let the topic drop.)

Understood. I'm enjoying the spirited debate, but it doesn't need to go on forever. I'm glad we're having it because the mysteries about queues and sleeping threads and polling are common. If the topic were silly or had easy answers, presumably someone else would have come forward by now.

-

Ooof. Sorry you didn't pass. If it makes you feel better, I had my CLA (I was one of the original ones, I think), and then I couldn't manage to pass the recertification exam, after stubbornly refusing to take any of the training courses. Common sense will only get you so far, and apparently it's not far enough to be a CLA!

-

There are a couple places where I think you're slightly off mark. First, there is a single clump queue for each execution system, not for each thread. Clumps are added to the queue (by LabVIEW's scheduler?) when all inputs are satisfied. The execution system assigns the clump at the front of the queue to the next thread that becomes available. Second, when the dequeue prim is waiting for data, the thread is not put to sleep. If that happened then two (or four) dequeues waiting for data would block the entire execution system and nothing else would execute. ...So while the dequeue does "sleep," it also is woken up periodically to check (poll) the queue....

Well I was hoping that AQ would have backed me up by now, but then I realized that he backed me up on this last time I tried to tell people that queues don't poll. So if you won't take my word for it, you can post a rebuttal on that other thread.

Another related post in the same thread has some interesting info on thread management and parallelism.

I'd be really surprised if an OS passes access to the system timer interrupt through to Labview. First, no operating system in the world is going to allow user level code to be injected into the system timer's interrupt service routine. (Queue Yair linking to one... ) ISRs need to be as short and fast as possible so the cpu can get back to doing what it's supposed to be doing. Second, since the OS uses the system timer for things like scheduling threads it would be a huge security hole if application code were allowed in the ISR.

The OS kernel abstracts away the interrupt and provides timing services that may (or may not) be based on the interrupt. Suppose my system timer interrupts every 10 ms and I want to wait for 100 ms. Somewhere, either in the kernel code or in the application code, the number of interrupts that have occurred since the waiting started needs to be counted and compared to an exit condition. That's a kind of polling.

Here's more evidence the Get Tick Count and Wait (ms) prims don't map to the system timer interrupt. Execute this vi a couple times. Try changing the wait time. It works about as expected, right? The system timer interrupt on windows typically fires every 10-15 ms. If these prims used the system timer interrupt we wouldn't be able to get resolution less than 10-15 ms. There are ways to get higher resolution times, but they are tied to other sources and don't use hardware interrupts.

Well I didn't mean that the OS allows LabVIEW to own a hardware interrupt and service it directly. But an OS provides things like a way to register callbacks so that they are invoked on system events. The interrupt can invoke a callback (asynchronous procedure call). Do you think LabVIEW polls the keyboard too?

Back in the old days, the DOS-based system timer resolution was only 55ms for the PC and LV made a big deal of using the newly-available multimedia system timer for true 1ms resolution. I think that's still the way it is, and 1ms event-driven timing is available to LabVIEW.

That gives us three different kinds of dataflow. I think all are valid, they just view it from slightly different perspectives.

[Note: I'm defining "source node" as something that generates a value. Examples of source nodes are constants and the random function. Examples that are not source nodes are the dequeue and switch function outputs, although these outputs may map back to a single source node depending on how the source code is constructed.]

Explicit Dataflow - ...

Constant-Source Dataflow - (My interpretation of AQ's interpretation.) Every input terminal maps to a single source node. Maintaining constant-source dataflow allows the compiler to do more optimization, resulting in more efficient code execution. Breaking constant-source dataflow requires run-time checks, which limit the compiler's ability to optimize compiled code.

Execution Dataflow - ...

I'm waffling a bit on the naming of Constant-Source Dataflow. I considered "Temporally Robust Dataflow" as a way to communicate how variations in timing don't affect the outcome, but I didn't really like the wording and it puts a slightly different emphasis on what to focus on. It may turn out that constant source dataflow and temporally robust dataflow mean the same thing... I'll have to think about that for a while.

Based on re-reading this old AQ post, I'm trying to reconcile your concept of constant-source dataflow with the clumping rules and the apparent fact that a clump boundary is caused whenever you use a node that can put the thread to sleep. It sounds like it is much harder for LV to optimize code which crosses a clump boundary. If someone cares about optimization (which in general, they shouldn't), then worrying about where the code is put to sleep might matter more than the data sources.

Overall I'm having trouble seeing the utility of your second category. Yes, your queue examples can be simplified, but it's basically a trivial case, and 99.99% of queue usage is not going to be optimizable like that so NI will probably never add that optimization. I'm not able to visualize other examples where you could have constant-source dataflow in a way that would matter.

For example, you could have a global variable that is only written in one place, so it might seem like the compiler could infer a constant-source dataflow connection to all its readers. But it never could, because you could dynamically load some other VI which writes the global and breaks that constant-source relationship. So I just don't see how to apply this to any real-world programming situations.

-

For those who are suggesting SCC, you are completely missing the point. The drive computer is a bare-bones system that communicates with the robot during a competition. It's not the development machine/environment. We only change the code between matches based on how the robot performs. We use SolidWorks to model and design the robot, too, but we certainly can't put it on the drive computer, either.

Bringing this back off-topic again, I think Mercurial is pretty lightweight and you could run it from a USB drive or by temporarily dropping a few files on the hard drive of the competition computer. It seems like that ability to back out changes or restore a configuration from a previous robomatch would really be a lifesaver in this environment.

-

Agreed on the SCC option. That other kid could have done any number of things (deletions, etc.) and saved the code, and whether or not it was intentional doesn't matter too much; it's still a risk. I use SVN too, though Mercurial seems like a better fit for this situation since all the change history for the current branch should be available even if you are disconnected from the internet. You could do that with SVN too if you run a local server.

I'm sorry for the awful situation, but I don't know that disabling a toolbar button is the real answer.

-

Why is G graphical only (in LabVIEW General)

Not for technical reasons, to be sure. It's a business decision on the part of National Instruments.

You are absolutely right that some sort of textual representation could be implemented. However once diagrams were converted to an equivalent textual representation, it would be fairly easy to create other IDEs to edit those files or at least read and write the common format. It would also be easy enough to create a different execution system to read those text files and run them as a program, without having to reverse-engineer NI's patent-protected technology.

I think this would vastly increase the popularity of LabVIEW, but I'm not sure NI would be able to monetize that growth. These patents are the property of NI and it is their right to do as they please and their obligation to try to benefit their shareholders (including me holding a piddly amount of them). I'm quite sure they have had this debate internally.

So you can add this to the NI Wish List, and collect all the kudos you want, but don't hold your breath.