jdunham

-

Posts

625 -

Days Won

6

Posts posted by jdunham

-

-

No. Each array has a dimSize of four bytes at the beginning of the array. You can't have a pointer to multiple arrays: it's possible to vary the decimated array sizes independently of one another, without another buffer allocation, by using the subset primitive.

Edit: From the "How LabVIEW Stores Data in Memory" doc in the help.

LabVIEW stores arrays as handles, or pointers to pointers, that contain the size of each dimension of the array in 32-bit integers followed by the data. If the handle is 0, the array is empty.

I don't think that's entirely true. If you look at the wires on those arrays where you expect allocations, you'll see that those are not exactly Arrays, they are "sub-Arrays". See this AQ post for more details: -> post #13.
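The layout quoted from the help document above (dimension sizes as 32-bit integers, followed by the data) can be sketched in a few lines. This is only an illustration in Python, not LabVIEW code: the function names are made up, the number of dimensions is assumed to be known from the wire's type rather than stored in the block, and any platform-specific alignment padding between the sizes and the data is ignored.

```python
import struct

def pack_array(dims, values):
    """Pack an N-D array of doubles as: one int32 per dimension, then the data."""
    count = 1
    for d in dims:
        count *= d
    if count != len(values):
        raise ValueError("dims do not match the number of elements")
    # "=" means native byte order, standard sizes, no padding (an assumption;
    # real in-memory data may be padded for alignment on some platforms).
    return struct.pack(f"={len(dims)}i{count}d", *dims, *values)

def unpack_array(buf, n_dims):
    """Read the dimension sizes first, then exactly that many doubles."""
    dims = struct.unpack_from(f"={n_dims}i", buf, 0)
    count = 1
    for d in dims:
        count *= d
    values = struct.unpack_from(f"={count}d", buf, 4 * n_dims)
    return list(dims), list(values)

block = pack_array([2, 3], [1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
print(len(block))              # 2 * 4 bytes of sizes + 6 * 8 bytes of data
print(unpack_array(block, 2))
```

Each array carries its own size header, which is why one pointer cannot describe several independent arrays.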

-

QUOTE (TG @ Jun 9 2009, 10:52 AM)

Yeah, it seems to work now, except that it seems to randomly return error 2 (with a meaningless GPIB explanation) and "LabVIEW memory full." Could I assume this is because the exe file has a possible memory leak?

I am reluctant to ask the guy who made this executable because I sincerely doubt he would make one with a memory leak, and I'm afraid to ask without proof.

I wonder if anyone else has encountered anything like that using this exe launcher in LabVIEW?

Is '2' the error code from the invocation (System Exec VI), or the return code from the executable? LabVIEW error messages can't be applied to the latter.
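The same distinction exists in most languages, so here is a rough Python analogy (not LabVIEW): a failure to launch the program is reported by the caller's own error mechanism, while the number the program returns is a separate value whose meaning only the program's author can define. The helper name `run_tool` is made up for this sketch.

```python
import subprocess
import sys

def run_tool(argv):
    """Return (launch_error, exit_code); exactly one of the two is not None."""
    try:
        completed = subprocess.run(argv, capture_output=True)
    except OSError as exc:
        # The launcher itself failed (for example, the program was not found).
        return exc, None
    # The program ran; its exit code belongs to the program, not the launcher.
    return None, completed.returncode

# A child process that deliberately exits with code 2.
launch_error, exit_code = run_tool([sys.executable, "-c", "import sys; sys.exit(2)"])
print(launch_error, exit_code)
```

Looking up that exit code in the launcher's own error table would give an unrelated explanation.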

-

QUOTE (horatius @ Jun 5 2009, 03:13 AM)

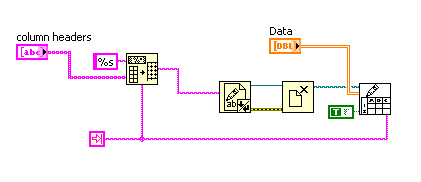

I fill an array (left side in picture) that I want to send to DAQmxWrite (right side in picture). In practice my array has only 2 different values and an INT8 type would be sufficient. However, DAQmxWrite only accepts double arrays. Therefore I have to waste memory. Is there a solution?

Why don't you just use a smaller array and use regeneration mode? If your input array is really complicated, then you can write some code to check the output status, and write to the output buffer in stages, keeping well ahead of the DAQ write mark. Granted, this is not as simple as the code you posted, but if you are running into memory problems, that's an easier fix than waiting while NI ignores your request to change the API.
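The "write in stages" idea can be sketched roughly as follows. This is Python rather than LabVIEW, and `write_to_device` is a hypothetical stand-in for the real DAQ write call; checking the output status and regeneration mode are not shown. The point is only that the compact one-byte-per-sample array stays in memory, and doubles exist for one chunk at a time.

```python
from array import array

def dbl_chunks(levels, low, high, chunk_size):
    """Yield float64 chunks converted on demand from a compact 0/1 int8 array."""
    for start in range(0, len(levels), chunk_size):
        part = levels[start:start + chunk_size]
        yield array("d", (high if bit else low for bit in part))

levels = array("b", [0, 1, 1, 0, 1])   # one byte per sample

written = []

def write_to_device(chunk):
    """Hypothetical placeholder for the actual output-buffer write."""
    written.extend(chunk)

for chunk in dbl_chunks(levels, low=0.0, high=5.0, chunk_size=2):
    write_to_device(chunk)

print(written)
```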

-

QUOTE (crelf @ Jun 4 2009, 01:19 PM)

I'm not sure if the size of the system has anything to do with the selection between the models, more the size and structure of the team. There are puh-lenty of arguments for both methods - we (VIE) chose to implement the lock-unlock method as it allows us an extra layer of checking.

Oh yeah, and we may even migrate to locking someday. The point was "don't let anyone tell you that the merge model can't be used with LabVIEW", even if they work for a very well-respected LabVIEW integrator.

Hopefully the OP has figured out that the choice of locking or merging is the major one to make when rolling out a SCC system. But as long as they don't use VSS, they will probably be OK.

-

Just use Index Array and only wire the second index input.
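For anyone more used to text languages, wiring only the second (column) index of a 2D array is roughly equivalent to this Python sketch, which takes the same column position out of every row. The function name is made up.

```python
def column(rows, col):
    """Return one column of a 2D array (a list of equal-length rows) as a 1D list."""
    return [row[col] for row in rows]

data = [[1, 2, 3],
        [4, 5, 6]]
print(column(data, 1))
```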

-

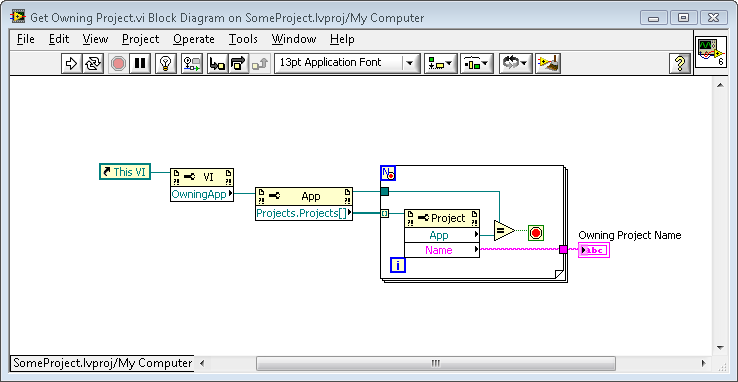

A running VI has a unique namespace: the project name and the context. In the VI's title bar (in edit mode) and at the bottom left, the namespace is shown, for example "My VI.vi Front Panel on MyProject.lvproj/My Computer". I can't seem to find the VI Server property to dig this information out at runtime. I can get the context (with a private VI Server method), but not the project.

Ok, I found some code that works, but I feel like I'm missing something more obvious. Is there an easier way?

-

QUOTE (mesmith @ Jun 4 2009, 10:56 AM)

While I agree that LabVIEW can work well with the exclusive lock model for the reasons given, it is incorrect to say that LabVIEW does not work with the "copy-modify-merge" model. LabVIEW does provide a merge tool:

http://zone.ni.com/reference/en-XX/help/37...to/merging_vis/

http://zone.ni.com/reference/en-XX/help/37...rge_thirdparty/

Mark

Agreed. We have been using SVN without locking on a large LabVIEW system for 5 years. The main thing is to think twice before committing a lot of recompiled VIs (like when you change a typedef). That makes it more likely to have a collision with another coworker's changes.

-

You can also enable VI Server in your built application, just by adding the same lines to the built app's INI file as the lines in your LabVIEW.INI file (they can be removed later for security). With VI Server enabled, you can use methods like GetControlValue and SetControlValue, or hit the Value (Signaling) property of the booleans. You can do it from a remote machine with LabVIEW installed, since you probably don't want to put LV on the target machine. The main problem with this is that you will probably have to spend time helping your test engineers write these little control programs, but it's certainly a reasonable alternative.
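Copying the lines by hand is easy enough, but here is a rough Python sketch of the same step. It rests on some assumptions: that the VI Server settings in LabVIEW.INI are the keys starting with `server.` under a `[LabVIEW]` section, and that the built app's INI uses a section named after the application (`MyApp` here is hypothetical). The key names in the sample text are only illustrative; check your own LabVIEW.INI for the real ones.

```python
import configparser

def copy_server_keys(dev_ini_text, app_ini_text, app_section):
    """Copy every 'server.*' key from the [LabVIEW] section into the app's section."""
    dev = configparser.ConfigParser(interpolation=None)
    dev.optionxform = str            # keep key case exactly as written
    dev.read_string(dev_ini_text)

    app = configparser.ConfigParser(interpolation=None)
    app.optionxform = str
    app.read_string(app_ini_text)
    if not app.has_section(app_section):
        app.add_section(app_section)

    for key, value in dev["LabVIEW"].items():
        if key.startswith("server."):    # assumed prefix of VI Server settings
            app[app_section][key] = value
    return app

# Illustrative INI contents only; the real files will have many more keys.
dev_text = "[LabVIEW]\nserver.tcp.enabled=True\nserver.tcp.port=3363\nmenuSetup=default\n"
app_text = "[MyApp]\nsomeSetting=1\n"

merged = copy_server_keys(dev_text, app_text, "MyApp")
print(dict(merged["MyApp"]))
```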

-

QUOTE (Warren @ Jun 1 2009, 01:30 PM)

Thanks for the suggestions everyone. That example "C:\Program Files\National Instruments\LabVIEW 8.6\examples\portaccess\parallel port examples.llb\Parallel Port Read and Write Loop.vi" is in version 7, and I've actually had it open a few times, but I can't get anything to happen. The LEDs are lit up but that's how they are when the computer boots. I've been taking a look at "LabVIEW for Everyone" and it says that if the baud rate and other settings aren't the same as the specs for the port then nothing will happen, but for that specific VI I don't see an option to change them. In the end I hope to use the serial port to communicate with a PIC (but I guess I need to overcome this obstacle first)... If anyone has any experience with that and could shed some light on this problem that would be great.

This one's resolved. I found an example a few days ago that pertains to Serial I/O. I'm not sure who the author is, but if he/she ever reads this, thanks so much. I've attached an example of the VI. For anyone who is attempting the same thing, here are some tips (a summary of what I learned from the above):

Remember to tie 11,12 to ground (19/20...) and be sure that each output is reduced in some way before reaching the LEDs (I used 470 ohm), and remember LED - light emitting DIODE (so don't accidentally reverse bias them and wonder why they're not lighting up!). And the last point, remember to set up the port properly in the system BIOS.

Well, a serial port is totally different than a parallel port (well, they both use copper and electrons...). Parallel ports don't have a baud rate. It's a bit weird that VISA controls the parallel port and pretends that it's a serial port, but don't let that throw you. If you try to set LPT1's baud rate, stop bits, parity, etc. in VISA, it will probably just ignore you.

Most of the stuff in LabVIEW for Everyone should be about serial ports because they are much more common for communicating with real stuff you might get paid to work with. Using a parallel port as digital I/O is mostly for fiddling around when you're too cheap to buy a $100 I/O board from NI. Nothing wrong with being cheap, but most companies are going to want the real thing.

I'm glad you got it running!

-

QUOTE (Warren @ Jun 1 2009, 08:04 AM)

To answer the first reply: I have been sending single ASCII characters out, but on other attempts I have tried some hex and just binary (four 1's and 0's randomly... as a shot in the dark). I'm choosing the VISA port corresponding to 0x278... for me that should be LPT1 (sometimes all I can input is 278). Also, I have posted the VISA VI that I built quickly myself, but mostly I have been trying to get the built-in examples to work... "Parallel port read / write loop" and "basic serial read/write"

"278" is NOT going to work as an input to the VISA port. "LPT1" should work. "ASRL10::INSTR" should also work. If you right click on the VISA port, there are some options as to whether it accepts undefined names. These keep changing with different VISA/LabVIEW versions.

The Parallel Port example ShaunR mentioned is at "C:\Program Files\National Instruments\LabVIEW 8.6\examples\portaccess\parallel port examples.llb\Parallel Port Read and Write Loop.vi" on my computer. I don't know if it's on version 8.0, but I think you're wasting time if you don't get your hands on a current version and try this out. The vi.lib VIs which it uses are at "C:\Program Files\National Instruments\LabVIEW 8.6\vi.lib\Platform\portaccess.llb"

Good luck

Jason

-

QUOTE (Warren @ May 29 2009, 01:41 PM)

According to this link (http://www.interfacebus.com/Design_Connector_Parallel_PC_Port.html), the grounds are on pins 19 and 20. I would expect that most ports just tie all the grounds together.

Are you sending ASCII data out? How are you choosing the VISA port to use? Do you have the correct Base Address? Maybe you can post your VI and we can look at it.

-

QUOTE (Aristos Queue @ May 28 2009, 03:59 PM)

Yes. But here it is in a slightly snarky way (http://lmgtfy.com/?q=LAVA+error.llb+Aristos)... I hope you are as amused as I was...

Snarky is fine. :laugh: I searched for "embedded object" which ryank had mentioned, but that didn't help. I guess you posted this a few months before I started paying more attention to this site. I'll check it out.

-

QUOTE (Graeme @ May 28 2009, 03:12 PM)

I have a Producer/Consumer architecture, somewhat new to me I should say, that I'm developing. In the Consumer loop there is code in the True case of a Case Structure which has a True constant wired to its selector terminal, so that the code should always run, and it appears to do so. However, remove the Case Structure in toto, so the code that was in the Case Structure should still always run, but certain aspects of the code now fail - so, code in the case that must execute, runs, code out in the open, which surely still must execute, doesn't run properly.

What happens if you replace the case structure with a single-frame sequence structure? I didn't run your VI, but for sure if you remove the case structure, then anything not dataflow-dependent on the queue element will execute long before you drop a message into your producer.

-

QUOTE (ryank @ May 28 2009, 07:52 AM)

Actually, because of the nature of the specific error handling, I only do the string manipulation if there is an error that can't be handled locally and needs to be passed to the central error handler.

Ditto.

QUOTE (ryank)

However, I disagree that the need for run-time performance ends when an error is thrown. I think this represents a common misconception about errors: that once one happens, normal operation goes out the window. There are many "normal" errors that occur during the operation of most large systems. Timeouts are a prime example, but there are also lots of other examples (buffer overflows, underflows, the FTP toolkit's brilliant idea of throwing a warning after every operation, the timed loop error that is thrown every time you programmatically abort a loop).

Per above, you don't have to do the string manipulation if you still care about runtime performance. I was just saying that in most cases you don't. If I get a timeout error, then it's not too likely that I can still count on my control loop performance, but of course I can see other situations where it would be essential. "Timed out waiting for flight control system response, ejecting pilot...". Of course if you use the built-in error routines, it takes quite a bit of diagram space to avoid the string manipulation whether or not there is an error. This is really a problem best solved by NI.

QUOTE (ryank)

As for NI doing something about errors, I'll certainly post my specific error handler code as soon as I feel like it's ready, and Aristos Queue has already posted his embedded object error code. Eventually I suspect more tools will become part of the shipping product, but as this thread shows, it's far from a simple problem, especially since any new system absolutely has to support the overwhelmingly large number of VIs that rely on the error cluster.

Well, I know it's a hard problem, but I remember presentations about the deficiency of the error cluster in NI Weeks from the 1990s. I've always been kind of disappointed there hasn't been more action before now, though better late than never, I suppose. Suggestions to the Product Suggestion Center seem to go into a black hole from the submitter's perspective. It's actually hard to know what I've already submitted, but I think I have put error suggestions in there in the past. For example, I think the Clear Errors VI is poorly thought out, since it wipes out errors you expect along with errors you might not have expected. It should have the ability to clear out specific error codes, and to compare the errors before and after a specific block of code. Without that, it's a very dangerous routine, and I believe it should be deprecated.

Over the past few years I've spent a lot of time working on an error handler, and I know from other LAVA threads that most other advanced users have as well. It's just frustrating that so many man-hours have been put into fixing something that NI could have improved (with user feedback willingly volunteered) long ago. Thanks a lot to Chris for getting the ball rolling for NI Week. I wish I could be attending this year just for that.

Do you have a link to AQ's error handler? I thought I remembered seeing it, but Google was no help just now, nor did a search on ni.com yield anything useful.
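To make the Clear Errors complaint above concrete, here is a rough sketch of the behavior I'd want, written in Python since a diagram doesn't paste well. Everything here is hypothetical: the `Error` class just mimics the status/code/source cluster, and `clear_expected` is a made-up name. It clears an error only if the code is on an expected list and the error was raised inside the block, not upstream of it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Error:
    """Stand-in for the LabVIEW error cluster (status, code, source)."""
    status: bool = False
    code: int = 0
    source: str = ""

NO_ERROR = Error()

def clear_expected(error_before, error_after, expected_codes):
    """Clear only expected codes that were raised inside the guarded block."""
    if error_before.status:
        # The error existed before the block ran, so it is not ours to clear.
        return error_before
    if error_after.status and error_after.code in expected_codes:
        return NO_ERROR
    return error_after

timeout = Error(True, 56, "TCP Read")         # 56 used here as a timeout code
surprise = Error(True, 7, "Open File")        # something we did not plan for

print(clear_expected(NO_ERROR, timeout, {56}))    # expected, so cleared
print(clear_expected(NO_ERROR, surprise, {56}))   # unexpected, passed through
print(clear_expected(surprise, surprise, {7}))    # upstream error is preserved
```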

-

QUOTE (ryank @ May 26 2009, 02:10 PM)

I categorize errors by using the <append> tag in the source field, which keeps them fully compatible with all of the normal error handling functions (one drawback is that this requires string manipulation, which is kind of a no-no in time-critical RT code; I haven't yet come up with an alternative I'm comfortable with, though).

In our system, you can pass in a variant of whatever data wires you want and they are formatted into a string. However, by default this code, along with the code to grab the call chain, only runs if there is an error. There is no string handling unless there is an error thrown. Presumably, if there is some kind of exception, the need for real-time performance is over.

All that stuff would be so much easier if it were supported internally by LabVIEW. It would be great for NI to show some leadership on this and modernize the error handling system. This would really boost my productivity. Hopefully at the very least they will attend the NI Week session.

-

QUOTE (n00bzor @ May 27 2009, 12:55 PM)

The standard parallel port contains discrete TTL lines. More information is here. If you don't have a built-in parallel port, you should be able to find a USB one for about US$15.

These are a bit pricier (US$100), but would be easier to work with

http://www.phidgets.com/products.php?category=0

If you want to have a lot of fun, you should ease into something like the Basic Stamp

Let us know what works!

-

QUOTE (crelf @ May 26 2009, 03:01 PM)

One of the questions that has existed since man first used a LabVIEW error cluster is how can I make it scalable so I can have more than one error simultaneously. The next question was where do I put all this data? I've seen many different implementations: some concatenated their error structure to the "source" text field in the standard error cluster, some implemented a completely new system outside of the traditional error cluster (with appropriate converters to and from each system). The question is: which one is best? The former breaks less and requires less retro-fitting of existing code, and can benefit from some of the existing error handling VIs that ship with LabVIEW, but it sometimes breaks when VIs misbehave and wipe the error cluster clean (yes, some primitives have been known to do it under certain circumstances). The latter is tempting because we can start from scratch and design whatever we want, but integrating it into existing components that, by default, use the existing format is challenging. Thoughts?

We use the former approach, putting XML into the error source. Then it is easy to detect whether the right XML is on the wire, and so what class of error we have. The XML contains the panel name (often different than the VI name), any custom error message which is on the block diagram which generated the error, the call chain, and any run-time data which is autoformatted with something similar to the VariantConfig library.
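The XML-in-the-source approach described above can be sketched like this. It's Python, and the element names (`ErrorInfo`, `Panel`, `Message`, `CallChain`, `VI`) are invented for illustration; they are not our actual schema. The useful property is the second function: a plain-text source from an ordinary VI simply fails to parse, so it's easy to tell which kind of error is on the wire.

```python
import xml.etree.ElementTree as ET

def build_source(panel, message, call_chain):
    """Build an XML string to store in the error cluster's source field."""
    root = ET.Element("ErrorInfo")
    ET.SubElement(root, "Panel").text = panel
    ET.SubElement(root, "Message").text = message
    chain = ET.SubElement(root, "CallChain")
    for vi in call_chain:
        ET.SubElement(chain, "VI").text = vi
    return ET.tostring(root, encoding="unicode")

def parse_source(source):
    """Return the parsed element if the source carries our XML, else None."""
    try:
        root = ET.fromstring(source)
    except ET.ParseError:
        return None                      # ordinary text source from a stock VI
    return root if root.tag == "ErrorInfo" else None

source = build_source("Pump Control", "Flow out of range", ["Main.vi", "Pump.vi"])
info = parse_source(source)
print(info.findtext("Panel"), [vi.text for vi in info.iter("VI")])
print(parse_source("Open File in Main.vi"))
```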

-

QUOTE (Michael Malak @ May 26 2009, 03:26 PM)

I posted a similar example in this thread (http://forums.lavag.org/Next-Newbie-Q-Local-variable-for-an-array-t14082.html). It's a little different but maybe you can get some ideas.

-

QUOTE (Vladimir Drzik @ May 21 2009, 05:45 AM)

Hi guys

I'd like to embed LabVIEW editor in the window of my LabVIEW application. The same thing that e.g. Vision Builder does in its Calculator step. The user would be able to write his own VI, using a restricted set of nodes. How can this be done?

Vladimir

I don't think this can work. The run-time engine does not contain the LabVIEW editor.

This is on purpose because NI does not want you to build an application which is substantially the same as LabVIEW and distribute it for free under your app builder license.

-

-

-

QUOTE (Warren @ May 21 2009, 02:05 PM)

http://lavag.org/old_files/monthly_05_2009/post-1764-1242946205.png

-

QUOTE (crelf @ May 20 2009, 03:10 PM)

I don't think that it's hidden - you just need to right click on it and select representation. You can rest assured that if a few more constants were added to the palette then they wouldn't be the ones you want anyway, and you'd still need to select the representation.

I'm not resting assured. My code overwhelmingly uses I32s and DBLs. Often I use Create Constant, but when the type has not yet been determined, then I go from the palette. It would be much better for me to have DBL and I32 available separately on the palette for both FP controls and BD constants. I suspect nothing will change, but you can't convince me the status quo is 'better'.

-

QUOTE (Aristos Queue @ May 20 2009, 09:40 PM)

There's even two ranges reserved for our customers. :-)

With a measly 6000 error codes. We've used a good portion of them, and undoubtedly they would conflict with other users' codes if we were ever to share code. I don't suppose we could have a few more of the 4 billion codes available? Whom do we have to waterboard to make this happen?
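For reference, the arithmetic behind "6000" and "4 billion" works out as below. The two ranges are the user-reserved ones as I recall them from the LabVIEW documentation of that era (-8999 to -8000 and 5000 to 9999); treat them as an assumption and check the help for your version.

```python
# Assumed user-reserved error code ranges (inclusive); verify against your LabVIEW help.
USER_RANGES = [(-8999, -8000), (5000, 9999)]

def is_user_code(code):
    """True if the code falls inside one of the user-reserved ranges."""
    return any(low <= code <= high for low, high in USER_RANGES)

user_total = sum(high - low + 1 for low, high in USER_RANGES)
all_codes = 2 ** 32                    # every value a 32-bit error code can take

print(user_total)                      # 6000
print(all_codes)                       # 4294967296
print(is_user_code(5001), is_user_code(56))
```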

Delete File

in LabVIEW General

Posted

Hi Mikael:

Even though your non-working code is probably trivial, maybe you should post a snapshot of that too. We do the same operation and haven't seen a problem in built exes. You mentioned it only happens on some computers. Is there a difference between XP and Vista/Win7?