jdunham

Posts: 625
Days Won: 6


Posts posted by jdunham

-


QUOTE (scls19fr @ Apr 5 2009, 09:52 AM)

The problem with this is that my program will run every day, 24 hours a day! So the amount of data will grow too much.

So I wonder if there is a way to store my data in a circular buffer.

Well, if that's your only problem, a true circular buffer is not the best answer. Why not just store the data in a flat file (one row per measurement), make a new file every day (please use file names like Weather YYYY-MM-DD so they sort in order), and then delete every file more than a month old?
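For anyone who wants to try the daily-file idea outside LabVIEW, here is a minimal Python sketch. It assumes one comma-separated row per measurement, a hypothetical `data_dir` folder, and a 30-day cutoff standing in for "a month"; the function names are made up for illustration.

```python
import datetime as dt
from pathlib import Path

RETENTION_DAYS = 30  # "a month", approximated as 30 days (assumption)


def daily_file(data_dir: Path, day: dt.date) -> Path:
    """Return the file for one day; ISO dates make the names sort chronologically."""
    return data_dir / f"Weather {day.isoformat()}.csv"


def append_measurement(data_dir: Path, when: dt.datetime, values) -> None:
    """Append one row (timestamp followed by the measured values) to that day's file."""
    path = daily_file(data_dir, when.date())
    row = [when.isoformat(timespec="seconds")] + [f"{v:g}" for v in values]
    with path.open("a", encoding="ascii") as f:
        f.write(",".join(row) + "\n")


def purge_old_files(data_dir: Path, today: dt.date) -> list:
    """Delete daily files older than RETENTION_DAYS and return the names removed."""
    cutoff = today - dt.timedelta(days=RETENTION_DAYS)
    removed = []
    for path in sorted(data_dir.glob("Weather *.csv")):
        try:
            day = dt.date.fromisoformat(path.stem[len("Weather "):])
        except ValueError:
            continue  # skip files that don't follow the naming scheme
        if day < cutoff:
            path.unlink()
            removed.append(path.name)
    return removed
```

Calling `purge_old_files` once a day (for example, right after the first write of a new day) keeps roughly a month of files on disk without any circular-buffer bookkeeping.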

I'm not sure I understood completely, but it sounded like you wanted to store three numbers once a minute. Together with a timestamp, that's about 50 bytes per minute, or 26 MB per year, give or take a few MB. Why purge the old records at all? Are you recording a lot more data that you didn't mention? Even if you recorded every second, you should have no problem finding a big enough hard disk.
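The back-of-the-envelope storage estimate, written out in Python under the same assumptions (50 bytes per record, 365-day years, decimal megabytes and gigabytes):

```python
BYTES_PER_RECORD = 50              # three values plus a timestamp, as estimated above
RECORDS_PER_YEAR = 60 * 24 * 365   # one record per minute, ignoring leap years


def bytes_per_year(bytes_per_record: int, records_per_year: int) -> int:
    """Total storage for one year of fixed-size records."""
    return bytes_per_record * records_per_year


per_minute = bytes_per_year(BYTES_PER_RECORD, RECORDS_PER_YEAR)
per_second = bytes_per_year(BYTES_PER_RECORD, RECORDS_PER_YEAR * 60)
print(f"once a minute: {per_minute / 1e6:.1f} MB/year")  # once a minute: 26.3 MB/year
print(f"once a second: {per_second / 1e9:.2f} GB/year")  # once a second: 1.58 GB/year
```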

-

QUOTE (bsvingen @ Apr 11 2009, 02:08 AM)

You are missing the point (at least half of it). The behavior is not the issue, the quality is. More precisely, you want to get rid of all possibilities for unexpected things to happen, at least as much as you can. It is about failure modes, not validation of operational modes.

Well, my point still stands. What is the difference between LabVIEW having undocumented features and running LabVIEW on an OS with undocumented features? In reality, it's just a bunch of ones and zeros running through a von Neumann machine. Since we are well past the days when any person or team could make a useful and affordable computing device with no outside help, there is probably no way to eliminate the possibility of undocumented features.

The rest of us understand that the presence or absence of undocumented features is not a useful metric of quality in a computing system, because no one has any way to count the undocumented features in the silicon, the OS, the drivers, LabVIEW, etc., any of which could (and maybe do) have an impact on reliability. Since you can't even know how many there are, and since plenty of reliability problems (crashes) stem from documented features, it's surely pointless to worry about undocumented features.

-


QUOTE (Mark Yedinak @ Apr 10 2009, 11:15 AM)

It would be nice if LabVIEW provided a generic sort algorithm into which the developer could plug a custom comparison VI. C++ has this capability, so it has a very efficient sort algorithm, and all you need to provide is a view of the data structure and a comparison function. This way you can efficiently sort an array of any data type. This would be a very nice addition to LabVIEW. Imagine being able to sort arrays of clusters easily, with the additional ability to sort on more than just the first element.

Of course LabVIEW does sort on more than the first element of the cluster, but yes, it would be nice if you could choose the element order rather than having to rebundle your cluster.
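For comparison, here is roughly what the pluggable-comparison approach looks like in Python, whose `sorted()` accepts a key function and whose `functools.cmp_to_key` adapts a three-way comparison function. The `Reading` record is a hypothetical stand-in for a LabVIEW cluster; the point is that the field order is chosen at the call site instead of by rebundling.

```python
from dataclasses import dataclass
from functools import cmp_to_key
from operator import attrgetter


@dataclass(frozen=True)
class Reading:  # hypothetical stand-in for a cluster
    channel: str
    value: float
    timestamp: int


readings = [
    Reading("b", 2.0, 3),
    Reading("a", 5.0, 1),
    Reading("a", 1.0, 2),
]

# Key function: sort by value first, then channel, without reordering the fields.
by_value_then_channel = sorted(readings, key=attrgetter("value", "channel"))


def compare(x: Reading, y: Reading) -> int:
    """Three-way comparison: negative, zero, or positive."""
    if x.channel != y.channel:
        return -1 if x.channel < y.channel else 1
    # Within one channel, put the newest timestamp first.
    return y.timestamp - x.timestamp


by_channel_newest_first = sorted(readings, key=cmp_to_key(compare))
```

In C++ the equivalent is passing a comparison predicate to `std::sort`.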

-

Until a few days ago, the main portal page (http://forums.lavag.org/home.html) would resize properly to fit my current browser window. Now I have to scroll to the right to see the list of latest posts. Did the site get upgraded or otherwise broken? I tried two different browsers (Firefox & Chrome) and it behaves the same.

-

QUOTE (Ic3Knight @ Apr 10 2009, 07:26 AM)

At the moment, I read back byte by byte until I've seen both the start and stop 0xC0 bytes... but my code would be neater and possibly more efficient if I didn't have to...

It seems like you could set 0xC0 as the termination character; then every other message would be empty, and you would just ignore those.
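A rough Python sketch of the termination-character idea applied to a buffer of received bytes: split on 0xC0 and throw away the empty pieces. It assumes, as in SLIP (RFC 1055), which uses the same 0xC0 END byte, that any 0xC0 inside a payload is escaped, so the delimiter never appears within a frame. `split_frames` is a hypothetical helper, not a VISA function.

```python
END = 0xC0  # frame delimiter byte (same value as the SLIP END byte)


def split_frames(buffer: bytes):
    """Split a byte stream on 0xC0 and drop the empty pieces.

    Returns (frames, remainder): the complete frames, plus any bytes after
    the last delimiter, which belong to a frame that is still arriving.
    """
    *pieces, remainder = buffer.split(bytes([END]))
    # Back-to-back delimiters (stop byte of one frame, start byte of the
    # next) produce the empty "every other message" pieces; skip them.
    frames = [p for p in pieces if p]
    return frames, remainder
```

Prepending the returned remainder to the next chunk read from the port keeps partial frames intact across reads.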

-

QUOTE (Justin Goeres @ Apr 10 2009, 05:03 AM)

But there's the rub: "code that can potentially lower this reliability." You seem to believe that the mere existence of any undocumented features, or experimental features that are left in the software (or the runtime that it executes in) but not exposed to the user, automatically constitutes a lower-reliability system. I say unit test the code, and design the system so that no individual piece puts too much trust in any other piece.

I totally agree with Justin. What if the compiler used by NI to build LabVIEW itself contained an undocumented feature? What if the operating system contained an undocumented feature, or else the OS used to run the compiler that built LabVIEW? The compiler is just a set of bits which generates another set of bits (LabVIEW.exe) which generates another set of bits (your app) which is controlling the action. Just because their creation is separated in time doesn't mean it's not just one system. Testing, not some proof that the set of features is closed, is the only way to validate behavior.

-

QUOTE (asbo @ Apr 10 2009, 03:58 AM)

![image](http://lavag.org/old_files/monthly_04_2009/post-1764-1239376717.png)

-


QUOTE (postformac @ Apr 9 2009, 10:33 AM)

I tried using the "convert string to decimal number" function and it seems not to recognise the decimal point; I get a value of "1.000" out of my display. I have currently got round this by taking the string one byte at a time and manually looking for the decimal point, then dividing whatever is after the point by 1000 and adding it to whatever was before the point, which works fine.

Aaaagh! On the same palette as "Decimal String to Number", you will find "Fract/Exp String to Number". I'm not sure why these names can't be a bit better, but I guess they go way back.
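To illustrate the difference, here are rough Python emulations of the two conversions. These are hypothetical helpers, not NI's implementation: the real functions also take offset and default inputs, which are ignored here, and 0 is assumed as the default. The last function reproduces the manual workaround from the question and shows why dividing by 1000 only works when there are exactly three digits after the point.

```python
import re


def decimal_string_to_number(s: str) -> int:
    """Rough emulation: read an optional sign and leading digits, stop at anything else."""
    m = re.match(r"\s*([+-]?\d+)", s)
    return int(m.group(1)) if m else 0


def fract_exp_string_to_number(s: str) -> float:
    """Rough emulation: also accept a fractional part and an optional exponent."""
    m = re.match(r"\s*([+-]?(?:\d+\.?\d*|\.\d+)(?:[eE][+-]?\d+)?)", s)
    return float(m.group(1)) if m else 0.0


def manual_workaround(s: str) -> float:
    """The divide-by-1000 trick: only correct with exactly three decimals."""
    whole, _, frac = s.partition(".")
    return int(whole) + int(frac or 0) / 1000
```

With these, `decimal_string_to_number("1.234")` stops at the point and gives 1, `fract_exp_string_to_number("1.234")` gives 1.234, and `manual_workaround("1.5")` gives 1.005 instead of 1.5.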

-

If you made an EXE, it needs the LabVIEW run-time engine to run on another computer. You can bundle it with the installer during the build process, or else leave it out and download it from the NI website when you run each installation.

Even Microsoft languages need a run-time, but it is usually bundled into Windows itself.

-

QUOTE (Mark Yedinak @ Apr 8 2009, 08:45 AM)

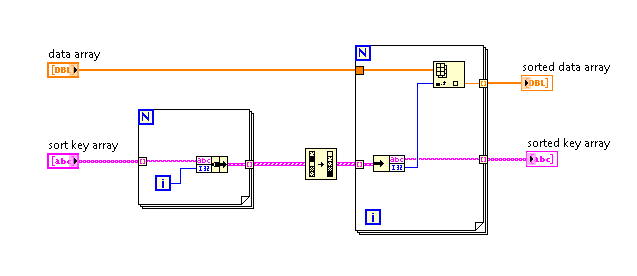

The OpenG functions are very useful, but also know that these work great.

![image](http://lavag.org/old_files/monthly_04_2009/post-1764-1239215198.png)

-


QUOTE (jcz @ Apr 7 2009, 07:41 AM)

If you use a separate queue for each object, then you don't need to poll the queue status.

QUOTE (jcz @ Apr 7 2009, 07:41 AM)

I still haven't worked out how to lock a subprogram from receiving any new telegrams (e.g. during initialisation).

If you use a separate queue for each object, each subprogram (object) will not process subsequent queue messages while any other message is being processed. This includes the initialization message. By using separate queues, each subprogram can initialize itself in parallel.


QUOTE (jcz @ Apr 7 2009, 07:41 AM)

The main idea I had was to create a bunch of main programs that would pretend to be real hardware devices. Then I could simply implement some sort of protocol, define commands, and talk to each subprogram as if it were a real device. For instance, to grab an image from a camera, I could add a command to a queue (in the extreme version, a SCPI-like command) :camera:image:snap, and only the camera interface would run this command, returning ACK.

If you use a separate queue for each object, and a separate enum of commands specific to that object (subprogram), then your subprograms are all totally reusable. In fact, I would wrap each bit of queue-sending code into a VI, and then those VIs are the public methods of your subprogram.

In case I was too subtle, I think it's a mistake to use the same queue for all of your hardware components. Each object should be its own independent queued state machine which can accept commands and do stuff. Otherwise I think you are on the right track.

Jason
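A minimal Python sketch of one independent queued state machine per device, using `queue.Queue` and a worker thread as stand-ins for a LabVIEW queue and loop. `Camera`, `CameraCmd`, and the log strings are hypothetical; another device (a motor, say) would get its own class, queue, and command enum rather than sharing this one.

```python
import enum
import queue
import threading


class CameraCmd(enum.Enum):  # commands specific to this one subprogram
    INIT = enum.auto()
    SNAP = enum.auto()
    STOP = enum.auto()


class Camera:
    """Hypothetical simulated device: one queue, one worker thread, one command enum."""

    def __init__(self):
        self._queue = queue.Queue()
        self.log = []
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    # Public methods: thin wrappers that only enqueue a command.
    def init(self):
        self._queue.put((CameraCmd.INIT, None))

    def snap(self, reply: queue.Queue):
        self._queue.put((CameraCmd.SNAP, reply))

    def stop(self):
        self._queue.put((CameraCmd.STOP, None))
        self._thread.join()

    def _run(self):
        # Commands are handled strictly one at a time, so a SNAP cannot start
        # until INIT has finished; no status polling or locking is needed.
        while True:
            cmd, reply = self._queue.get()
            if cmd is CameraCmd.STOP:
                break
            if cmd is CameraCmd.INIT:
                self.log.append("initialized")
            elif cmd is CameraCmd.SNAP:
                self.log.append("snapped")
                reply.put("ACK")
```

Because each public method only enqueues, callers never poll the queue status, and a SNAP sent during initialization simply waits its turn.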

-

You need the Application Builder, which costs about $1000 extra, or the "Professional Development" version, which has the App Builder bundled in. If you search for "labview application build" in the manual, you should find instructions on how to do the actual build.

-

People have been asking to change the wire appearance since before you were born. If you are using a built-in type which is not a cluster, then changing the wire appearance would just obfuscate your code. If you are using clusters and want a custom wire, why not just make it an lvclass? (Well, because it's harder to see your values on subVI panels, but hopefully NI is working on that.)

-

QUOTE (rolfk @ Apr 2 2009, 11:04 PM)

Are you sure you have really enabled "Defer Front Panel Updates"? 3.2 ms for an Add Item Tree element sounds fairly long, although I don't have specific numbers. Or was this 3.2 ms with an already heavily populated tree?

I can confirm Mark's observations. I made a very simple LVOOP tree for another purpose, and for my testing I loaded into it the folder structure of my enormous LabVIEW project with embedded SVN folders. The tree composed itself in about a second, but to test recursing through the tree and to view the result, I wrote code to put each element into a tree control. That part takes about 5 minutes to run. Defer Panel Updates didn't help noticeably.

I really think dynamically populating the tree is the way to go; I assume that most file manager shells do something similar. It would make a good XControl.

-

What about an interface that doesn't try to populate the entire tree in advance? You could trap the item open event, filter it, fix the tree item which is about to open, and then open it programmatically. Most of the time, your users probably won't ever look at most of the thousands of items in your tree, so there's no real need to stuff them into the tree control.
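A minimal Python sketch of lazy population: a node reads its subfolders only when it is expanded, which is where the item-open event handler would call in. `LazyNode` and `expand` are hypothetical names, and only folders are listed to keep it short.

```python
import os
from pathlib import Path


class LazyNode:
    """Tree node whose children are read from disk only when it is expanded."""

    def __init__(self, path: Path):
        self.path = path
        self._children = None  # None means "not loaded yet"

    @property
    def is_loaded(self) -> bool:
        return self._children is not None

    def expand(self):
        """Call from the item-open handler: load this one level, once, then reuse it."""
        if self._children is None:
            with os.scandir(self.path) as entries:
                dirs = sorted((e for e in entries if e.is_dir()), key=lambda e: e.name)
            self._children = [LazyNode(Path(e.path)) for e in dirs]
        return self._children
```

Only the levels a user actually opens are ever read, so the cost of building the tree scales with what is viewed rather than with the size of the whole folder structure.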

-

This sounds like a relatively hard problem given the minimal tools LabVIEW gives you for this. If it were me, I would leverage the OS file dialog box. Unfortunately, that generally lets you browse any folder on the computer. If it's Windows, you could map your folder to an unused drive letter, start the file dialog box at the top of that drive, and then strenuously object if the user picks a file on some other drive.
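The "strenuously object" part can be a simple containment check on whatever path the dialog returns. A Python sketch, assuming `root` is the folder you want to restrict users to (the drive-letter mapping itself is not shown); `is_inside` is a hypothetical helper:

```python
from pathlib import Path


def is_inside(root: Path, chosen: Path) -> bool:
    """True if `chosen` resolves to `root` or somewhere below it.

    Both paths are resolved first, so '..' segments and symlinks
    cannot be used to escape the allowed folder.
    """
    root = root.resolve()
    chosen = chosen.resolve()
    return chosen == root or root in chosen.parents
```

If the check fails, show an error and reopen the dialog at `root`.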

-

QUOTE (Kubo @ Mar 31 2009, 12:20 PM)

Hello everyone, I can't seem to figure out this little problem.

With my program I have made an installer to transfer it to another computer. I put it on one computer (which has an older LabVIEW on it), and everything seems to work great. Then I put it on another computer without LabVIEW on it, and it won't let me select a COM port in my serial connection control. The rest of the program works fine... it just won't display the serial output, which it needs for the program to function correctly. Is this because the Measurement & Automation program needs to be installed? Or maybe something else?

If your serial port is selected with a VISA control, then you need to deploy the VISA Runtime in order for the control and the VISA functions to work. You don't need MAX.

-

Jim's right, you want virtual server hosting, which you can google.

Here's one for $99/mo: http://myhosting.com/Virtual-Server-Hosting/

If you want to run LabVIEW for Linux, you have a lot more options.

-

QUOTE (flarn2006 @ Mar 29 2009, 05:40 PM)

You can read more about software-defined radio (http://en.wikipedia.org/wiki/Software-defined_radio) if you are interested in the possibilities, but it's pretty unlikely that one of these is hiding inside your WiFi card.

-

QUOTE (Mark Yedinak @ Mar 24 2009, 07:34 AM)

From my understanding the answer is yes, LabVIEW compiles the code as you wire it. It is constantly compiling the code. Someone from NI can correct me if I am wrong, but I believe they are smart enough to recompile only the affected pieces of code and not every clump. This is how they can achieve constant, effectively real-time compiling of code.

If you get a slow enough machine running, you can see the recompiles as you work. The Run arrow flashes to a glyph of 1's and 0's while compiling. You can also force a recompile by holding down the Ctrl key while pressing Run, but it's still too fast on my unexceptional laptop to see the glyph (or maybe they got rid of it). You can also press Ctrl-Shift-Run to recompile the entire hierarchy, but I still don't see the glyph, even though my mouse turns into an hourglass for a short while.

-

QUOTE (Antoine Châlons @ Mar 26 2009, 02:05 AM)

I assume you will have to read the week number as an integer and do the calculation yourself...

Rather than computing the week number, you could just start with January 1 of that year and, in a while loop, keep adding 24 hours and checking the week number until it matches.
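The brute-force loop is short enough to sketch in Python. This assumes ISO 8601 week numbers (Python's `isocalendar()`), which may not match the week-numbering convention your LabVIEW time format uses. `first_day_of_week` is a hypothetical name; it returns the first date within the given year whose week number matches, or `None` if that week doesn't exist in that year.

```python
from datetime import datetime, timedelta
from typing import Optional


def first_day_of_week(year: int, week: int) -> Optional[datetime]:
    """Start at January 1 and step forward 24 hours until the week number matches."""
    day = datetime(year, 1, 1)
    while day.year == year:  # bounds the loop to one calendar year
        iso_year, iso_week, _ = day.isocalendar()
        # Compare the ISO year too: early January can belong to the
        # last week of the previous year.
        if (iso_year, iso_week) == (year, week):
            return day
        day += timedelta(hours=24)
    return None  # no such week in this year
```

At most 366 iterations are needed, so there is little reason to derive the closed-form calculation.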

Secret features: ethics of developing them

in VI Scripting


QUOTE (Aristos Queue @ Apr 12 2009, 06:40 PM)

Well I must not have been clear enough. I don't have any problem with unrevealed features. My point was that if someone considered that a serious problem, then any modern combination of silicon, OS, Compiler (which created LabVIEW.exe) and LV Runtime or any other app would be off-limits. I don't think you could ever predict all possible CPU execution paths in anything running on Windows.