Everything posted by drjdpowell

-

Final score: Small terminals: 23.5 votes (that includes the "mostly-no"s, the "no"s and the "HELL, NO"s). Icons: 4.5 votes. -- James

- 74 replies

-

Everything's by-value, not by-reference, so the variant attributes disappear with the variant and containing object.

-

Futures - An alternative to synchronous messaging

drjdpowell replied to Daklu's topic in Object-Oriented Programming

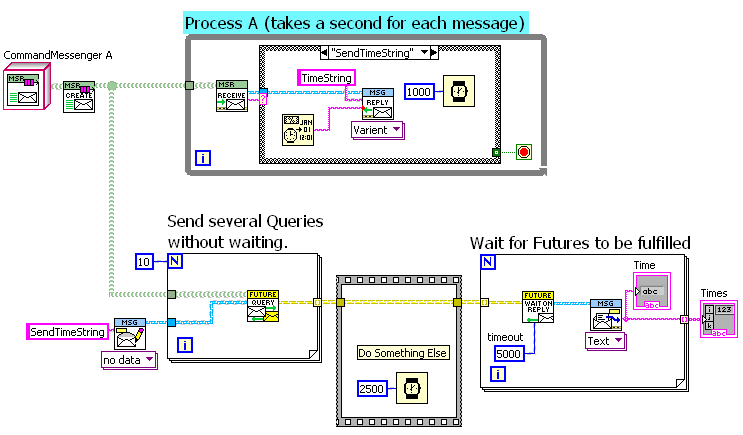

For fun, I whipped up a version of a "Future" in my Messaging Library. It takes my existing "Query" function and splits it between two VIs, connected by a "Future Reply" object. In the example I have a process that takes a second per received message to reply. I start 10 queries, sending the "SendTimeString" message each time, then simulate doing something else for 2.5 sec, then I wait for the futures to be completed. This is similar to the way "Wait on Async Call" works.
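For readers who think better in text, here is a rough Python analogue of that experiment. It is only a sketch under assumptions: the function names are hypothetical, the standard `concurrent.futures` module stands in for the "Future Reply" object, and a single worker thread stands in for the process that answers one message at a time. It is not the Messaging Library's implementation.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def send_time_string(index, reply_delay):
    """Hypothetical message handler: takes reply_delay seconds to answer."""
    time.sleep(reply_delay)
    return f"reply {index} at {time.strftime('%H:%M:%S')}"


def run_queries(count=10, reply_delay=1.0, other_work=2.5):
    # One worker models a single process handling messages in order.
    with ThreadPoolExecutor(max_workers=1) as process:
        # First half of the split "Query": send and get a future back at once.
        futures = [process.submit(send_time_string, i, reply_delay)
                   for i in range(count)]
        # Simulate doing something else while the replies are prepared.
        time.sleep(other_work)
        # Second half: wait for each future to be completed.
        return [future.result() for future in futures]


if __name__ == "__main__":
    for reply in run_queries():
        print(reply)
```

With the default values, the first couple of replies are already waiting when the "other work" finishes, and the rest arrive as the process gets to them.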

Futures - An alternative to synchronous messaging

drjdpowell replied to Daklu's topic in Object-Oriented Programming

Just as an aside, the new "Wait on Asynchronous Call" Node is another example of a type of "future".

The feature I'm imagining will only snap to a possible position when it is within a couple of pixels. This should be small enough that it will act to help the programmer align things exactly as he/she wants. So, if you want the tops of subVIs to line up instead of straight wires then no problem. Want to bend the wires in a readable way, no problem. Basically, you place the item where you want and the program helps with the micro-positioning. You would have to place the terminal within two pixels of aligned to trigger the snap-to; nothing is forced on the programmer. Now, if you actually wanted the terminal one or two pixels off alignment with another, you would need to hit the arrow key once or twice. Hopefully, such situations will be rare. If not, there would be the ability (including a hot key) to turn the feature on/off. Unfortunately, it's hard to verbally communicate the advantages of CAD-like guides. -- James
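Since it is hard to describe in words, here is a minimal sketch of the snap-to rule in Python. Everything in it is an assumption for illustration: the function name, the one-dimensional coordinates, and the two-pixel range are taken from the description above, not from any actual LabVIEW feature.

```python
SNAP_RANGE = 2  # pixels; assumed from "within two pixels of aligned"


def snap(position, guides, snap_range=SNAP_RANGE, enabled=True):
    """Return the nearest guide if it is within snap_range, else position.

    position: where the programmer dropped the item (one axis, in pixels).
    guides:   candidate aligned positions (e.g. a wire's height).
    enabled:  models the on/off hot key.
    """
    if not enabled or not guides:
        return position
    nearest = min(guides, key=lambda guide: abs(guide - position))
    if abs(nearest - position) <= snap_range:
        return nearest
    return position  # too far away: nothing is forced on the programmer
```

Dropping a terminal at 101 with a guide at 100 moves it onto the guide; dropping it at 104 leaves it exactly where it was placed.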

-

I had a similar application and could not figure out how to do it. Sorry.

-

Techniques for componentizing code

drjdpowell replied to Daklu's topic in Object-Oriented Programming

You're right, it doesn't really fit the intention of the "Decorator pattern", but structurally it is very similar. In the mental image I use for my messages, envelopes with contents inside and a label on the outside, having the Messenger place the received envelope inside another envelope and labeling it "This is a message from Slave1" doesn't seem confusing to me. Not much more than altering the label of the original message and then feeding it into a parser, anyway. Just an idea; as I said, I have never used it yet. -- James

As an aside: is this really true? LabVIEW objects can easily be flattened to a string, allowing the external code to manipulate the string, and then unflattened back to an object. So it's no safer than a variant or flattened data. And, hey, Shaun has an encryption package, so he could provide an encrypted, flattened string, which is more protected than a LabVIEW object.

-

Techniques for componentizing code

drjdpowell replied to Daklu's topic in Object-Oriented Programming

Not over your head, I'm sure, but I know you don't like getting POOP-y

I've only been using OOP inheritance for less than two years, and my job has never been more than 30% programming, so I don't have meaningful statistics. But I have had one program that involves look-up and display of data records from seven very different measurements from a database. This led to a complex "Record" class with seven children. I also developed my messaging design for this project; it has seven "Plot" actors to display the datasets of the seven record types in a subpanel. Making a matching change in seven subVIs (actually eight, including the parent) is a pain, regardless of what the change is. It only takes one project to learn that lesson. -- James

-

Techniques for componentizing code

drjdpowell replied to Daklu's topic in Object-Oriented Programming

Hello, Not entirely following the latest part of the discussion, but there may be some confusion between what Daklu was doing, extending the message identifier with "Slave1:", and the common method of adding parameters into a text command (as, for example, in the JKI statemachine with its ">>" separator). Parameters have to be parsed out of the command when received, thus the need to decide on separating characters and write subVIs to do the parsing. But Daklu's "Slave1:ActionDone" never needs to be parsed, as it is an indivisible, parameterless command that triggers a specific case in his "Master" case structure (see his example diagram). Now, if he did want to separate the "Slave1" and "ActionDone" parts (for example, to use the same "ActionDone" code with messages from both slaves using the slave name as a parameter) then he could parse the message name on the ":" or other separator. But there is an alternate possibility that avoids any possibility of an error in parsing (Shaun, stop reading, this is getting OOP-y; there's even a pattern used, "Decorator"). Instead of using a "PrefixedMessageQueue", use a variation of that idea that I might call an "OuterEnvelopeMessageQueue". The latter queue takes every message enqueued and encloses it as the data of another message, with the enclosing messages all having the name specified by the Master ("Slave1" for example). When the Master receives any "Slave1" message, it extracts the internal message and then acts on it, with the knowledge of which slave the message came from. You can use "OuterEnvelopes" to accomplish the same things as prefixes, though it is more work to read the message (though not more work than parsing a prefixed message) and less human-readable (unless you make a custom probe). It may be useful, though. -- James Note: I put an OuterEnvelope ability in my messaging design, but I've never actually used it yet, so I'm speaking theoretically.
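To make the "OuterEnvelope" idea concrete, here is a small sketch in Python rather than LabVIEW. The class and function names are hypothetical and the queue is just a `deque`; it illustrates the structure described above and is not code from the messaging design itself.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Message:
    name: str          # the label on the outside of the envelope
    data: Any = None   # the contents inside


class OuterEnvelopeQueue:
    """Encloses every enqueued message as the data of an outer message."""

    def __init__(self, shared_queue, envelope_name):
        self._shared_queue = shared_queue
        self._envelope_name = envelope_name  # chosen by the Master

    def enqueue(self, message):
        self._shared_queue.append(Message(self._envelope_name, message))


def master_receive(shared_queue):
    """Extract the inner message, keeping track of which slave sent it."""
    outer = shared_queue.popleft()
    inner = outer.data
    return outer.name, inner.name  # no string parsing involved


if __name__ == "__main__":
    master_queue = deque()
    slave1 = OuterEnvelopeQueue(master_queue, "Slave1")
    slave1.enqueue(Message("ActionDone"))
    print(master_receive(master_queue))
```

The slave only ever sees an `enqueue` method, so it does not need to know it is being labeled, and the Master never has to split a name on ":" to find the sender.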

Techniques for componentizing code

drjdpowell replied to Daklu's topic in Object-Oriented Programming

Not every use of LVOOP is some complicated thing that can't be done with "regular" LabVIEW. Quite often it is just a clean way to make a type-def-cluster with some handy extra features and its own distinct "type" identity. Don't downplay the value of giving your cluster its own, unique, pretty coloured wire that will break if you accidentally wire it to the wrong thing. -- James

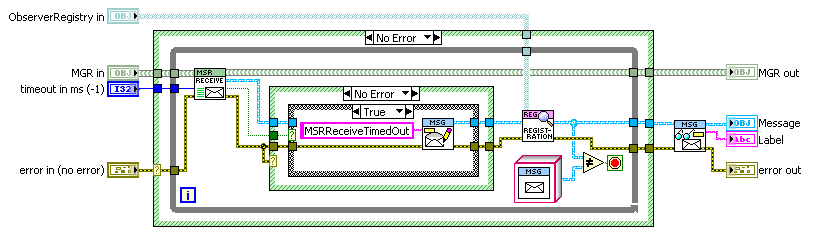

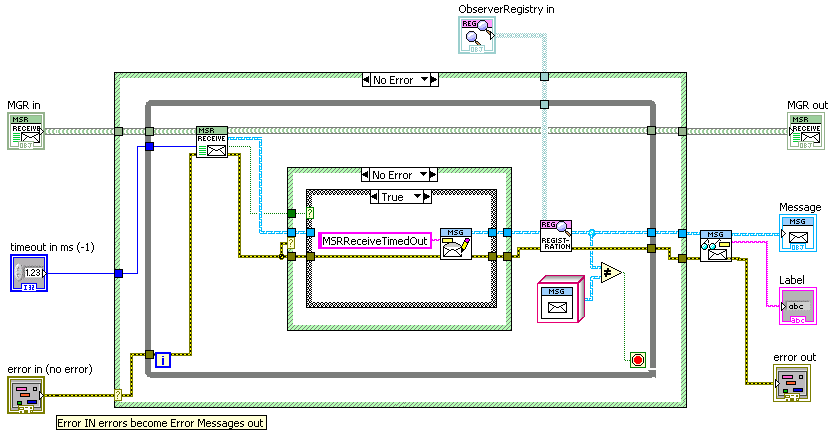

I like the visual connection between block diagram, front panel, and subVI connections. They are different facets of the same entity. Note that most of my LVOOP subVIs are both quite simple and have relationships with multiple other subVIs. Other methods in the same class will have matching connections for the object and error terminals; override VIs in related classes will match all connections. Mimicking these relationships visually on the block diagram is helpful (particularly as LVOOP means you have a lot of related subVIs). I'll make exceptions to the pattern if it doesn't flow naturally into the code. But I find I seldom need to do any rearranging. I try to design conpane locations for a class before doing much coding, so I seldom change a terminal connection (changing the conpane on several dynamically-dispatched versions of the same subVI is a real pain). And with small subVIs, with not that many inputs (as Paul pointed out), there is less need for careful organizing. Connector pane locations also carry information to me: top left and top right terminals are the "subject" of the subVI (a relationship formalized in LVOOP, but common in other LabVIEW APIs); bottom left and right is the error cluster (OK, duh); remaining two left inputs are...uh..."consumed parameters" of the method; right two are "results returned". Top and bottom connections are for options, or things needed to perform the action that are in some way... "independent" of it. So, to me, the "Observer Registry" input of my example naturally goes on a top input of the "Receive..." VI, not in the front. Hmm, OK, maybe it's just that my brain is wired funny. True. I was motivated to start this conversation by reading an idea to eliminate nearly all customization of terminals; not just icon/no-icon, but the ability to place the label in different locations, in order to standardize how things are done. I don't think this is necessarily desirable, even if the standard to be chosen were my own. -- James

-

I've been thinking of posting simple CAD-lite guides as an Idea on NI.com. I think it could work well as long as it is kept simple. Like snap in position only for:

1) eliminating a wire kink.
2) aligning a control/indicator to another control/indicator, regardless of distance between them (possibly include constants and control references)
3) aligning all BD items, but only to other items that are nearby (to reduce the number of snap-to points which would be far too large otherwise)

Maybe even just do (1) and (2), since most subVI/primitive alignment involves eliminating wire bends anyway. The "snap-to" range should only be a few pixels (less than the snap-to range currently used in aligning labels). Keeping the feature quite limited will make it very intuitive and easy to use. -- James

-

I prefer that a subVI's terminal layout roughly matches its connector pane. So in the example, "ObserverRegistry in" is on the top because it connects to the top of the subVI. In contrast, with ShaunR's example I can't tell where his seven inputs connect. I recall in the past considering displaying a VI like this, either because it was a key VI that I wished would stand out, or it just had so many inputs/outputs that it would look like a spider otherwise. Can't recall if I actually used it, but in principle it is very similar to bundle-by-name or property nodes, and like them is somewhat "auto-documenting". However, like Paul, I currently find I don't have that many inputs/outputs in any one subVI, and they are all pretty clear, due to the nature of LVOOP-style programming.

-

Do you mean "select the terminals" and the little pull-down menu of alignment options? Or something better? I did a little CAD work back in the day and I've always found the LabVIEW alignment tools to be slow and clunky. A relatively simple "snap to guidelines" feature (aligning in both dimensions to related objects and also aligning for straight wires) would make it easy to write code clean from the start. I can't say I care that much about the standard types either way. A string is a string. But mixing icons and smaller types means I have to go through the effort of manually changing either the standard or non-standard types. And in larger diagrams, with only a few controls/indicators, I don't see much benefit to the smaller terminals, even for standard types. But I'd be happy to meet people half way if we all want to standardize on small terminals for simple types and icons for objects, type-def clusters and enums, refnums, or anything where the small terminal doesn't convey the full type. Flipping back and forth between BD and FP just to figure out what the terminals are? Can't say I like that option for code clarity! -- James PS> haven't been keeping up the score; it's a low two digit number to a mere 3.5 unicorns.

-

My experiment illuminated a few things: 1) for small, compact diagrams the smaller terminal size is plenty large enough to be clear, and I can see why they are an advantage to making small compact diagrams. 2) I, personally, find it less tedious to spend time making up lots of icons than carefully aligning and adjusting things into small compact diagrams. I'm perfectly happy with my previous diagram that takes up twice the space and doesn't have the terminals all lined up, yet I'll open the icon editor if I notice a few-pixel inconsistency among related icons. Your OCD may vary. 3) I really don't like the "OBJ" issue. I don't care if I can read the label; although many objects like "Observer Registry" will basically never be labeled any differently, "Message" objects could easily be labeled "Command", "Response", or "Ack". And the icons sometimes express cross-class relationships, such as the "envelope" icon showing the close connection between Messenger and Message objects, or other relationships among objects, subVIs, clusters, and enums. And hey, LabVIEW's not a text language, don't you know. -- James

-

Raw refnum data? Does the cluster also have an enum or some such that identifies what the refnum specifically is? That you use to decide internally what bit of code to execute? You're right, that's nothing like an object; it certainly doesn't need an identifying icon. I'm feeling calmer already! -- James PS> I just noticed the 3D raised effect in your subVI icons; nice!

-

Oh, are you "paul.dct" on NI.com? See everyone, another unicorn. I must admit, I wouldn't mind the visual distinction of a control from a VI (the border around the icon, little arrow, etc.) being a little more obvious. -- James

-

Well, I certainly can't pretend that isn't a very clear diagram. But that "refnum", that's actually a cluster, is really making my OCOOD act up! It's an Object! It's an Object! Quick, quick, open an icon editor! Aaarrrggghhhhhhhh...

-

If I count right that's 9.5 to 1.5 (2.5 if you count AQ). Let's look at one of my more recent subVIs: I chose this one because it has 8 total controls/indicators, which is more than most. The first thing this illustrates is that I use a lot of LVOOP objects, of more than one type, and a little thing that says "OBJ" really isn't that useful for easily identifying the object. The second thing is that in subVIs, the terminals are all (or mostly) the inputs and outputs of the VI, and I place them on the outside, with plenty of space for a larger icon. Even if the icons were just bigger versions of the smaller terminals, I would still consider them superior, because inputs and outputs should stand out and draw the eye. This example also shows what is probably the highest density of Front Panel terminals on any of my VIs. My User Interface VIs tend to use event structures and subpanels and a relatively small number of "big" controls/indicators. "Big" in the sense that they do a lot, like a Multi-column Listbox with an extensive list of Right-Click Menu options, or a Text box that holds a summary of a large amount of information, or an Xcontrol. So I seldom have a need to pack controls in densely. In fact, the terminal icons are sometimes just sitting, unwired, near the event structure, acting more as graphical documentation than actual code. This documentation is important, because most UI controls/indicators are major parts of the VI, and I want their presence to stand out on the diagram. To be devil's advocate, if you're fighting for space to fit yet another not-very-important indicator nested somewhere deep in a tight diagram, then you're not coding as clearly as you could. -- James

-

Oh dear, 5 to 1 against so far. Surely there are some more unicorns? Additional question (motivated by the ni.com discussion): do you think having both forms leads to confusion, and would you be in favor of LabVIEW being changed to allow only one form? For the purposes of this question, assume the form to be chosen is the other one from the one you like. -- James

-

I was just reading some opinions on NI.com about whether it is better to show FP terminals on the block diagram as full-sized 32x32 icons or as the smaller 16x32 terminals. I wondered what people on LAVA think. Do you use one type or the other and how strongly do you feel about it? Personally I (moderately strongly) prefer icons. -- James

-

Are you sure you're actually using the "task" configured in MAX, rather than creating a new task programmatically? Is "BDHSIO" the name of your MAX task, or is it the name of a channel? If it is a channel, you are creating a new task with the default sampling rate.