-

Posts

1,986 -

Joined

-

Last visited

-

Days Won

183

Everything posted by drjdpowell

-

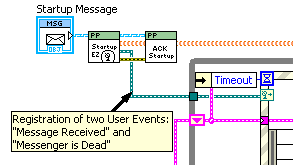

Hi AQ, I thought the concern about event order was due to the fact that an event structure pulls events from multiple queues: the static-registered events, and one (or more) dynamically registered event refnums. It’s possible to fire a few events into a dynamic queue before connecting it to an event structure, so it isn’t obvious how the structure determines in what order to pull off events from multiple queues. If it uses a timestamp, then what about near-simultaneous events with the same timestamp? — James
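To make the tie-breaking question concrete, here is a small Python sketch of merging two event queues by timestamp. It is only an illustration of the ambiguity and makes no claim about how the LabVIEW event structure actually orders events; the queue contents and timestamps are invented for the example.

```python
import heapq

# Each event is (timestamp_in_seconds, description). Both queues are already
# in timestamp order, as events were appended when they fired.
static_events = [(1.000, "static: mouse down"), (1.002, "static: value change")]
dynamic_events = [(1.000, "dynamic: user event A"), (1.001, "dynamic: user event B")]


def merge_by_timestamp(*event_queues):
    """Merge several time-ordered queues into one list, comparing timestamps only.

    heapq.merge is stable, so when two events carry the same timestamp the one
    from the queue listed first wins. The timestamp alone cannot decide.
    """
    return list(heapq.merge(*event_queues, key=lambda event: event[0]))


static_first = merge_by_timestamp(static_events, dynamic_events)
dynamic_first = merge_by_timestamp(dynamic_events, static_events)
```

The two results differ only in the order of the two events stamped 1.000, which is exactly the case where a timestamp-based rule needs some additional tie-breaker (queue priority, a global sequence counter, or similar).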

-

How to update all functions of a wrapper

drjdpowell replied to spaghetti_developer's topic in LabVIEW General

In the “Call Library Function” node there is an option to “Specify path on diagram”. This adds an input where the DLL path can be specified. If you write your DLL wrapper to keep the path in some central store, then it can be updated for all calling VIs at once. -
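The same idea in a text language, as a rough sketch: every wrapper function asks one central store for the library path instead of hard-coding it. The names `LibraryPathStore`, `STORE`, `load_library` and the file names are hypothetical; `ctypes.CDLL` is the standard Python loader and stands in for the Call Library Function node.

```python
import ctypes


class LibraryPathStore:
    """Single place that holds the path of the shared library."""

    def __init__(self, path):
        self._path = path

    def get(self):
        return self._path

    def set(self, path):
        self._path = path


# Hypothetical default path; every wrapper reads it from here.
STORE = LibraryPathStore("mylib_v1.dll")


def load_library(loader=ctypes.CDLL):
    """Load the library from the centrally stored path.

    The loader is a parameter only so the sketch can be exercised without a
    real DLL on disk.
    """
    return loader(STORE.get())
```

Changing the path once with `STORE.set("mylib_v2.dll")` then affects every wrapper that goes through `load_library`.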

How to remuve the black rectangle on Key focus

drjdpowell replied to spaghetti_developer's topic in LabVIEW General

Or one could just learn to love the black rectangle. Problem solved. -

If you have any communication method with the dynamically-launched VI (queues, notifier, etc.) you can create this reference in the calling VI. When the calling VI goes idle, this invalidates the comm reference, throwing an error (from dequeue) which can be used to shut down gracefully. Alternately, one can programmatically release the reference. An advantage of this is that dynamically-launched processes will always shut down gracefully, regardless of how the top-level VI exits (no orphaned processes left running in the background). I call these processes “autoshutdown slaves”. Unfortunately, one can only do this with User Events via polling (as an invalidated User Event doesn’t throw an error). I hide the polling in a background process that fires a second “shutdown” User Event, so at least it doesn’t look ugly.
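For readers more at home in text languages, the pattern can be sketched roughly in Python. `ClosableQueue` and `QueueClosed` are hypothetical stand-ins for a LabVIEW queue refnum and the error returned by dequeue on an invalid refnum. One difference to keep in mind: this sketch lets the worker drain pending items before it sees the close, whereas an invalidated LabVIEW queue errors immediately.

```python
import queue
import threading


class QueueClosed(Exception):
    """Raised by get() once the owner has released the queue."""


class ClosableQueue:
    """Hypothetical stand-in for a queue reference that can be invalidated."""

    _CLOSED = object()  # sentinel pushed when the owner releases the queue

    def __init__(self):
        self._q = queue.Queue()

    def put(self, item):
        self._q.put(item)

    def close(self):
        # Comparable to releasing the reference or the owning VI going idle.
        self._q.put(self._CLOSED)

    def get(self):
        item = self._q.get()
        if item is self._CLOSED:
            # Re-post the sentinel so any other reader also sees the close.
            self._q.put(self._CLOSED)
            raise QueueClosed()
        return item


def worker(inbox, log):
    """Background process that shuts down when its inbox is invalidated."""
    try:
        while True:
            log.append(inbox.get())
    except QueueClosed:
        log.append("clean shutdown")  # release resources here


def run_demo():
    inbox, log = ClosableQueue(), []
    thread = threading.Thread(target=worker, args=(inbox, log))
    thread.start()
    inbox.put("a")
    inbox.put("b")
    inbox.close()  # the owner exits; no explicit shutdown message is sent
    thread.join(timeout=2)
    return log, thread.is_alive()
```

The worker never needs to be told to stop; losing its inbox is the signal.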

-

Project Design & Maintenance

drjdpowell replied to JimboH's topic in Application Design & Architecture

The changes are probably recompiles caused by subVI changes. Separating compiled code from source code should stop this. I’ve upgraded to 2011 recently and separated compiled code and it seems to work well so far. I generally put all subVIs in libraries (or class libraries), other than test code. Personally, I move VIs such that the Project is happy and just let SVN consider the file deleted and created new in another place. Makes your SVN repository bigger, but this isn’t a big issue. -

LapDog & Variant Messages Question

drjdpowell replied to Daklu's topic in Object-Oriented Programming

I would say “no”, as generally one could choose to either use variant messages or a long list of specific-type messages. Array of Variants is kind of a mix of both. — James

Aside: After our previous conversation, I actually modified my own message hierarchy by eliminating all simple-type messages in favor of VariantMessages, except where there was extra functionality involved (for example: ErrorMessage). And in actual use, I tend to either use completely generic messages (Variant) or create specific-purpose messages for specific uses. The latter can have multiple data elements and are usually used in the “Command Pattern” (i.e. they have “Execute” or “Do” methods rather than “Read”).

Thank you for considering VariantMessage for LapDog, BTW. I have recently been doing consulting work where I can't use my own messaging package, so I’m interested in LapDog being widely adopted and as flexible as possible. -

How do your B and C VIs communicate with the outside world? If I were doing something like this, each VI would have a queue (or similar) message receiving system and it would shut down on receiving a “Shutdown” message or if its message queue becomes invalid. This makes full shutdown of everything quite easy.

-

Conversion from (QMH + LV2 Globals) to AF

drjdpowell replied to todd's topic in Object-Oriented Programming

I’m not sure there is anything wrong with using your LV2 globals, assuming they are entirely internal to one actor (i.e. not a by-ref connection between different actors). -

Register & Unregister or just Register?

drjdpowell replied to Aristos Queue's topic in Application Design & Architecture

A seems very like the Set and Delete Variant Attributes. Also, if the table just disappears at some point, then it is perfectly intuitive that I don’t have to unregister (as the registrations are part of the table). -

1,2,3 button dialog with timeout

drjdpowell replied to Ton Plomp's topic in OpenG General Discussions

Some control over text size (i.e., the ability to increase it) would be nice. -

Ah, but 1 developer x many (small) projects that could use logging and it gets expensive. Anyway, it’s looking like we’re going with modifying a text-log system from another project. Boring. I’ll keep your API in mind, though, for any large project where a SQLite database would be a prime feature, rather than just a nicer way of logging.

-

Sorry, I know little about the terminology of licensing. At a consulting firm I’m doing some work for, they would like a reusable error/debug logger for use in their many projects. I suggested your logger. But, if I read it right, the SQLite API has to be licensed for each project (each “product”), which isn’t going to fly. My other options are a non-database solution, using Saphir’s SmartSQLVIEW, or calling the SQLite DLL directly with SQL statements. I actually prefer the last option (even though it will be much more limited and less polished than your stuff) as I can then use it as a logger in my message/actor framework without inheriting any licensing issues. Need to polish up my SQL, anyway.

-

Hi Shaun, Can you clarify the “fair use” of your database logger (and SQLite API)? I’d like to use it (or a modified version) as an error logger in custom software projects for clients. — James

-

LVOOP and Message Objects (LapDog mainly)

drjdpowell replied to jbjorlie's topic in Object-Oriented Programming

You could make a new non-dynamic dispatch VI that takes a Message object, downcasts to Command, then calls the (dynamic-dispatch) Do method. Call this VI “Do Command” or “Do if a Command”, or something. Note that it doesn’t need to be a member of “Message” class (or any class). — James

BTW, you could also make the “Do if a Command” VI return the original message if it isn’t a “Command” (this output would return a null message if the command was executed). That would allow you to put it in front of your case structure, acting sort of as a filter for Command Messages. -
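A text-language sketch of the “Do if a Command” filter, assuming a Python class hierarchy in place of the LVOOP one. The class names `Message`, `Command` and `SetGain`, and the `context` dictionary, are invented for illustration and are not LapDog's API.

```python
class Message:
    """Base message type (hypothetical stand-in for a Message class)."""

    def __init__(self, name):
        self.name = name


class Command(Message):
    """A message that knows how to execute itself (Command Pattern)."""

    def do(self, context):
        raise NotImplementedError


class SetGain(Command):
    def __init__(self, gain):
        super().__init__("SetGain")
        self.gain = gain

    def do(self, context):
        context["gain"] = self.gain


def do_if_command(message, context):
    """Free function, deliberately not a member of any class.

    Executes the message if it is a Command and returns None (the "null
    message"); otherwise returns the original message untouched so the
    caller's normal case handling can deal with it.
    """
    if isinstance(message, Command):
        message.do(context)  # dynamic dispatch picks the concrete do()
        return None
    return message
```

Placed in front of the message-handling case structure, the function acts as a filter: only messages that come back non-null still need a case.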

Techniques for componentizing code

drjdpowell replied to Daklu's topic in Object-Oriented Programming

Hi Alex, One slightly annoying thing at first about LVOOP child classes is that they cannot directly access their own “parent” data via “unbundle”. All child objects have the parent private-data cluster, but methods of the child class need to access it via methods of the parent class. If you create VIs in the parent that return InputQ and OutputQ, then the child class VIs can use them instead of unbundle. Creating “accessor” VIs like this is automated, so it takes less than a minute to make several. One can even make them be usable in a Property Node structure to access several things at once. The reason for this, I believe, is to allow the designer of the parent class to have a well-defined public interface, and thus he/she can change the internal private structure without breaking any children written by other developers. -
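A loose Python analogy of the accessor approach, for comparison. Python has no true private data, so double-underscore name mangling is used here to approximate it; the class and method names are invented for the example.

```python
class Parent:
    def __init__(self, input_q, output_q):
        # Double underscores trigger name mangling (_Parent__input_q), which
        # approximates the parent's private-data cluster.
        self.__input_q = input_q
        self.__output_q = output_q

    # Accessors: the parent's public interface to its private data.
    @property
    def input_q(self):
        return self.__input_q

    @property
    def output_q(self):
        return self.__output_q


class Child(Parent):
    def forward_one(self):
        """Works: goes through the parent's accessors."""
        self.output_q.append(self.input_q.pop(0))

    def peek_directly(self):
        """Fails: inside Child this name mangles to _Child__input_q."""
        return self.__input_q
```

Because the child only touches `input_q` and `output_q`, the parent is free to rename or restructure its private fields without breaking child classes.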

I’m afraid I’ve lost the code itself (computer failure and I never checked it in to my code repository), but I have an image: This is the CLA practice exam, rather than CLD; those subVIs are mostly empty but for a single comment on what needs to be coded in them.

-

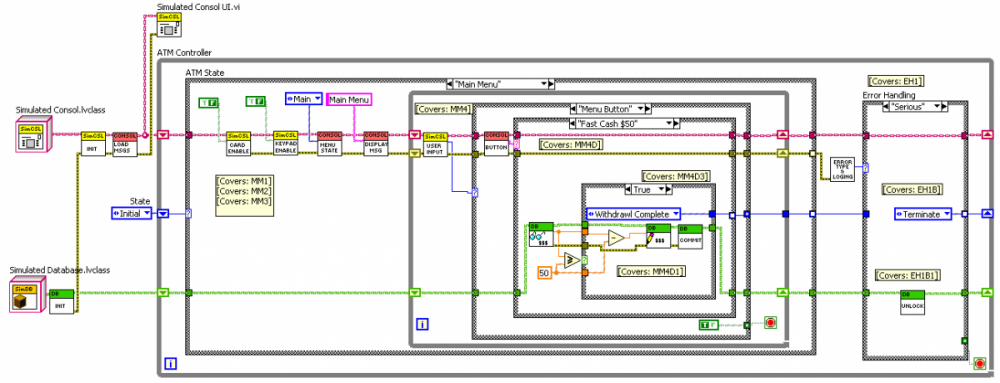

I am reminded of one of my first posts on NI.com:

I haven't done the CLD myself, but this conversation prompted me to look at the Traffic Light example exam, along with the NI-supplied sample solution. Although I'm sure it follows standard "style", IMHO it is a pretty poor attempt at the described problem, at least if this were to have any connection to a real-world problem of programming a traffic intersection.

1) Real-world intersections come in many different configurations, but the solution presented is inherently specific to only one very-specific configuration with no thought applied to producing software that can be customized. Every different intersection type would require a different version of the code. A fully general solution is too big for a four-hour test, but one could at least set the groundwork by having some "intersection configuration" constants feeding into a more generalized intersection state machine. It would be easy to write code that could be configured with an arbitrary number of lights and states by using arrays, for example, allowing easy generalization to a five-way intersection.

2) The solution ties together the implementation of the intersection state machine and the implementation of the specific type of light. If traffic engineers were to start using four-bulb lights (Red, Y, G, and Left Turn Arrow) then the entire intersection code would have to be modified. The code should be more "modular"; there is no reason why a programmer implementing a new type of light needs to delve into the details of the intersection, nor does the intersection programmer need to know which specific light bulb signals "Left Turn". The clean coding and extensive documentation is nice, but useless if the code becomes obsolete the instant it confronts the first real-world complication.

Admittedly, this is just a test question constructed from a predetermined answer, and the test-taker should be able to guess what the test makers really want in place of an actual scalable design.

This was pre-OOP for me, but you can see how LVOOP might help in points (1) and (2). Problem is, it’s an exam question; any kind of investment up front for pay off in future development is a waste of time because there is no future. — James

BTW, what about CLA exams? I tried out the practice ATM exam for that and used LVOOP.
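Points (1) and (2) can be sketched in a few lines of Python. This is an illustration of the design idea only, not the exam solution: the intersection is driven by a configuration array of phases, and it speaks to lights in abstract signals so that a new light type never touches the intersection code. All class names, signal names and durations are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    """One state of the intersection: an abstract signal for each light."""
    signals: tuple
    duration_s: float


class ThreeBulbLight:
    """Maps abstract signals to bulbs; the intersection never sees bulbs."""
    _BULBS = {"stop": "red", "caution": "yellow", "go": "green"}

    def show(self, signal):
        return self._BULBS[signal]


class FourBulbLight(ThreeBulbLight):
    """A new light type: adds a left-turn arrow without changing Intersection."""
    _BULBS = dict(ThreeBulbLight._BULBS, left="left arrow")


class Intersection:
    """State machine configured by arrays, so any number of lights works."""

    def __init__(self, lights, phases):
        for phase in phases:
            if len(phase.signals) != len(lights):
                raise ValueError("each phase needs one signal per light")
        self.lights = list(lights)
        self.phases = list(phases)
        self.index = 0

    def current(self):
        phase = self.phases[self.index]
        return [light.show(s) for light, s in zip(self.lights, phase.signals)]

    def advance(self):
        self.index = (self.index + 1) % len(self.phases)


# A five-way intersection with one four-bulb light, as pure configuration.
lights = [ThreeBulbLight() for _ in range(4)] + [FourBulbLight()]
phases = [
    Phase(("go", "stop", "stop", "stop", "left"), 20.0),
    Phase(("caution", "stop", "stop", "stop", "stop"), 3.0),
]
five_way = Intersection(lights, phases)
```

A different intersection is then a different pair of arrays rather than a different version of the code.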

-

Self-addressed stamped envelopes

drjdpowell replied to drjdpowell's topic in Application Design & Architecture

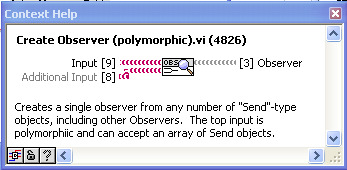

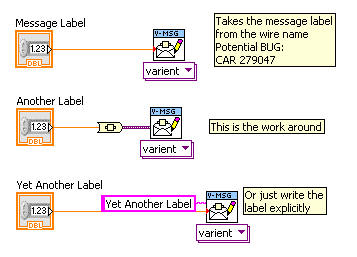

Ben, small note: I see in one of your images that you index over your array of Instruments and individually configure an Observer to substitute a message and then register this Observer with the Metronome. You’ll have less overhead if you take the entire array of instruments, create a single Observer, configure it to substitute a message, and register it. Observers can hold any number of “Send” objects internally. To make your array into a single Observer, use what is now called “Create Observer (polymorphic)” but was then called something like “Make Observer” or “Add Observer”.

Another small note: There is a bug that is a problem for a feature that you might not be using but should know about. For Variant-type messages, one can leave the label unfilled and let the message use the variant data name as the label. But a bug in converting to variant sometimes doesn’t take the right name: -

Self-addressed stamped envelopes

drjdpowell replied to drjdpowell's topic in Application Design & Architecture

The new version uses the VI qualified name, rather than the path. Hopefully, this will work better.

Some of those are experiments, since this framework kind of grew over time through trial and error, so be careful.

There are two ways that one can get information out of a launched Parallel Process: (1) having it respond to a message sent to it, or (2) “registering” with it to have “notification” messages sent whenever something happens (an “event”) or the state of the actor changes (“state”). These are separate methods; one could probably just use one or the other. My examples are perhaps overloaded with trying to do both, and that might be confusing. But then, a reusable component may be more useful if it can communicate in a variety of ways. I actually tend to rely on the Observer Registry stuff primarily, but having replies can still be useful, especially for synchronizing things (as you do in your application).

Exactly. Note, however, that this is NOT a global thing like a named queue or global variable. To “register” to receive a notification one must send a registration message to the Parallel Process in question. You do this in your App when you register for “MetronomeTick” events.

An “Event” is, well, an event. “Tell me when this happens”. “MetronomeTick” is an event. If you look at the “Metronome” Block Diagram as an example, you’ll see that you can also register to receive Error Messages, and the “Shutdown” event which is the last thing sent when the Metronome shuts down. A “State” Notification is slightly different. Here, you don’t want to be told when something happens in the future, but what something is right now, and to be notified of any changes. Metronome has a “MetronomePeriod” State notification. If you were to register to receive this notification, you would immediately be sent a message containing the current period (the Observer Register remembers the last state message so it can notify any new observers). You would also be sent a message any time the state changed.

Nothing happens. Neither “Notify Observers” nor “Reply” throws any errors if no one is listening. If you launch an actor and don’t subscribe to its published notifications or bother to listen to its replies that is not the responsibility of that actor. It is the job of the code launching the actor to decide who should receive messages from it. This is a deliberate design choice; actors are reusable components that are not coupled to the code that launches them. They don’t demand anything and only provide services. If you don’t use all the services… whatever.

In your code, for example, “Metronome” attempted to reply to your “SetPeriod” message, but you attached no return address. It also published its period and a shutdown message, but no one asked to be notified of them. No problem, “Metronome” doesn’t mind. It stands ready to provide these messages, should the code that launched it want them. You could, for example, register your UI to receive “MetronomePeriod” state updates so you could display the period. Or you could change your “SetPeriod” message from a “Send” to a “Query” (that waits for a reply) to check that Metronome did what you asked (if you try to set the period less than 10 ms, for example, it will come back saying 10, because that is the lower limit). Queries are good for making sure something has happened before continuing; if you sent a “SetPeiod” message by accident, the reply would be the Error Message “Unhandled: “SetPeiod””, code 52. This message would also be published to anyone registered to receive error messages.

Not necessarily. My simple examples mostly involve commands that change the actor’s important state (“SetPeriod”, etc.), so they naturally involve a Notify. But one could have commands that don’t change state, or the state can change for some reason other than a command. And it is OK to have actors that don’t have an Observer Registry at all, if that isn’t really needed.

— James -
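The difference between “event” and “state” notifications described above can be sketched in Python. This is a simplified model, not the framework's actual implementation: observers are plain callables, registration is a direct method call rather than a message, and the error code is omitted. The class and method names are assumptions for the example; the message names and the 10 ms limit come from the post.

```python
class ObserverRegistry:
    """Keeps observers per notification name and remembers the last state."""

    def __init__(self):
        self._observers = {}   # notification name -> list of send callables
        self._last_state = {}  # state name -> most recent value

    def register(self, name, send):
        self._observers.setdefault(name, []).append(send)
        # State notifications are replayed at once to a new observer.
        if name in self._last_state:
            send(name, self._last_state[name])

    def notify_event(self, name, data=None):
        # No observers is not an error; the loop simply does nothing.
        for send in self._observers.get(name, []):
            send(name, data)

    def notify_state(self, name, value):
        self._last_state[name] = value
        self.notify_event(name, value)


class Metronome:
    MIN_PERIOD_MS = 10

    def __init__(self):
        self.registry = ObserverRegistry()
        self._period_ms = 100
        self.registry.notify_state("MetronomePeriod", self._period_ms)

    def handle(self, name, data=None):
        """Handle one message and return the reply a Query would receive."""
        if name == "SetPeriod":
            self._period_ms = max(self.MIN_PERIOD_MS, data)
            self.registry.notify_state("MetronomePeriod", self._period_ms)
            return self._period_ms
        error = 'Unhandled: "%s"' % name
        self.registry.notify_event("Error", error)
        return error
```

A caller that ignores the return value behaves like a “Send”; one that uses it behaves like a “Query”. Either way the metronome publishes the same notifications, whether or not anyone has registered for them.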

Self-addressed stamped envelopes

drjdpowell replied to drjdpowell's topic in Application Design & Architecture

Hi Ben, It’s late where I am, but I’ll try and reply tomorrow, particularly with a better description of what the Observer Register is for. Your code looks good. — James -

I too didn’t like that “Low Coupling Solution”. I spent some idle time trying to think of a way to make the “Zero Coupling Solution” simple enough that one wouldn’t ever need to consider the “Low Coupling Solution”. After a while I realized: it already is simpler! Not trivial to see at first. So I think it would be better if the documentation never mentioned the “Low Coupling Solution”, and instead concentrated more on demonstrating how to do zero coupling, and presented it as the standard way to use the Actor Framework. In fact, with a simple guideline, one could write “High Coupled” actors in such a way that they could later be easily upgraded to zero-coupled actors. I’ll try and get this suggestion, with more detail, in the 2012 beta forum (once I’ve figured out how to download the beta software). — James

-

Need to get me some of that some day.

-

Object serialization (XML) and LabVIEW

drjdpowell replied to PaulL's topic in Object-Oriented Programming

That’s what I thought, but AQ stated: -

Note to Darin on his Champion’s profile picture: I recognize that hardware! Axions! Or the search therefor. Did a very little bit on it as a Post Doc long ago. I’m very, very least author on an axion Phys Rev Lett from 1998 (I see from my CV that you're second author). Found those things yet? — James

-

Object serialization (XML) and LabVIEW

drjdpowell replied to PaulL's topic in Object-Oriented Programming

I was under the impression (from a post somewhere by AQ) that lvclass references work in run-time as of 2010.