GregR
NI

Posts: 47
Days Won: 5
GregR last won the day on January 6 2015 and had the most liked content.
Reputation: 39

Location: Austin
LabVIEW version: LabVIEW 2011
Using LabVIEW since: 1992

LabVIEW Built Windows Applications - Preventing the "Not Responding" Label

GregR replied to mje's topic in LabVIEW General

You will only get that label when the UI thread is not able to handle OS messages. This can happen through direct routes, like a long-running Call Library Function Node (CLN) call configured to run in the UI thread. That is definitely the first thing to check. It can also happen through indirect routes. If you are doing something that is very disk or memory intensive, it can cause delays that slow down what would normally be very fast operations in the UI thread. If you are paging in a huge buffer in another thread and the UI happens to need some piece of memory paged in, then in theory the UI could be blocked long enough for the OS to consider the process hung. With the speed of most modern machines this is unlikely, but it is possible.
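The OS decides a window is hung when its UI thread stops pumping messages for too long (Windows documents a 5-second timeout for `IsHungAppWindow`). The Python sketch below is only an analogy, not LabVIEW code: a loop stands in for the UI thread's message pump, and it measures the longest gap between pumps when a long call runs on that loop versus on a worker thread. The function name and the scaled-down timings are illustrative assumptions.

```python
import threading
import time


def longest_pump_gap(work, on_ui_thread: bool, hung_after_s: float,
                     run_for_s: float, tick_s: float = 0.01):
    """Run `work` either on the simulated UI loop or on a worker thread.

    Returns (longest gap between message-pump iterations, whether that gap
    exceeded hung_after_s, i.e. whether the OS would flag the window).
    """
    start = last_pump = time.monotonic()
    longest_gap = 0.0
    worker = None
    if on_ui_thread:
        work()  # the loop cannot pump messages until this call returns
    else:
        worker = threading.Thread(target=work, daemon=True)
        worker.start()  # the loop keeps pumping while the work runs elsewhere
    while True:
        now = time.monotonic()
        longest_gap = max(longest_gap, now - last_pump)
        last_pump = now  # stands in for one PeekMessage/DispatchMessage pass
        worker_busy = worker is not None and worker.is_alive()
        if now - start >= run_for_s and not worker_busy:
            return longest_gap, longest_gap > hung_after_s
        time.sleep(tick_s)
```

With a 0.3 s call and a 0.15 s threshold, running the call on the loop reports a hang, while handing it to a worker thread keeps every gap near `tick_s`.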

Back to the original problem.

Fix #1 is to hide the graph's scrollbar. Keep in mind you are only giving the graph data for the visible range of the X scale, so the X scrollbar built into the graph will be useless. It is designed to let the user scroll through all the data the graph has when that data doesn't fit in the visible area, and you will never be in that situation. If you want to let the user scroll through all the available data, you will need to implement your own scrolling.

Fix #2 is to turn off "Ignore Time Stamp" on the graph. With this option on, no matter what the time stamp on your data is, the graph X scale will start at 0. This means the user will not be able to tell where they are in the data. It also means that when your scale is showing 5:00 to 10:00, your data is being shown at 0:00 to 5:00.

With those changes your VI works pretty well, except that you probably need to produce slightly more data. Currently there seems to be a zero value being produced in the visible area just before the scale maximum. I also noticed that your decimation doesn't work well when the minimum is negative: the scales move, but the data is produced as if the minimum were zero. Your decimation also produces zero values when showing the area beyond the end of the data. If you can't make it produce fewer elements in these cases, producing NaNs is an option; the graph will not draw anything when it encounters NaN. As with the scrollbar, the graph palette option to fit to all data doesn't work, so you might want to implement your own button that lets the user zoom back out to all the data.

Another approach to some of these problems would be to add another plot to your graph that has just the first and last points of your waveform in it, with the correct delta to put them in the right spots. Set this plot to have no line and a simple point style. This way the graph will still consider itself to have data across the entire range, but you only produce detailed data for the visible area. This doesn't solve the "Ignore Time Stamp" problem, but it allows the scrollbar and fit to all data to work.
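A sketch of the visible-range approach described above, in Python rather than LabVIEW: compute the sample indices covering the current X scale (including a negative minimum), pad anything outside the recorded data with NaN, and build the two-point "bounds" plot. The function names are hypothetical, and the plain stride decimation used here can skip narrow peaks; a min/max-per-bucket decimation would preserve them.

```python
import math


def visible_plot_data(data, t0, dt, xmin, xmax, max_points):
    """Return (x0, dx, values) covering only the visible X range [xmin, xmax].

    data       : full waveform samples
    t0, dt     : time stamp of data[0] and the sample spacing
    xmin, xmax : current X scale range (may be negative or past the data end)
    max_points : roughly the plot width in pixels
    Indices outside the recorded data yield NaN, which the graph does not draw.
    """
    if dt <= 0 or max_points < 1:
        raise ValueError("dt must be positive and max_points at least 1")
    # floor/ceil so the returned samples reach (or pass) both edges of the scale
    i_first = math.floor((xmin - t0) / dt)
    i_last = math.ceil((xmax - t0) / dt)
    count = i_last - i_first + 1
    step = max(1, math.ceil(count / max_points))  # simple stride decimation
    values = [data[i] if 0 <= i < len(data) else math.nan
              for i in range(i_first, i_last + 1, step)]
    # x0 keeps the real time of the first returned index, even if it is negative
    return t0 + i_first * dt, step * dt, values


def bounds_plot(data, t0, dt):
    """Two-point plot (first and last sample) spanning the whole data range.

    Drawn with no line and a simple point style, it gives the graph data across
    the entire range, so its scrollbar and fit-to-all-data cover everything.
    """
    if len(data) < 2:
        return t0, dt, list(data)
    return t0, (len(data) - 1) * dt, [data[0], data[-1]]
```

The returned `x0` and `dx` play the role of the waveform's t0 and dt, so with "Ignore Time Stamp" turned off the detailed plot lands at its real position on the scale.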

-

Viewing Panel via Smart TV

GregR replied to Shazlan's topic in Remote Control, Monitoring and the Internet

WebUI Builder requires Silverlight. Remote panels require a browser plugin and a locally installed LabVIEW Run-Time Engine. Neither of these technologies is available on Samsung smart TVs, so regardless of whether they meet your functionality requirements, neither is an option. These TVs are an HTML/JavaScript platform with limited Flash support, so those are the tools you have to choose from. WebSockets are definitely an option, as is building your VIs into RESTful web services using LabVIEW. If you are finding the WebUI Builder graphs to be primitive, you may run into similar issues with the HTML UI solutions available. You can most likely achieve the displays you want, but it may take more programming than you expect.

Reentrant References In an EXE

GregR replied to hooovahh's topic in Application Design & Architecture

Just to clarify for others who stumble across this discussion: you can open references to VIs built inside an EXE by path, but this is only possible from VIs running as part of that EXE, and the path will be different than during development. That path difference makes this error prone and a bad idea, but it is possible. In general, the VI path is the EXE path with the VI filename added as another path segment at the end. However, this has problems with class/library files that have the same filename. How LabVIEW resolves these conflicts also depends on the Advanced build setting "Use LabVIEW 8.x file layout". If this option is on, LabVIEW puts the files in directories next to the EXE. If the option is off, LabVIEW creates a virtual directory structure under the EXE. I won't try to fully explain this, but I will say that it is repeatable between builds, so you can figure out where the VI is being put and reference it from there. Most of the time there is a better approach, but this is an option if you can't find another answer.
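The general path rule above, sketched with Python's `pathlib` (the EXE location and VI name are made-up examples). It covers only the simple case; same-named files from different classes/libraries and the "Use LabVIEW 8.x file layout" setting are not modeled.

```python
from pathlib import PureWindowsPath


def built_vi_path(exe_path: str, vi_filename: str) -> PureWindowsPath:
    """Typical path of a VI inside a built EXE: the EXE path plus the VI filename.

    Not modeled: renaming of same-named files from different classes/libraries
    and the "Use LabVIEW 8.x file layout" option, both of which can change the result.
    """
    return PureWindowsPath(exe_path) / vi_filename


# Hypothetical example: during development the VI might live at
# C:\dev\MyApp\Callee.vi, but inside the build it is addressed through the EXE.
print(built_vi_path(r"C:\Program Files\MyApp\MyApp.exe", "Callee.vi"))
```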

LabVIEW will preallocate the array at the max size and truncate as you suspected.

-

Protecting the password is important, but the problem doesn't end there. Say my LabVIEW-built application queries the OS at launch time to decide whether the current user has some privilege. How should my code remember that fact? Do I put it in a LabVIEW global variable Boolean called "IsAdmin"? Guess where my weak link is. Forget about attacking the password: if I can find the right byte, I can turn any user into an admin. Or, even before that, what if I attack the code that decodes the answer from the OS? Any application that runs on the user's machine and internally makes decisions about allowable operations is susceptible to in-memory attacks (through debuggers or code insertion).

There are a few LabVIEW-specific things you can do to reduce this exposure.

Subroutine priority: subroutines are less exposed through VI Server.

Inline VIs: inlining security-critical VIs means there is no longer a single bottleneck that the user can attack to gain access to multiple pieces of functionality. Each piece must be attacked separately.

Request Deallocation node: this node causes all temporary allocations for a VI to be disposed at the end of a subVI's execution. This does not necessarily overwrite the memory, but it could help. (I'm not sure what happens if you try to use this in a VI that is set to inline.)

If we apply these to the issue of remembered state, then you might create inline VIs that know how to get and set some obscured form of remembered state. Of course, that just moves your weak link to the algorithm used to obscure your state.
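To make the "obscured remembered state" idea concrete, here is a Python sketch (not LabVIEW, and not the author's scheme) that stores a privilege flag together with an HMAC tag under a random per-session key. A single flipped byte no longer turns a user into an admin, but the key sits in the same process memory, which illustrates the closing point: the weak link simply moves to the obscuring algorithm and its key. The class name is hypothetical.

```python
import hashlib
import hmac
import secrets


class ObscuredFlag:
    """A privilege flag stored as (value byte, HMAC tag) instead of a bare Boolean.

    Changing the stored byte without also forging the tag makes is_set() fail
    closed. The key lives in this same process, so an attacker who finds it
    (or patches is_set) still wins: the weak link has only moved.
    """

    def __init__(self) -> None:
        self._key = secrets.token_bytes(32)  # per-session secret, in process memory
        self.set(False)

    def _tag(self, value: bytes) -> bytes:
        return hmac.new(self._key, value, hashlib.sha256).digest()

    def set(self, is_admin: bool) -> None:
        self._value = b"\x01" if is_admin else b"\x00"
        self._mac = self._tag(self._value)

    def is_set(self) -> bool:
        # A value whose tag does not verify is treated as "not admin".
        valid = hmac.compare_digest(self._mac, self._tag(self._value))
        return valid and self._value == b"\x01"
```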

-

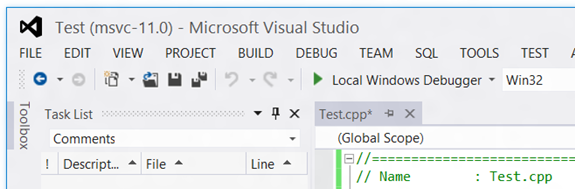

Personally, I like the visual cues provided by beveled buttons and color, but that doesn't seem to be the prevailing direction. The soon-to-be-released version of Visual Studio not only removes button borders but also removes most color and any dividing lines between menus, toolbars, and content. Then they put the menus in all caps. What do you think? Is this the direction LabVIEW should be moving?

-

If the VI is preallocated reentrant, then you should be allocating and deallocating a clone each time even if the VI itself is staying in memory because of other references.

-

Third Party U64 Control, Used bits 25 most significant

GregR replied to KWaris's topic in LabVIEW General

It definitely could be an endianness problem, but your characterization of LabVIEW is not quite right. LabVIEW flattens to big endian, but in memory (any typed data on a wire) it matches the endianness of the CPU. Since all our desktop platforms are now x86, they all run as little endian. So the problem would be that his data is big endian and LabVIEW is treating it as little endian. I don't mean to be pedantic, but I don't want someone to come along later and convince themselves that all LabVIEW data is big endian.
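The distinction above can be shown with Python's `struct` module: the same eight bytes decode to different U64 values depending on the byte order assumed. That the third-party device sends big-endian data, and that "25 most significant bits" means the top 25 bits of the 64-bit value, are assumptions taken from the thread title; the helper names are hypothetical.

```python
import struct


def decode_u64(raw: bytes, big_endian: bool = True) -> int:
    """Decode 8 bytes as an unsigned 64-bit integer in the given byte order."""
    fmt = ">Q" if big_endian else "<Q"  # '>' big endian, '<' little endian, 'Q' = U64
    (value,) = struct.unpack(fmt, raw)
    return value


def top_bits(value: int, used_bits: int = 25, width: int = 64) -> int:
    """Keep only the `used_bits` most significant bits of a `width`-bit value."""
    return value >> (width - used_bits)


raw = struct.pack(">Q", 3 << 39)  # device puts 3 in its top 25 bits, big endian
print(top_bits(decode_u64(raw, big_endian=True)))   # 3: decoded as big endian
print(top_bits(decode_u64(raw, big_endian=False)))  # 0: misread as little endian
```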

Namespacing objects in a build makes them "different"

GregR replied to crelf's topic in Application Design & Architecture

But you defined your plugin interface in terms of an lvclass. You can't have a class in your interface unless both sides agree on the class definition. If you really want the two sides not to share any dependencies, then you can't have any dependencies in the interface between them. If your strict VI reference uses any class or typedef, then that definition must be shared. To operate the way you wanted, you can only use core data types in the connector pane of your plugins. If you have more than one plugin, then you need to make sure each plugin is built with a wrapping library or name prefixing to keep them from conflicting with each other. At that point, whether the top-level app does this or not is unimportant.

Namespacing objects in a build makes them "different"

GregR replied to crelf's topic in Application Design & Architecture

You can't load a plugin that has a dependency that has the same name as one of the application's dependencies if the dependency is supposed to be different. In your case the dependency is a class that is passed from Caller.vi to Callee.vi so in fact it is critical that both sides do link to the same instance. This is the only way it will work. Whether that shared class is inside the EXE or not is a separate issue. It can be acceptable for this dependency to be inside the EXE and the dynamically loaded VI will work just fine. Your original project had 2 application builds: Caller and Namespaced Caller. Caller.exe works just fine for me (as long as I make sure Callee.vi is saved in the same version before running Caller.exe). The point of this exercise is not to avoid sharing but to figure out how to properly share. The suggestions I gave were all different ways of sharing those dependencies. They all have tradeoffs between robustness and development overhead. Take your pick. The real key is to make sure you understand which items are being shared. Any change to a shared item could cause you to have to rebuild both sides.

Namespacing objects in a build makes them "different"

GregR replied to crelf's topic in Application Design & Architecture

There should be no problem running a 32-bit built application on 64-bit Windows. If you have to support 32-bit Windows, then it probably makes sense to only build as 32-bit rather than having to build everything twice.

Namespacing objects in a build makes them "different"

GregR replied to crelf's topic in Application Design & Architecture

It took me a little while to understand that "namespaced" meant using the build option "Apply prefix to all contained items" in "Source File Settings" for dependencies in the build spec. Once I made that connection, everything made sense. This isn't really namespacing; it is changing the name of every dependency. So after the build, Caller.vi references a class named "namespace.Shared Class.lvclass". Since Callee.vi didn't go through the build, it references the class "Shared Class.lvclass". A VI that has a single input of type "Shared Class.lvclass" is not going to match a strict VI reference with a single input of type "namespace.Shared Class.lvclass", so opening the reference fails.

If you are going to rename the class during the build, then all plugins must be rebuilt against the renamed version. You can't really do that if the renamed version is only present inside the application. There are a few other options, though.

You could include your plugins in the application build as "Always Included" files. This doesn't create much of a plugin framework, but in some cases that is acceptable.

You can make the application build put its dependencies outside the application. Create a new destination for a subdirectory, then change the dependencies to build to there. Then in your plugin projects (Callee's project), reference these files instead of the original "Shared Class" source. When Callee is loaded, it will then agree on what all the dependencies are named. This is similar to the solution of creating the shared items as another build, but avoids actually having a separate build step.

You can build the shared items as a separate distribution and have both the "Caller" and "Callee" projects reference that build output. This doesn't have to be built as a PPL; it could just be a source distribution. Either way, it requires a separate project and build, increasing maintenance.

You can choose not to rename shared dependencies going into the application. For items that will be referenced from plugins, you can add them to the project directly (instead of having them just show up in dependencies) and not have them be prefixed. This also makes it clear which items are valid to be referenced from plugins. That makes it clear to you which items might break plugins if you modify them, and makes it easier to tell others writing plugins what things they can reference versus items they should not reference.

There are many other variations of these themes. To decide which approach to take, I'd want to know what you are really trying to get out of using a plugin approach. Who is going to be building the plugins? Are the plugins supposed to be able to update independently of the application? Should the application be able to update without invalidating the plugins? What VIs/classes used in the application should be able to be referenced from the plugins? What were you trying to accomplish with the name prefixing option?
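A toy Python model of why the prefixed build breaks the strict reference: if a connector-pane signature is reduced to a tuple of type names, renaming the class during the build changes the caller's expected signature, while core data types pass through untouched. Real LabVIEW type matching compares full type descriptors; this only illustrates the naming issue, and all names in it are hypothetical.

```python
from typing import AbstractSet, Tuple

# Toy model: a connector-pane signature is just the ordered tuple of type names.
Signature = Tuple[str, ...]


def apply_prefix(signature: Signature, prefix: str,
                 renamed: AbstractSet[str]) -> Signature:
    """Rename the types the build prefixes; core data types pass through."""
    return tuple(prefix + name if name in renamed else name for name in signature)


def strict_ref_opens(expected: Signature, actual: Signature) -> bool:
    """A strict reference opens only if every terminal's type name matches exactly."""
    return expected == actual


renamed = {"Shared Class.lvclass"}
callee = ("Shared Class.lvclass",)                    # plugin, not rebuilt
caller = apply_prefix(callee, "namespace.", renamed)  # application, built with prefix
print(caller, strict_ref_opens(caller, callee))
```

In this model, only a plugin rebuilt against the same renamed names, or an interface made of core types like `("I32", "String")`, still matches.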

Officially, we would encourage you to use the VI Server APIs to do things like this. In some cases we even expose methods on the Application class that can return information about VIs without loading them. The main reason for this stance is that we reserve the right to change our file formats between versions. This is usually done either to support new features or to improve performance, and it has happened many times to various degrees. The last substantial change was to compress several pieces of the VI, because CPUs could decompress the data faster than the larger uncompressed data could be read from disk. I realize not publishing the format is annoying, but it would also be annoying to publish changes to it every release. Try to think of it this way: the time it would have taken us to update the documentation is instead being applied to some awesome feature.

-

Why does LabVIEW give me disk errors before it crashes?

GregR replied to Sparkette's topic in LabVIEW General

There is another possible answer. When we build the EXE and all the DLLs that make up LabVIEW, we do generate symbol files. We don't ship them, but we hold on to them for debugging. Each executable remembers the absolute path where its symbol file was created on our build machines. In many cases our build machines are set up with multiple drive letters rather than just one huge C drive. When NI Error Reporting (NIER) encounters a crash, it uses a Microsoft DLL to look for the symbol files to try to put more details in its log. One of the places this DLL looks is the path stored in the executable. I think this behavior is frequently the result of accesses to drive letters that are not mapped, or that are mapped to removable media. The exact behavior may depend on what the drive is; I know we have had cases where it would request that you insert a disk into an optical drive. In the end there are no ill effects. This is just a matter of unfortunate error handling.
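On Windows, the symbol-file path an executable remembers is typically stored in a CodeView "RSDS" record in the PE debug directory: a 4-byte `RSDS` signature, a 16-byte GUID, a 4-byte age, and a NUL-terminated path. The Python sketch below parses such a record and shows which drive a lookup of that path would touch. Locating the record inside a real EXE/DLL is not shown, the sample path is invented, and whether the Microsoft DLL used by NIER (presumably DbgHelp) probes exactly this path is an assumption.

```python
import struct
import uuid
from pathlib import PureWindowsPath


def parse_rsds(record: bytes):
    """Parse a CodeView 'RSDS' record into (guid, age, pdb_path).

    Layout: b"RSDS", 16-byte GUID (Windows byte order), 4-byte little-endian
    age, then the NUL-terminated UTF-8 path recorded when the binary was linked.
    """
    if len(record) < 25 or record[:4] != b"RSDS":
        raise ValueError("not an RSDS CodeView record")
    guid = uuid.UUID(bytes_le=record[4:20])
    (age,) = struct.unpack_from("<I", record, 20)
    pdb_path = record[24:].split(b"\x00", 1)[0].decode("utf-8")
    return guid, age, pdb_path


def symbol_drive(pdb_path: str) -> str:
    """Drive part of the embedded path, e.g. 'D:', which a lookup of it will touch."""
    return PureWindowsPath(pdb_path).drive


# Hypothetical record, as a build machine with a D: drive might have produced it.
sample = (b"RSDS" + uuid.UUID(int=1).bytes_le + struct.pack("<I", 3)
          + "D:\\build\\labview.pdb".encode("utf-8") + b"\x00")
print(symbol_drive(parse_rsds(sample)[2]))
```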