Everything posted by Runjhun

-

Have you tried a different Board Index value? Index 1 should work. You can also download the AIT soft front panel, which shows the board serial number and other details.

-

Hi, I want to use an NI-9227 in an NI cDAQ-9188 chassis for current measurement. When the load is engaged, an oscilloscope shows an instantaneous current of 25 A lasting microseconds. The card's specifications list an input impedance of 12 mΩ (across AI+ and AI-) and an instantaneous measuring range of 14 A. My concern is whether the card will withstand this in-rush current, or whether the channel or the whole card will be damaged. Any suggestions are welcome. Regards, Runjhun.
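To put rough numbers on the question, here is a minimal Python sketch that computes the voltage drop, peak power and pulse energy in the 12 mΩ input impedance at 25 A. The 10 µs pulse width is a hypothetical value (the post only says "microseconds"), and the function name is made up for illustration. This arithmetic alone does not say whether the module survives; that depends on the overcurrent ratings in the module's datasheet.

```python
def shunt_stress(peak_amps: float, shunt_ohms: float, pulse_seconds: float):
    """Return (voltage drop in V, peak power in W, pulse energy in J)."""
    volts = peak_amps * shunt_ohms          # Ohm's law: V = I * R
    watts = peak_amps ** 2 * shunt_ohms     # P = I^2 * R
    joules = watts * pulse_seconds          # E = P * t for a rectangular pulse
    return volts, watts, joules


PEAK_AMPS = 25.0        # in-rush current seen on the oscilloscope (from the post)
SHUNT_OHMS = 0.012      # input impedance quoted in the post
RANGE_AMPS = 14.0       # instantaneous measuring range quoted in the post
PULSE_SECONDS = 10e-6   # ASSUMPTION: hypothetical pulse width, not from the post

volts, watts, joules = shunt_stress(PEAK_AMPS, SHUNT_OHMS, PULSE_SECONDS)
overrange = PEAK_AMPS / RANGE_AMPS

print(f"voltage drop : {volts:.3f} V")
print(f"peak power   : {watts:.2f} W")
print(f"pulse energy : {joules * 1e6:.1f} uJ")
print(f"overrange    : {overrange:.2f}x the measuring range")
```

With these assumed inputs the pulse is roughly 1.8 times the stated measuring range, so the reading would at least clip during the in-rush even if nothing is damaged.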

-

.net Events in LabVIEW causing memory leak ?

Runjhun replied to Runjhun's topic in Application Design & Architecture

Thanks. I have been reviewing my code for days, but with no luck. Are there any tools available? I am using the Profile Performance and Memory option from the 'Tools' menu, but all the VIs show constant memory usage. Let me know if anything is available.

-

.net Events in LabVIEW causing memory leak ?

Runjhun replied to Runjhun's topic in Application Design & Architecture

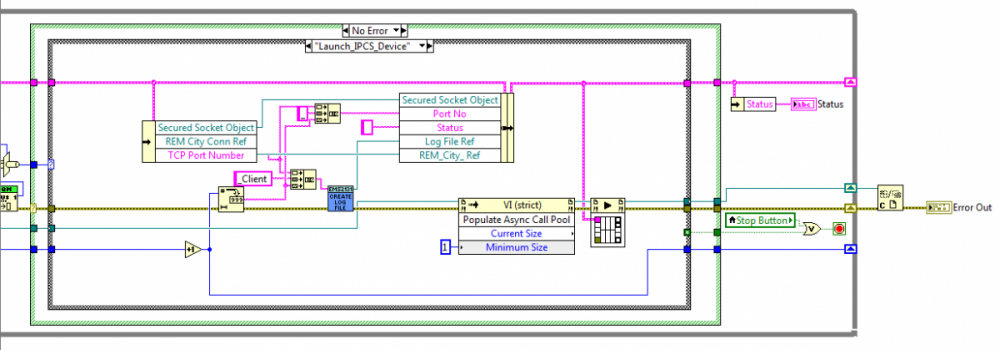

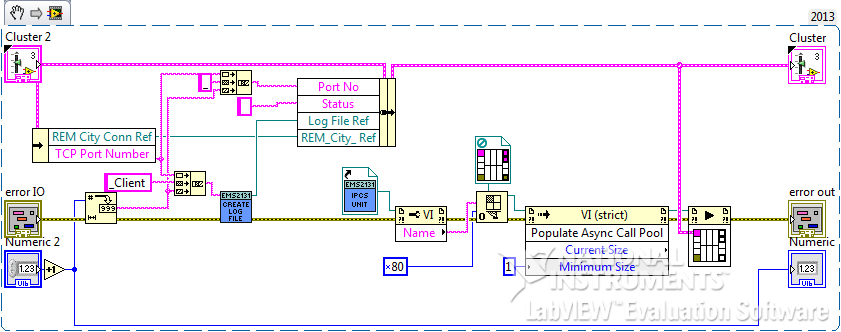

In the Heavy Leakage code, Open VI was called again and again, which is why the memory leak was larger. I was closing the VI reference inside the clone. In the Slow Leakage code, Open VI is kept outside the while loop, which is why the leak is smaller. I am closing the reference, but that is not captured in the screenshot.

-

.net Events in LabVIEW causing memory leak ?

Runjhun replied to Runjhun's topic in Application Design & Architecture

Hi, I ran a few more tests and was able to reduce the memory leak to some extent, but I can still observe it. The Desktop Execution Trace Toolkit does not show any memory leaks, and Profile Performance and Memory shows constant memory usage for all the VIs. I am really unable to narrow down the problem: whether it is LabVIEW, the .NET DLL, or something else.

-

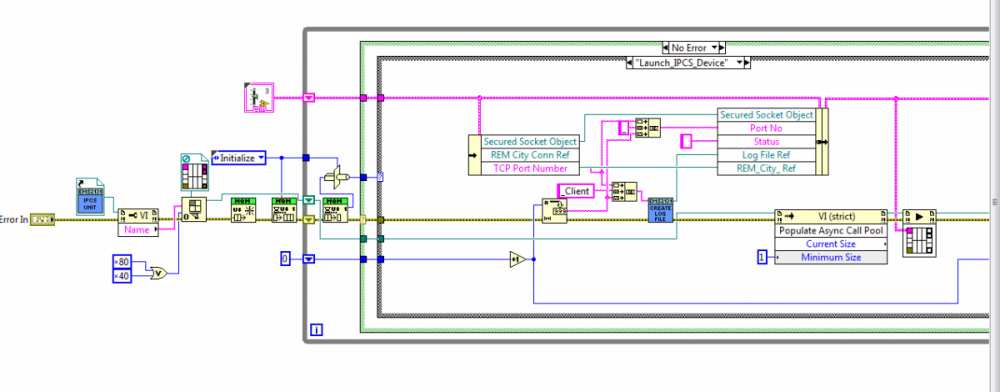

I found the solution to my problem. I had to write a .NET application that triggers an event whenever bytes are received on a socket. As LogMan specified earlier, I used the Register for .NET Event node and passed the socket reference to it. With that, all the events related to that class were populated in the list. So I created a Callback VI that generates a Value (Signaling) event on an integer array, which gives me the bytes read from the socket. I appreciate everyone's suggestions. Regards, Runjhun.
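For readers who think in text code rather than block diagrams, the pattern described above (a callback fires whenever bytes arrive on a socket and hands them over as an integer array) can be sketched in Python. This is only an analogy under stated assumptions, not the LabVIEW or .NET implementation: `listen` and `demo` are hypothetical names, and a local `socket.socketpair()` stands in for the real TCP connection.

```python
import socket
import threading
from typing import Callable, List


def listen(sock: socket.socket, on_bytes: Callable[[List[int]], None]) -> threading.Thread:
    """Start a background reader that calls on_bytes for every chunk received."""

    def worker() -> None:
        while True:
            data = sock.recv(4096)
            if not data:              # empty read: the peer closed the connection
                break
            on_bytes(list(data))      # hand the bytes over as a list of integers

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


def demo() -> List[int]:
    """Send three bytes through a local socket pair and collect them via the callback."""
    sender, receiver = socket.socketpair()   # stand-in for a real TCP connection
    received: List[int] = []
    reader = listen(receiver, received.extend)
    sender.sendall(bytes([1, 2, 3]))
    sender.close()                           # makes the reader see end-of-stream
    reader.join(timeout=5)
    receiver.close()
    return received


if __name__ == "__main__":
    print(demo())
```

The callback does as little as possible (it only forwards the data), which mirrors the Callback VI that just signals the value to the waiting event structure.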

-

.net Events in LabVIEW causing memory leak ?

Runjhun replied to Runjhun's topic in Application Design & Architecture

Thanks for your quick response. I have one doubt about your example: when accessing a .NET DLL or .NET components, won't these references be cleaned up by the .NET garbage collector? I will give more details on the implementation.

-

Hi, I am using a .NET DLL in LabVIEW that generates some events. I register for those events at the start of the application and then wait for them in an Event Structure. All this is done in a re-entrant VI, and I use the 'Start Asynchronous Call' node to launch the VIs dynamically. Everything works fine, but when I run the VIs for a long time, memory usage keeps growing. I am not sure whether this is caused by LabVIEW or by the .NET DLL. Does anyone have any idea about this? Any help would be greatly appreciated.

-

ned, can you please elaborate on this, maybe with an example? I understand that asynchronous calls are difficult to implement in .NET as well as in LabVIEW, but in my project I do not see any way around them. I have to implement a few methods as asynchronous only. Can you please help here? Runjhun

-

Yes. So I am trying to build my own callback, but the problem is that the callback function has an argument of type 'IAsyncResult'. When I select the constructor node for this class, I get the message 'There are no public constructors for this class'. What should I do in this case?

-

Hi, I have gone through the basic working of Event Callbacks, but the examples I have found are all related to UI events. In my case, I am reading some data from a TCP socket and I want to generate an event from that using the callback, so that it becomes an asynchronous operation. The code that I am trying to convert to LabVIEW is the SslStream BeginRead method: http://msdn.microsoft.com/en-us/library/system.net.security.sslstream.beginread(v=vs.100).aspx I have posted my VI as well, but as of now it is broken because nothing is wired to the callback function. Async Read.vi

-

I have done this. You don't get any support from LabVIEW except the .NET constructor nodes. I downloaded the code for the SSL-based TCP server and converted it to LabVIEW code with the help of the System and mscorlib DLLs. Regards, Runjhun.

-

Hi, I want to implement the SslStream BeginRead method in LabVIEW with the System DLL, using the .NET constructor nodes. I have succeeded in doing so for the synchronous Read method. But when I try to modify my code to use the asynchronous methods, it asks me to wire a reference for an Asynchronous Callback node. I haven't worked with Event Callbacks in .NET before, so I don't have much of a clue about the dataflow and other things. Can anyone please help me with creating callbacks in this particular case? Regards, Runjhun.

-

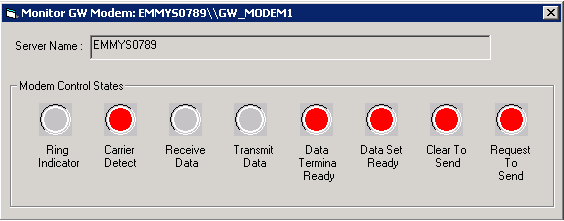

As I mentioned, I am using the TCP/IP protocol. Will VISA properties be accessible through TCP/IP?

-

Hi, I am simulating a modem on the client side over TCP/IP. Is there any way to monitor and control the modem lines as shown in the attached figure? See the control lines of the server modem:

-

Hi Sharon, As far as I know, from version 9 onward there is only the Mobile Module, which was referred to as the PDA Module before that. There is no longer a separate PDA module. Runjhun A.

-

Converting Timestamp to double data

Runjhun replied to Runjhun's topic in Development Environment (IDE)

I have used that, but it is not giving me the required output. If I convert it that way, it gives me the time elapsed from 1.1.1904 until today. I don't want this value to appear as a plot on my graph. The timestamp should be converted to double without changing its values, because I am feeding this input to the Excel Plot Graph function, which accepts X-axis and Y-axis values in a 2-D double array.

Hi, I'm plotting a bar graph that takes only double data as input. My X-axis should be the timestamp and my Y-axis is the double data, but I can't figure out any way to convert the timestamp to double so that I can use it as an input. Any suggestions? Regards, Runjhun A.
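As an aside on the numbers involved: a LabVIEW timestamp converted to double is seconds since 1 January 1904 (UTC), which is why the raw value looks like "time elapsed since 1904". One possible way to get doubles that a spreadsheet can show as dates is to rescale them into Excel date serial numbers. The Python sketch below shows the arithmetic only; the function names are hypothetical, it assumes Excel's default 1900 date system, and it ignores time zones (the result is in UTC). Whether the Excel Plot Graph function treats the X values as date serials is not confirmed by the thread.

```python
from datetime import datetime, timezone

SECONDS_PER_DAY = 86400
# 1904-01-01 to 1970-01-01 is 24107 days (66 years, 17 of them leap years).
LABVIEW_TO_UNIX_OFFSET = 24107 * SECONDS_PER_DAY
# Excel serial number of 1970-01-01 in the default 1900 date system.
EXCEL_SERIAL_OF_UNIX_EPOCH = 25569


def labview_to_unix(lv_seconds: float) -> float:
    """Convert seconds since 1904-01-01 UTC to seconds since 1970-01-01 UTC."""
    return lv_seconds - LABVIEW_TO_UNIX_OFFSET


def labview_to_excel_serial(lv_seconds: float) -> float:
    """Convert seconds since 1904-01-01 UTC to an Excel date serial (in days)."""
    return labview_to_unix(lv_seconds) / SECONDS_PER_DAY + EXCEL_SERIAL_OF_UNIX_EPOCH


def labview_to_datetime(lv_seconds: float) -> datetime:
    """Convert seconds since 1904-01-01 UTC to a timezone-aware datetime."""
    return datetime.fromtimestamp(labview_to_unix(lv_seconds), tz=timezone.utc)


if __name__ == "__main__":
    lv_now = datetime.now(timezone.utc).timestamp() + LABVIEW_TO_UNIX_OFFSET
    print(labview_to_excel_serial(lv_now), labview_to_datetime(lv_now))
```

In LabVIEW terms this amounts to dividing the To Double Precision Float output by 86400 and adding a constant, then formatting the Excel cells or axis as dates.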