Posts posted by Grampa_of_Oliva_n_Eden

-

Nobody has mentioned the obvious feature...

Data Flow

By using graphical representations of operations, typos* and syntax errors are eliminated.

Memory management

No need to check limits, call malloc, or debug access violations. For those cases where the memory allocations are not obvious, LV includes "Show Buffer Allocations" to let us SEE where the buffers are located.

Ben

* Anyone who has read more than one of my posts should understand why I really appreciate the lack of syntax.

-


I'll still give you a chance to withdraw that comment.

In my book, the auto-tool helps someone who doesn't know what they need to do next (switch tools), and the QD helps those who know exactly what they want to do get it done faster.

Or are you just trolling?

Ben

-


You've always been able to change font settings on multiple widgets

Good point! I was thinking of something else but... It is still a nice feature that is handy.

Don't you like it?

Ben

-

I wish to change my answer, now that LV2010 takes about 4 times more time to compile my application it became my favorite feature, and this is why.

The "Worst" thread is getting ahead, so...

How about the multiple object property thing? I used to have to select each widget one at a time and change the font color etc. Not anymore!

Ben

-

I don't understand the auto-tool hate.

1.) It is an option, you can turn it off.

2.) Its function is based on what pixel you are hovering over. Memorize the pixel context, turn down your mouse sensitivity and quit complaining.

3.) Yes, it doesn't switch to the paintbrush tool, but if you bind the tools palette to Ctrl+T (who tiles windows anyway?) then you're in business.

Seriously, auto-tool gets the job done if you know how to use it. Your inability to use a tool doesn't invalidate its usefulness.

It is not hate but frustration. In my case I have to work on everything from LV 5 through 2010, which means sometimes the auto-tool is there and other times it is not, or is a different flavor. If I get in the habit of using auto-tool and then show up at a customer's site and start acting baffled when the auto-tool is not working on an LV 5 machine, I will look like .... not a LV expert.

The tab and space keys work on all versions and I don't even have to think about how many clicks from this to that any more. Besides, I can do the tool selection while the mouse is in transit, so I have the right tool ready BEFORE I get there and not after.

Ben

-

I'm not an auto-tool fan either. It wires when I want to select and you have to select breakpoints and colour chooser anyway.... drives me mad. I'm a Tab and space-bar freak.

Put a mark against auto-tool select for me as well. I have tried it in various releases and, although it is getting better, it eventually guesses wrong, bringing my work to a standstill. Shift-right-click, shut it off, and I am done experimenting until the next release of LV.

Ben

-

Have you asked your local NI representative? I'd think they'd be happy to have an opportunity to evangelize.

If they are C programmers you can start out with the classic "Hello World" example followed by a demo of multithreading without thinking... but what to do with the other 59 minutes...

Ben

-

In my part of the world everyone's winding down for their summer holidays! So I thought I'd start a pair of topics to sing the praises (and raise the sins) of LabVIEW. In this topic:

What design feature in LabVIEW most annoys you? The one that you constantly bang your head against, and wish that it had been developed differently, but it's probably too late to do anything about?

In the code I write, it would have to be the separate Image datatype. Why could it not just be an array? Sure, there are benefits to having a separate type - some very fast image processing code, and handling of image borders. But it is so restrictive if what I want to do is not already coded as an IMAQ routine, for example, taking the square root as part of computing the standard deviation of an image region, or wanting to use 3D images. Either I ignore all the really useful image routines, or I use twice the memory and keep swapping back and forth.

OK, your turn.

1) "annoyed" past tense. Remember the Just in Time Advice thingy that people started calling "Spoolie"?

2) "Shared Variables": let's take the danger of globals and allow the development of race conditions across platforms? While we are at it, we can tell the world they are the answer to everything, and let's lock down all of the functionality so users have to wait half a year to get a fix (maybe), and for bonus points let's make our DSC product dependent on them... Wait... We can move all support for the low level stuff to another continent and implement a 24 hour e-mail delay in replies like "BridgeVIEW? I don't know BridgeVIEW, but it was supposed to be like DSC." Yes, the quagmire of treading between "Yes, I recommend using LV for this project." and "No, I am not going to take NI's advice to use SV's." can leave a mark on you.

Ben

-

Just curious Ben, didn't you ever release new versions of your reuse libraries and what did you do when a cluster would benefit from an update?

...

To the best of my knowledge, the only cluster exposed in my re-use is an error cluster. If NI updated it, our testers SHOULD catch discrepancies. All of the rest use native LV data types. Enums, on the other hand, are a different animal. Jim Kring taught me about using wrappers around my action engines (thank you again Jim!) and that suggestion has helped reduce the coupling between re-use and the code that uses them.
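For readers who think in text languages, here is a rough Python sketch of the wrapper idea. It is only an analogy, not LabVIEW code: the names (`_Action`, `_counter_engine`, `counter_add` and so on) are made up for illustration, and the mutable default argument stands in for an uninitialized shift register. Callers only ever touch the wrappers, so the action enum can change without breaking them.

```python
from enum import Enum


class _Action(Enum):
    """Private action selector; calling code never sees this enum."""
    INIT = 0
    ADD = 1
    READ = 2


def _counter_engine(action, value=0, _state={"total": 0}):
    """Stand-in for an action engine: one routine that owns its state.

    The mutable default `_state` is deliberate here; it plays the role
    of the uninitialized shift register that persists between calls.
    """
    if action is _Action.INIT:
        _state["total"] = 0
    elif action is _Action.ADD:
        _state["total"] += value
    # READ falls through and just reports the current state.
    return _state["total"]


# Public wrappers: the only interface the calling code is coupled to.
def counter_reset():
    return _counter_engine(_Action.INIT)


def counter_add(value):
    return _counter_engine(_Action.ADD, value)


def counter_read():
    return _counter_engine(_Action.READ)
```

If an action is later added or renumbered, only the engine and its wrappers need to change; code that calls `counter_add` or `counter_read` is untouched.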

Aside... to all contributors on this thread...

Please accept my thanks for the interesting discussion. It is a public service that I appreciate greatly.

Thank you!

Ben

-

Control references.

They let us do in sub-VIs what previously had only been possible using attribute nodes. Instead of the BD of user interfaces being dominated by attribute nodes, the diagrams can "look like what they do".

Ben

-


1) By repeatedly dismissing the use of a Tree.vi (which was the recommended standard prior to the introduction of the project in LV 8) you are ignoring the obvious solution to typedef updates. See this thread on the Dark Side.

http://forums.ni.com.../m-p/4328#M3291

(Note that message number. Current threads are numbered 388242. That was message number 3291 on the dark-side.)

2) Re-use code using clusters and LVOOP are different animals, with LVOOP being more powerful. In my shop "re-use" code has a requirement that "it can be used AS-IS with no modifications". So changing type defs to use code again is technically not re-use but recycling, since it requires a set of testing to ensure it works after the change. So to my ear, the idea of how to update a cluster in re-use code is an oxymoron. This is exactly why LVOOP has made a difference in my development. I can re-use parent classes without modifying them, so they meet the requirement of "re-use without changes".

Stepping back and looking at the bigger picture...

I used to look for collections of code at the bottom of my VI hierarchies for re-use candidates.

Now I look at the TOP of my Class hierarchies for re-use.

Ben

-

I agree that it may not technically be a bug if there's some spec somewhere at NI that says this behavior is expected, but it's still a horrible design defect in the LabVIEW development environment. There's no reason Bundle by Name and Unbundle by Name couldn't keep track of the names and break the VI if there were any change in the names, sort of like relinking subvis. The amount of work to fix hundreds of broken subvis pales in comparison to the havoc wreaked by having your tested production code silently change its functionality.

We've learned to be more vigilant about cluster name and order changes, and have added more unit testing, but there's really no excuse for NI permitting this. Re the current discussion, I don't believe the existence of this horrible design bug ought to inform the choice of whether to use OOP or not, though given that NI seems reluctant to fix it, maybe it does factor in.

Jason

Slightly off-topic...

If you really have to delete an element from a type-def'd cluster, I suggest you first change it to a data type that is incompatible with the original version (e.g. change a numeric to a string) with the Tree.vi open. This will break all of the bundle/unbundles and let you find and remove references to that value.

THEN you can delete the value knowing that you have not missed any of them.

The VI Analyzer used to report an error about bundling the same value twice (often the case if you delete a field and LV wires to the next one of the same type).

As far as I know, the bugs that are reported are being fixed.

Ben

-

alright maybe I'll try and ask for a new trend in this thread..who has worked on the coolest project which they can disclose and what was it?

-side question: Is it accurate to say that the majority of LabVIEW developers are freelancers/consultants rather than on salary in a permanent position?

and congrats crelf; sorry I don't know who you are yet (or what you do with your mad LV skills) but your reputation definitely precedes you!

While you are waiting for the cats to line up in a row, you may want to review this thread on the dark side.

Ben

-

cool story Ben thanks for responding..I'm curious, along with the LV code what new method of quality control did you guys come up with in regards to the DVDs?

I can not reveal that info because...

1) Confidentiality,

2) I have no idea (I make the piano, someone else plays it).

Ben

-

Been busy on the road, can't spend much time reading... forgive the interruption.

1) Comparing some of the bugs in type def'd clusters to non-buggy LVOOP just isn't fair. In some versions of LV the type def worked great. (LV 7.1 maybe)

2) AE's that use type defs to encapsulate data and LVOOP are not mutually exclusive and live quite well on the same diagram.

3) I still use a Tree.vi (Greg McKaskle's suggestions became my mandates) and they take care of the issues with VI's knowing about changes.

4) I use a bottom-up/top-down approach to first ID the risk, then turn my attention to the top down.

5) Type defs work just fine if you know the spec ahead of time and changes are additions only. Renaming and removing is hazardous mostly because of the bugs in type-defs.

6) Viewed from the VI Hierarchy, the stuff at the bottom is where I find most of the potential for re-use, and it gets first consideration for implementation in LVOOP. The closer I get to the top, the more specific the code is to the app and therefore the less re-use potential, so it gets the "quick and dirty" AE typed treatment.

7) I still find LVOOP to be more work up front.

8) I am repeatedly surprised by how much I can change quickly if first implemented in LVOOP.

9) I have avoided type-def'd enums on LVOOP classes to avoid issues with upgrades. I use rings and new functionality only gets added at higher ring values. I may choose to convert the rings to enums inside the class itself but I have not made up my mind on that point yet.

10) LVOOP suffers big time from its vocabulary and its insistence on keeping everything soooo arbitrary that developers can't find a toe-hold when starting the trek up the learning curve.

11) My re-use library has grown quite a bit after switching to LVOOP. I was having a hell of a time doing that before using LVOOP.
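The "new functionality only gets added at higher ring values" rule in point 9 can be illustrated with a small Python sketch. This is an analogy under assumptions, not how LabVIEW rings are implemented: `CommandV1`, `CommandV2` and `is_append_only` are hypothetical names. The check confirms that every name from the old version keeps its numeric value in the new one, which is what keeps already-saved callers pointing at the same action.

```python
from enum import IntEnum


class CommandV1(IntEnum):
    """Hypothetical first release of a ring's values."""
    START = 0
    STOP = 1


class CommandV2(IntEnum):
    """Hypothetical upgrade: old values untouched, new one appended."""
    START = 0
    STOP = 1
    PAUSE = 2  # new functionality goes at the next higher value


def is_append_only(old, new):
    """Return True if every old name keeps its old numeric value in `new`."""
    return all(
        name in new.__members__ and new[name].value == member.value
        for name, member in old.__members__.items()
    )
```

A version that inserted `PAUSE` at 0 and shifted the others up would fail this check, which is the text-language equivalent of old callers silently selecting a different action.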

Done interrupting,

Ben

-

It all fits... :-o... if crelf had enough time on his hands to post 5k messages it must be true : he has the coolest LabVIEW job :-D

Congrats!

Well, congratulations to Chris!

Although my job may not be the coolest, it fits my needs very well.

I work as a Senior Architect at Data Science Automation out of their Pittsburgh PA office. My work consists of helping others get their LV project going. Since I consult in many different areas, I get exposed to a lot of different areas of technology and that is one of the bonuses of my job. For a while we had a sign over the entrance to our training room (where we offer the NI training) that read "Through these doors pass some of the greatest minds the world has known." That expression applies to many of my customers, so it feels like I am "living in a Levis Dockers commercial" (don't worry if you are not old enough to understand that ref). The variety of what I get to work on can be found if you check out the DSA web-site (DSAutomation.com) but can be summarized with a short story.

One of my advisors at the University of Pittsburgh was nominated for the Chancellor's Distinguished Teachers Award (not easy for a Physics type to grab that title), so I wrote a letter detailing how my experience with him has changed the world. After reading it, he replied to me saying "Wow, if that is really true, I have to win!" It was and he did! An example of one of the claims was related to the quality control of DVDs and the cost of production. Before we deployed a LV solution, DVDs had a reject rate approaching 70% and the cost of DVDs was about $80. Not anymore.

So I may not have the coolest job, but it is plenty cool for me, since along with the LV related activities I have "ZERO" management and sales responsibilities.

Ben

-


I've had such a utility in mind for a long long time.

Use case : When I start coding a new project, one of my first task is to create all the typedef clusters that will be necessary to store configuration settings, then I create VIs to read/write these "section cluster" from/to INI file (based on OpenG read/write section cluster).

All this clearly can be automated.
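To make the use case concrete for readers outside LabVIEW, here is a rough Python analogy of reading and writing a "section cluster" to an INI file. It is a sketch under assumptions, not the OpenG implementation or the utility being described: `SerialSettings`, `write_section` and `read_section` are invented names, with a dataclass standing in for the typedef cluster and the standard `configparser` module standing in for the INI VIs.

```python
import configparser
import io
from dataclasses import dataclass, fields


@dataclass
class SerialSettings:
    """Hypothetical configuration 'cluster' with one field per setting."""
    port: str = "COM1"
    baud: int = 9600
    enabled: bool = True


def write_section(parser, section, cluster):
    """Store every field of the cluster as a key in one INI section."""
    parser[section] = {
        f.name: str(getattr(cluster, f.name)) for f in fields(cluster)
    }


def read_section(parser, section, cluster_type):
    """Rebuild a cluster from one INI section, converting by field type."""
    sec = parser[section]
    values = {}
    for f in fields(cluster_type):
        if f.type is bool:
            # bool("False") would be True, so use the parser's own converter.
            values[f.name] = sec.getboolean(f.name)
        else:
            values[f.name] = f.type(sec[f.name])
    return cluster_type(**values)


# Round trip through INI text held in memory instead of a file on disk.
original = SerialSettings(port="COM3", baud=115200, enabled=False)
writer = configparser.ConfigParser()
write_section(writer, "serial", original)
buffer = io.StringIO()
writer.write(buffer)

reader = configparser.ConfigParser()
reader.read_string(buffer.getvalue())
restored = read_section(reader, "serial", SerialSettings)
```

Because the read and write routines walk the field list generically, adding a field to the dataclass needs no new I/O code, which is the same repetitive work the proposed utility aims to generate automatically.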

Using the following list as inspiration sources, I started to develop such a utility as a sandbox plugin. I'm planning to post it here sometime soon.

Before that I was wondering if anyone thinks this would be useful for them :-o

Inspiration list :

- the RCF plugin that creates a typedef cluster from a cluster constant,

- the create polymorphic VI example from the code repository

- vugie's scripting sandbox RCF

PS: I have a video to show what it does now but it's too big to be posted here, I'll find a way to share it very soon.

I wrote one of those in LV 7 scripting but dropped it after the demo for my boss crashed. I was motivated by an application where the "clusters" were defined by a committee and changed almost weekly (with more than 60K fields scattered through more than 30 type defs, keeping up with the updates was keeping a very bored developer busy and cross-eyed).

I was able to get away with most of it by creating template VIs and type defs and playing games with renaming and opening after saving the new cluster by the old name ....

The only thing that had to be scripted was creating new actions (by cloning others) and then re-linking bundle/unbundles.

It worked fine at home.

Have fun,

Ben

-

Well, AFAIK methane does not have any significant absorption peaks in the microwave part of the spectrum, so I don't suspect that anything bad would happen. At least as long as there are no metallic objects with sharp edges, which cause sparking...

Disclaimer: This is not my area of expertise so please be gentle.

I think the absorption peak that is critical is for carbon when it is in diamond state rather than the state it is in when bonded to hydrogen. It apparently left a yellowish coating that was "very hard to clean".

Ben

-

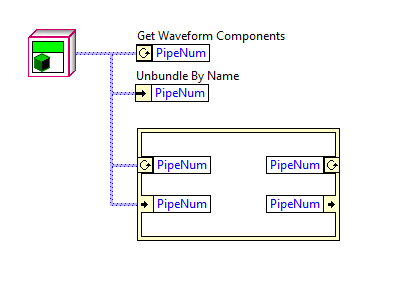

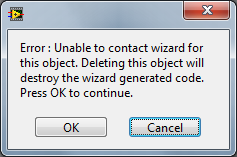

While I was poking around to post this idea on the idea exchange, I discovered something unusual. It's possible to unbundle data from a class wire using the Get Waveform Components prim and with the IPE waveform node. (Note this does NOT allow users access to private class data. It still has to be used in class methods.)

Is there a practical difference between the unbundle cluster and unbundle waveform nodes? I dunno--haven't played with it that much. The circle icon on the waveform nodes is a little rough, so I suspect it's just early prototyping code that was accidentally left in place.

I kind of understand how it could have happened. Class data and waveforms are both clusters, so in one sense it makes sense that either prim can unbundle them. Still, regular clusters can't be connected to the waveform prims, leading me to believe this is unexpected behavior.

"I wonder what would happen if I put methane gas in a microwave and cook it for a while?"

While that experiment sounds like a very bad idea, it turns out that experiment led to learning how to put diamond coatings on machine tools etc.

I suspect nobody else had the intestinal fortitude to try something they suspected would blow up, never realizing this may lead to a gem in the rough.

But I am inclined to side with you that you have wandered into a bug. "Don't do that!".

Ben

-

Hi Everyone. (hello world)

Just wanted to say hello to the community, since I have a few posts on record. I have been trolling (lurking) around LAVA for a few years, but never had time to actively participate. I am fairly new at LabVIEW, but have loved programming/computers/electronics since I could walk/talk. I hope that I will be sticking around for the extended future.

Thanks to all of the admin/regulars that have made LAVA what it is.

But then if we look at the other side I have to tell you about this thing I ran into when I was young but that would be a long time ago and after all, and these days I ...

You don't remember what I was thinking about when I started this reply, do you?

Ben

(Attempting to live up to my user name)

-

Thank you, my friend. I'm very, very happy for this great information. I hope we can keep in contact in case you are interested in the same subject (direct interfacing without external or additional equipment).

regards

I only check in here occasionally when not on the road.

If you are successful with that information, it would be helpful to others (like yourself) sometime in the future if you posted what you learned (good or bad).

Take care,

Ben

-

sorry

could you please explain more

???

Enter "ni.com" in your browser and go to the NI web-site.

At the top of the page, enter the text I posted into the search box.

Hit search.

One of those links should point at a tutorial that will tell you all about the VISA Driver Development Wizard (which will let you develop code to peek and poke the registers on the PCI bus).

Ben

-

DSC used early scripting to compose GUIs. These typically ended up being a bunch of independent loops scattered in the top level VI. I suspect it has something to do with that functionality.

Ben

-

Hi

I have not understood your answer, because you did not answer me: is there direct interfacing or not?

Is there a web site link that can help me with what you say, if it is connected to my question?

thank you

Go to the NI web-site and search for "VISA Driver development Wizard". It will let you poke and peek at the registers.

Not sure if it supports ISA but PCI yes.

No NI hardware required.

Ben

-

Worst LabVIEW design feature (in LAVA Lounge)

And one of my pet peeves...

The EULA for the Beta program that effectively eliminates most serious testing and forces us to use the "dot-zero" version for BETA testing.

Ben