Everything posted by Rolf Kalbermatter


Questions about data storage and RAM resource usage in CompactRIO

Rolf Kalbermatter replied to 王佳's topic in Real-Time

You're mixing and matching two very different things. The 2GB RAM is the physical RAM that the CPU and the Linux OS can use. But the RAM in the FPGA that is used for lookup tables is a very different sort of RAM and much more limited. The 9043 uses the Xilinx Kintex-7 7K160T FPGA chip. This chip has 202,800 Flip-Flops, 101,400 6-input LUTs, 600 DSP slices and 11,700 kbits of block RAM (BRAM), which is what your lookup tables would have to fit into. If you really need these huge lookup tables, you'll have to implement them in the real-time part of the application, not in the FPGA part.
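
A quick back-of-the-envelope check of whether a lookup table can live in the FPGA at all. This is a sketch, assuming 1 kbit = 1024 bits (the Xilinx convention for block RAM) and ignoring BRAM block granularity and any BRAM already used by FIFOs or other parts of the design, so treat the result as an optimistic upper bound. The helper names are hypothetical.

```python
"""Rough check: does a lookup table fit in the BRAM of a Kintex-7 7K160T (cRIO-9043)?"""

# 11,700 kbit of block RAM as quoted above; assumes 1 kbit = 1024 bits.
BRAM_BITS = 11_700 * 1024


def lut_bits(entries: int, bits_per_entry: int) -> int:
    """Raw storage needed by a table with `entries` values of `bits_per_entry` bits."""
    return entries * bits_per_entry


def fits_in_bram(entries: int, bits_per_entry: int, budget_bits: int = BRAM_BITS) -> bool:
    """True if the raw table size does not exceed the budget.

    Optimistic: ignores BRAM block granularity and BRAM used elsewhere in the design.
    """
    return lut_bits(entries, bits_per_entry) <= budget_bits


if __name__ == "__main__":
    print(fits_in_bram(65_536, 16))     # True  (1,048,576 bits)
    print(fits_in_bram(1_048_576, 32))  # False (33,554,432 bits)
```

A table that fails this check belongs on the real-time side, where the 2GB of system RAM is available.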

Get Node ID of a variable using OPC UA client

Rolf Kalbermatter replied to baggio2008's topic in LabVIEW General

You can't do that automatically. The same name could exist in several namespaces. You will have to browse the various namespaces and search for that specific name, which can be done programmatically with the BrowseForward function. Or, if you know which namespace they are all in, you can of course reconstruct the NodeID string yourself, something like ns=1;s=your_string_name or, for a URI namespace, nsu=https://someserver/resource;s=your_string_name
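
The two approaches described above (collect the matches per namespace, or build the string directly when the namespace is known) can be sketched in Python. The browse result is modeled as a plain dictionary of namespace index to string identifiers; `string_node_id` and `find_candidates` are hypothetical helpers, not part of the LabVIEW OPC UA API.

```python
from typing import Dict, List, Optional


def string_node_id(identifier: str, ns: Optional[int] = None, nsu: Optional[str] = None) -> str:
    """Build a string NodeID: 'ns=<index>;s=<id>' or 'nsu=<uri>;s=<id>'."""
    if (ns is None) == (nsu is None):
        raise ValueError("specify exactly one of ns or nsu")
    prefix = f"ns={ns}" if ns is not None else f"nsu={nsu}"
    return f"{prefix};s={identifier}"


def find_candidates(name: str, browsed: Dict[int, List[str]]) -> List[str]:
    """Return every string NodeID whose identifier equals `name`.

    `browsed` is a hypothetical map of namespace index -> string identifiers
    collected by browsing the server. More than one hit means the name alone
    is ambiguous and cannot be mapped to a single node.
    """
    return [string_node_id(name, ns=ns) for ns, ids in sorted(browsed.items()) if name in ids]
```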

Get Node ID of a variable using OPC UA client

Rolf Kalbermatter replied to baggio2008's topic in LabVIEW General

How is that name created? Variables in OPC UA are really only known by their node ID anyway. This can be a string node ID that gives you a name, but what that string would be, if there is one at all, depends entirely on the server configuration. There is no way to hand the server some random string name and have it answer with the node ID that could belong to it, since a node is known by its node ID and nothing else. So the real question is: how did that string name in your spreadsheet file get generated? Is it the string part of a string-type node ID with the namespace part removed?
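
To check whether the spreadsheet names are simply the string part of string-type NodeIDs, a small parser can split a NodeID string into namespace, identifier type and identifier. This is a hypothetical sketch: it only handles the common `ns=`/`nsu=` prefixes with `i=`, `s=`, `g=` or `b=` identifiers, and assumes the namespace URI contains no `;`. A missing namespace prefix is reported as namespace 0.

```python
import re
from typing import NamedTuple, Optional


class NodeId(NamedTuple):
    namespace: str   # namespace index (e.g. "2") or namespace URI; "0" if omitted
    id_type: str     # 'i' numeric, 's' string, 'g' GUID, 'b' opaque
    identifier: str


# Optional "ns=<idx>;" or "nsu=<uri>;" followed by "<type>=<identifier>".
_NODE_ID_RE = re.compile(r"^(?:(ns|nsu)=([^;]+);)?([isgb])=(.+)$", re.DOTALL)


def parse_node_id(text: str) -> NodeId:
    match = _NODE_ID_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a NodeID string: {text!r}")
    _, ns, id_type, identifier = match.groups()
    return NodeId(ns if ns is not None else "0", id_type, identifier)


def string_part(text: str) -> Optional[str]:
    """Identifier of a string-type NodeID with the namespace removed; None otherwise."""
    node = parse_node_id(text)
    return node.identifier if node.id_type == "s" else None
```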

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Ah, OK, that I wholeheartedly agree with. It's probably mostly going through the UI thread, and possibly a lot through the root loop even. It touches many global resources, and that's the easiest way to protect them. Adding mutexes all over the place makes things nasty fast, and before you know it you are in deadlock territory if you are not VERY careful.

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Faster is a bit debatable. 😁 The scripting method took me less than half an hour to find out that it doesn't return what I want. I should have checked that from the start, before getting into a debate with you about its merits. 😴 Parsing the VI file itself is of course an option, except that there are a number of pitfalls. Some newer versions of LabVIEW seem to deflate many of the resource streams and give them a slightly different name. Not a huge problem, but still a bit of a hassle. And the structure of the actual resources is not always cast in stone; NI did change some of it over the course of time. But pylabview definitely has most of the information needed for doing that. 😁 One advantage: it would not be necessary to do that anywhere else in the OGB library, since the original file structure should still be intact at the time the file has been copied to its new location! But it clearly goes beyond the SuperSecretPrivateSpecialStuff that OpenG and VIPM have dared to venture into so far. Considering that I'm currently trying to figure out how to handle LabVIEW Variants from the C side of things, as properly as possible without serious hacks, it's not something that would scare me much, though. 😁

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Let's agree to disagree. 😀 However, some testing seems to indicate that it is not possible with the publicly available functions to get the real wildcard library name at all. It's there in the VI file and the Call Library Node can display it, but trying to read it through scripting will always return the fully qualified name as it was resolved for the current platform and current bitness. No joy in trying to get the wildcard name. That's rather frustrating!

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

It depends on what your aim is. If your aim is to get something working most of the time with as few modifications as possible to the OpenG OGB library, then yes, you want to guesstimate the most likely wildcard name, with some exception handling for specially known DLL names when writing back the Linker Info. If your aim is to use the information that LabVIEW used when it loaded the VIs into memory, you want to get the actual library name from the Call Library Node. And you want to get that as early as possible, preferably before the OGB tool has resaved all the VIs to a new location with a prefixed and/or postfixed name. All these steps have the potential to overwrite the library name in the Call Library Node with the new name and destroy the wildcard name. So while you could try to query the library name in the Write Linker Info step, it may be too late to retrieve that information correctly. That means modifying the OGB library in two places. Is that really so much worse than modifying it in one place with a name that is only correct most of the time?

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

It does. The Call Library Node explicitly has to be configured as user32.dll or maybe user32.*. If the developer enters user*.* instead, it won't work properly when the VI is loaded in 64-bit LabVIEW, because LabVIEW will then look for user64.dll.
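
The behavior described above can be modeled in a few lines. This is a simplified sketch, not NI's implementation: it assumes a trailing `.*` is replaced by the platform's library extension and any other `*` in the name by `32` or `64` according to LabVIEW's bitness, which is how `user*.*` ends up as `user64.dll`. The macOS extension in the table is an assumption.

```python
# Library extensions per platform; the macOS entry is an assumption.
PLATFORM_EXT = {"windows": ".dll", "linux": ".so", "macos": ".framework"}


def resolve_wildcard(name: str, bits: int, platform: str) -> str:
    """Approximate how a wildcard library name in a Call Library Node resolves."""
    if bits not in (32, 64):
        raise ValueError("bits must be 32 or 64")
    if name.endswith(".*"):
        stem, ext = name[:-2], PLATFORM_EXT[platform]
    else:
        stem, ext = name, ""
    # Any remaining '*' in the base name stands for the bitness.
    return stem.replace("*", str(bits)) + ext


if __name__ == "__main__":
    print(resolve_wildcard("user*.*", 64, "windows"))   # user64.dll (does not exist)
    print(resolve_wildcard("user32.*", 64, "windows"))  # user32.dll
    print(resolve_wildcard("lvzlib*.*", 32, "linux"))   # lvzlib32.so
```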

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

It's pretty simple. You want to get the real information from the Call Library Node, not some massaged and assumed file name further down the path. Assume starts with ass and that is where it sooner or later bites you! 😀 The Call Library Node knows which library name the developer configured, and that is the name LabVIEW uses when you do your unit tests. So you want to make sure to use that name and not some guesstimated one. Unfortunately, Read Linker Info only returns the resolved path and file name after the whole VI hierarchy has been loaded and relinked, possibly with VIs and DLLs relocated from anywhere in the search path if the original path could not be found. As far as the path goes, that is very useful, but it loses the wildcard name for shared libraries. The delimited name would have been a prime candidate to return that information. This name exists to return the fully namespaced name of VIs in classes and libraries. It serves no useful purpose for shared libraries though, and could have been used to return the original Call Library Node name. Unfortunately it wasn't. So my idea is to patch that up in the Linker Info when reading it, and later reuse it to update the Linker Info when writing it back to the relocated and relinked VI hierarchy.
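
The read/patch/write-back round trip described above, sketched with plain dictionaries standing in for Linker Info entries. The entry layout (`type`, `path`, `delimited name`) and both function names are hypothetical; the real data lives in LabVIEW's private Read/Write Linker Info clusters.

```python
from pathlib import PureWindowsPath
from typing import Dict, List

# Hypothetical stand-in for one Linker Info entry: {"type", "path", "delimited name"}.
LinkerEntry = Dict[str, str]


def stash_cln_names(entries: List[LinkerEntry], cln_name_by_path: Dict[str, str]) -> None:
    """On read: store the library name configured in the Call Library Node
    in the otherwise unused 'delimited name' of each shared-library entry."""
    for entry in entries:
        if entry["type"] == "shared library" and entry["path"] in cln_name_by_path:
            entry["delimited name"] = cln_name_by_path[entry["path"]]


def restore_before_write(entries: List[LinkerEntry]) -> None:
    """Before write-back: put the stashed (wildcard) name back into the
    file-name part of the relocated path, keeping the new directory."""
    for entry in entries:
        if entry["type"] == "shared library" and entry.get("delimited name"):
            new_path = PureWindowsPath(entry["path"]).with_name(entry["delimited name"])
            entry["path"] = str(new_path)
```

In between the two calls, the builder would relocate files and rewrite the `path` values as it does today; only the file-name part is touched on the way back.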

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Yes, my concern is this: it's OK for your own solution, where you know which shared libraries your projects use and how such a fix might have unwanted effects. In the worst case you just shoot yourself in the foot. 😀 But it is not a fix that could ever be incorporated into VIPM proper, as there is not only no way to know for which package builds it will be used, but also an almost 100% chance that an affected user will simply have no idea why things go wrong and how to fix it. If it is for your own private VIPM installation, go ahead, do whatever you like. It's your system after all. 😀

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

It's my intention to throw the VI over the fence into JKI's garden once it seems to work for me, and see what they will do with it. 😀 More seriously, I plan to inform them of the problem and my solutions through whatever support channels I can find. It does feel a bit like a black hole though. The reason it works the way it does is most likely simply how Read Linker Info in LabVIEW works: they use it and the information it returns, and that does not include the original wildcard name. Also, most of that code is the original OpenG Package Builder library, with some selected fixes since then for newer LabVIEW features. I don't get the feeling that anyone really dug into those VIs much beyond some bug fixes and support for new LabVIEW features since the library was originally published as an OpenG library (mainly developed by Konstantin Shifershteyn back then, with some modifications by Jim Kring and Ton Plomp until around 2008).

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

We are also developers, not just whiners! 😀 Honestly, I did try in the past to get some feedback. It was specifically about supporting more fine-grained target selections than just Windows, Linux and Mac in the package builder, in particular 32-bit versus 64-bit, and not just for the entire package, as VIPM eventually supported, but for individual file groups in the package. I never received any reaction to that. Maybe that was because I wanted to use it with my own OpenG Builder: I just needed the corresponding support in the spec file for the VIPM package installer to honor things properly, and I didn't feel like distributing my own version of the package installer too. That format allows specifying the supported targets, LabVIEW version and type on a per-file-group basis; the VIPM package builder is a bit more limited, to make package configuration easier when building packages. I'm using that spec file feature through my own Package Builder to build a package that contains the shared libraries for all supported platforms and then installs the correct shared libraries for Linux or Windows in the OpenG ZIP (and Lua for LabVIEW) package, depending on the platform it is installed on. Unfortunately, the specification for the platform and LabVIEW version does not seem to support 32-bit and 64-bit distinctions. So I have to install both and then either fix things up to the common name in the Post Install, or use the filename*.* library name scheme.

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Actually, I'm more inclined to hack things here: I don't feel like traversing the entire linker info array recursively if that is done anyway in the next VI in the execution flow! But the current implementation is a bit naive, so I did some refactoring on that too.

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

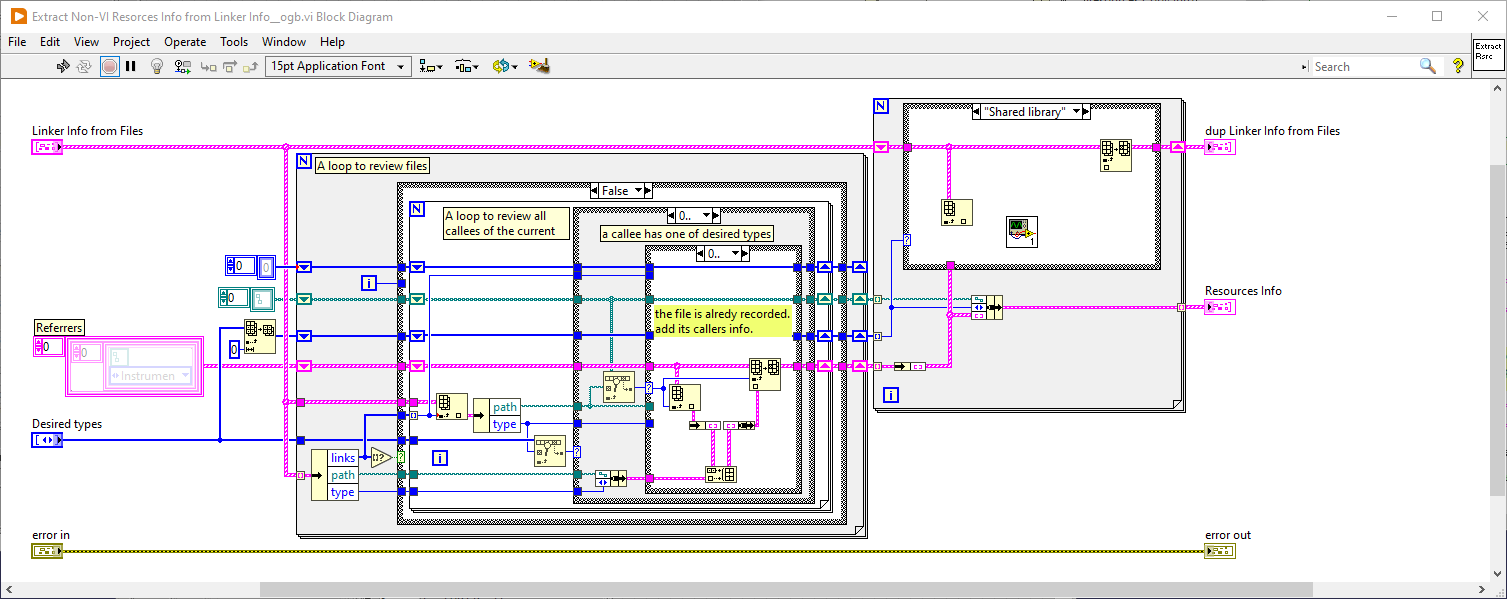

The most proper solution will be to go through all the files retrieved through the Read Linker Info function in Generate Resource Copy Info.vi, find all shared libraries and from there their callers, enumerate the Call Library Nodes on those diagrams, retrieve the library name, and patch up the "delimited name" in the linker info for the shared library with the file name portion of that library name. In Copy Resource Files and Relink VIs.vi, simply replace the file name portion of the path with the patched-up "delimited name" value! The callers are already found in Generate Resource Copy Info.vi, so we "just" have to go through the Resources Copy Info array, pick out the shared library entries and then check their Referrers. This assumes that all the library paths are properly wildcard-formatted before the build process starts, as it will maintain the original library name from the source VIs.
Possible gotchas that still need to be investigated and resolved:
- Is the library name returned by the scripting functions at this point still the wildcard name, or is it already patched with the resolved name while loading the VI?
- Are all the scripting functions to enumerate the Call Library Nodes and retrieve the library name also available in the runtime, or only in the full development environment? Then again, Build Application.vi is probably executed over VI Server in the target LabVIEW version rather than in the VIPM executable runtime, which may make this moot.
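
The steps above, sketched in Python with hypothetical stand-ins: a list of dictionaries for the Resources Copy Info array and a per-VI list of configured Call Library Node names (what the scripting enumeration would return). Matching uses `fnmatch`, which is looser than LabVIEW's own wildcard rule, so this is an approximation. More than one name for the same library means its callers disagree and the entry needs attention.

```python
from fnmatch import fnmatchcase
from pathlib import PureWindowsPath
from typing import Dict, List, Set


def cln_names_for_libraries(
    copy_info: List[dict],
    cln_library_names: Dict[str, List[str]],
) -> Dict[str, Set[str]]:
    """Map each shared-library path to the library names configured in the
    Call Library Nodes of the VIs that refer to it.

    copy_info: hypothetical stand-in for the Resources Copy Info array,
        items like {"path": ..., "type": ..., "referrers": [vi_path, ...]}.
    cln_library_names: hypothetical result of enumerating the Call Library
        Nodes of each VI via scripting, {vi_path: [configured name, ...]}.
    """
    result: Dict[str, Set[str]] = {}
    for item in copy_info:
        if item["type"] != "shared library":
            continue
        resolved = PureWindowsPath(item["path"]).name.lower()
        names: Set[str] = set()
        for vi in item["referrers"]:
            for configured in cln_library_names.get(vi, []):
                # Compare only the file-name part; '*' acts as a glob here.
                configured_name = PureWindowsPath(configured).name
                if fnmatchcase(resolved, configured_name.lower()):
                    names.add(configured_name)
        result[item["path"]] = names
    return result
```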

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Yes, we are kind of talking past each other here, mostly because it is an involved low-level topic. And VIPM is technically pretty innocent in this specific case. It uses the private Read Linker Info and Write Linker Info methods in LabVIEW to retrieve the hierarchy of VIs, relocate them on disk to a new hierarchy (which is quite a feat to do properly), patch up the linker info to correctly point to the new locations and write it back. The problem is that the paths returned by Read Linker Info are nicely de-wildcarded, which is a good thing if you want to access the actual files on disk (the standard file functions know nothing about shared library wildcard names), but a bad thing when writing back the linker info. Read Linker Info itself does not deliver the original wildcard name. It could theoretically do so in the "delimited name" string for shared libraries, as that name is otherwise not very useful for shared libraries, but it doesn't, which is in fact a missed opportunity since the fully resolved name is already returned correctly in the path. The only way to fix that consistently across multiple LabVIEW versions is to go through the array of linker infos after doing the Read Linker Info, recursively determine the callers that reference a specific shared library, enumerate their block diagrams for any Call Library Nodes, retrieve their library names and replace the "delimited name" in the linker info with the file name portion of that! Then, before writing back the Linker Info, replace the file name in the path; alternatively, newer LabVIEW versions have an additional "original Name" string array when writing back the Linker Info, so it might be possible to pass that name in there. Quite an involved modification to the existing OpenG Builder library.

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

This works on Linux too, and did so in the past for the OpenG ZIP library. I'm not really sure anymore what made me decide to go with the 32/64-bit naming, but there were a number of requests for various things, such as being able to not have the VIs recompiled after installation, as that slows down the installation of entire package configurations. And the OpenG Builder was also indiscriminately replacing the carefully managed lvzlib.* library name with lvzlib.dll in the built libraries, so that installation on Linux systems created broken VIs. In the course of trying to solve all these requirements, I somehow came up with the bitness-specific naming scheme using LabVIEW's wildcard support, but I now think that was a mistake. In hindsight I think the "only" things that are really needed are:
- keep the shared library name the same for all platforms (except for the file ending, of course)
- make the simple modification in the VIPM Builder library to replace the shared library file name extension back with the wildcard
- add individual shared libraries for all supported platforms to the OGP/VIP package. But since VIPM only really supports individual system platforms, not the bitness of individual files, the files need to be named differently per bitness in the archive, and both get installed on the target system under their respective names
- create a PostInstall hook that renames the correct shared library for the actual bitness to the generic name and deletes the other one
Of course, with a bit more specific bitness support in VIPM it would be possible to select the shared library to install based on both the platform and the bitness, but that train has probably long departed. I will have to rethink this a bit more for the OpenG ZIP library, and most likely I will also add an extra directory with the shared libraries for the ARM and x64 cRIOs in the installation.
This will require manually installing them on the cRIO target for the library to work there, but the alternative is to:
- have the user add a shared network location containing the corresponding opkg packages to the opkg package manager on the command line of the cRIO
- do an opkg install opgzip<version> on that command line
That's definitely not more convenient at all!!!
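
The PostInstall step from the list above, sketched in Python for illustration only (a real VIPM hook would be a VI). The file names `lvzlib32`/`lvzlib64` and the helper `select_library_for_bitness` are hypothetical, and the target bitness is assumed to be known from the LabVIEW version being installed into.

```python
from pathlib import Path


def select_library_for_bitness(lib_dir: str, base: str, ext: str, bits: int) -> Path:
    """Rename <base><bits><ext> to <base><ext> and delete the other bitness."""
    if bits not in (32, 64):
        raise ValueError("bits must be 32 or 64")
    folder = Path(lib_dir)
    keep = folder / f"{base}{bits}{ext}"
    other = folder / f"{base}{64 if bits == 32 else 32}{ext}"
    target = folder / f"{base}{ext}"
    if not keep.exists():
        raise FileNotFoundError(keep)
    keep.replace(target)  # overwrites a generic copy left by an earlier install
    if other.exists():
        other.unlink()
    return target
```

With this approach the Call Library Nodes can reference the plain `lvzlib.*` name on every platform, so the wildcard only has to cover the file extension.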

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Actually, it's a little more than one edge case. It potentially affects any DLL that your library somehow references, including built-in LabVIEW functions that you use and that call DLL functions somewhere. Those might contain 32 in their name or they might not, and they might also use the 32/64 naming scheme or not, and that would affect them too. Unlikely to happen? Probably, unless you build a huge application framework library. Impossible? Definitely not. It's your tool and you decide how you use it. Just don't come and whine that it destroyed your perfect library. 😀 At least replacing the file ending with a wildcard always works! Unless, of course, you decide to use a different file ending for your special-purpose libraries for whatever reason! That would also have to be caught, by checking that the actual file ending is indeed the expected platform ending and only then changing the file ending to a wildcard. These may sound like esoteric and far-fetched edge cases, but I prefer to program defensively, even if I'm going to be the only end user of my functions. ☠️
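
The defensive check described above (only turn the extension into a wildcard when it really is the platform's shared-library extension) might look like this. A sketch with a hypothetical function name; the extension table, and in particular its macOS entry, is an assumption.

```python
# Expected shared-library extension per platform (macOS entry is an assumption).
PLATFORM_EXT = {"windows": ".dll", "linux": ".so", "macos": ".framework"}


def wildcard_extension(file_name: str, platform: str) -> str:
    """Return file_name with its extension replaced by '.*', but only when the
    extension is the expected one for the platform; otherwise unchanged."""
    stem, dot, ext = file_name.rpartition(".")
    if dot and stem and "." + ext.lower() == PLATFORM_EXT[platform]:
        return stem + ".*"
    return file_name
```

Names with an unusual ending, such as a custom extension or a versioned `.so.5` file, pass through untouched instead of being silently rewritten.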

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

It would seem so. You are looking for a way to hack VIPM to do something specific for your own shared libraries and leave everything else to its own trouble. 🙂 I was trying to find a more general fix, but that seems in fact impossible at this point, unless there is a way to actually have LabVIEW return the original library name for DLLs as filled in the Call Library Node. Hmm, thinking about it, there might be, but it would involve a lot of changes in VIPM. When reading the Linker Info earlier in the Builder, it may be possible to go through all shared library entries, get their caller names (with the link index), do a Find Object on their diagrams for the Call Library Nodes, get the actual library name as filled in the Call Library Node (which hopefully hasn't yet been messed with by loading the VI into memory), and later use that name to reset the linking info.

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

Not likely, as there are many potential problems. For one, properly configured Call Library Nodes for system libraries will report a relative path with just the library name, so your File Open will simply error out, as it can't find the file. (It might actually work by magic if you just happened to select a file in the system directory through the File Dialog, as that changes the application's global current directory, and the Windows user space API is known to try to resolve relative names relative to that current directory. But that only works if the current directory happens to be the System32 directory, which it usually isn't.) An error at this level passed on to VIPM is not likely to fare well with the installation itself. But the main problem is that I do not see how that could work properly when building in 32-bit LabVIEW for DLL files that should keep the 32 in their name.

There is a Match Regular Expression node in LabVIEW that internally uses the PCRE library. It may not behave 100% the same as what you have in Python, but that would actually be more of a problem of the Python implementation. The PCRE library is pretty much the de facto implementation of regular expressions.
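
For illustration, here is the kind of name-based rewrite being discussed, written with Python's `re` module. The pattern only uses constructs that PCRE also supports, so it should behave the same in LabVIEW's Match Regular Expression node (an assumption, not tested there). The second example shows the failure described above: a system DLL such as user32.dll becomes a wildcard name that would resolve to a non-existent user64.dll in 64-bit LabVIEW. The function name is hypothetical.

```python
import re

# Naive rule: "<stem>32.dll" / "<stem>64.so" etc. -> "<stem>*.*"
_BITNESS_NAME = re.compile(r"^(?P<stem>.+?)(?:32|64)\.(?:dll|so)$", re.IGNORECASE)


def naive_wildcard(file_name: str) -> str:
    """Replace a 32/64 suffix and the extension with wildcards, if present."""
    match = _BITNESS_NAME.match(file_name)
    return f"{match.group('stem')}*.*" if match else file_name


if __name__ == "__main__":
    print(naive_wildcard("lvzlib64.dll"))  # lvzlib*.*  (intended)
    print(naive_wildcard("user32.dll"))    # user*.*    (wrong: system DLL)
    print(naive_wildcard("lvzlib.dll"))    # lvzlib.dll (unchanged)
```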


OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

That looks nasty. Checking the PE file header would be one of the last things I would resort to. It also has the potential to fail for many reasons, such as LabVIEW deciding not to convert the wildcard file names in the Linker Info anymore, although I think that's actually unlikely, since those paths are meant to allow access to the files on disk in some way. But it's a private node, so all guarantees are pretty much null and void. Also, I fail to see how your method would work with a DLL called user32.dll that should stay that way when your tool is running in 32-bit LabVIEW. It will correctly detect that the DLL is NOT 64-bit, so it uses 32 as the string to search for in the file name, correctly finds it and replaces it with a *. Boom: when loaded in 64-bit LabVIEW, the VI will look for user64.dll and that will not work. Also, don't read the file in the non-Windows cases, as you don't do anything with it there anyway! The best we may be able to do here is to simply detect whether it is a relative path with only one path element (which it is for system shared libraries correctly set up in the Call Library Node) and skip the renaming back to wildcard names for those. This may require more investigation for other OSes such as Linux. But there, multiarch installations are also usually solved by having different lib directories for the different architectures rather than by renaming the files of standard libraries. Only file names with an absolute path (and hence not installed in one of these directories but located locally to the caller) MIGHT use the 32-bit/64-bit naming scheme. It's a kludge, but VIPM unfortunately has limited support for installing binary files based on the bitness of the target LabVIEW version! Otherwise I wouldn't need that complete file name wildcard voodoo for my libraries.
In the past I tried to solve it by installing both versions of the shared library with different names and having a post-install hook delete the wrong one and rename the other to the expected standard name. But that has other complications in some situations. I may still decide to go back to that. This nonsense is even harder to solve properly.
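
The heuristic proposed above (leave single-element relative names alone) could look like this. A sketch for Windows paths only, using `pathlib.PureWindowsPath`; as noted, Linux would need more investigation. Both function names are hypothetical.

```python
from pathlib import PureWindowsPath


def is_bare_library_name(configured: str) -> bool:
    """True for a relative path with a single element, e.g. 'user32.dll'.

    Such names are left to the OS search order (system libraries) and
    should not be rewritten to a wildcard name.
    """
    path = PureWindowsPath(configured)
    return not path.anchor and len(path.parts) == 1


def may_use_bitness_scheme(configured: str) -> bool:
    """Only libraries referenced with a real path are candidates for renaming."""
    return not is_bare_library_name(configured)
```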

So they would rather pay a yearly subscription (and have their developers lose access once finance decides to stop the recurring payment) than a one-time fee, deciding at the end of each year whether to fork over another 25% of the then current license price to stay in the maintenance program? Sounds like typical MBA logic! 😀 But if you own old perpetual licenses and can prove it, you can actually renew them by simply paying the Maintenance Support Program (MSP) fee of 25% of the full price. NI has publicly stated that, until mid-2025, they will waive all late fees for lapsed MSPs (basically nobody could possibly still have an active MSP at this point, after NI forced everybody onto SaaS after January 1, 2022). The MSP is actually still slightly cheaper than the SaaS fee, and rightly so: people who paid for the perpetual license paid royally for the initial license.


As of yesterday, November 18, perpetual licenses for LabVIEW and LabVIEW+ are officially back and should be directly orderable through the normal LabVIEW order page. But yes, compared to before the subscription was pushed down our throats, the costs have increased significantly. I would say it is almost a 2.5x price increase, if I remember correctly. The current Professional Developer price is higher than what the Developer Suite used to cost back then, and that included LabVIEW Real-Time, LabVIEW FPGA and just about any toolkit there was.


OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

It just occurred to me that there is a potential problem. If your DLLs always contain 32 in their name, independent of the actual bitness, as for instance many Windows DLLs do, this will corrupt the name for 64-bit LabVIEW installations. I haven't checked whether paths to DLLs in the System directory are added to the Linker Info. If they are, and I would think they are, one would have to skip file paths that consist only of a library name (which tells LabVIEW to let the OS find them through its standard search mechanism). This of course still isn't foolproof: DLLs installed in the System directory (not from Microsoft, though) could still use the 32-bit/64-bit naming scheme, and DLLs not located there could use the fixed 32 naming scheme (or a fixed 64 name when building with a VIPM built as a 64-bit executable; I'm not sure whether the latest version is still built as 32-bit).

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

Rolf Kalbermatter replied to PA-Paul's topic in OpenG General Discussions

I checked on a system where I had VIPM 2013 installed and looked in support/ogb_2009.llb. Maybe your newer VIPM has an improved ogb_2009.llb. Also check out the actual post; I updated the image.