Posts: 3,947

Days Won: 274


Everything posted by Rolf Kalbermatter

-

Well, you should definitely try 5.0.3. Versions 5.0.0 to 5.0.2 had various problems with proper installation on specific platforms, and the build tools had messed up the shared library path in the package sources.

-

I'm intrigued. Linux support for versions > 5.0.0 is definitely in the package, and why it doesn't let you select it is not yet clear to me. I have a hunch why it might not work in pre-5.0 versions, but it is strange that this doesn't affect the Windows version. How large is the lvapp image?

-

It could, but generally the unzip functions in the LabVIEW runtime are simply a (somewhat older) version of the ZLIB code plus MiniZIP, the same code I'm using in OpenG ZIP. Of course, OpenG ZIP 5.0 has been updated to use the latest ZLIB sources, an alternate extended version of MiniZIP that supports 64-bit ZIP files and ZIP streams, and Unicode file name support. Since ZLIB and MiniZIP (in the contributed part of ZLIB) are under the extremely liberal ZLIB license, which is similar to the 3-clause BSD license, NI is free to include them directly in the LabVIEW runtime.

-

Did you try the OpenG ZIP library? https://www.vipm.io/package/oglib_lvzip/ There's no guarantee that it works, as I never considered testing that, but it is a quick test.

-

More than 1000 additional posts after the long weekend! I would really appreciate it if Michael could step in for a minute here. Do you have any idea whether he is still around?

-

The easiest solution of all: likely not extremely fast, though fairly fast, but it should do the job! https://forums.ni.com/t5/LabVIEW/Any-GUID-generating-VI-available/m-p/4392240#M1293609 Even this should be considered LabVIEW attic territory, as indicated by the poop-brown color of the VI Server node, but with considerably fewer rusty nails. Worst case, it simply stops working one day. It's most likely using the MD5 code that the old GetMD5Hash() was using. Anything else would traditionally have been at least potentially troublesome to implement on non-Windows platforms without the help of extra library installations such as OpenSSL, Mbed TLS, or coreutils.
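
For context on what such a GUID actually is: an RFC 4122 UUID is 16 bytes in which 4 bits hold a version number and 2 bits a fixed variant. Version 4 fills the remaining bits with random data, while version 3 uses the MD5 hash of a namespace plus a name, which would fit the MD5 guess above. The C sketch below only does the bit setting and formatting; it assumes the caller supplies the 16 input bytes (from a good random source, or an MD5 digest), and `uuid_format` is a hypothetical helper, not anything the linked VI exposes.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Forces the RFC 4122 version and variant bits onto 16 input bytes and writes
   the canonical 8-4-4-4-12 lowercase hex form (36 chars + NUL) into out. */
static void uuid_format(const unsigned char in[16], unsigned version, char out[37]) {
    unsigned char b[16];
    assert(version >= 1 && version <= 5);
    memcpy(b, in, sizeof b);
    b[6] = (unsigned char)((b[6] & 0x0F) | (version << 4)); /* high nibble = version */
    b[8] = (unsigned char)((b[8] & 0x3F) | 0x80);           /* top two bits = 10 (RFC 4122 variant) */
    snprintf(out, 37,
             "%02x%02x%02x%02x-%02x%02x-%02x%02x-%02x%02x-%02x%02x%02x%02x%02x%02x",
             b[0], b[1], b[2], b[3], b[4], b[5], b[6], b[7],
             b[8], b[9], b[10], b[11], b[12], b[13], b[14], b[15]);
}
```

For example, 16 zero bytes with version 4 yield 00000000-0000-4000-8000-000000000000, which shows exactly which bits the format reserves; everything else comes from the random or hashed input.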

-

Well, that's what you get for strolling in the LabVIEW attic. It seems the prototype and functionality as I posted them were correct from LabVIEW 5.x until some later version, but NI changed them at some point for whatever reason. Since it was an undocumented API, they are of course free to do that anytime they like (and this is also one of the main reasons not to document such APIs). The only ones who could get hurt are NI themselves, if they happen to forget to adapt a caller somewhere to the new function interface (and of course people like you and me who try to sneak in anyway). That's also why this is called the LabVIEW attic, with many rusty nails lying in the open.

-

Believe me, while it is certainly possible, this is one area where you do not want to use LabVIEW to implement it. There are two main reasons. First, it's a lot of bit twiddling, and while LabVIEW supports rotate and boolean operators on numbers and is quite good at it, there is an inherent overhead, since LabVIEW does some extra safekeeping to avoid errors that you can easily make in programming languages like C. This overhead is typically negligible, except when you do it in a loop 8 × many millions of times. Then these small extra checks really add up. So a LabVIEW inflate or deflate algorithm will always be significantly slower than the same algorithm in C compiled as optimized code, because the boolean and rotate operations themselves take an extremely short time to execute, and the extra checks LabVIEW performs each time to be always safe are significant in comparison.

The second reason is that bit twiddling is extremely easy to get wrong. You may think you have copied the corresponding C code exactly, but in most cases there are more or less subtle differences that will turn your results upside down. Debugging on that level is extremely frustrating. I have run code many, many times in single-step mode trying to find the error in those binary operators, and missed the actual issue many times until finally nailing it. It's an utterly frustrating experience, and long ago it made me promise myself not to implement compression, decompression, or encryption routines in LabVIEW, except the most trivial ones. ZLIB, however, is not trivial, not at all!
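
As an illustration of the kind of trap involved: DEFLATE (RFC 1951) packs header fields and extra bits starting with the least significant bit of each byte, but packs Huffman codes starting with the most significant bit of the code. Below is a minimal C sketch of an LSB-first bit reader, the sort of tiny primitive where one wrong shift or mask silently corrupts everything downstream. It is not taken from ZLIB; `BitReader`, `br_init` and `br_get_bits` are illustrative names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    const uint8_t *buf; /* compressed input */
    size_t len;         /* number of input bytes */
    size_t pos;         /* index of the next byte to load */
    uint32_t bitbuf;    /* pending bits; bit 0 is the next bit of the stream */
    unsigned bitcnt;    /* number of valid bits in bitbuf */
} BitReader;

static void br_init(BitReader *br, const uint8_t *buf, size_t len) {
    br->buf = buf;
    br->len = len;
    br->pos = 0;
    br->bitbuf = 0;
    br->bitcnt = 0;
}

/* Returns the next n bits (n <= 24) as an LSB-first value, or -1 if the input
   runs out. New bytes are appended above the bits already pending. */
static int32_t br_get_bits(BitReader *br, unsigned n) {
    assert(n <= 24);
    while (br->bitcnt < n) {
        if (br->pos >= br->len) return -1;
        br->bitbuf |= (uint32_t)br->buf[br->pos++] << br->bitcnt;
        br->bitcnt += 8;
    }
    uint32_t val = br->bitbuf & ((1u << n) - 1u);
    br->bitbuf >>= n;
    br->bitcnt -= n;
    return (int32_t)val;
}
```

Fed the two bytes 0x03 0x00 (a raw DEFLATE stream for empty input using fixed Huffman codes), it returns BFINAL = 1, BTYPE = 1 and then seven zero bits, which is the fixed Huffman end-of-block code. Because that code is all zeros, the bit order happens not to matter here; a real inflater must also handle the MSB-first Huffman codes, which is exactly where copied code tends to go subtly wrong.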

-

Yes, that function is used for generating hashes in LabVIEW itself for various things, including password protection. It was never meant for hashing huge buffers and is basically the standard open source MD5 code that has been floating around the internet for as long as the internet has existed. It is similar to what the OpenSSL code would do, although OpenSSL is likely a little more optimized and streamlined. This function has existed in LabVIEW since at least 5.0 or thereabouts and has likely never been looked at again since, other than being used. You could of course call it and dig in the LabVIEW attic with its many rusty nails, but why? Just because you can does not mean you should! 🙂 If you absolutely insist, you can try it yourself. This is the prototype: MgErr GetMD5Digest(LStrHandle message, LStrHandle digest);
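
The prototype above takes two LabVIEW string handles. For anyone who insists, here is a C sketch of what an LStrHandle is in memory, assuming the LStr layout declared in LabVIEW's extcode.h (a 32-bit byte count followed by the bytes, no NUL terminator). Plain malloc is only a stand-in so the sketch runs without LabVIEW; real external code must use the LabVIEW memory manager, since LabVIEW may resize or dispose of these handles itself. Whether GetMD5Digest() returns raw bytes or text in `digest` is not stated here, so the hex helper is an assumption, and `lstr_new`, `lstr_free` and `lstr_to_hex` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Local mirror of the LStr layout from extcode.h. */
typedef struct {
    int32_t cnt;          /* number of bytes that follow */
    unsigned char str[1]; /* cnt bytes, not NUL-terminated */
} LStr, *LStrPtr, **LStrHandle;

/* Builds a handle (pointer to pointer) holding a copy of data.
   malloc stands in for the LabVIEW memory manager (e.g. DSNewHandle). */
static LStrHandle lstr_new(const void *data, int32_t len) {
    assert(len >= 0);
    LStrHandle h = malloc(sizeof *h);
    if (h == NULL) return NULL;
    *h = malloc(sizeof(LStr) + (size_t)len);
    if (*h == NULL) { free(h); return NULL; }
    (*h)->cnt = len;
    if (len > 0) memcpy((*h)->str, data, (size_t)len);
    return h;
}

static void lstr_free(LStrHandle h) {
    if (h != NULL) { free(*h); free(h); }
}

/* Writes the handle's bytes as lowercase hex; out needs 2 * cnt + 1 chars. */
static void lstr_to_hex(LStrHandle h, char *out) {
    static const char digits[] = "0123456789abcdef";
    int32_t n = (*h)->cnt;
    for (int32_t i = 0; i < n; i++) {
        out[2 * i] = digits[(*h)->str[i] >> 4];
        out[2 * i + 1] = digits[(*h)->str[i] & 0x0F];
    }
    out[2 * n] = '\0';
}
```

The double indirection is the point: LabVIEW owns the inner pointer and may move the data, which is why external code dereferences the handle each time instead of caching the inner pointer.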

-

Not for me for most accounts, but that is a minor nuisance. I just checked, and funnily enough I can view it on yours. But the current situation really breaks the camel's back. I refuse to be racist, but the obvious Indian influence in it simply makes me very angry. I propose to simply take it offline until something can be done about it. As it is, this is worse than useless.

-

Obviously, disabling account creation does nothing! They are currently creating new accounts about every 10 minutes and then start spamming a few minutes later. There are still some active logins here and there from accounts with fairly telling names that have obviously been banned already: 0 recent activity, created a few days ago, but supposedly having tens of posts according to the account page. What seems to be disabled is the Recent Profile Visitors block on everybody's account page. Not sure what that helps with, but it definitely is not the solution. At the current rate, banning accounts after they have posted dozens or hundreds of messages is simply not a manageable solution anymore. If this can't be changed, this forum is dead.

-

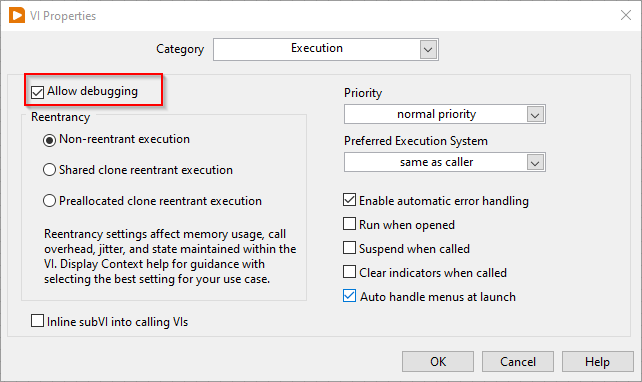

Confusion about VI debugging information

Rolf Kalbermatter replied to mooner's topic in LabVIEW General

-

It's starting to get really ridiculous. They seem to have optimized their spam bots to the point where they spam two forums with 120 posts so far, and others incidentally, most likely as a trial to get them flooded too. If this continues like this, you can shut down this forum altogether! Nobody comes here to see this shit, and it definitely drives away the few who do come here. I really hope Michael can find his admin login credentials and do something about this. Please let me ban Baba's this and Baba's that, visha's and all those other accounts. Pretty pretty please!!!!!!!!!

-

I do note that in the list of currently logged-in users there are regularly users whose names look suspicious, and when I check them out they supposedly have 20, 40 or more posts but no current activity. Is this part of keeping them in the dark after banning them? Let them log in anyway, and maybe even create posts that are then immediately /dev/nulled? I really wish Michael could throw that switch to require moderator approval of the first 3 or so posts for every new user! It's not so nice to log in on LavaG and find no new posts by anyone, as has regularly been the case lately, but I prefer that many times over finding a whole list of spam posts about call girls, drugs, counterfeit money and secret societies that are waiting for nothing else than to heap money on anyone willing to sell their soul to them.

-

Really????? 16 pages of spam and still going!! Can we not just disable any kind of account creation for the time being, until this hole is fixed? Wouldn't this work too? https://invisioncommunity.com/forums/topic/473954-spam-attack-today/?do=findComment&comment=2943240

-

It's been getting a bit quiet on that front in recent years, but searching for PostLVUserEvent() on Google (and more specifically on the NI forum and here on LavaG) should give you a number of posts to study that will give you some ideas. But one warning: function pointers are usually covered in one of the last chapters of every C programming manual, since they are quite tricky to understand and use for most C programmers. It's definitely not a topic for the faint of heart.

-

LabVIEW can NOT generate a callback function pointer. That would require a very complex stack configuration dialog that would make the current Call Library Node configuration dialog look like child's play. Instead, you have to write a wrapper DLL in C that provides the callback function and calls the driver function to install that callback, and the callback function then needs to translate the actual callback event into a LabVIEW-compatible event such as an occurrence or a user event. The Callback tab in the Call Library Node configuration is, despite its name, NOT what you are looking for. It's not for configuring a callback function that your DLL can call, but for configuring callback functions that the DLL provides and that LabVIEW calls into when it loads the VI containing the function node (Reserve), when it unloads that VI (Unreserve), and when the VI hierarchy containing that function node is aborted (Abort). It's the opposite of what you are trying to do here.
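
A minimal C sketch of that wrapper pattern, assuming a hypothetical driver API (`driver_install_callback` and `driver_simulate_event` are made up for illustration). So that it compiles without LabVIEW, `MgErr`, `LVUserEventRef` and `PostLVUserEvent()` are replaced by local stand-ins; in a real wrapper DLL they come from extcode.h and the LabVIEW runtime, and the exported `InstallEventForwarding` (also a hypothetical name) would be called from a Call Library Node with the user event refnum passed in.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* ---- Stand-ins for extcode.h, only so this compiles without LabVIEW ---- */
typedef int32_t MgErr;
typedef uint32_t LVUserEventRef;
static int32_t g_last_posted = -1; /* records what the stub would have posted */

/* Stub: the real PostLVUserEvent() copies *data into the user event queue. */
static MgErr PostLVUserEvent(LVUserEventRef ref, void *data) {
    (void)ref;
    g_last_posted = *(const int32_t *)data;
    return 0;
}

/* ---- Hypothetical driver API that expects a C function pointer ---- */
typedef void (*DriverCallback)(int32_t status, void *user_data);
static DriverCallback g_driver_cb = NULL;
static void *g_driver_user_data = NULL;

static int32_t driver_install_callback(DriverCallback cb, void *user_data) {
    g_driver_cb = cb;
    g_driver_user_data = user_data;
    return 0;
}

/* Simulates the driver firing its callback (in reality often from another thread). */
static void driver_simulate_event(int32_t status) {
    if (g_driver_cb != NULL) g_driver_cb(status, g_driver_user_data);
}

/* ---- The part the wrapper DLL actually provides ---- */
static LVUserEventRef g_event_ref; /* refnum handed over from the diagram */

/* The C callback the driver calls: it only translates the event into a LabVIEW user event. */
static void forward_to_labview(int32_t status, void *user_data) {
    LVUserEventRef ref = *(LVUserEventRef *)user_data;
    PostLVUserEvent(ref, &status);
}

/* Exported function for the Call Library Node; the user event refnum is passed by pointer. */
int32_t InstallEventForwarding(LVUserEventRef *ref) {
    assert(ref != NULL);
    g_event_ref = *ref;
    return driver_install_callback(forward_to_labview, &g_event_ref);
}
```

A real wrapper would also need a matching uninstall function that is called before the user event is destroyed; otherwise the driver may keep calling a function in a DLL that LabVIEW has since unloaded. The Unreserve or Abort callbacks described above could be one place to do that cleanup.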

-

It's a valid objection. But in this case it happens with the full consent of the website operator; even more than that, NI pays them for doing it. There are a few things Cloudflare can do, such as deflecting DoS attacks, that can make it look attractive. Personally, I mostly notice it as a delay when opening a Cloudflare "protected" website. In the case of NI this can sometimes amount to an infinite delay, since the Cloudflare servers seem to get caught in an infinite loop trying to decide whether I'm a bot, a hacker or a harmless visitor. Usually, aborting the page load and forcing a refresh results in immediate success. A few times per year Cloudflare decides that editing a post because of a typo and then resubmitting it is a very dangerous sign of web server flooding. My guess is that they get the timing wrong at those times, and the analyzer thinks the resubmit 30 seconds later was really 30 ms later, so it can't possibly have come from a human. Of course, these services are only tolerated when they are 100% invisible; otherwise there will always be people feeling bad about them. And things can go bad, as the recent CrowdStrike incident has proven too.

-

Some Zeruzandah guy is really starting to get on my nerves. 👿

-

😁 Well, I can sometimes be tempted. And to be honest, selecting that menu option when I'm already looking at the message really takes just one second. It's in fact a lot quicker than selecting the report option and writing "SPAM" in it. Now, spending substantial time on their forum is another topic that could spark a lot of discussion. 😂

-

Connecting Arduino to raspberry pi

Rolf Kalbermatter replied to mustafa aljumaili's topic in LabVIEW Community Edition

You can have a LabVIEW VI (hierarchy) run on the RPi, but you will have to add the RPi as a target to your LabVIEW project. From there you can create a program in pretty much the same way as you would for one of the NI RIO hardware targets such as the sbRIO, cRIO, or myRIO. However, the LabVIEW runtime on the RPi runs in its own chroot environment (basically a lightweight Linux virtual machine running a Debian OS variant) and is based on the same runtime that NI uses for the ARM-based RIO hardware targets. And this runtime has no UI. There is no way to have the VIs running on the RPi show their front panels on the RPi display. You would have to rely on indirect user display options such as web services or similar, which a web service client then displays on the actual RPi host or on any other network-connected device.

-

Maybe there is an option in the forum software to add some extra users with limited moderation capabilities. Since I was promoted to shiny knight on the NI forums, I have one extra superpower there: I can not just report messages to a moderator but actually mark them as spam. As I understand it, this hides the message from the forum and reports it to the moderators, who then have the final say on whether the message is really bad. Something like this could help to at least keep the forum usable for the rest of the honorable forum users, while the moderators get a well-deserved night of sleep and start the morning with fresh energy. 🤗 It would only take a few trusted users around the globe to keep the forum fairly neat (unless, of course, a bot starts to massively target the forum; then marking one message at a time is a pretty badly scaling solution).

-

Not quite, they don't use NXG to create these applications. NXG is truly and definitely dead. But they do use the corresponding C# frameworks and widget libraries they created for NXG.

-

Could it be that the filter event only works for LabVIEW file types? There is no reason to let an application filter file types that LabVIEW doesn't know how to handle anyway. They will simply be dropped into the "round archive folder" /dev/null anyhow if the normal event doesn't capture them.

-

I was fairly sure that there actually was an application event for file open events passed from the OS to the running application, but I seem to have dreamed that up. It shouldn't be too difficult to add, although it might have some implications. It would have to be a filter event that can check the file name and then indicate whether LabVIEW should handle it further (for file types LabVIEW knows), or whether it should be ignored (potentially handling it yourself). Ahh, it's super secret private special stuff! And it seems to have trouble in some versions newer than 8.2, although Ton says he got it to work again in 2009.