Posts: 159

Days Won: 11


Everything posted by bjustice

-

I now understand why James made this SubVI for his JSONtext library. I hope he built it with scripting. Brute force, but it seems to work well.

-

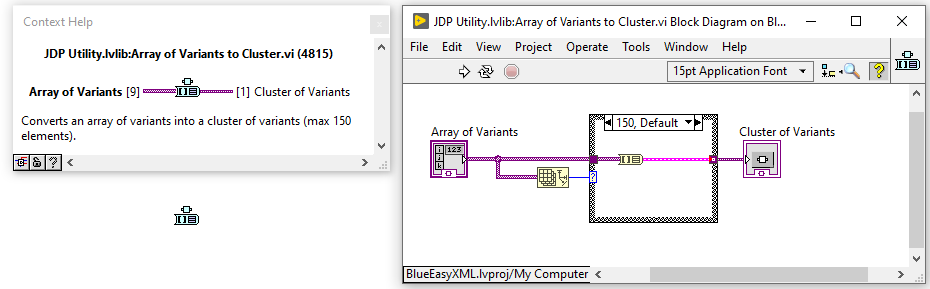

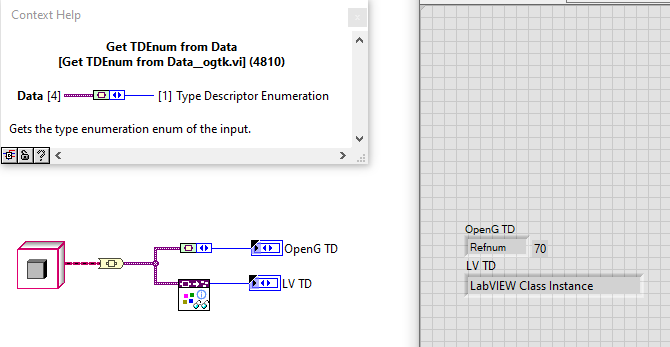

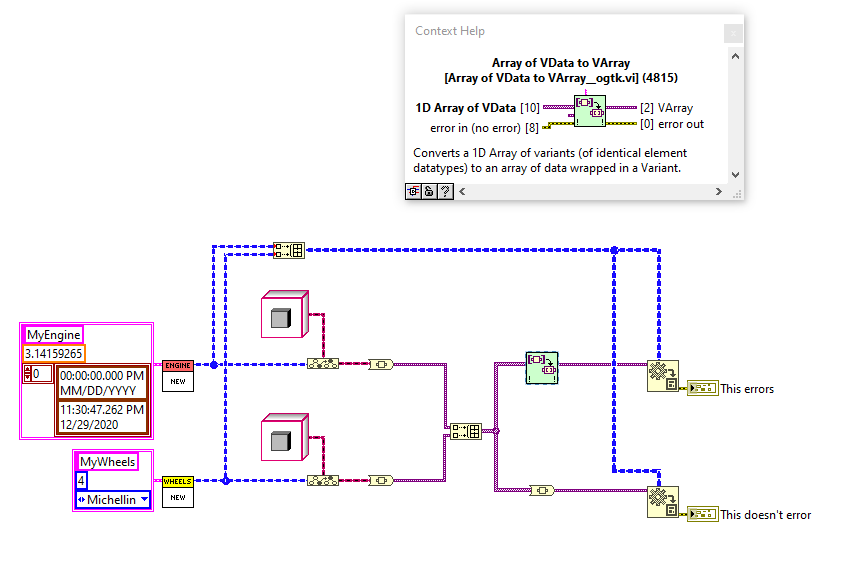

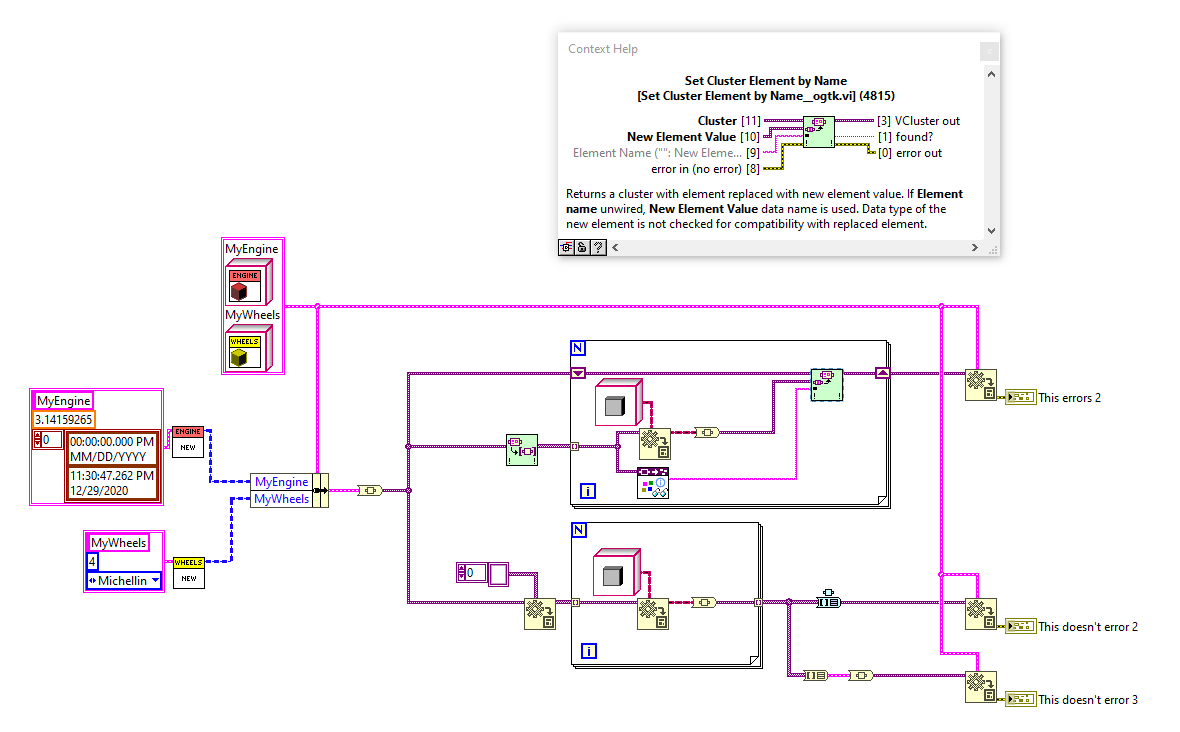

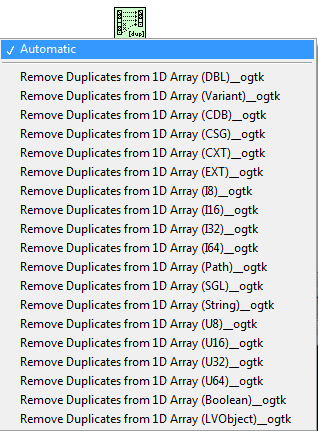

I did a deep dive into JSONtext, JKI JSON, and EasyXML. I made a few interesting observations after doing a bunch of work to integrate object serialization into a branch of these libraries. Basically, beware of using the OpenG LabVIEW Data palette SubVIs when working with LabVIEW objects:

1) The OpenG type descriptor (TD) doesn't include "LabVIEW Class Instance" (or any of the newer data types, such as maps and sets).
2) In the following code, note how the top method produces an error, but the bottom method is fine:
3) In the following code, note how the top method produces an error, but the bottom method is fine:

-

I feel the need to highlight the absurdity of Shaun answering this question within 4 hours... about a post he made 10 years ago. This community is amazing.

-

Which is the best platform to teach kids programming?

bjustice replied to annetrose's topic in LabVIEW Community Edition

Upvote for Scratch. I used to teach Scratch to 12-year-old kids at a summer robotics camp. It's very approachable, and it's fun to make games with. Lego Mindstorms is another good one.

-

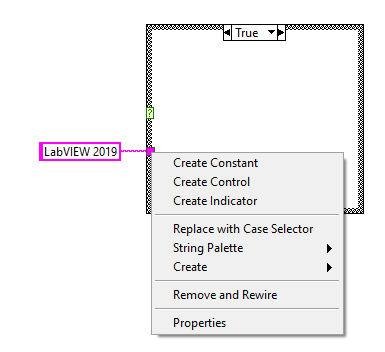

LabVIEW 2019 "create constant" right click menu

bjustice replied to bjustice's topic in LabVIEW General

Riiight, so Hooovahh is hitting the nail on the head here with my thoughts exactly. It would have been nice if the plugin menu were a bit more intelligent, so that it wouldn't show "create constant, create indicator, create control" in these situations. The consistency argument is interesting and not something that I had considered. Give me 2 months with this new change, and I'll report back on how my muscle memory and opinion have changed. I might indeed be the old man yelling at a cloud right now. I'm trying to beat Darren for fastest programmer in the world, so muscle memory plays a lot into things like right-click menus and Quick Drop names.

-

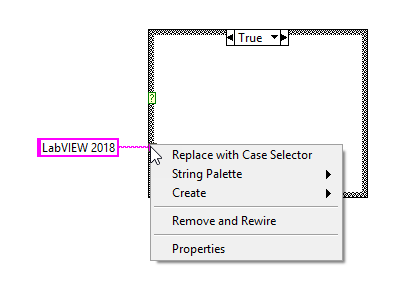

I've started working in LabVIEW 2019 SP1, and I've observed that the block diagram right-click menu has changed such that "create constant/control/indicator" are always at the top for almost every block diagram action. Example:

If I recall, this was a pretty popular right-click menu plugin that a lot of LAVA folks were using in prior versions of LabVIEW. It looks like NI simply cemented the idea in base LabVIEW 2019. I'm just curious, though: does anyone here find this annoying? It's really wreaking havoc on my muscle memory. Furthermore, in situations like the one above with a case structure, I'd much prefer "Replace with case selector" to always be the top item.

-

I've not run into a situation where I've needed to get the actual image data into LabVIEW; I've mostly needed to handle file streaming to/from disk. It would be easier to use files on disk as a middleman between LabVIEW and FFMPEG. If you need a live streaming solution into LabVIEW, then you probably need a different technology.

I've never run into a situation where I've needed to send the "q" command. The ctrl-c command exits properly for everything I've needed, even for bad commands or non-connected cameras. I've not run into any crashing.

I've attached an example of a piece of code that will stream an RTSP stream to a file on disk. It also contains a copy of an FFMPEG build that I've found to be pretty stable. I'm not sure that I fully understand what you're trying to do.

distro.zip
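For readers driving FFmpeg from a text language rather than LabVIEW, here is a minimal Python sketch of the same disk-streaming workflow, assuming an `ffmpeg` executable on PATH. It is not the code in distro.zip: the helper names and the camera URL/file name in the usage line are hypothetical. It copies the RTSP stream to disk without re-encoding and stops the recorder with the Ctrl-C equivalent (SIGINT) on POSIX systems, matching the "ctrl-c rather than q" approach described above.

```python
import signal
import subprocess
import sys


def build_rtsp_record_cmd(url: str, out_path: str, ffmpeg: str = "ffmpeg") -> list[str]:
    """Return an ffmpeg argument list that copies an RTSP stream into a file on disk."""
    return [
        ffmpeg,
        "-hide_banner",
        "-rtsp_transport", "tcp",  # pull the RTSP stream over TCP instead of UDP
        "-i", url,
        "-c", "copy",              # stream copy: no decoding or re-encoding
        "-y",                      # overwrite out_path if it already exists
        out_path,
    ]


def start_recording(url: str, out_path: str) -> subprocess.Popen:
    # stdin is not used for interactive commands; the process is stopped with a signal instead of "q".
    return subprocess.Popen(build_rtsp_record_cmd(url, out_path), stdin=subprocess.DEVNULL)


def stop_recording(proc: subprocess.Popen, timeout: float = 10.0) -> int:
    """Stop the recorder the way Ctrl-C would, wait for it to exit, and return its exit code."""
    if sys.platform == "win32":
        # Sending a true Ctrl-C to a Windows console child is more involved;
        # terminate() is an abrupt stand-in and may leave the output file unfinalized.
        proc.terminate()
    else:
        # ffmpeg treats SIGINT as a request to stop and finish writing the output file.
        proc.send_signal(signal.SIGINT)
    return proc.wait(timeout=timeout)
```

Typical use would be `proc = start_recording("rtsp://camera.example/stream", "capture.mkv")`, followed later by `stop_recording(proc)`. Stream copy keeps CPU load low because FFmpeg only remuxes the packets.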

-

[CR] Hooovahh's Tremendous TDMS Toolkit

bjustice replied to hooovahh's topic in Code Repository (Uncertified)

Thanks, Hooovahh! I've used your TDMS concatenate VIs in a few places. It's really convenient to see them wrapped in a VIPM package with a few other tools. I'll install this right alongside Hooovahh Arrays.

-

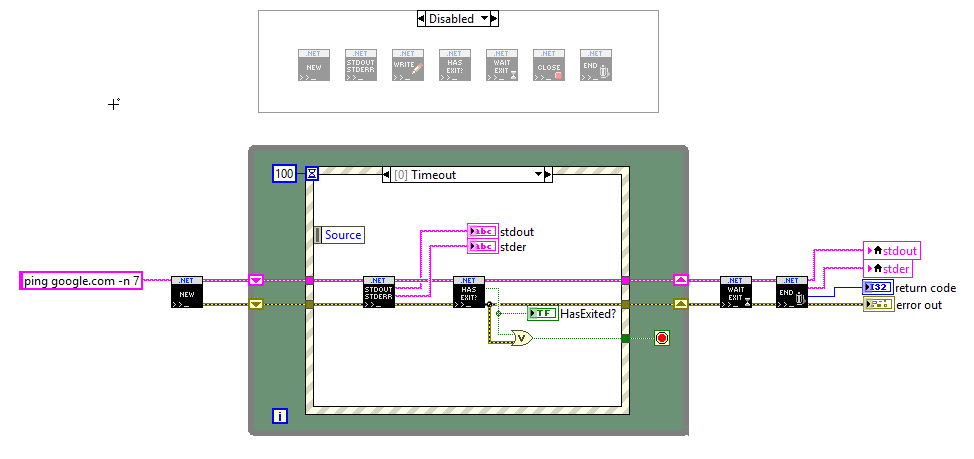

I threw this together, and maybe someone will find it useful. I needed to interact with cmd.exe a bit more than the native System Exec.vi primitive allows, so I used .NET to get the job done. Some notable capabilities:

- User can see standard output and standard error in real time
- User can write a command to standard input
- User can query whether the process has completed
- User can abort the process by sending a ctrl-C command

Aborting the process was the trickiest part. I found a solution in the following article: http://stanislavs.org/stopping-command-line-applications-programatically-with-ctrl-c-events-from-net/#comment-2880

The ping demo illustrates this capability. In order to abort ping.exe from the command line, the user needs to send a ctrl-C command. We achieve this by invoking KERNEL32 to attach a console to the process ID and then sending a ctrl-C command to the process. This is a clean solution that safely aborts ping.exe. The best part about this solution is that it doesn't require any console prompts to be visible. An alternative solution was to start the cmd.exe process with a visible window and then issue a CloseMainWindow command, but this required the window to be visible.

I put this code together so that I could better interact with HandbrakeCLI and FFMPEG. Enjoi

NET_Proc.zip
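A rough Python analog of the same four capabilities (real-time stdout/stderr, writing to stdin, polling for completion, and a ctrl-C-style abort) might look like the following. This is not the NET_Proc code: the class and method names are hypothetical, it uses Python's `subprocess` module with two reader threads instead of .NET, and the abort sends SIGINT on POSIX systems. On Windows, delivering a real ctrl-C still needs the KERNEL32 console-attach approach described above, so the sketch falls back to `terminate()` there.

```python
import queue
import signal
import subprocess
import sys
import threading


class InteractiveProcess:
    """Hypothetical wrapper around a console process.

    - stdout/stderr lines are pushed onto a queue as they arrive, by two reader threads
    - write() sends one line to the child's stdin
    - is_done() polls for completion without blocking
    - abort() sends the Ctrl-C equivalent (SIGINT) on POSIX systems
    """

    def __init__(self, args: list[str]):
        self.lines: "queue.Queue[tuple[str, str]]" = queue.Queue()  # (stream name, line text)
        self.proc = subprocess.Popen(
            args,
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            stderr=subprocess.PIPE,
            text=True,
            bufsize=1,  # line buffering for the text-mode pipes on our side
        )
        for name, stream in (("stdout", self.proc.stdout), ("stderr", self.proc.stderr)):
            threading.Thread(target=self._pump, args=(name, stream), daemon=True).start()

    def _pump(self, name: str, stream) -> None:
        # Forward each line as soon as the child writes it; the loop ends when the pipe closes.
        for line in stream:
            self.lines.put((name, line.rstrip("\n")))

    def write(self, text: str) -> None:
        self.proc.stdin.write(text + "\n")
        self.proc.stdin.flush()

    def is_done(self) -> bool:
        return self.proc.poll() is not None

    def abort(self, timeout: float = 10.0) -> int:
        if sys.platform == "win32":
            # A real Ctrl-C on Windows needs the KERNEL32 console-attach technique;
            # terminate() is an abrupt stand-in for this sketch.
            self.proc.terminate()
        else:
            self.proc.send_signal(signal.SIGINT)
        return self.proc.wait(timeout=timeout)
```

Usage would be along the lines of `p = InteractiveProcess(["ping", "localhost"])`, then draining `p.lines` in a loop and calling `p.abort()` when done. Note that "real time" still depends on the child flushing its own output; a program that buffers stdout will appear to arrive in bursts.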

-

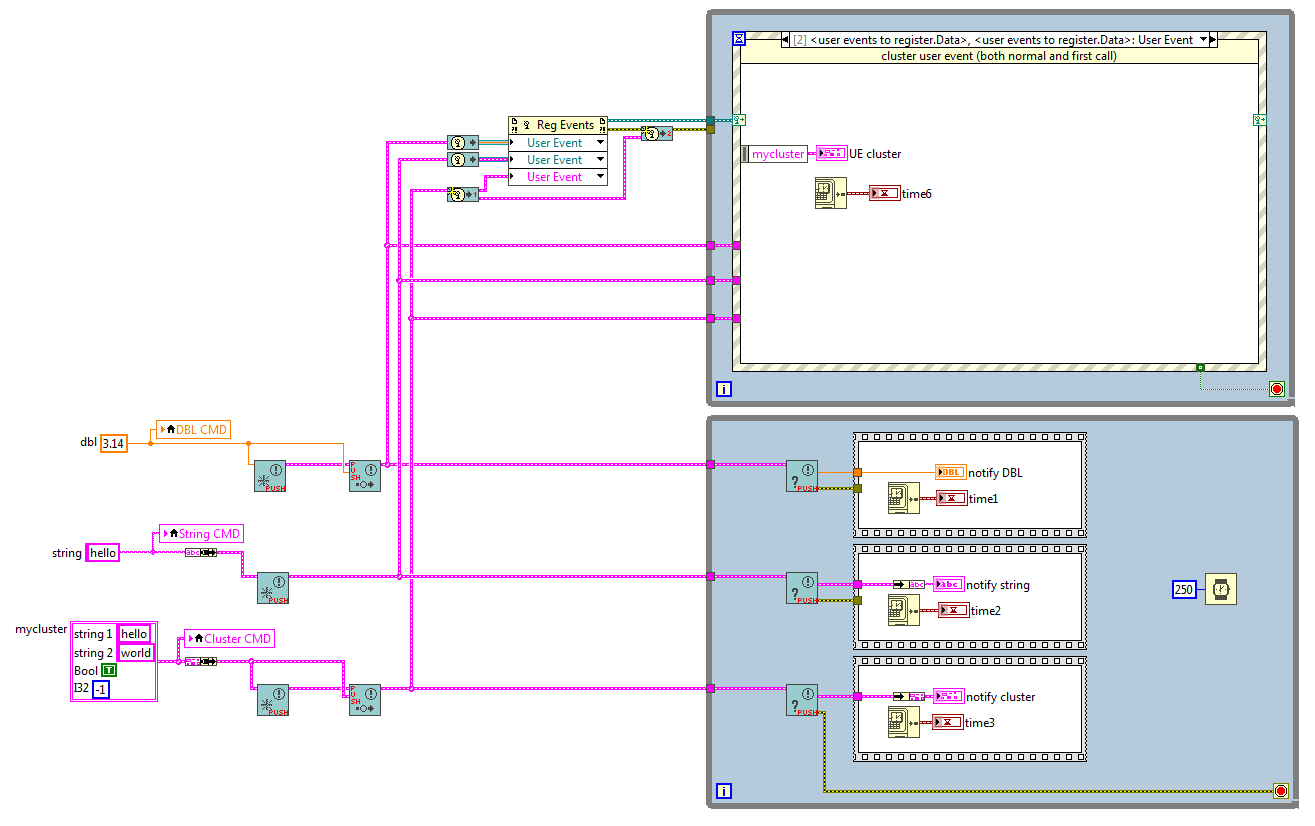

drjdpowell touched upon the primary (and initial) purpose of the code. I had an issue where I wanted to use user events, but the rule of "who starts first" came into play. This eliminated that worry. I could spin up a subprocess, register it for the user event, and force the user event case (only in that subprocess) to initialize with the most recent data. And it's clean! Notice how I'm able to share the "mycluster" user event case with both the initialization UE and the normal UE. No tacky need for another event case that only handles first-call initialization, or something similar.

-

Oh, and it's saved in LabVIEW 2018 and makes heavy use of VIMs, so apologies to those using older versions of LabVIEW.

-

I made a fun piece of code and thought I'd share it. Maybe it'll spark some good discussion. Here it is: Push-Notifier.zip

I wanted to accomplish 2 things with this code:

1) I wanted to be able to create a notifier that I can register as a user event. Basically, I want a user event to be generated whenever a new notification is sent.
2) When I register for a user event, I want to be able to also force the event structure to generate a user event using the most recent notification. (Helpful for initializing data in the event structure.)

The demo VI looks like this:

Anyway, I thought this might be fun to share.
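The two goals above amount to a "latest value" publish/subscribe pattern. Here is a minimal Python sketch of that idea, not the Push-Notifier VIs themselves: `queue.Queue` objects stand in for user event registrations, and the class name `PushNotifier` and its `register()`/`send()` methods are hypothetical. Registering with `replay_latest=True` immediately queues the most recent notification for that subscriber only, which is what removes the "who starts first" problem.

```python
import queue
import threading
from typing import Any, List

_NOTHING_SENT = object()  # sentinel meaning "no notification has been sent yet"


class PushNotifier:
    """Hypothetical 'latest value' notifier that pushes every notification to its subscribers.

    Each subscriber is a queue.Queue standing in for a user event registration.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._latest: Any = _NOTHING_SENT
        self._subscribers: List[queue.Queue] = []

    def register(self, replay_latest: bool = True) -> queue.Queue:
        """Add a subscriber; optionally queue the most recent notification for it alone."""
        q: queue.Queue = queue.Queue()
        with self._lock:
            self._subscribers.append(q)
            if replay_latest and self._latest is not _NOTHING_SENT:
                q.put(self._latest)  # initialization "event" seen only by this subscriber
        return q

    def send(self, value: Any) -> None:
        """Store value as the latest notification and push it to every subscriber."""
        with self._lock:  # holding the lock keeps every subscriber's queue in send order
            self._latest = value
            for q in self._subscribers:
                q.put(value)  # unbounded queue, so put() never blocks here
```

A subscriber that registers after `send()` has already been called still receives the current value as its first item, and later notifications reach every subscriber in the same order. The same handler code can therefore process both the initialization item and normal updates, much like sharing one event case.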

-

CAR created: Reference ID 726196.

-

Interesting history. Thanks for the information. I've reported this to NI. I will post here if they generate a CAR. Thanks, everyone!

-

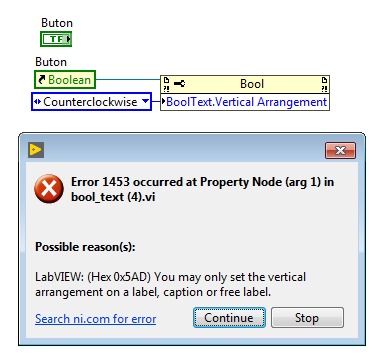

Benoit, thanks for looking at this with me. It looks like the error that you generated there is a result of the "Vertical Arrangement" property not being applicable to boolean text. I get the following possible reasons for that error:

So, this is good evidence that not everything in the text property palette is compatible with boolean text. Which is fine, but the text selection start/end properties should return a similar error to indicate the lack of support. Also, as I said earlier, I can copy/paste text into the boolean text and have it retain its properties. Which means that the property node interface simply doesn't appear to support programmatic interaction here.

-

Darn. Thanks for looking!

-

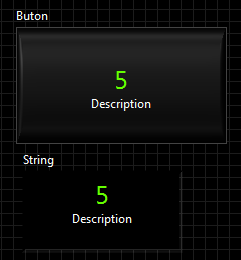

X-POST to NI forums. (No luck there so far.) https://forums.ni.com/t5/LabVIEW/button-with-different-size-text/m-p/3864798/highlight/false#M1095147

I am hoping to determine whether there is a programmatic method to give a button boolean text with varying font size/color. This is achievable with a string control using the text selection start/end properties. Booleans expose these same properties in the property node... but setting them doesn't appear to do anything. Even more interesting is that I can copy/paste text with varying color/size into the boolean text... and it will work.

Thoughts? Thanks!

bool_text.vi

-

The State of OpenG (is OpenG Dead?)

bjustice replied to Michael Aivaliotis's topic in OpenG General Discussions

I still actively use the OpenG toolkit. I would say that 90% of my usage lies in the Array and File palettes. I also recently learned that Hooovahh rewrote the Array palette using VIMs, so I've been using that (Hooovahh Arrays) in newer projects. It might be nifty if OpenG added Hooovahh Arrays to the OpenG Array palette...

-

Fantastic! This is exactly what I was envisioning. Thank you.

-

Hey guys! This might be a dumb question... so ignore me if I'm out of touch. But I'm a big fan of the OpenG toolkit. Is there any intent to ever rewrite any of this codebase using malleable VIs? I know that many of the tools (especially the array VIs) use polymorphic VIs in order to handle different data types. When malleable VIs were announced, I always envisioned that this toolkit would immediately benefit from this new capability. I'm mostly just curious.

-

Matlab Script Node doesn't update when .m file edited externally

bjustice replied to Pat O's topic in Calling External Code

7 years later, this information helped me! Thanks for coming back to post.

-

I haven't heard of TestScript before. Sounds interesting. But I have tried both the Enthought toolkit and the LabVIEW Python Node. I've been majorly impressed with the Enthought toolkit. I've passed fairly large data sets to/from Python, and the performance has been pretty great. It also solves some deployment headaches: it has a build mechanism that can generate a self-contained Python environment with only the libraries required to run your code. You can copy/paste this environment directly onto a deployed machine and it will run! No need to install and configure a Python environment from scratch on every deployed machine. 10/10 recommend.

-

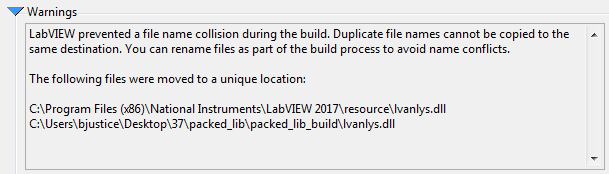

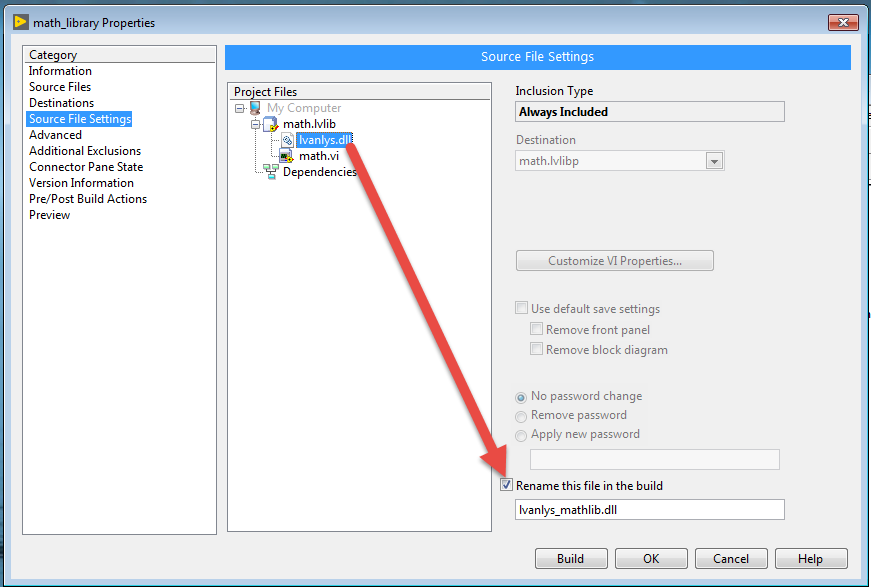

Packed library causing name collision of dll

bjustice replied to bjustice's topic in LabVIEW General

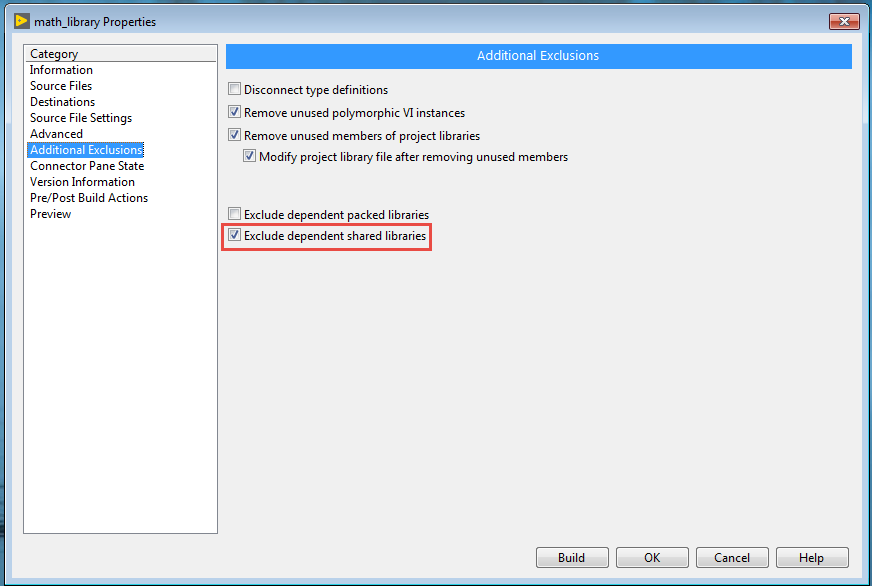

A co-worker found the solution! This option in the packed library build spec excludes lvanalys.dll from the packed library build directory. Furthermore, when the application EXE build spec runs, it seems to be smart enough to include lvanalys.dll in the resource folder when the packed library is a dependency. (Cool!)

-

Good people of LAVA,

I am running into a peculiar issue involving packed libraries. I have attached a zipped folder that illustrates this issue in a simplified form. When I run the build spec in the attached folder, I get the following error:

My understanding is that the application builder is running into this issue as a result of trying to include lvanalys.dll twice:

- lvanalys.dll is a dependency of G code in main.vi
- lvanalys.dll is a dependency of the packed library build: "math.lvlibp"

One workaround that I've discovered is to rename the DLL in the packed library build spec:

As you might expect, this results in the main application build including both lvanalys.dll and lvanalys_mathlib.dll in the resource folder. However, since the names are different, this doesn't generate a namespace collision warning. The downside is that this feels a bit kludgy, and it would be nicer to find a solution where the application builder is smart enough to understand that it only needs 1 copy of this DLL in the resource folder.

Thoughts? Am I missing something? I have only recently started to explore packed libraries. Thanks!

math.zip

-

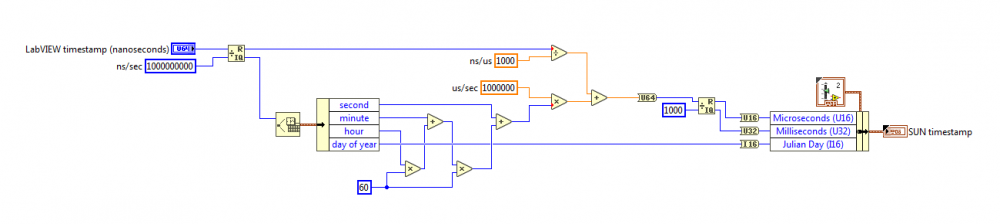

You guys all rock! Ok, so, for future readers, here are my lessons learned:

- As you would expect, converting a U64 to a DBL loses precision on the fractional seconds (near the millisecond/microsecond regime). As such, feeding the Seconds To Date/Time function a DBL input results in loss of precision on the fractional-seconds output.
- The LabVIEW timestamp data type consumes 16 bytes of memory and can maintain precision in the nanosecond regime, so long as I typecast the structure correctly. (Cool! I always assumed that the timestamp was a DBL under the hood.)
- The Seconds To Date/Time function can accept either a U64 or a timestamp as an input. This yields a lossless (or at least less lossy) calculation. It might even be that the U64 input option is completely lossless, since there is no floating-point math involved (maybe...).

After a bunch of unit testing and poking, I've decided to implement @infinitenothing's solution with one small tweak. Screenshot:

The small tweak is that I convert the remainder directly to microseconds, as opposed to converting it to seconds and then adding it to the output of the Date/Time function. This yields slightly better data.

Thanks again, everyone. I was banging my head against a wall for a few hours last week on this.
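The precision points above can be checked with a little integer arithmetic. The following Python sketch is an illustration under stated assumptions, not @infinitenothing's VI: it assumes the U64 input counts nanoseconds since the Unix epoch (1970), and the helper names are hypothetical. It shows how coarse a DBL gets at these magnitudes, an integer-only split into whole seconds plus microseconds, and how the two 64-bit halves of a LabVIEW timestamp (signed whole seconds since 1904-01-01 UTC, plus an unsigned fraction in units of 2^-64 s) could be built without floating-point math.

```python
import math

NS_PER_S = 1_000_000_000
# Seconds between the LabVIEW epoch (1904-01-01 00:00 UTC) and the Unix epoch (1970-01-01 00:00 UTC).
LV_EPOCH_OFFSET_S = 2_082_844_800


def dbl_resolution_s(lv_seconds: float) -> float:
    """Gap between adjacent doubles at this magnitude, i.e. the finest time step a DBL can hold."""
    return math.ulp(lv_seconds)


def split_ns(total_ns: int) -> tuple[int, int]:
    """Integer-only split into whole seconds and the remainder converted directly to microseconds."""
    secs, rem_ns = divmod(total_ns, NS_PER_S)
    return secs, rem_ns // 1_000  # truncates any sub-microsecond part


def to_labview_timestamp(unix_ns: int) -> tuple[int, int]:
    """Return (I64 seconds since 1904, U64 fraction in units of 2**-64 s), fraction truncated."""
    secs, rem_ns = divmod(unix_ns, NS_PER_S)
    frac = (rem_ns << 64) // NS_PER_S  # rem_ns < 1e9, so frac always fits in 64 bits
    return secs + LV_EPOCH_OFFSET_S, frac


if __name__ == "__main__":
    # A late-2023 time as seconds since 1904: adjacent doubles are 2**-21 s (about 0.48 us) apart.
    print(dbl_resolution_s(float(LV_EPOCH_OFFSET_S + 1_700_000_000)))
    # The same instant as Unix nanoseconds: doubles are 256 ns apart here, so the count gets rounded.
    ns = 1_700_000_000_123_456_789
    print(int(float(ns)) == ns)  # False
    print(split_ns(ns))          # (1700000000, 123456)
```

Dividing with `divmod` before any conversion keeps every digit of the nanosecond count, which is the same reason converting the remainder straight to microseconds beats adding a DBL fraction back onto the seconds.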