Everything posted by dadreamer


Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

That's not normal, for sure. Usually such video streaming takes about 10 to 15 percent of CPU load. I suggest trying the following:
- increase the loop delay from 10 to 50 ms;
- since you know that your image size is constant (1280x720), eliminate the GetPictureSize CLFN completely and move the Initialize Array node outside the loop.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

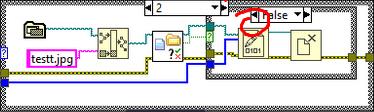

Something like that, but...
- Why are all your CLFNs orange again?
- You did not introduce a minimum delay for the loop.
- It would be better to use a button that captures the image when pressed. So you would need a Case Structure or an Event Structure in that second loop to handle the button actions.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

You should have moved only PlayM4_GetPictureSize and PlayM4_GetJPEG to a parallel loop. The other functions should remain as they are, i.e. in the callback event frame. Now that you have moved everything PlayM4-related and did not introduce a minimum delay for the loop, your While loop runs at a very high frequency, not only hogging system resources but also executing a few unnecessary runs for one User Event! To clarify, in each callback event you need to call InputData once, but you call it one, two, three times in a row or even more!
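The split described above can be illustrated outside LabVIEW with a minimal Python sketch. It is only an analogy, not the actual block diagram: `FakeDecoder`, `event_loop`, `capture_loop` and `run_demo` are hypothetical names, and the decoder merely counts calls instead of talking to PlayM4. The point is that the event handler feeds data exactly once per event, while the snapshot loop runs in parallel with a minimum delay.

```python
import queue
import threading


class FakeDecoder:
    """Hypothetical stand-in for the PlayM4 port; it only counts calls."""

    def __init__(self):
        self._lock = threading.Lock()
        self.input_calls = 0
        self.snapshots = 0

    def input_data(self, chunk):
        with self._lock:
            self.input_calls += 1

    def get_jpeg(self):
        with self._lock:
            self.snapshots += 1
        return b""


def event_loop(events, decoder):
    """Callback event frame: feed each received chunk to the decoder exactly once."""
    while True:
        chunk = events.get()      # blocks until an event arrives, so no busy spinning
        if chunk is None:         # sentinel: stop requested
            break
        decoder.input_data(chunk)


def capture_loop(decoder, stop, delay_s=0.05):
    """Parallel loop: take a snapshot, then wait at least delay_s before the next one."""
    while True:
        decoder.get_jpeg()
        if stop.wait(delay_s):    # returns True as soon as stop is set
            break


def run_demo(n_events=5):
    decoder = FakeDecoder()
    events = queue.Queue()
    stop = threading.Event()
    workers = [
        threading.Thread(target=event_loop, args=(events, decoder)),
        threading.Thread(target=capture_loop, args=(decoder, stop)),
    ]
    for w in workers:
        w.start()
    for i in range(n_events):
        events.put(bytes([i]))    # one event per received data chunk
    events.put(None)
    workers[0].join()
    stop.set()
    workers[1].join()
    return decoder
```

After `run_demo(5)` the decoder has seen exactly five `input_data` calls, one per event, regardless of how often the capture loop ran.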

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Yes, that is why it was a one-time test. Remove all that file-writing stuff and add the Decode Image Stream VI instead. It will decode the stream in memory. Obviously, I just deleted the first 4 bytes from the file, as you forgot to connect the boolean constant and the VI was adding an extra integer with the array size to the beginning of the file. Plus it's known how the JPEG header starts, so it's relatively easy to find its first bytes in almost any file.
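To illustrate the trimming step mentioned above, here is a minimal Python sketch (not LabVIEW code) that looks for the JPEG start-of-image marker `FF D8 FF` in a byte buffer and drops whatever precedes it, such as a 4-byte array-size prefix. The function name `strip_to_jpeg_start` and the sample bytes are made up for the example.

```python
# A JPEG stream starts with the SOI marker (FF D8), followed by another FF marker byte.
JPEG_SOI = b"\xff\xd8\xff"


def strip_to_jpeg_start(data: bytes) -> bytes:
    """Return data starting at the first JPEG start-of-image marker.

    Raises ValueError if no marker is found.
    """
    pos = data.find(JPEG_SOI)
    if pos < 0:
        raise ValueError("no JPEG start-of-image marker found")
    return data[pos:]


# Hypothetical file content: a 4-byte big-endian length prefix, then a JPEG header fragment.
payload = b"\xff\xd8\xff\xe0\x00\x10JFIF\x00"
prefixed = len(payload).to_bytes(4, "big") + payload
cleaned = strip_to_jpeg_start(prefixed)
```

Here `cleaned` equals `payload` again, i.e. the length prefix is gone and the data starts with `FF D8`.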

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

You may stick with that Decode Image Stream VI if you prefer the JPEG format. Look at the Write to Binary File VI more closely.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

-

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

I meant that testt.jpg ............. -

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Can you zip it? The forum tries to handle it in its own way. -

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Just save it and look at it. It's just for a one-time test anyway, so it doesn't matter which moment you capture on your camera. You don't need that inner "2" Case Structure, remove it.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

I doubt there would be problems. Rolf has already set the CLFNs in his HikVision examples to run in any thread, so I could conclude that those functions are reentrant (thread-safe). Of course, this should be confirmed with the developer's documentation, but I took it on faith. 🙂

Moreover, you should move your GetJPEG call to a parallel loop, as it's blocking your callback event frame and not letting it process other events. Hence you see the GUI freeze. Or limit the event queue in the Event Structure settings. Or use Flush Event Queue as suggested.

It is. Why do you think otherwise? What's your issue? Just write the array into a binary file with a ".jpg" extension exactly one time and you're done.
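The idea behind limiting or flushing the event queue can be sketched in a few lines of Python. This is only an analogy for the LabVIEW settings, assuming a policy where the oldest pending events are discarded once the limit is reached; `keep_latest` and `flush` are hypothetical helper names.

```python
from collections import deque


def keep_latest(events, max_pending):
    """Simulate a bounded event queue: once full, the oldest pending event is discarded."""
    pending = deque(maxlen=max_pending)
    for ev in events:
        pending.append(ev)
    return list(pending)


def flush(pending):
    """Discard everything that is still queued and return how many events were dropped."""
    dropped = len(pending)
    pending.clear()
    return dropped


# Ten events arrive while the consumer is busy; only the three newest remain queued.
latest = keep_latest(range(10), max_pending=3)
```

Either way, a slow consumer works on recent data instead of chewing through a long backlog of stale events.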

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

But if the GetJPEG call is blocking, you have no other option than implementing the PlayM4 callback in C code.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

To any thread. They should become yellow. But yes, you have way too many events queued!

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Re-read my previous message. And maybe you have a large bunch of events in the queue? Check with the Event Inspector. -

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Only those stated in the documentation. This is the minimum size required. But it may turn out that this size is not enough; this needs some testing on your side. If you used BMP, there would be a more or less precise formula, but BMPs are considerably larger, as you know. Also, as Rolf did a few posts ago, I suggest using the PlayM4_GetPictureSize function to get the actual image size. Insert it between the InputData and GetJPEG nodes.

Are you saying that those GetJPEG/GetBMP functions are synchronous and don't return until the buffer is filled? What if you switch all the HikVision and PlayM4 CLFNs from the UI thread to any thread (yellow coloring)? Then they would run in another thread and not freeze the GUI.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Well, I see from your data that you're finally getting a valid JPEG stream. Can you save that pJpeg array into a binary file and open it in a viewer? Just to make sure it's the cam image. And now you need to figure out the proper array size so that the GetJPEG function doesn't error out on you anymore. And in your "Stop" frame you should perform the cleanup procedures, such as stopping the playback, freeing the port, etc. Just find the opposite functions to your UserEvent frame calls.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

It's the date when the pzj coder added that define to the header. What do you mean?..

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

@alvise Based on what Rolf said, try to run this VI and report the PlayM4_GetJPEG Error Number. Preview CAM.vi

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

He already tried to allocate a huge buffer of 3000000 bytes. This buffer is definitely large enough to hold not only a single JPEG image but even several BMP ones. But this doesn't work either.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Isn't that what you wanted? (Look at the PDF attachment.)

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

You could try switching to PlayM4_GetBMP, but I assume it will give nothing new. It is odd that even the last error number is zero.

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Doesn't this work either? Do you ever see PlayM4_GetJPEG returning 1? Preview CAM.vi

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

I slightly modified your VI, try it. Preview CAM.vi

These are Windows API bitmap definitions. In the 32-bit IDE they are 14 bytes and 40 bytes long, respectively. So the final formula should be 54 + w * h * 4. But it is valid only if you use PlayM4_GetBMP! For the JPEG format, a different header length has to be defined.
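As a quick check of the arithmetic above, here is a small Python sketch that derives the two header sizes from the packed field layouts of the Windows API `BITMAPFILEHEADER` and `BITMAPINFOHEADER` structures and computes the buffer size for a 32-bit bitmap. The function name `bmp_buffer_size` is made up for the example, and whether PlayM4_GetBMP really returns 4 bytes per pixel should be verified on your side.

```python
import struct

# Packed, little-endian layouts of the Windows API bitmap headers.
# BITMAPFILEHEADER: WORD bfType, DWORD bfSize, WORD bfReserved1, WORD bfReserved2, DWORD bfOffBits
BITMAPFILEHEADER_SIZE = struct.calcsize("<HIHHI")
# BITMAPINFOHEADER: DWORD biSize, LONG biWidth, LONG biHeight, WORD biPlanes, WORD biBitCount,
#                   DWORD biCompression, DWORD biSizeImage, LONG biXPelsPerMeter,
#                   LONG biYPelsPerMeter, DWORD biClrUsed, DWORD biClrImportant
BITMAPINFOHEADER_SIZE = struct.calcsize("<IiiHHIIiiII")


def bmp_buffer_size(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Headers plus raw pixel data; assumes 4 bytes per pixel unless told otherwise."""
    return BITMAPFILEHEADER_SIZE + BITMAPINFOHEADER_SIZE + width * height * bytes_per_pixel


size_720p = bmp_buffer_size(1280, 720)
```

For a 1280x720 frame this gives 54 + 1280 * 720 * 4 = 3686454 bytes.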

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

That's how some guy did that in Delphi.

```delphi
function GetBMP(playHandle: Longint): TBitmap;
var
  rbs: PDWORD;
  ps: PChar;
  i1,i: longint;
  bSize: DWORD;
  ms: TMemoryStream;
begin
  try
    result := TBitmap.Create();
    if playHandle < 0 then exit;
    bSize := 3000000;
    ms := TMemoryStream.Create;
    new(ps);
    GetMem(ps,bSize);
    new(rbs);
    if PlayM4_GetBMP(playHandle,ps,bSize,rbs) then
    begin
      i1 := rbs^;
      if i1>100000 then
      begin
        ms.WriteBuffer(ps[0],i1);
        MS.Position:= 0;
        result.LoadFromStream(ms);
      end;
    end;
  finally
    FreeMemory(ps);
    FreeMemory(rbs);
    ms.Free;
    ps :=nil;
    rbs := nil;
    ms := nil;
  end;
end;
```

But that `bSize := 3000000;` doesn't look elegant enough, so I'd suggest using PlayM4_GetPictureSize and calculating the final buffer size as sizeof(BITMAPFILEHEADER) + sizeof(BITMAPINFOHEADER) + w * h * 4. But you may test with that for now to make sure everything is working.

Another option would be to use the DisplayCallback that is set by PlayM4_SetDisplayCallBack. There the frames should already be decoded and in YV12 format, so you'd have to convert them to standard RGBA or any other format of your liking.
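For reference, the YV12 to RGBA conversion mentioned above could look roughly like the following Python sketch. It assumes the usual YV12 layout (a full-resolution Y plane, followed by quarter-resolution V and then U planes), even dimensions, no row padding, and BT.601 limited-range coefficients; whether the frames delivered by the SDK match all of these assumptions would need to be checked. The function name `yv12_to_rgba` is made up, and a pure-Python loop like this is far too slow for live video, so it only shows the indexing and the math.

```python
def _clamp(x: float) -> int:
    """Round to the nearest integer and limit to the 0..255 byte range."""
    return max(0, min(255, int(round(x))))


def yv12_to_rgba(frame: bytes, width: int, height: int) -> bytes:
    """Convert one YV12 frame to RGBA (alpha fixed at 255)."""
    if width % 2 or height % 2:
        raise ValueError("width and height must be even")
    y_size = width * height
    c_size = (width // 2) * (height // 2)
    if len(frame) < y_size + 2 * c_size:
        raise ValueError("frame buffer too small")
    v_off = y_size               # YV12 stores the V plane before the U plane
    u_off = y_size + c_size
    out = bytearray(y_size * 4)
    for row in range(height):
        for col in range(width):
            y = frame[row * width + col]
            # Each chroma sample is shared by a 2x2 block of pixels.
            c_idx = (row // 2) * (width // 2) + (col // 2)
            v = frame[v_off + c_idx]
            u = frame[u_off + c_idx]
            luma = 1.164 * (y - 16)
            d = u - 128
            e = v - 128
            r = _clamp(luma + 1.596 * e)
            g = _clamp(luma - 0.392 * d - 0.813 * e)
            b = _clamp(luma + 2.017 * d)
            i = (row * width + col) * 4
            out[i:i + 4] = bytes((r, g, b, 255))
    return bytes(out)


# A 2x2 test frame: four Y samples, then one V and one U sample (neutral chroma).
black = yv12_to_rgba(bytes([16, 16, 16, 16, 128, 128]), 2, 2)
white = yv12_to_rgba(bytes([235, 235, 235, 235, 128, 128]), 2, 2)
```

With neutral chroma, the limited-range black level (Y = 16) maps to RGB 0 and the white level (Y = 235) maps to RGB 255.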

Using the DLL files of an application compiled with C# with labview

dadreamer replied to alvise's topic in LabVIEW General

Did you implement PlayM4_Play as suggested?