Everything posted by dadreamer

-

If you are using VDM, you should already have the basic example of how to manipulate an IMAQ image in a shared library: IMAQ GetImagePixelPtr Example. I don't know whether you will gain anything in terms of performance, but it's worth a try at least.

-

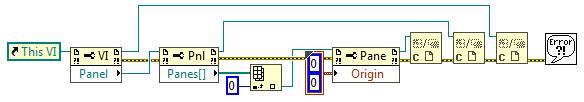

Maybe this will help: Programmatically Scroll Front Panel Using LabVIEW

-

You can download PID and Fuzzy Logic Toolkit 2012, then unpack it somewhere (e.g., on your Desktop). Run this command in an elevated (admin) command prompt: msiexec.exe /a "C:\Users\User\Desktop\2012PIDFuzzy\Products\LabVIEW_PID_Toolkit_2012\NIPID00\NIPIDToolkit.msi" /qb TARGETDIR="C:\Users\User\Desktop\2012PIDFuzzy\Products\LabVIEW_PID_Toolkit_2012\NIPID00\NIPIDToolkit" (replace "User" with your username). Now go to \2012PIDFuzzy\Products\LabVIEW_PID_Toolkit_2012\NIPID00\NIPIDToolkit\ProgramFilesFolder\National Instruments\LabVIEW 2012\vi.lib\addons\control\pid\pid.llb and there you should have PID.vi along with many other VIs from that toolkit. Also note that some VIs use the 32-bit lvpidtkt.dll, so they won't work in 64-bit LabVIEW.

-

They introduced a token for smooth lines: SmoothLineDrawing=False

-

The Type Specialization Structure is accessible in LabVIEW 2017 if SuperSecretPrivateSpecialStuff=True is written to labview.ini and the user right-clicks the Diagram Disable Structure and chooses the "Replace With Type Specialization Structure" menu entry.
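For anyone who prefers to script the ini edit, here is a minimal sketch. It assumes the token belongs in the `[LabVIEW]` section, it works on an in-memory sample rather than the real labview.ini, and both the helper name `set_token` and the `ExistingToken` entry are made up for illustration.

```python
import configparser
import io


def set_token(ini_text: str, key: str, value: str, section: str = "LabVIEW") -> str:
    """Return a copy of ini_text with key=value set in the given section."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep the token's case instead of lowercasing it
    parser.read_string(ini_text)
    if not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, key, value)
    out = io.StringIO()
    # Write "key=value" without spaces around the delimiter.
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


# Hypothetical ini content; a real labview.ini holds many more tokens.
sample = "[LabVIEW]\nExistingToken=1\n"
updated = set_token(sample, "SuperSecretPrivateSpecialStuff", "True")
print(updated)
```

Close LabVIEW before changing the real file and keep a backup copy, since this sketch rewrites the whole text it is given.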

-

Darn, I'm slow. I started uploading my disks to GDrive and saw your message when it finished. Anyway, good to know it's resolved.

-

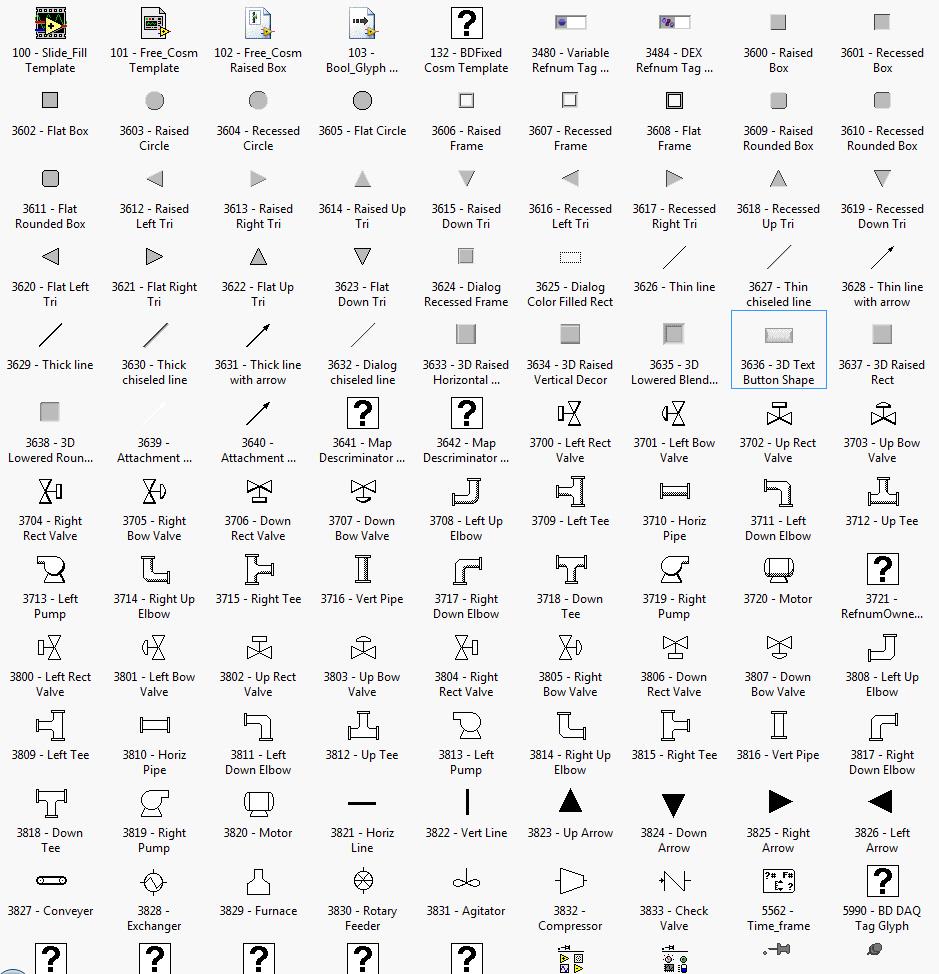

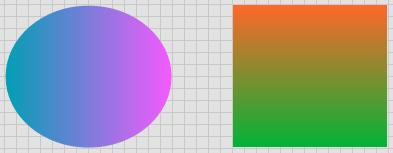

Yes, it looks very much like you meant the 2D Picture pixmap opcodes. Their names are similar to those from the QuickDraw PICT Opcodes document (e.g., frameArc, paintArc), but the values are different.

On classic Mac OS I've seen the following. If a vector PICT image (of kLVPictImage type) is placed onto the panel and resized, it is redrawn as if it were a LV native kPiccImage. But when the VI is moved to another platform, such as Windows, the image becomes a common bitmap, losing all its vector abilities. It seems the presence of a platform-dependent API (such as QuickDraw) really matters.

I'd add dynamic (un)registration of the drawing procedures for the cosmetics. It would allow adding custom decorations relatively easily, even though the Drawing Manager is not documented for use in DLLs. Currently there is no way to add a user decoration without having the LV sources. But honestly, all this legacy tech should be rewritten or even removed/replaced by something much better. EMF/WMF support is not that good and SVG is still not considered. 😿

By the way, this thread seems incomplete now that @flarn2006 has posted in his profile some info on obtaining all the decorations from lvobject.rsc. Here's the VI to do that: All Cosmetics.vi

Choose the .mnu path on the FP, run this VI, then import the subpalette in the standard way (Tools -> Advanced -> Edit Palette Set). Most of the "hidden" elements are not that interesting, as they're of no use as stand-alone decorations. Some could be useful though, like those DSC module graphics (valves, pipes etc.), except maybe for the Multi-Segment Pipe, because it's not functional as a decoration. Or how about some cool gradient decorations?

-

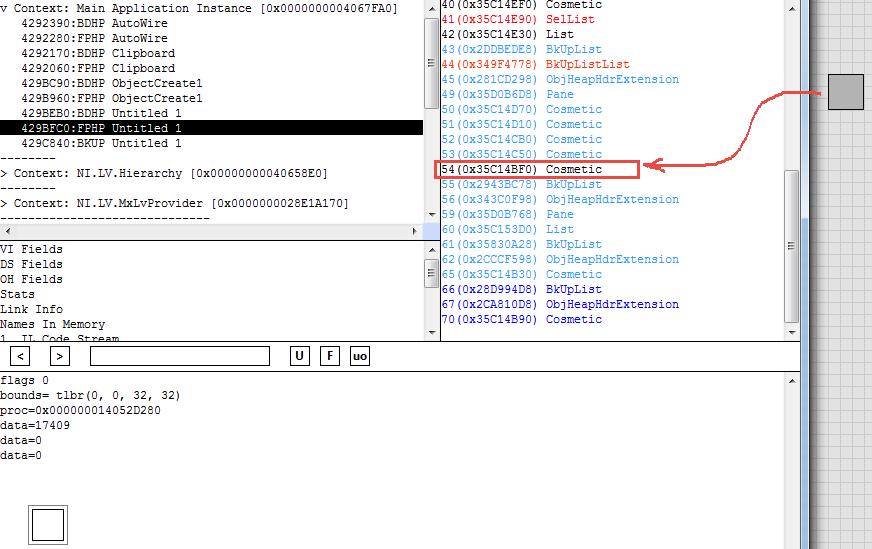

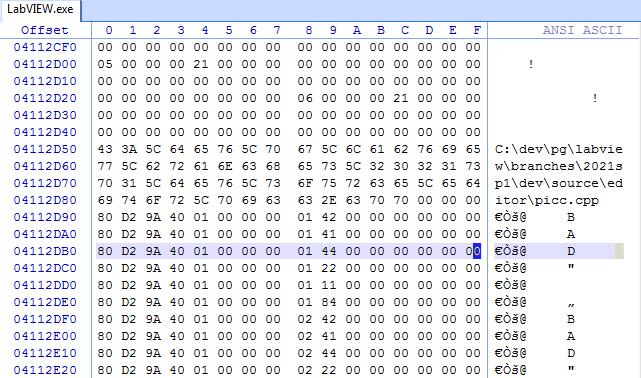

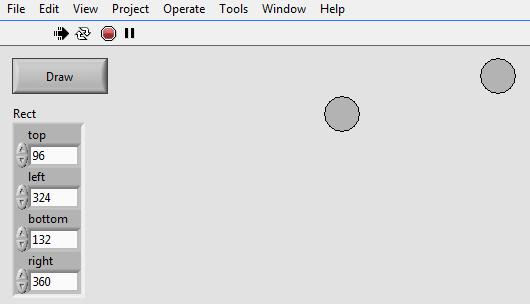

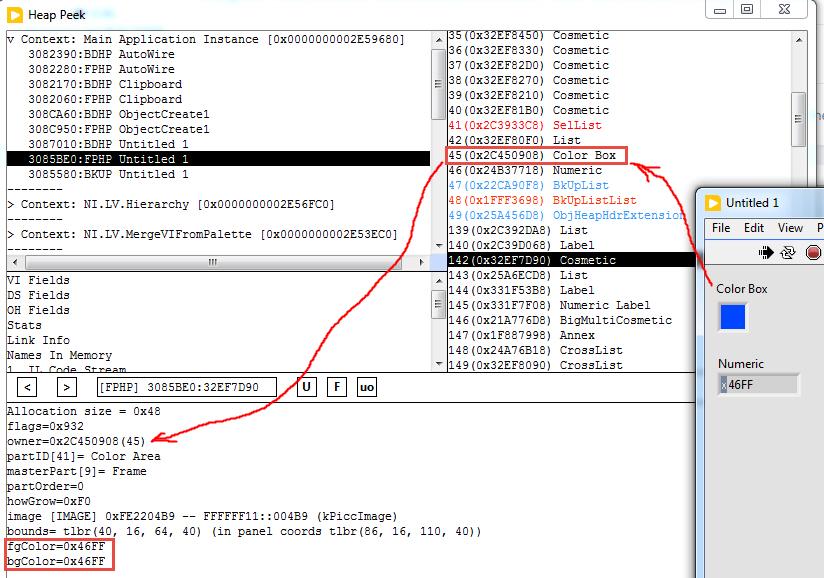

Have seen this statement of yours many times, but still don't see how you came to this conclusion. I investigated those built-in PICCs a bit. The "Cosm" resource forks in lvobject.rsc (labview.rsc in the old versions) don't contain any graphic operators. For example, this is how the Flat Box resource looks:

    0000 0003 //cosmetic flags
    0000 0000 00F0 0000 FFF0 FFF0 //rect
    0010 0010 0000 0000 0200 0000 //symbolic color
    0000 0000 0000 000B //image index to get later (e.g., 3D5)

Now, this 0xB is just an index to the LabVIEW's internal structure, that can easily be observed in Heap Peek. Not very informative though. Here's the handler procedure address and some data, passed to it. Obviously not enough to do the drawing.

This kPiccImage has 0x3D5 number assigned as well, which just indexes into the LabVIEW's internal table, looking like this: I marked the Flat Box line. Only two fields are here: the handler proc offset and the data, being passed into it. The data is in fact nothing more than the graphic attributes for the handler to draw, like the border width and the line style. Let's check this. I set the border width to 5 (instead of 1) and the line style to 0x11 (instead of 0x44).

Easy to see:

- Those PICCs are used in many places of the LV GUI.
- Almost all the drawing work is done by the handler proc.
- The procs and the attributes for the decorations/cosmetics are hard-coded inside LabVIEW.

The proc itself uses the Drawing Manager functions to do the work. So, if it's the Flat Circle proc (to simplify), it behaves this way: DEmptyRect -> DSelectNormPen -> DPaintOval -> DSelectNormPen -> DFrameOval. It could easily be reproduced on the diagram: Draw Oval.vi

The left circle is drawn from the diagram, the right one is a built-in decoration. Of course, it needs more work, when applied to a real project, as redrawing is necessary, when the window is resized, minimized, overlapped etc. The handler proc takes this job for the native cosmetics.

So, I did not find anything that would resemble the Mac OS PICT format (version 2 or 1, at least). It is more like a "Picture in C" instead, as someone noticed earlier.
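To make the point about the missing graphic operators concrete, the Flat Box dump quoted above can be picked apart with a few lines of Python. This is only a sketch under assumptions: the data is taken to be big-endian, the flags are read as the first 32 bits and the image index as the last 32 bits, and the fields in between are left as raw bytes because their exact layout is not established here. The function name `parse_cosm` is made up.

```python
import struct

# Hex dump of the Flat Box "Cosm" resource, copied from the post.
FLAT_BOX_HEX = """
0000 0003
0000 0000 00F0 0000 FFF0 FFF0
0010 0010 0000 0000 0200 0000
0000 0000 0000 000B
"""


def parse_cosm(hex_text: str) -> dict:
    """Extract the two fields whose meaning is clear from the dump."""
    raw = bytes.fromhex("".join(hex_text.split()))
    (flags,) = struct.unpack_from(">I", raw, 0)                  # assumed: first 4 bytes
    (image_index,) = struct.unpack_from(">I", raw, len(raw) - 4)  # assumed: last 4 bytes
    return {
        "size": len(raw),
        "flags": flags,
        "image_index": image_index,
        "middle": raw[4:-4],  # rect and symbolic color, left undecoded
    }


record = parse_cosm(FLAT_BOX_HEX)
print(record["size"], record["flags"], hex(record["image_index"]))
```

The whole record is 36 bytes of flags, geometry, color and an index, which leaves no room for a stream of drawing opcodes.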

-

I have a LabVIEW 8.0 Professional distro on three disks that I believe was downloaded from torrents a while ago. The overall size is 1.68 GB. I'm a bit unsure whether it's ok to post it here. If the admins/mods let me, I'll post it (without a "cure", ofc). As an alternative you may look for it in Google or other search engines. With some luck you'll find it.

-

It's still on some trackers out there. Downloaded fine. This is the image without any hack tools inside. Or search the web for "NI.LabVIEW.v8.0.Real.Time.Module.ISO".

-

Please can anyone help me optimising this code

dadreamer replied to Neil Pate's topic in LabVIEW General

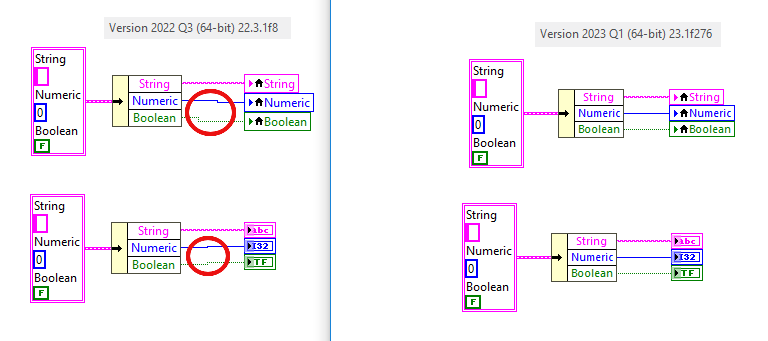

Yeah, I was thinking of double numbers while dealing with 4-byte integers, hence the confusion. In that thread I was introducing a filler field of 4 bytes in 64-bit LabVIEW using a Conditional Disable structure. That's unnecessary here.

Event on Colour Change while widget is open, is this possible?

dadreamer replied to Neil Pate's topic in User Interface

Seems like a feature really 🙂 I prefer not to use PNs without a serious need, as they run in the UI thread, so I have not tested that. It looks very much like in this case the value is copied out of the DDO instead of the intermediate buffer. One way or another... it's working.

Event on Colour Change while widget is open, is this possible?

dadreamer replied to Neil Pate's topic in User Interface

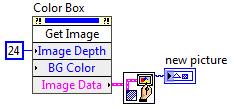

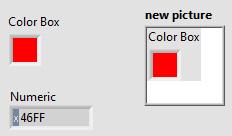

Its value remains constant (until you press the color of your liking), but its cosmetic color does update. That's why the Get Image method works, for instance. So it's possible to "catch" the moment when the color changes, if the appropriate logic is implemented in the VI. Basic example: ColorBox Tracking.vi

Event on Colour Change while widget is open, is this possible?

dadreamer replied to Neil Pate's topic in User Interface

No, not on the widget; you use Refnum to Pointer on the ColorBox control reference. After that you attach a debugger and study the memory dump to find the cosmetics pointers in the data space (if you decide to go this route). You can get its HWND, but it's of no real use here.

Event on Colour Change while widget is open, is this possible?

dadreamer replied to Neil Pate's topic in User Interface

This is possible, but totally unreliable. The Color Picker code changes the colors of the cosmetic that is a part of the FP object (the Blinking property does that too). VI Scripting doesn't provide a way to obtain the refs to the cosmetic. Ok, we could use that Refnum to Pointer trick to get the object's data space pointer, but we can't go further without using constant offsets. First, we need to find the cosmetic address; second, we need to find the color addresses. Having those, we could read out the colors. It would work only if the object data space structure does not change. But it is known to change with a 100% guarantee between different LabVIEW versions (incl. patches and service packs), RTEs, bitnesses, platforms, sun and moon phases or whatever. Besides, you would have to adapt that hacky technique to objects of different types (such as Color Box and Boolean), because their data spaces are different and the offsets wouldn't work anymore. Because of that I personally dislike basing software on internal tricks. It's often unstable and very difficult to support. There is an easier and documented way to get the color while the Picker is open. Just grab the object picture with the Get Image method and compare it against some initial picture to determine whether the changes are in effect.
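The comparison logic itself is tiny. Below is a language-neutral sketch in Python, where each `bytes` value stands in for the pixel data that Get Image would return on one loop iteration; in LabVIEW the same thing would be a shift register and a Not Equal? node. The function name and the sample frames are invented for illustration, and this variant compares against the previous snapshot rather than a fixed initial one so that every change is reported.

```python
from typing import Iterable, Iterator, Tuple


def detect_changes(snapshots: Iterable[bytes]) -> Iterator[Tuple[int, bytes]]:
    """Yield (index, snapshot) whenever a snapshot differs from the previous one."""
    previous = None
    for index, current in enumerate(snapshots):
        if previous is not None and current != previous:
            yield index, current
        previous = current


# Hypothetical one-pixel RGB "images" captured while the picker is open.
BLUE = b"\x00\x00\xff"
GREEN = b"\x00\xff\x00"
RED = b"\xff\x00\x00"
frames = [BLUE, BLUE, GREEN, GREEN, RED]

changes = list(detect_changes(frames))
print(changes)
```

Each reported index is the iteration at which the picture first differed, which is the moment a "colour changed" user event could be fired.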

Event on Colour Change while widget is open, is this possible?

dadreamer replied to Neil Pate's topic in User Interface

Even though this Color Picker window is created by the OS API, it does not expose any properties or methods to the programmer. All the window handling is done entirely in the LV core. I wouldn't suggest using ChooseColor or its .NET wrapper, as I'm pretty unsure how to accomplish the task of mouse tracking there (maybe the LPCCHOOKPROC callback function could be of some use, but it would require writing a DLL anyway). It would be much easier to create your own color picker SubVI with some means of interaction with the main VI. You can find some color chooser examples on the NI forums, e.g. this one.

Please can anyone help me optimising this code

dadreamer replied to Neil Pate's topic in LabVIEW General

I believe it is, at least for desktop platforms. Given the pointer that ArrayMemInfo outputs, I can subtract 4 bytes (for a 1D array of U32) or 8 bytes (for a 2D array of U32) from it and call DSRecoverHandle, which gives a valid handle. I can even get its size with DSGetHandleSize and it will correspond to the array size that was passed to ArrayMemInfo (plus N I32-sized fields, where N is the number of array dimensions). According to the docs, such as Using External Code in LabVIEW, handles are relocatable and contiguous. Of course, Rolf could add more here. @Neil Pate If you wonder what that wrapper trick is, check this post. Using those tokens you could somewhat enhance your CLF Nodes and reduce the time spent on each call.
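The arithmetic described here can be written down as a quick check. This is a sketch that covers only what the post states: one I32 dimension field per dimension in front of the data, with 4-byte (U32) elements. Other element sizes may involve alignment padding that this sketch ignores, and the function names are made up.

```python
from math import prod

DIM_FIELD_SIZE = 4  # each dimension size is stored as an I32 ahead of the data


def header_size(num_dims: int) -> int:
    """Bytes to subtract from the data pointer to reach the start of the handle block."""
    return DIM_FIELD_SIZE * num_dims


def expected_handle_size(dims, element_size: int = 4) -> int:
    """Size DSGetHandleSize should report: dimension fields plus the element data."""
    return header_size(len(dims)) + prod(dims) * element_size


print(header_size(1), header_size(2))       # 4 and 8, as in the post
print(expected_handle_size((480, 640)))     # 2D U32 array of 480 rows x 640 columns
```

If the size read back from the recovered handle matches `expected_handle_size`, that is a good sign the subtraction landed on the real start of the block.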

Please can anyone help me optimising this code

dadreamer replied to Neil Pate's topic in LabVIEW General

@Neil Pate As far as I can see, your pointer maths are okay, as long as you provide valid coordinates in the Point 1 and Point 2 parameters. Thanks to @ShaunR it appears that ArrayMemInfo has a bug that reproduces in 64-bit LabVIEW. I couldn't reproduce it in 32-bit LabVIEW though. The stride should not be zero unless the array is empty, no matter whether it's a subarray or not. But even when the stride is 0, it shouldn't lead to a crash, because in this case we're just writing into row 0 instead of the intended one. To eliminate the influence of the bug (if any), you could ignore the stride output of the ArrayMemInfo node and use (Array Width x 4) instead, as it's a constant in your case.
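A small Python model of that pointer math shows both points: the constant stride of Array Width x 4 and the way a zero stride collapses every write into row 0. It assumes a row-major 2D array of U32 and uses a `bytearray` in place of the LabVIEW array memory; the names and dimensions are illustrative only.

```python
import struct

BYTES_PER_ELEMENT = 4  # U32


def element_offset(row: int, col: int, stride: int) -> int:
    """Byte offset of element (row, col) when each row occupies `stride` bytes."""
    return row * stride + col * BYTES_PER_ELEMENT


width, height = 8, 4
stride = width * BYTES_PER_ELEMENT            # the constant suggested in the post
memory = bytearray(stride * height)           # stands in for the array data block

# Write one value at row 2, column 3 and read it back.
struct.pack_into("<I", memory, element_offset(2, 3, stride), 0xFF00FF00)
(value,) = struct.unpack_from("<I", memory, element_offset(2, 3, stride))
print(hex(value))

# With a bogus stride of 0 the same coordinates land in row 0.
print(element_offset(2, 3, 0), element_offset(0, 3, stride))
```

The offset stays inside the buffer in both cases, which matches the observation that a zero stride alone should corrupt the picture rather than crash.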

Please can anyone help me optimising this code

dadreamer replied to Neil Pate's topic in LabVIEW General

As I wrote here, the ArrayMemInfo node was introduced only in the 2017 version. It just didn't exist in 2015. That's why it crashes. After a quick test in 2022 Q3, MoveBlock didn't crash my LV. I'm going to take a closer look at the code tomorrow.

DLL with nested pointer of double array

dadreamer replied to Miykoll's topic in Calling External Code

Of course, you can. 🙂

DLL with nested pointer of double array

dadreamer replied to Miykoll's topic in Calling External Code

Each time a library function wants a struct pointer as an input parameter, you should pass it as Adapt to Type -> Handles by Value. In this case LabVIEW provides a pointer to the structure (cluster). If the struct is very complex (not in your case), it's possible to pass a (prearranged) pointer as an Unsigned Pointer-sized Integer and take it apart after the call with the MoveBlock function.

I see some inconsistencies between your struct declaration and the cluster on the diagram. The second field should be triggerCount, but the cluster has it named triggerindices. The same goes for the third field: triggerIndices -> ListTI. It might be a naming issue only.

Then which representation does that array have? If these are ordinary double values, it's enough to allocate 8*100 bytes of memory. Of course, you may leave some memory margin; it does no harm except taking extra space in RAM. After the IQSTREAM_GetIQData call you likely want to extract the array data into a LabVIEW array, so before calling DSDisposePtr you would call MoveBlock to transfer the data.

Also an important note: Unsigned Pointer-sized Integers are always represented as U64 numbers on the diagram. So if you are working in 32-bit LabVIEW, you should cast the value to U32 explicitly before building the cluster. It's even better to make a Conditional Disable Structure with two cases for 32 bits and 64 bits, where the pointer field would be a U32 or U64 number respectively.
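The two size questions in this reply (how many bytes for the array, how wide the pointer field is) can be checked with a few lines of Python. This is a rough sketch, not the SDK's actual layout: it models only a single pointer field, little-endian, and the helper names are invented.

```python
import struct

DOUBLE_SIZE = 8  # bytes per DBL


def buffer_bytes(count: int) -> int:
    """Bytes to request from DSNewPtr for `count` doubles."""
    return DOUBLE_SIZE * count


def pack_pointer(address: int, bits: int) -> bytes:
    """Serialize a pointer the way the cluster field must hold it: U32 or U64."""
    formats = {32: "<I", 64: "<Q"}  # the two Conditional Disable cases
    return struct.pack(formats[bits], address)


print(buffer_bytes(100))                       # 800
print(len(pack_pointer(0x1000, 32)))           # 4 bytes in 32-bit LabVIEW
print(len(pack_pointer(0x7FF612340000, 64)))   # 8 bytes in 64-bit LabVIEW
```

Packing a 64-bit address with the 32-bit format raises `struct.error`, which mirrors why the field width has to follow the bitness of the process.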

DLL with nested pointer of double array

dadreamer replied to Miykoll's topic in Calling External Code

@mcduff Done. It's a very limited example though, as I don't have the OP's SDK and no VIs were posted to tinker with.

DLL with nested pointer of double array

dadreamer replied to Miykoll's topic in Calling External Code

You can deal with pointers in LabVIEW with the help of the Memory Manager and its functions. Just allocate a pointer of 8 bytes (the size of a DBL) with DSNewPtr / DSNewPClr, build your cluster using that and pass it to the DLL. Don't forget to free the pointer in the end with DSDisposePtr. DSNewPtr-DSDisposePtr.vi

upd: Seems like I skimmed through it. Now I see you need a pointer to an array of doubles. So you'd allocate a space in memory large enough to hold all the doubles (not 8 bytes, but 8 x Array Size, i.e. 8 x lNumberDiodes). After the function call you'll need to read the data out of the pointer with the MoveBlock function.
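The allocate, call, copy-out sequence can be mimicked outside LabVIEW with Python's `ctypes`, which may help when checking the sizes involved. In this sketch a `ctypes` array plays the role of the DSNewPClr block, `ctypes.memmove` plays the role of MoveBlock, and a loop stands in for the DLL filling the buffer. The count of 5 is arbitrary and only stands in for lNumberDiodes.

```python
import ctypes


def read_doubles(address: int, count: int) -> list:
    """Copy `count` doubles from a raw address into a Python list (MoveBlock analogue)."""
    destination = (ctypes.c_double * count)()
    ctypes.memmove(destination, address, ctypes.sizeof(destination))
    return list(destination)


count = 5                                   # stands in for lNumberDiodes
block = (ctypes.c_double * count)()         # zero-filled, like DSNewPClr(8 * count)
address = ctypes.addressof(block)           # the value that would go into the cluster
print(ctypes.sizeof(block))                 # 8 x count bytes

# Pretend the DLL wrote its results through the pointer.
for i in range(count):
    block[i] = i * 0.5

values = read_doubles(address, count)
print(values)
```

Python releases `block` on its own once it goes out of scope; in LabVIEW the matching step is the explicit DSDisposePtr call, made only after the data has been copied out.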