Posts posted by hooovahh

-

Have you tried uninstalling, then reinstalling the toolkit? Are the VIs in the vi.lib and just not on the palette?

-

There's also a little-known feature in the top method where you can right click the Wait On Asynchronous Call and set a timeout. It will then wait that amount of time for the VI to finish and generate an error if it hasn't completed yet. There are better ways to handle knowing when the VI is done, but it is good for a quick and dirty solution: wait a few ms for it to finish, and if it isn't done, go service something like the UI and come back again later. I submitted that as an idea on the Idea Exchange, then AQ said why it was a bad idea, only for it to be implemented anyway years later.
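LabVIEW is graphical, so here is only a rough text analogy of that poll-with-timeout pattern, written in Python. `slow_task` and `service_ui` are hypothetical stand-ins for the asynchronously called VI and the UI servicing, and the timeout on `future.result` plays the role of the timeout set on Wait On Asynchronous Call.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

def slow_task():
    """Hypothetical stand-in for the asynchronously called VI."""
    time.sleep(0.2)
    return 42

def service_ui():
    """Hypothetical placeholder for other work, such as handling the UI."""
    pass

def wait_with_polling(future, timeout_s=0.01):
    """Wait in short slices; on each timeout, do other work and retry."""
    polls = 0
    while True:
        try:
            # Raises a timeout error if the task has not finished yet.
            return future.result(timeout=timeout_s), polls
        except FutureTimeout:
            polls += 1
            service_ui()

with ThreadPoolExecutor(max_workers=1) as pool:
    result, polls = wait_with_polling(pool.submit(slow_task))
```

As in the post, this is the quick and dirty option; having the task signal its own completion is usually cleaner than polling.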

-

XNode enthusiast here. I've never really minded that the LUT needs to be generated on first run, but maybe that's because I usually call these CRCs many times, so the first call being on the slow side doesn't bother me. If you did go down the XNode route, the Poly used could still be specified at edit time. It could be a dialog prompt, similar to Set Cluster Size on the Array to Cluster function, where the user specifies the Poly and the reflective settings used. Then the code generates the LUT and uses that. You could have it update the icon of the XNode to show the Poly and reflective settings used too. You could still have an option of specifying the value at run-time, in which case the LUT would need to be generated on first call. Again, I personally don't think I'd go this route, but if you do you can check out my presentation here. It references this XNode Editor I made.
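For readers who want the LUT trade-off in text form, here is a minimal Python sketch of a table-driven CRC where the table is built on first use and cached per polynomial. It assumes a reflected 32-bit CRC with the standard CRC-32 parameters; the function names are my own and have nothing to do with the XNode or the original VIs.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def build_table(poly: int) -> tuple:
    """Build the 256-entry table for a reflected 32-bit CRC.

    `poly` is the bit-reversed polynomial (0xEDB88320 for CRC-32).
    The result is cached, so only the first call per poly is slow.
    """
    table = []
    for byte in range(256):
        crc = byte
        for _ in range(8):
            crc = (crc >> 1) ^ poly if crc & 1 else crc >> 1
        table.append(crc)
    return tuple(table)

def crc32_reflected(data: bytes, poly: int = 0xEDB88320) -> int:
    """Table-driven CRC using init and final XOR of 0xFFFFFFFF."""
    table = build_table(poly)
    crc = 0xFFFFFFFF
    for b in data:
        crc = table[(crc ^ b) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF
```

Generating the table at edit time, as an XNode could, amounts to running `build_table` once ahead of time and storing the result as a constant.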

-

2 hours ago, Porter said:

They did say that maybe Unicode support is coming to LabVIEW. I would give LabVIEW a plus for that.

They said Unicode support was coming in the next 1 to 2 releases, two years ago. Priorities change, challenges happen, acquisitions go through, so I get that NI hasn't been as timely with their roadmap as they had hoped. Sarcasm aside, I don't actually want to be too critical of NI for missing roadmap targets. They didn't have to publish it at all. I'm grateful they did publish it, even if it is just to give an idea of what things they want to work on.

Oh, but I did look up the 2023 roadmap, and Unicode support was changed to "Future Development".

-

If you want to just plop a window inside another one you can do that using some Windows API calls. Here is an example I made putting notepad on the front panel of a VI.

https://forums.ni.com/t5/LabVIEW/How-to-run-an-exe-as-a-window-inside-a-VI/m-p/3113729#M893102

Also there isn't any DAQmx runtime license cost. If you built your application then the EXE runs without anything extra. There is a NI Vision runtime license cost so maybe that is what you are thinking?

If you have a camera stream you can also view it by using VLC. VLC can view a camera stream, and then you can use an ActiveX container to get VLC on your front panel. USB cameras are a bit trickier but if you can get the stream to work in VLC, you can get it to work in LabVIEW. Here is an example of that.

https://forums.ni.com/t5/Example-Code/VLC-scripting-in-LabVIEW/ta-p/3515450

-

Bots won't procrastinate as well as I can. It truly is what makes me human.

-

1 hour ago, X___ said:

So it is the new way to not release any info whatsoever ("NI did not create this content for this release") on new releases?

Here is some info on what changes there are in the new release.

https://www.ni.com/docs/en-US/bundle/upgrading-labview/page/labview-2024q1-changes.html

This is not the major release of the year so there isn't too much to talk about. As for fixing the build issue, I wouldn't hold your breath. I've already seen someone mention that the build for an lvlibp failed with error 1502: "Cannot save a bad VI without its block diagram." But turning on debugging allowed it to build properly.

-

On 1/19/2024 at 3:38 PM, ShaunR said:

There is an "Element" property for arrays. You can get that then cast it to the element type. Then your other methods should work (recursion).

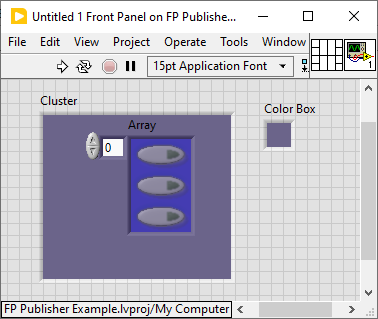

Thanks Shaun, but this doesn't quite work like I wanted. Maybe I didn't explain it well; I can be long-winded. Using your method this is what I get:

In this case I actually want the somewhat blue looking color, since that is what the boolean is in. The method suggested on NI's forums works but is clunky. It hides the array element, label, caption, and index, then gets the image of the array control. Then it gets the color of the pixel at the center of the image. With the element hidden it just shows the background, which is blue in this case.

As for why, I also ended up answering that on the NI forums. It is because I wanted to improve the web controlling VI code I uploaded a while ago here.

-

Okay so I have a front panel, with some boolean controls on it. I want to get the image of that control, and I want to put that image on top of other images. The background color of the other images is controllable, so if I use the Get Image invoke node method on the control reference, and just set the background color to what the background is of the pane that the boolean is on, then it is convincing enough. But I am having issues when these boolean controls are in other controls. If it is in a Cluster for instance I need to get the image of the background of the cluster. Clusters can be transparent so I need to keep getting the owner controls until I find one that isn't transparent. For bonus points I can also use the Create Mask VI to mask out a color, and I can use the color of the background element to get an even cleaner image of my boolean control.

This is all a pain but doable. The issue I have is when my boolean is in an array. My main goal is to just get the boolean control, with the alpha or specific colors masked out. But when it comes to having booleans in arrays that is an issue. Any suggestions? Attached is a demo of what I mean. Set the enum then run it. For the scalar we just get the pane color. For clusters we get the color, then if it is transparent, keep going up until we find a color, or find the pane. But how do I handle the array? Thanks.
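The scalar and cluster cases described above boil down to walking up the owner chain until something opaque is found. Here is a minimal Python sketch of that walk; the `Node` class and its fields are hypothetical stand-ins for control references, not LabVIEW API, and it does not address the array case being asked about.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    """Hypothetical stand-in for a control, cluster, or pane reference."""
    name: str
    color: Optional[int]            # None models a transparent background
    owner: Optional["Node"] = None  # None means nothing above this node

def effective_background(ctrl: Node) -> int:
    """Return the color of the nearest owner that is not transparent."""
    node = ctrl.owner
    while node is not None:
        if node.color is not None:
            return node.color
        node = node.owner
    raise ValueError("no opaque owner found")

# Boolean inside a transparent cluster, inside a blue cluster, on a gray pane.
pane = Node("pane", 0xC0C0C0)
outer = Node("outer cluster", 0x3366CC, pane)
inner = Node("inner cluster", None, outer)
boolean = Node("boolean", 0x00FF00, inner)
background = effective_background(boolean)
```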

-

We've been on 2022 Q3 64-bit for Windows and RT applications for a little over a year. Both the RT and Windows builds fail, but RT way more frequently. I've tried tracking down the offending VI, renaming classes back and forth, checkbox roulette in the build settings, and changing the private class data. The only thing that consistently works is clearing the compile cache over and over until it builds. Then running from source usually fails to deploy, and I clear it over and over until that works, which usually breaks the build again. My failure mode is different in that I don't see it hanging on initializing build.

All that being said, I'm glad to leave 32-bit behind.

-

In general it is just best to make your cluster a type def (which links all the places that data is used, similar to a defined struct) and then unbundle and bundle the data as needed. Since LabVIEW is a strictly typed language, getting all data from something like a cluster means it will have to return them as variants. And then what do you do with that? Well, you'll need the Variant to Data, and specify the type it should turn into. If it is the wrong type you get an error. So while you can get all elements of a cluster in order, the usefulness of it might be limited. If you are doing something like taking a cluster then writing it to a file, or reading it from a file and you want it to be somewhat human readable, then this might be a useful way of doing it. These functions already exist in the OpenG Variant Configuration File toolkit.
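As a loose text analogy only (Python is not strictly typed the way LabVIEW is), the sketch below contrasts direct access by name on a typed record with pulling every element out generically and converting each one back. `Settings`, `to_variants`, and `variant_to_data` are hypothetical names, not LabVIEW or OpenG functions.

```python
from dataclasses import dataclass, fields

@dataclass
class Settings:
    """Hypothetical stand-in for a type def'd cluster."""
    name: str
    rate_hz: float
    enabled: bool

def to_variants(cluster):
    """Return every element in declaration order as (label, untyped value)."""
    return [(f.name, getattr(cluster, f.name)) for f in fields(cluster)]

def variant_to_data(value, expected_type):
    """Convert back to a concrete type, erroring out on a mismatch."""
    if not isinstance(value, expected_type):
        raise TypeError(f"expected {expected_type.__name__}")
    return value

cfg = Settings("scope", 1000.0, True)
rate = cfg.rate_hz               # like Unbundle By Name: type known up front
elements = to_variants(cfg)      # generic route: types must be restored later
rate_again = variant_to_data(elements[1][1], float)
```

The generic route earns its keep for things like writing a whole record to a human-readable file, which is the use case mentioned above.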

-

On 12/5/2023 at 4:23 PM, Antoine Chalons said:

I remember when this thread started... am I old yet?

I can't answer that. But I can say there are many threads on the dark side, that also complain about searching over the years. And the conclusion there was the same.

-

I also had a hard time finding this in Google, which is a better search tool than the one built into the forums. Invision is the software the forums are built on, and the search comes with it. Occasionally the site admin will go through a migration to a newer version that adds features, and it is possible search has been improved. But since Google is the de facto search tool, they probably don't see much value in investing in better search tools. Sorry I don't have a good answer for this.

-

I knew of the malaphor only because my wife does it all the time, but then insists her phrase is the way people always say it. When you are up a creek, you are without a paddle. A bird in the hand catches bees with honey. Does the pope crap in the woods?

-

37 minutes ago, Neil Pate said:

Sure I know this pattern well, just prefer to have a single "mailbox" type event inside my actors for messages into the actor.

Oh yeah, I'm not trying to convince anyone, I was just putting it out there in case others aren't aware of this style of messaging. I prefer the design I described because it makes life easier on the developer using the API, at the expense of extra work for the architect making it.

-

21 hours ago, Neil Pate said:

Not for me though, my low level messaging uses events and that is just waaaaay to much drama to have separate events for every message. I wrap it up a layer with typedefs though, just the data is transported in a variant.

For me each message is its own user event that is a variant, that gets created at the start. Then you can use it as a publisher/subscriber paradigm because you can create an array of these user events and register for them all at once. This is great for debug since a probe can just subscribe to all user events and see all traffic between actors. The Variant has an attribute that is Event Name, which is pulled out so the one event case handles them all, but the name is unique. VI Scripting creates a case to handle each unique user event, with a type def on how to get the requested data out, and if it is synchronous, another type def to put the data back as a reply. New cases are created with VI scripting to generate the VIs for request, convert, response, and the case structure.
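A rough Python sketch of that publisher/subscriber idea: handlers register per event name, and a debug probe can register for everything to watch all traffic. The `EventBus` class and the event names are hypothetical and only illustrate the shape of the pattern, not the actual user event implementation.

```python
from collections import defaultdict

class EventBus:
    """Tiny publish/subscribe hub keyed by event name."""

    def __init__(self):
        self._by_name = defaultdict(list)
        self._probes = []

    def subscribe(self, name, handler):
        """Register a handler for one named event."""
        self._by_name[name].append(handler)

    def subscribe_all(self, handler):
        """Register a probe that sees every event, useful for debugging."""
        self._probes.append(handler)

    def publish(self, name, payload):
        """Deliver to probes first, then to handlers for this event name."""
        for probe in self._probes:
            probe(name, payload)
        for handler in self._by_name.get(name, ()):
            handler(payload)

bus = EventBus()
traffic = []   # what the debug probe saw
voltages = []  # what the "Set Voltage" handler received
bus.subscribe_all(lambda name, payload: traffic.append(name))
bus.subscribe("Set Voltage", voltages.append)
bus.publish("Set Voltage", 3.3)
bus.publish("Get Status", None)
```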

-

1 hour ago, Neil Pate said:

yup, exactly how I do it too. For me the notifier has a variant data type so (unfortunately) needs to be cast back to real data when the return value comes

Type def'd clusters take care of most use cases with this. I have had times when two separate applications are talking to each other, and in those cases if the type def cluster changes, then both applications need to be rebuilt so the data matches.

-

16 minutes ago, HYH said:

This is an old flaw. It was first mentioned in LabVIEW 2021 SP1, and reported fixed in LabVIEW 2022 Q3 :

But apparently not.

The word I heard was that this was the result of a code optimization gone wrong. NI attempted to add some code that would take shortcuts in loading things, skipping things it didn't think it needed. Some of these code optimizations were fixed in 2022 Q3, but others remained. So I believe you will see the icons get wiped away in fewer scenarios in later versions, but even the newest public release today may see this bug. Hope you have SCC and can easily recover them.

-

3 hours ago, ShaunR said:

Happy? Or do you mean grateful for small mercies? You wouldn't have been happier with a documented and example laden product that you can ask questions about and actually works?

How about halfway happy? Which is happier than I'd be with nothing. I enjoy a community that shares code. Sharing is caring. I agree that stuff on VIPM.IO should have a bit more polish. But a random forum post doesn't require the testing and rigor of a commercial product.

-

1 hour ago, ShaunR said:

Support is always necessary.

I disagree. Large amounts of my code online have never required my support.

1 hour ago, ShaunR said:

That never happens.

It's happened with me several times. I don't like speaking in these absolutes. These half finished projects have been extremely valuable to me over the years, way more valuable than having nothing. Often I will take the code, polish it, add features, then post it online. Not perfect, but posted so someone else could add to it if they ever wanted. A community that only shares perfect code shares nothing.

-

I know you have an aversion to unnecessary support. But still I'd suggest you just post them in the code in progress section of LAVA. I've posted many random projects and VIs on LAVA and the dark side over the years, and only my array VIMs and CAN stuff ever get any questions or comments. Most of the time the community comments make these code projects better. And if I'm unwilling to improve them, someone else can make the changes.

As for the specifics of these XControls, I have had a need for a dynamic or ribbon looking interface, which is why I made one using the 2D picture control. I should have made it an XControl, but those gave me so many edge-case problems that I just have it as a class. I did see a tab-like XControl posted online, but it was very limited in how it worked, and the community could probably use another example of how this can be done. I can't seem to find it now, but I think it was on NI.com.

-

Every year I have to ping Michael about adding the new version of LabVIEW to the selectable drop down. It is something on the admin side, not moderator side. I'm guessing he just wanted to save getting bothered, and added 2024 when he added 2023, believing that there would be a LabVIEW 2024 eventually.

-

NXG's file types were all mostly human readable. The front panel and the block diagram were broken up into separate XML sections. At one point someone asked about having multiple front panels, and it was shown that the XML structure supported from 0 to N front panels for any GVI. NI never said they intended on supporting any number of front panels other than 1, but I thought that would have been an interesting thing to explore.

Using variant and set/get attribute

in LabVIEW General

Is there a reason you are doing this, instead of having a type def'd cluster? Then you can use the Bundle/Unbundle by name to get and set values within a single wire? Then probing is easier, and adding or updating data types can be done by updating that one cluster. Typically Variant Attributes are used in places where an architecture wants to abstract away some transport layer that can't be known at runtime. In your case we know the data type of all the individual elements. If you were doing some kind of flatten to a Variant, then sending over TCP, you'd want some standard way to unwrap everything once it got back. I've also seen it used where a single User Event handles many different events, by using the Variant as the data type. Then each specific User Event will have a Variant to Data to convert back to whatever that specific event wants. There isn't anything wrong with what you are doing, I'm just unsure if it is necessary.