Posts: 1,187

Days Won: 110

Posts posted by Neil Pate

-

32 minutes ago, Antoine Chalons said:

I'm parenting today, so wait a bit for a screenshot, but let's say you have two 1D arrays of strings and you want to compute the intersection or the difference. How do you do it?

Also, open the VIM "remove duplicate from 1d array" in the Array palette; it uses sets. And check this: https://forums.ni.com/t5/LabVIEW-APIs-Discussions/Tree-Map/td-p/3972244?profile.language=en

OK, I see. Interesting, I had never really thought about doing anything like that. I will keep that technique in my pocket for the next time I am doing 1D array stuff. Thanks!
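LabVIEW is graphical, so there is no text code in this thread to fix. As a rough textual analogy of the set technique described above, here is a minimal Python sketch. The function names are hypothetical, and this is not how the VIM or the linked Tree-Map library is implemented. It only illustrates the idea: convert each array to a set once, so membership checks are cheap, instead of calling Search 1D Array inside a loop. Results are sorted purely to make the output deterministic.

```python
# Hypothetical helpers: a Python analogy of using sets on two 1D string arrays.

def intersection(a, b):
    """Strings present in both arrays, sorted for deterministic output."""
    return sorted(set(a) & set(b))

def difference(a, b):
    """Strings in a that are not in b, sorted."""
    return sorted(set(a) - set(b))

def symmetric_difference(a, b):
    """Strings in exactly one of the two arrays, sorted."""
    return sorted(set(a) ^ set(b))

def remove_duplicates(items):
    """Keep the first occurrence of each string, preserving input order."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result

if __name__ == "__main__":
    left = ["apple", "pear", "plum", "pear"]
    right = ["plum", "kiwi", "apple"]
    print(intersection(left, right))          # ['apple', 'plum']
    print(difference(left, right))            # ['pear']
    print(symmetric_difference(left, right))  # ['kiwi', 'pear']
    print(remove_duplicates(left))            # ['apple', 'pear', 'plum']
```

The de-duplication keeps a separate "seen" set rather than just returning `set(items)` so the original order of the array survives, which is usually what you want when the result goes back into an indicator.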

-

1 hour ago, Michael Aivaliotis said:

I can live without it. On the level of priorities for NXG, this has to be the least important. Just drop the VI on the diagram and wrap it in a While Loop.

It is so interesting how everyone uses LabVIEW differently.

For me, being able to stop a running application, keep the Front Panel of a particular subVI open and then, without any more work, run it continuously to debug it is so valuable.

-

1 hour ago, Antoine Chalons said:

I rarely use 1D arrays anymore!

Wow, now I really am interested!

Can you post a picture of something neat you can do with a Set? I have not really had the "aha!" moment yet with Sets.

-

I am a bit late to the Map party. I love them though, thanks NI. 🤩

For those who have not tried them, take a quick look. I have only used the Map a few times (so I cannot comment on Sets), but the API is nice and simple. Goodbye, Variant Attributes 🙂

-

11 minutes ago, Aristos Queue said:

Darren has acknowledged he is not the right person to develop a left-hand set of shortcuts. If you put together a list of shortcuts for yourself that work better for left hand, contact him... he's happy to promote them. You can swap out the QD shortcuts if you have a better set.

NXG actually seems quite good at figuring out what I am looking for with Quick Drop; I just type the name of the primitive and it appears. That is just as well, as I cannot identify anything by sight because the new icons all look totally weird to me...

-

5 minutes ago, crossrulz said:

Those are the same as what is default in LabVIEW 20XX. They were chosen by Darren specifically to only have to type with the left hand.

I am not convinced it is a good idea to promote these to mere mortals, though. Someone new to LabVIEW might actually try to learn and use them (instead of just typing the "<" symbol, which also works...).

Also, as a leftie, my right hand hovers over the arrow keys when programming, so it is nowhere near the left-hand side of the keyboard.

-

I am not sure if I have mentioned this, but the first time NXG starts it essentially freezes my computer so hard that I cannot even click anywhere else (like the Windows Start menu) or bring up the Windows Task Manager. This hard freeze lasts anywhere from 30 s to 60 s. Thankfully, things are better on subsequent launches.

-

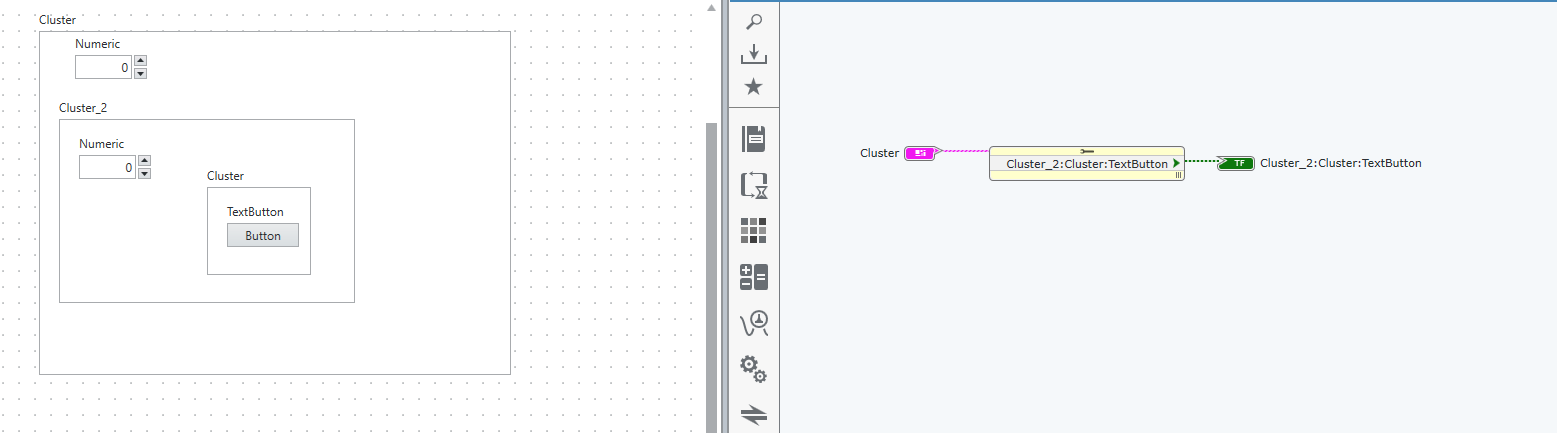

1 hour ago, Michael Aivaliotis said:So I guess NXG doesn't support dot notation unbundling.

You mean like this?

That is possible. Obviously for the sake of change a colon is now used to separate the elements, because hey they were changing everything else, right?.

-

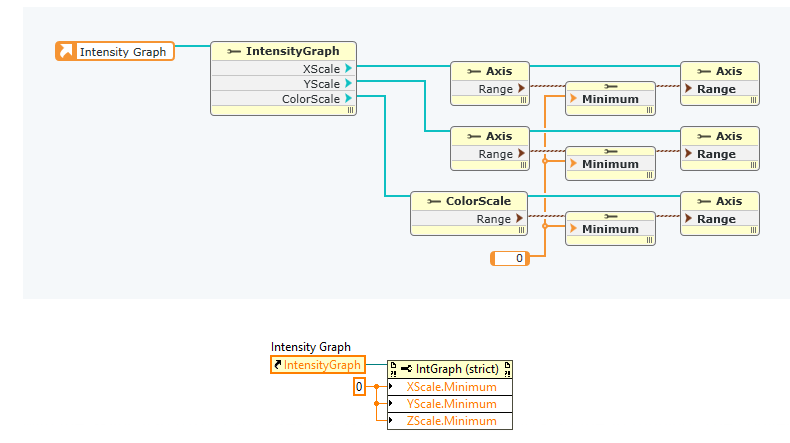

1 hour ago, Dataflow_G said:

Thanks for putting down all your thoughts and providing examples, Neil. I agree with every point you've made. Have you used the Shared Library Interface editor yet? That's some next level UI inconsistency.

I wrote a couple of blog posts on my experience converting a small (< 100 VIs, < 10 classes) LabVIEW project to NXG (see Let's Convert A LabVIEW Project to LabVIEW NXG! Part 1 and Part 2). During the process I made a lengthy list of issues and came to the same conclusions many people have voiced in this thread. Of the issues uncovered during the conversion, some were due to missing features or bugs, some a lack of understanding on my part, but a surprising number were due to interesting design choices. The TL;DR of the blog is there is nothing in NXG for me to want to continue using it, let alone switch to it from LabVIEW. Which is sad because I was really hoping to find something to look forward to.

Here's hoping for a LabVIEW NXG: Despecialized Edition!

Your two blog posts make really interesting reading; thanks for taking the time to document your experience. You really are persistent! This is a really nice write-up that will probably be totally ignored by all the managers and C# developers at NI and their subcontractors.

Your example of setting the scale ranges just screams .NET interface! Yuck 😞

Same with this unbundling drama in classes. No LabVIEW developer would look at the technique in NXG and conclude that it is a good experience.

-

And I had totally forgotten that Run Continuously has been removed.

Like all good citizens, I don't use this for actually running anything, but it is so helpful when debugging. In Current Gen, when I have a misbehaving VI and all the data is already "pre-loaded" in the controls, I turn on Retain Wire Values and click Run Continuously. I can then just hover my mouse over the wires and usually spot the mistake in my code very quickly.

Why was a feature like this removed? Sure, it is not a great idea to use it to actually run my application, but why remove it? Hide it away somewhere if you are worried it is going to be abused.

I raised this issue in the technology preview forums years ago.

Again, surely there are others who use this feature regularly?

Edit: I was probably too harsh here, sorry. It seems that in NXG, if you have Retain Wire Values turned on and hover over wires after the VI has run, you do actually get a small tooltip-style pop-up that displays the value on the wire. I need to play a bit more with this implementation.

Edit 2: actually, I forgot another useful scenario where I still use Run Continuously: simple VI prototyping. I write some code that does some kind of data manipulation and want to test it quickly and manually with a bunch of input vectors. I hit Run Continuously, quickly try a bunch of input values and manually observe the outputs. Now in NXG I will have to create a new VI, drop down a loop of some sort, drop in my subVI, wire it up, etc. What a pain in the ass... 🤮

-

The GUI of NXG is unfortunately stuck in 2010. Perhaps forever.

The depressing thing is I don't even want to know how much money has been sunk into development.

-

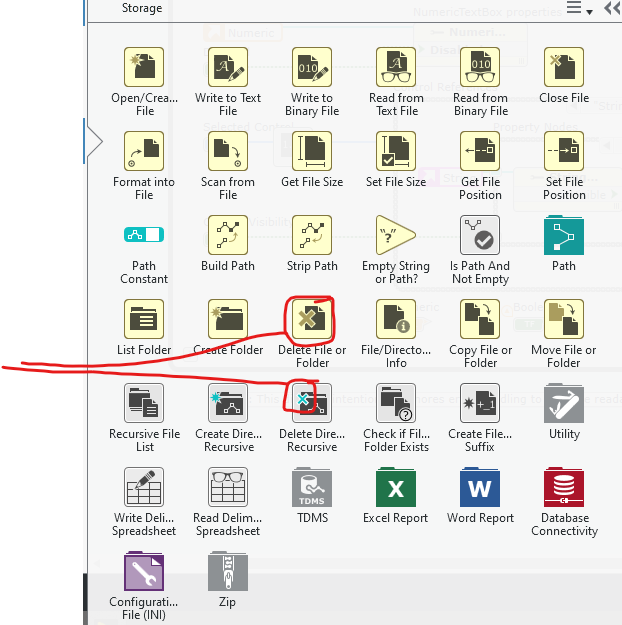

Apparently X now means delete. (As an aside, I am not sure why the "X" is a different size on these two icons. Also, why do some of these items have blue overlays (like Create Recursive) but not others (like Create File Suffix or Check If File Or Folder Exists)?)

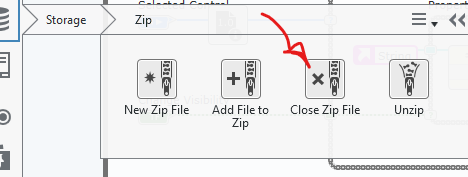

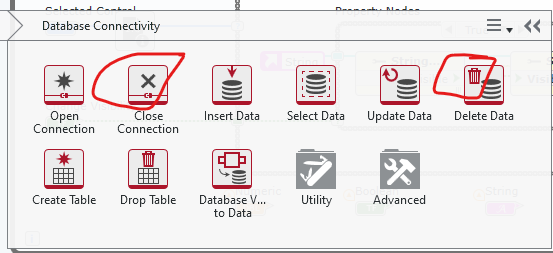

Oh no, wait, it is not. Here X is close.

Here it is close as well. And now we use the bin for delete.

Sigh...

-

Why do web VIs have a different file extension?

Now, I have not used these in NXG, but I sincerely hope we don't get different file extensions for RT and FPGA VIs.

-

35 minutes ago, Albert Geven said:

Hi

A lot is solved with quickdrop but not everything...

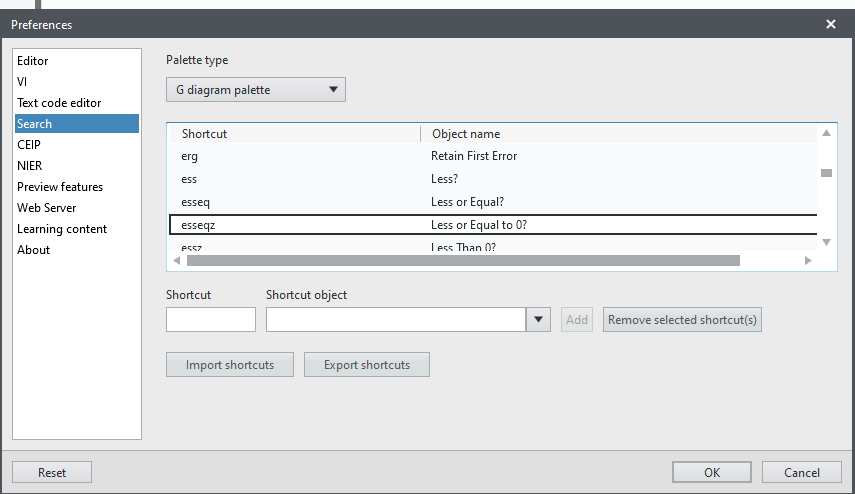

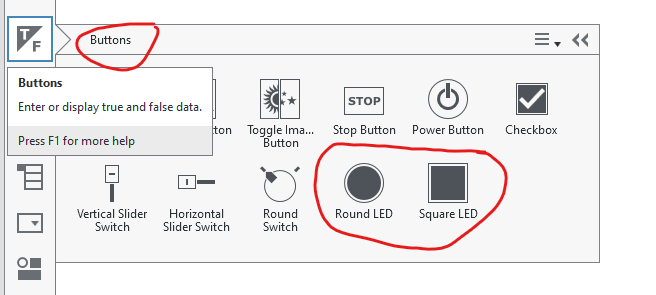

Speaking of Quick Drop... "esseqz" really just flows out of the fingertips, does it not? (Thankfully, just typing "Less" in Quick Drop gets you what you want.) I think the machine learning algorithm that was used to generate these "shortcuts" might need a bit more training data. Pity the algorithm did not at some point put its hand up and say, hang on, this is a bit crazy. Just look at the others:

"ess" for Less...

"erg" for Retain First Error

-

2 minutes ago, drjdpowell said:

Neil, you're almost getting me to consider installing the latest NXG and trying to give feedback again. Almost. It's too depressing. And there's no good channel for feedback; that forum link AQ gave is practically dead. And I doubt any NXG devs are keeping up with LAVA. I gave some feedback on the Champions forum, but that's not public.

Please do give it another whirl. It is certainly getting better, but most of the pain points we have been moaning about for literally years are not being addressed. I feel this feedback is just going nowhere. It is quite telling that the NXG devs do not monitor LAVA (I agree with you).

I wish NI could show us just a single medium or large application that is being developed in NXG.

Thankfully Current Gen is still fantastic and just getting better, but at some point (probably in less than five years) work is just going to be stopped and then it will slowly wither into irrelevance.

-

I really like the auto-alignment feature of the Front Panel, but why is it disabled when designing a new GType? There seems to be no snapping at all, so getting things to line up nicely in a cluster is a lot more work than it should be.

-

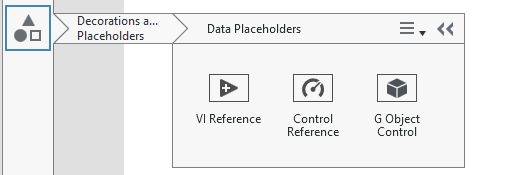

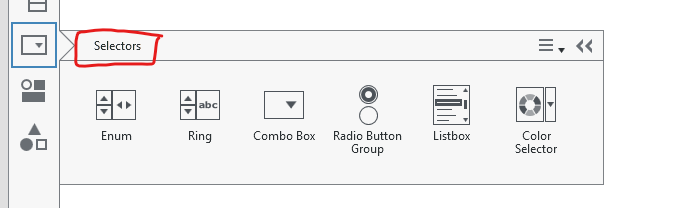

Anyone want to guess what is in the bottom icon? (Not to be confused with the Cluster which looks quite similar and is just above it).

Yes, you guessed right. Decorations and control references! Because those definitely deserve to be grouped together. But just to further confuse things, they are now called Data Placeholders.

I am sorry, I just cannot believe this GUI was designed by anyone who has actually used LabVIEW in any capacity or that this is the result of 8 years of iteration.

-

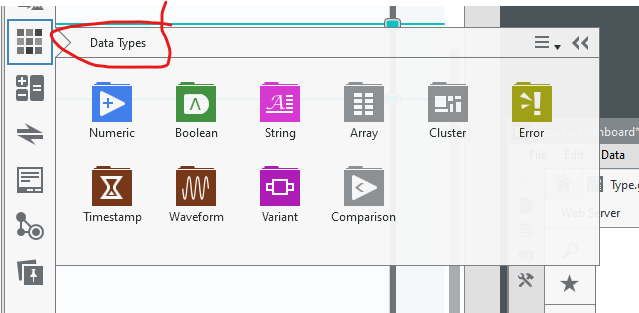

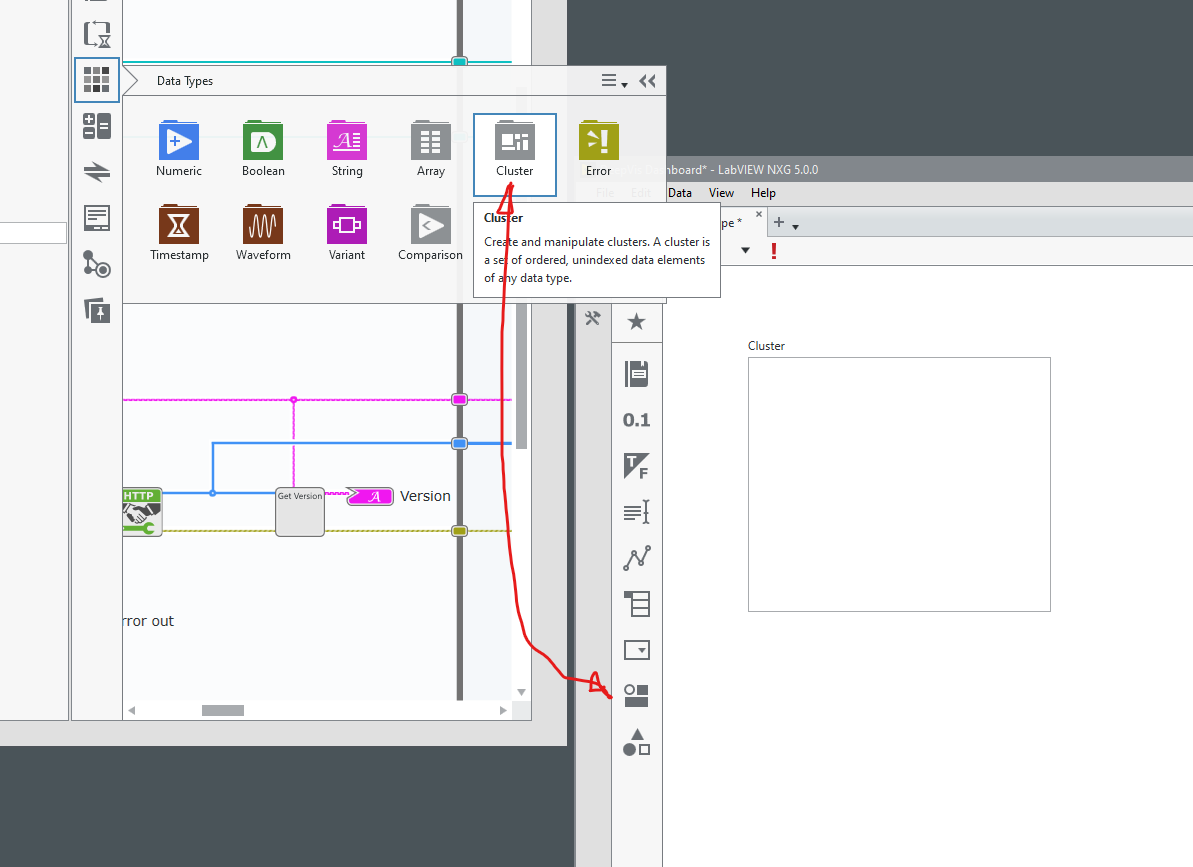

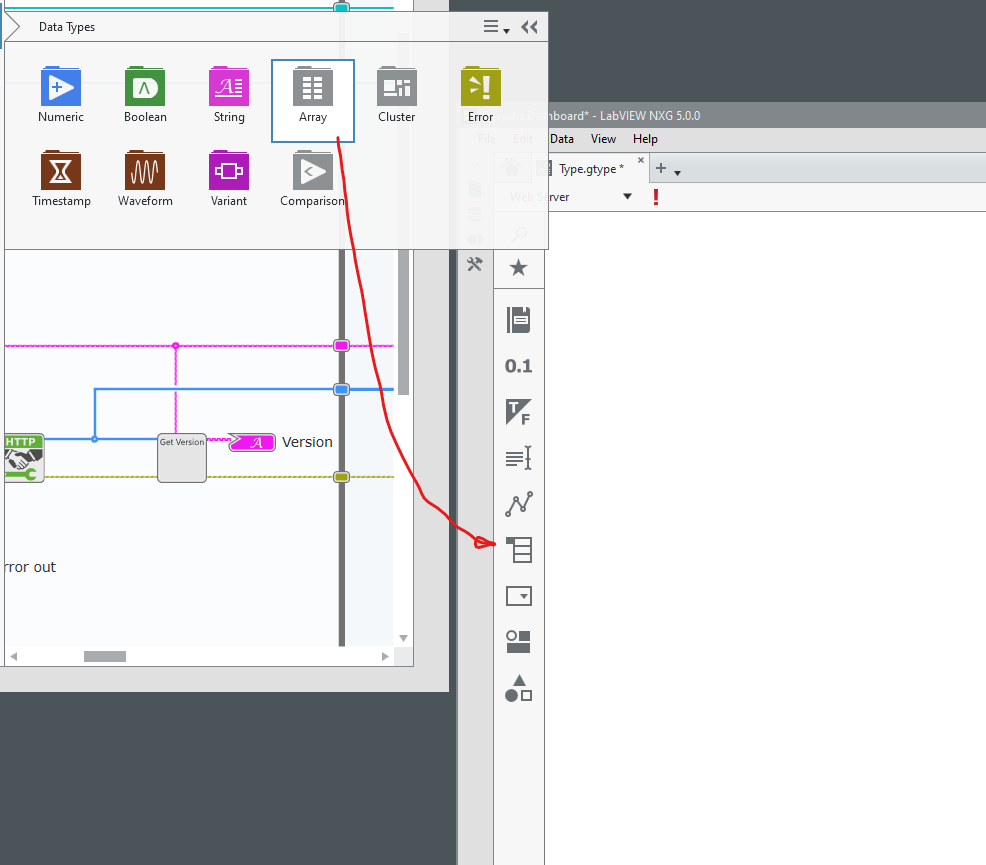

These are not actually Data Types. They are palettes for manipulating data.

-

Why are there two different representations of clusters and arrays?

-

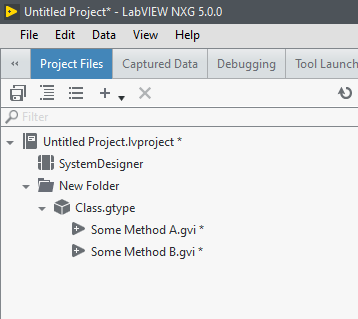

Please tell me I have missed something obvious...

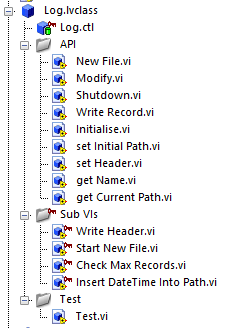

By visual inspection of the project, what is the access scope of the two methods in the class?

NXG has taken away Virtual Folders and now also the visual indication of access scope? I am being dumb here, right? Surely I must be missing something obvious?

For comparison, here is a class I wrote 10 years ago. Which of these looks easier to use? (Note: my actual class on disk has a flat structure; no API or Sub VIs directories are present.)

Now I have reminded myself that Virtual Folders are gone. This is so terrible.... Why NI? 😞

-

Check out this excellent presentation that covers a lot of the bases.

-

I am still not convinced I want to develop a 2000+ VI project in NXG, or that one has even been done yet. We are probably close to eight years (or more) into the development of NXG. That is a very long time to go without this kind of test.

-

In "EXE back to buildable project" (Application Builder, Installers and code distribution):

https://www.cvedetails.com/vulnerability-list/vendor_id-12786/product_id-25696/NI-Labview.html