Posts posted by Neil Pate

-

-

Anybody got anything older than this video about LabVIEW 5.0?

-

You are not alone, Cat. I got a similar error and, after battling with NIPM for a week or so, gave up and just used a different computer. The error message was totally nuts, as it also came up with the offline installer.

-

Yes this is bad news indeed. The forum channel is full of angry customers.

For what it's worth I have managed to import several repositories from Bitbucket into github using the github import, but it failed for me when I had 2FA turned on in Bitbucket.

-

I don't actually need the large memory support at this point, but feel it is getting close to calling time on 32 bit applications. I have been dabbling with LV2019 64 and so far it does what I need but admittedly I have not needed to interface with any hardware yet apart from a GigE camera.

-

@Rolf Kalbermatter my brain was clearly running badly when I typed that we needed LV2018. What I actually meant to say was that 64 bit LV was required. 🤐

I say this as it is my understanding the Tensorflow DLL is strictly 64 bit.

Do you suppose it would be possible to use 32 bit LV if going via the Python route?

-

Thanks JKSH

I echo your sentiment. Versioning is always going to be a problem, but just recently Tensorflow hit 2.0, so that was what I was planning on supporting as the minimum version.

Going down the python route is also interesting but a bit fraught. Probably due to my inexperience with python and its tool chain, I spent the better part of a month just trying to follow myriad tutorials online to get python and tensorflow working properly on my PC, with very little to show for it.

I do think it would be reasonable to say that a toolkit such as this requires LV 2018 or greater.

-

Although my intended application is vision based I suppose in principle a Tensorflow Toolkit should support other types of ML applications. As I said I don't really know enough about tensorflow to know how big an undertaking it would be to create a wrapper for the whole DLL.

-

Hi everyone,

I have recently been playing around with the Tensorflow support built into the Vision Development Module. It seems to work fine and I have the basics up and running. There are a couple of problems though that I would like to solve.

Firstly, it seems to not use GPU acceleration at all which mostly makes it useless for real time processing at any sensible frame rate.

Secondly, it is locked into the VDM which is not cheap and also totally closed source. Under the hood the regular Tensorflow DLLs are called, but via a layer of the Vision toolkit.

Third, for this closed source reason we are locked into whatever version of Tensorflow NI chooses. I totally get that from their business point of view, but conversely I suspect that there is very little push inside NI to update this regularly due to all the hurdles that come with it.

My proposal is to implement some kind of community edition of Tensorflow API that wraps the Tensorflow DLL directly in LabVIEW and exposes hardware acceleration capabilities.

Anybody interested in collaboration? I know a little bit about Tensorflow, but not enough to be productive, so my first step would just be to mimic the API provided by NI which is deliberately quite simple.

-

Not seen the banner at all since you did the switch, so it seems to be working. Thank you

-

Sorry, I know way too little about the subtleties of PPLs!

The only other thing I can think of is making sure the class is being loaded from the right place on disk. From your screenshot you have a bit of the path in the first dialogue. Can you try to figure out the whole path and then pass it to the Get Default Class VI (or something like that, I am not at my PC)?

-

The error probably goes away in your last scenario as dropping the class constant onto the block diagram causes the LabVIEW compiler to automatically include that class in the build. This kind of thing happens all the time with factory pattern type stuff in LabVIEW, where everything works perfectly in the IDE because all the classes are in memory.

If that is the problem you can solve it in a number of ways. One of the easiest I have found is to make a dummy VI that you can then include in the build or place somewhere on your top level VI. In this VI just drop down all the class constants of the classes you are using. This kinda breaks the lazy loading paradigm, but you win some you lose some.

Just as a side note, I avoid PPLs like the plague as I just cannot see a good use case for them for "normal" applications. The sheer number of problems that arise with PPLs have caused me to put them in the same category as Shared Variables; nice in principle but never to actually be relied upon...

-

Thanks Michael. I have not seen it today 😁

-

So out of curiosity, how does everyone else handle their name generation? Multiple cameras with multiple image processing steps, each needing temporary storage. I usually try to programmatically generate the name, but now that I think about this thread there must be a better way that I don't know about.
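For illustration only, here is a minimal sketch of one way to generate such names programmatically. It is written in Python rather than LabVIEW, and the class name, the `camera/step/index` naming scheme, and the example camera and step names are all assumptions, not anything from the thread.

```python
import itertools
import threading


class ImageNameGenerator:
    """Hands out unique buffer names of the form 'camera/step/index'."""

    def __init__(self):
        # A single shared counter guarantees uniqueness across all cameras and steps.
        self._counter = itertools.count()
        self._lock = threading.Lock()

    def next_name(self, camera: str, step: str) -> str:
        # The lock keeps the counter safe if several loops ask for names at once.
        with self._lock:
            index = next(self._counter)
        return f"{camera}/{step}/{index}"


names = ImageNameGenerator()
first = names.next_name("cam0", "threshold")
second = names.next_name("cam0", "threshold")
print(first, second)
```

The point of the shared counter is that two requests never collide, even when the camera and step are identical.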

-

Anyone else still getting the green wiki banner at the top? I must have dismissed this about 100 times if not more but it keeps coming back. This is on mobile Chrome but I am pretty sure I see it on desktop Chrome too.

-

Wow that sounds pretty rubbish! I have never really gotten to the point where I have said yup I totally understand this API.

-

The one thing that always confused me was writing the actual image to the terminal. If it was purely reference based there would be no need to sequence it as I have done it in my demo. The indicator gets updated when the same actual data value hits it, which is a bit unreference like (maybe...). As Rolf says, a strange duck.

This does help me know what happens if, for example, I broadcast an Image reference on a user event. No data copy of the whole image occurs, and if I do stuff to the ref coming out of the receiving event it might affect my original image, as it is not a copy.
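The same behaviour can be sketched outside LabVIEW. The Python snippet below is only an analogy: `queue.Queue` stands in for the user event and `ImageBuffer` is a hypothetical stand-in for an image reference, so neither is the actual Vision API.

```python
import copy
import queue


class ImageBuffer:
    """Hypothetical stand-in for an image held by reference."""

    def __init__(self, width: int, height: int):
        self.pixels = bytearray(width * height)


events = queue.Queue()          # stands in for the user event
original = ImageBuffer(4, 4)

events.put(original)            # sends the reference, not a copy of the pixels
received = events.get()

received.pixels[0] = 255        # modify via the receiving side
same_object = received is original
original_first_pixel = original.pixels[0]

# An explicit copy is needed if the receiver must not touch the original.
snapshot = copy.deepcopy(original)
snapshot.pixels[0] = 0
print(same_object, original_first_pixel, original.pixels[0])
```

Here the receiver and the sender hold the same object, so the write shows up in the original, while the explicit copy stays independent.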

-

-

this is the external DVR I was talking about

-

Hi Tuan,

I would be a bit surprised if you could write to disk at 3.2 GB/s for any reasonable length of time even with a fast SSD. You say longer than 10s, how much longer? If you can buffer in memory all your data you could stream to disk as needed at a slower rate.
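As a rough back-of-envelope sketch of the buffering idea: the 3.2 GB/s rate and the 10 s duration come from the question, while the 1.5 GB/s sustained disk rate is purely an assumed figure for illustration.

```python
def required_buffer_gb(acq_rate_gb_s: float, disk_rate_gb_s: float, duration_s: float) -> float:
    """Memory needed to absorb the gap between acquisition and disk write rates."""
    # Only the part of the stream the disk cannot keep up with has to sit in memory.
    backlog_rate = max(acq_rate_gb_s - disk_rate_gb_s, 0.0)
    return backlog_rate * duration_s


ACQ_RATE = 3.2      # GB/s, from the question
DISK_RATE = 1.5     # GB/s, assumed sustained SSD write rate
DURATION = 10.0     # seconds, from the question

total_gb = ACQ_RATE * DURATION
buffer_gb = required_buffer_gb(ACQ_RATE, DISK_RATE, DURATION)
print(f"total data: {total_gb:.1f} GB, buffer needed: {buffer_gb:.1f} GB")
```

With these numbers the acquisition produces about 32 GB in total, of which roughly 17 GB would have to be held in memory while the disk catches up.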

Something I came across recently which I was surprised even existed, is a feature where an FPGA can use a DMA FIFO transfer to write directly to a TDMS file using a kind-of DVR (external DVR perhaps). Not sure if your hardware supports that but it might help with performance.

-

They are just the dialogues indicating what is happening, no user interaction is required.

The first dialogue is Package Manager and the next one is all the RTE stuff.

-

I have a legacy application I am updating to use LV2019. It has an installer for the application which installs the run-time engine and the application itself (and a bunch of other stuff). This is done using InnoSetup and not NI's installer creator. Previously, I was able to run the RTE install in totally silent mode, where no popups or anything came up on the screen. I would like to do the same thing but cannot use the same parameters, as 2019 uses the new NIPM format.

I can get the installer to run through from start to finish with no user interaction using:

install.exe --passive --accept-eulas --prevent-reboot

However, during install it pops up its own dialogue.

Does anyone know how I can suppress this dialogue?

-

18 minutes ago, hooovahh said:

The only thing to add to Neil is that the Wait Until Completion should be True if you are going to read the standard output after it is complete.

Indeed.

In my circumstance though, I don't really care too much for the output, I have some downstream code that waits a bit and checks for correct generation of a PDF file I am creating.
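The "launch, then wait a bit and check for the file" approach can be sketched in text form as below. This is a Python analogy, not the LabVIEW code: `wait_for_file` and `launch_and_wait` are hypothetical helper names, and the timeout and poll values are arbitrary assumptions.

```python
import subprocess
import time
from pathlib import Path


def wait_for_file(path, timeout_s: float = 30.0, poll_s: float = 0.5) -> bool:
    """Poll until a non-empty file exists at path, or the timeout expires."""
    target = Path(path)
    deadline = time.monotonic() + timeout_s
    while True:
        if target.is_file() and target.stat().st_size > 0:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


def launch_and_wait(command, output_path, timeout_s: float = 30.0) -> bool:
    """Start the generator without reading its output, then check for the result file."""
    # Popen returns immediately, which mirrors not waiting for completion.
    subprocess.Popen(command)
    return wait_for_file(output_path, timeout_s=timeout_s)
```

Checking for the output file rather than the standard output keeps the caller independent of whatever the batch file prints.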

-

@Brainiac did you solve this in the end?

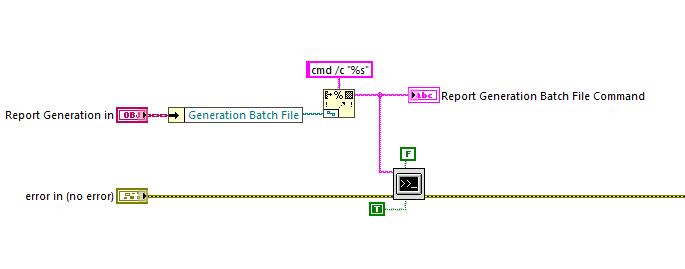

The code below shows how I do it, this works completely fine for me on Windows 7..10.

The Generation Batch File is the complete path on disk, I don't use the Working Directory input.

-

Now that you mention it, I recall a work around I had to put in place some time ago on a touch screen only application. Sometimes my custom dialogs got hidden behind the main panel, the way we solved it in the end (ugly but worked), was just to periodically force all the dialogs to be on top. Each dialogue type actor was responsible for getting itself on top. Not pretty...

DLL Linked List To Array of Strings

in Calling External Code

Posted · Edited by Neil Pate

Just when you think you know everything there is to know about a language (😉) somebody comes and shows you otherwise 😲