Everything posted by Daklu

-

Yes, that is typically what they say and as a general rule of thumb, they are right. Programmers not trained in software engineering or familiar with OO thinking often create tightly coupled applications. What they should say is, "unnecessary coupling should be avoided." Too much coupling makes it hard to change the software. What they should also say is, "unnecessary decoupling should be avoided." Too much decoupling makes the code harder to follow and may impact performance. How much decoupling is "enough?" If you can easily make the changes you want to make, it is enough.

-

In this particular case I was imagining the abstract parent class in the exe and the child class in the llb. I think you could do that. You do end up unable to create any parent-child relationships within the llb, but that may be an acceptable constraint given the circumstances. I hadn't considered that, but it does make sense. Yeah, that's a good idea. VIPM uses zip files for their package format. Probably have to do some pre-install processing to suck up all the dependent vis and namespace them, but I don't see a good way to get around that.

-

Absolutely, and I didn't mean to imply otherwise. Motivational hedonism only uncovers the first layer of reasons why we act the way we do. There are many more layers to go. I do believe thinking about actions in terms of pleasure/pain has made me more empathetic and less apt to idolize or condemn others. I'm much more able to accept them as they are instead of comparing them against a standard of what we "should" do. Agreed. Nature vs. nurture. That's a 40-page thread all by itself.

-

Herded? I think it's more like dropping a dead deer in a pack of hungry wolves. Sure we can make conclusions from this thread. I already have: 1. We can't agree on the criteria of "decoupled." (And I haven't seen any compelling arguments for accepting one set of arbitrary criteria over any other arbitrary set of criteria.) 2. Without a strict set of criteria, "decoupled" is meaningless in and of itself. It only means something when considered against a set of expected changes. 3. Since we obviously mean something when we use the word "decoupled," we each apply our own set of expected changes to the code in question and come up with different answers, all of which are correct. Conclusion #1: Therefore, when someone asks "is x decoupled?" the only good response is, "what changes do you expect to make?" Conclusion #2: Cat is a rabble rouser.

-

I think ppls *should* be able to do it, but in practice the process for getting there isn't intuitive. At least... it's not intuitive to me. Since you want a single file for each plugin, you're limited to packaging the plugin in a dll, ppl, or llb. I have no idea what LabVIEW code compiled into a dll looks like to other LabVIEW code. Maybe it would work...? Using a ppl to do this is finicky at best. I haven't used LLBs either. Do library VIs retain their namespace and visibility when placed in an LLB? That might end up being the best solution, though I don't think you will be able to have any parent-child relationships inside the llb. This is a packaging and deployment issue and shouldn't have too much impact on the overall application architecture. Knowing you want plugins is enough to get started. I'm almost positive all your stated requirements can be met--at the extreme you could wrap each plugin in code that makes it operate as a standalone app and communicate via TCP--it's just a matter of how much extra stuff you can tolerate to get there.

-

John was hoping to have a single file for each plugin to simplify distribution. Given the uncertainty of working with PPLs, deploying a directory instead of a single file might be the simpler solution.

-

I claim to have invented a three-sided square and I call it a "squangle." Does the fact that a squangle--by definition--can't exist (in Cartesian space) mean the term shouldn't be used? No, because it conveys an idea; the concept of a three-sided square. I agree with you here. "Altruism" means something, but it doesn't mean "acting against one's self interest," which I think is the implied definition the author uses. He says, "Case 3, however, might be a little more problematic. In fact, I figure many folks would immediately suggest that our hero was acting with altruism. If he was a "believer" in the rhetoric which suggests that giving one’s life for a cause is a worthy exploit, then of course he would be altruistic, right? No. Anyone who believes that the trade of his life is appropriate in exchange for some higher goal is, by definition, valuing that higher goal more than his life!" If we accept the author's proposition that people always choose actions that return the greatest perceived value then an "altruistic" person is simply one who values things differently than the average person. In general I would say an altruistic person places less value on personal comfort and self existence than most people. [Edit - Rats, Yair beat me to it.]

-

How about if instead of bending a variant into doing something like that we get real data structures and iterators instead? (Yeah, yeah, it's on my list of things to do for LapDog...)

-

I wish I could explain things as well as these guys... that quote pretty much sums up what I was trying to say where "what he likes" = pleasure and "what he does not" = pain.

-

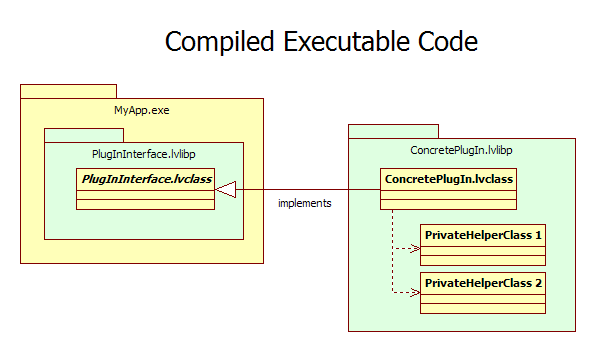

Well, I built a sample project that demonstrates the concept. And it even kind of works.... sometimes.

1. I thought the exe would absorb PlugInInterface.lvlibp, but it doesn't. It appears all packed project libraries (ppl) remain external to any builds that use them.
2. If an executable depends on a ppl, a copy of that ppl will be created during the build. Oddly, the built code doesn't appear to use the copy created during the build. It links to the same copy you used in the source code. After building the PlugInInterface ppl, move it to the directory where you are going to build the exe, and link to that copy in the MyApp source code.
3. Using a release version of PlugInInterface.lvlibp while creating ConcretePlugIn.lvclass prevented me from using the wizards to override parent class methods. A Release build strips the block diagrams and it appears the wizards need the block diagrams. It might work if you manually create all the overriding methods, but I didn't explore that much. It worked after I switched over to a debug ppl build.
4. Once you add a ppl to your project you can't move its location in the file system. You have to completely remove it from your project, move it, then add it to your project again. My main method has only one class constant and one method from the ppl, and it still irritated me to have to delete them before moving the ppl. I tend to move things around a lot while I'm refactoring so this is a show stopper for me.
5. In general, LabVIEW was a lot less stable while I was playing around with ppls.
6. The attached version doesn't work very well--it's not loading the concrete plug in class. It *was* loading it earlier but I haven't figured out exactly what changed yet.

How important are those particular requirements? MyApp.zip

-

Amen. You've greatly simplified what I was trying to say. (Wish I had a full-time copy editor to review my posts before I submit them...) Extending that thought a bit, since "decoupled" has no concrete, objective definition it follows that any assertions that a particular component is "coupled" or "decoupled" are based on an arbitrary set of requirements. Unless those requirements are identified and agreed upon it's unlikely a group of people will reach a consensus.

-

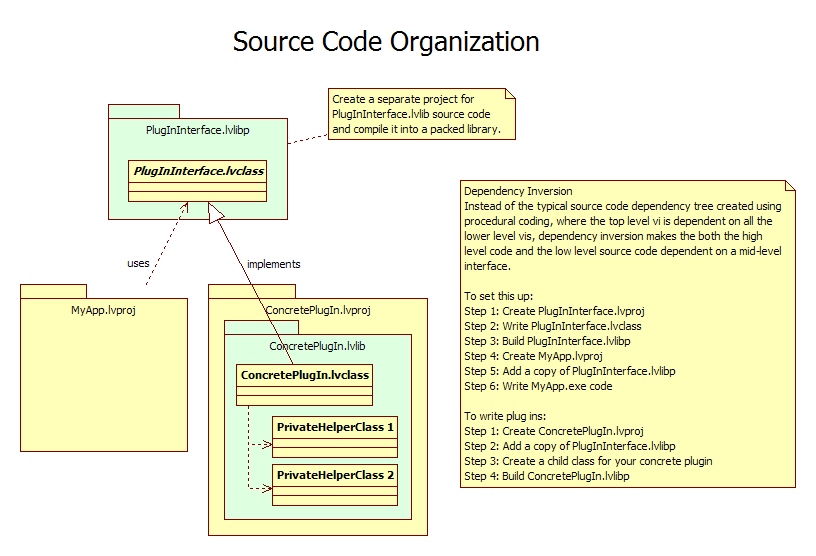

(Glad Lava is back... was going through withdrawals...) Okay, here's how I think you can accomplish your goals. I haven't actually done it yet, but based on available information I believe it will work. Your source code will be set up like this. Once you create PlugInInterface.lvlibp, you can have different teams work on MyApp source code and concrete plug in source code in parallel. Just make sure they all have the same version of the packed library... I'm not sure what will happen if you start mixing versions. Distributing the packed library to plug in developers might be a little unusual. It's designed for a specific app, so it's not really a candidate to add to your general reuse library. Personally I'd probably store each version on a server and let the devs download the most recent version when they start a new plug in. If your scc supports externals that might be another way to do it, but I can't speak to that. When it comes time to create an executable, I'd include PlugInInterface.lvlibp as part of the executable, as shown here. Again, I haven't tried it and I'm not positive any of this will work, but it's the route I'd explore given your requirements.

-

This topic is related to the recent thread on Decoupling the UI. Creating plugins that can be executed without running the main exe is pretty easy. The module itself shouldn't know or care who is asking it to do things. How complex the wrapper is depends on the module's api and how much set up work the main app needs to do to create and use the modules. Creating plugins where no source code dependencies exist between the application and the plug ins is a little trickier, but it can be done. The solution is something called The Dependency Inversion Principle. The DIP states: High-level modules should not depend on low-level modules. Both should depend on abstractions. Abstractions should not depend on details. Details should depend on abstractions. I have a UML diagram of one solution using packed project libraries, but can't upload it right now. AQ briefly mentioned how to do it here.
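A rough text-language sketch of the Dependency Inversion idea, since a block diagram can't be pasted here. This is Python rather than LabVIEW, and it only illustrates the dependency direction, not the packed project library mechanics. `PlugInInterface`, `MyApp`, and `ConcretePlugIn` borrow the names used elsewhere in this thread; the method names (`name`, `execute`, `register`, `run`) and `run_standalone` are made up for the example.

```python
from abc import ABC, abstractmethod


class PlugInInterface(ABC):
    """Abstraction that both the app and the plug-ins depend on."""

    @abstractmethod
    def name(self) -> str: ...

    @abstractmethod
    def execute(self, request: str) -> str: ...


class MyApp:
    """High-level module: knows only PlugInInterface, never a concrete class."""

    def __init__(self) -> None:
        self._plugins: dict[str, PlugInInterface] = {}

    def register(self, plugin: PlugInInterface) -> None:
        self._plugins[plugin.name()] = plugin

    def run(self, plugin_name: str, request: str) -> str:
        return self._plugins[plugin_name].execute(request)


class ConcretePlugIn(PlugInInterface):
    """Low-level detail: depends on the abstraction, not on MyApp."""

    def name(self) -> str:
        return "concrete"

    def execute(self, request: str) -> str:
        return f"concrete handled {request}"


def run_standalone(plugin: PlugInInterface, request: str) -> str:
    """Thin wrapper that exercises a plug-in without the main app."""
    return plugin.execute(request)
```

Neither `MyApp` nor `ConcretePlugIn` refers to the other; both refer only to `PlugInInterface`. That is also why the plug-in can be driven by a small wrapper without the main app being present.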

-

My bad LV habits acknowledged, a short list

Daklu replied to MoldySpaghetti's topic in LabVIEW General

Was looking for some old content and ran across this, which I hadn't seen before. Here's the main problem I've had with explaining the benefits of OOP to people... Any sort of OOP example is hampered by the fact that it has to be simple enough for users to easily understand it. For simple examples, the inevitable question everybody asks is "Why didn't you just do <insert procedural implementation>? It's way easier to implement." I (or whoever is doing the demonstration) typically start explaining what kinds of changes are easily accommodated by the OOP implementation. Sometimes that is sufficient; usually it is not. Demonstrations are good at showing how to do something using OOP and what can be done with it; they are not good at convincing someone why they should use OOP. For me, the benefit of OOP is felt through improvements to my entire development process. Testing is easier so I have higher confidence in my code. Change requests are easier to fulfill without risking introducing undiscovered bugs. APIs between the various internal components are better defined, making the whole process easier. Overall I experience far less frustration during development using OOP than I do using procedural programming. Those improvements are tangible to me, but they're not something I can demonstrate to the listener. In a nutshell, the real reason I use OO instead of procedural programming is that I can create higher quality code faster. Generally speaking, I can get 80% of the requirements done faster if I program procedurally. Those "positive functional requirements" are the requirements that define the application's reason for existence. It's that last 20%, the "by the way" requirements, that takes much longer to implement and test correctly. These are the things people usually don't think to specify when defining the requirements--specific error handling or UI behaviors, a "pause" button, "unattended" mode, etc.--but ask for when they start working with the app.
Often implementing that last 20% requires either a) rewriting significant portions of the application, or b) allowing the various components to become more tightly coupled than they should. Neither option is desirable. Option a is essentially throwing away all the time and money spent working on the code that's being tossed out. Management tends to frown on that. Option b is incurring code debt, which management usually ignores until the app's growing fragility causes the manufacturing line to be shut down while the software team scrambles to untangle the mess of interconnected hacks and quick fixes. [Edit: Having typed all this up I realized my comments aren't relevant to the point I think you were making. (Reusable class libraries?) Oh well... I'll let them stand.]

-

I think you only have two options here: 1. Create custom pre-build vis that create copies of your reusable code, or 2. Build the modules into dlls. (Note - I have no idea if you can dynamically load a child class from a dll.) AQ's timing is impeccable once again. He commented on this just this morning over here. "A class cannot leave memory until all objects of that data type have left memory." Here's my interpretation of what that means in your situation. Creating instance 1 of the module will obviously require disk access. Creating instances 2..n before instance 1 goes idle will not require disk access. If at any time all instances of a module are idle, the module may be unloaded and you'll take a disk hit when you instantiate the module again.

-

Sounds useful. Sharable? I've had error messages that say things like, "If you're seeing this error, the developer who wrote the code didn't read the documentation. Find him and smack him." But they tend to be few and far between.

-

> The exe needs to be able to call a test module from the external library using only the name of the library and the module. Assume that all libraries will be stored in a fixed location relative to the exe.

Is there a reason the test module needs to be a single file? Can you distribute them as source code with a predefined directory structure instead? People have reported some issues with packed project libraries, so I'd probably avoid them for now unless you're okay playing around in the sandbox a bit.

> The exe will spawn multiple parallel processes that could each call the same module at the same time. The module therefore must be completely reentrant to allow this with no blocking or cross contamination of state data. Each of the parallel processes is allowed to call multiple copies of the same module simultaneously, if the module allows for this type of operation. So, each process must allow this with no blocking or cross contamination of state data.

If absolute non-blocking behavior is required, mark every vi in the parent class and child classes as shared reentrant. As a practical matter you'll probably be safe if you just identify the relatively time consuming methods and mark them shared reentrant. Also, by-ref class implementations may be problematic if you're not careful with your wiring.

Important question #1: Do you expect the parallel processes to make calls to the same source code module, or the same run-time object? The former is accomplished via reentrancy settings; the latter requires creating a lot more structural code.

Important question #2: Who controls the dynamically spawned processes, the exe or the module, and why? The requirement implies the exe has to own the process, but it might be easier using an actor object pattern to let the module control itself.

> The exe needs to pass information to the module in a standard format to control its execution (parameters).

Not a problem. It's just designing an api for the modules.

> The exe must be able to determine if the module is still running (or crashed).

There are several ways to do this. I usually have the module send out status update messages and the exe just keeps track of the module's most recent status. The modules are coded so they always send a MyModule:Exiting message as the last action before exiting. You can also implement a request/response asynchronous message pair, a synchronous message, or implement a method the exe can call that checks the status of a module's internal refnum, such as the input message queue, and make sure the module releases the refnum when it exits.

> The module must be able to monitor a set of Booleans controlled by the exe in order to respond to abort or error flags. The module must be able to pass back messages to the exe while it is still running to update its status. The module must be able to pass back its results to the exe and those results must remain accessible and readable after the module has completed and left memory.

All part of the module API.

> The module must be executable outside of the exe for debugging and development purposes with minimal wrapping.

Explain. Executable apart from the main exe without using the LV dev environment? Or are you just saying you want to be able to test the modules without relying on the executable's code?

> Each library of modules must be packaged into a single file that removes diagrams, type defs, etc.

Oh. Ignore my first question above. Hmm... if this is a hard requirement it sounds like you need either a packed project library or a dll, and to be honest I'm not sure how well either one will work in your situation.

> Each library of modules must include all sub-VIs called by any module in the library and those must be name-spaced to prevent cross linking with similarly named VIs from other modules in other libraries that might be in memory at the same time. This way a common set of source code can be used by all modules but is frozen to the version used at compile time (of the library).

Hmm... I'll have to think on that for a bit...

> Once a module is loaded from disk and called by the exe, a template of it will remain in memory so that future calls will not incur a disk access as long as the exe remains in memory.

Not sure about this one either. I think LV does this automatically. If not you could just load one copy of each module and keep them in an array until the exe exits.

> My first inclination is to make a common ancestor class for all test modules. Each test module would then be a child class of this ancestor. I could then call the module by instantiating an instance of that class. The ancestor could then have must override methods for parsing the parameter inputs and for converting the modules output into a standard format for the exe to read.

That's my take on it too.

> Not sure how to implement the flag monitoring or status updates.

Depends on how you implement the interface between the exe's parallel processes and the modules. If you use a message-based system, the exe sends FlagUpdate messages to the modules and the modules send StatusUpdate messages to the exe. If you stick with the more direct option of just calling the parent class methods, then you need to inject boolean references (dvr) into the module when it is created and periodically check them.
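To make the message-based option a bit more concrete, here is a minimal sketch in Python (not LabVIEW), assuming plain queues stand in for the message transport. The message names `FlagUpdate`, `StatusUpdate`, and `Exiting` come from the discussion above; `TestModule`, `VoltageTest`, `step`, `run_in_thread`, `drain`, and the `Result` message are hypothetical names invented for the example.

```python
import queue
import threading
from abc import ABC, abstractmethod


class TestModule(ABC):
    """Common ancestor; children override the parsing and formatting hooks."""

    def __init__(self, name, status_q):
        self.name = name
        self.inbox = queue.Queue()   # exe -> module (FlagUpdate messages)
        self._status_q = status_q    # module -> exe (status and results)

    @abstractmethod
    def parse_parameters(self, raw): ...

    @abstractmethod
    def step(self, params, i): ...

    @abstractmethod
    def format_result(self, value): ...

    def _aborted(self):
        # Drain pending flag messages without blocking.
        while True:
            try:
                kind, payload = self.inbox.get_nowait()
            except queue.Empty:
                return False
            if kind == "FlagUpdate" and payload == "abort":
                return True

    def run(self, raw, steps):
        try:
            params = self.parse_parameters(raw)
            result = None
            for i in range(steps):
                if self._aborted():
                    self._status_q.put((self.name, "StatusUpdate", "aborted"))
                    break
                result = self.step(params, i)
                self._status_q.put((self.name, "StatusUpdate", f"step {i}"))
            self._status_q.put((self.name, "Result", self.format_result(result)))
        finally:
            # Always sent as the last message, so the exe knows the module stopped.
            self._status_q.put((self.name, "Exiting", None))


class VoltageTest(TestModule):
    """Hypothetical child class used only to exercise the ancestor."""

    def parse_parameters(self, raw):
        return {"gain": float(raw)}

    def step(self, params, i):
        return params["gain"] * i

    def format_result(self, value):
        return {"module": self.name, "value": value}


def run_in_thread(module, raw, steps):
    """Exe side: start a module in its own thread and return the thread."""
    t = threading.Thread(target=module.run, args=(raw, steps))
    t.start()
    return t


def drain(status_q):
    """Exe side: keep each module's most recent status and any results."""
    latest, results = {}, {}
    while True:
        try:
            name, kind, payload = status_q.get_nowait()
        except queue.Empty:
            return latest, results
        if kind == "Result":
            results[name] = payload
        else:
            latest[name] = kind if kind == "Exiting" else payload
```

The results live in the exe's own dictionary, so they stay readable after the module has finished. A module whose last recorded status never becomes `Exiting` is one the exe should treat as suspect.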

-

"Thought" isn't necessarily part of the process. It *can* be, but it doesn't *have* to be. Incidentally, I discovered there's a name for an idea that is very similar to what I'm describing, though I think I take a slightly different approach to what the article describes. It's called motivational hedonism (MH). Also, I don't think MH can be used to predict an individual's behavior given an arbitrary set of circumstances. In principle it could, but I can't even get a firm handle on my own pleasure/pain equation, much less quantify anyone else's. I suspect MH is better applied to analyzing why an action is taken after it has been done. Finally, I believe MH is correct in that it is consistent and can be used to explain any action, but I don't think it is necessarily the only correct explanation. It's just one way of viewing the reasons why an action is taken.

-

(Lots of thinking out loud here... read this as ideas I'm floating, not a viewpoint I'm asserting is correct.) Not consciously, no. Most of the process takes place on the subconscious level. When faced with a decision I am aware of at least some of the factors in my pleasure/pain equation, but most of them don't enter into my thinking. Understandable. From my hedonist point of view since you don't associate any pain (guilt) from not acting, taking action doesn't reduce the pain. The coefficient of pain for the guilt factor in your pleasure/pain equation is zero. Nobody acts unless or until they subjectively evaluate the risk to be low enough. Taking action is a physical manifestation that--for that person--the reward outweighs the risk. The reward isn't necessarily a monetary reward or public recognition, though it may be a factor for some people. These are pleasure increasing rewards. Reducing negative feelings such as guilt--pain reduction--is also a reward. When you remove all the extraneous stuff, the reward is simply a higher score on one's personal pleasure/pain scale. Higher than what? Higher than what it was before? No, higher than the pleasure/pain score of any of the other choices available. I disagree. Since people only act when they subjectively decide the "risk" is low enough, that in and of itself is not enough to determine if an action is cowardly or heroic. Cowardly and heroic are subjective labels we apply to actions we believe we wouldn't have taken had we been in the same situation. That's why people celebrated as heroes often don't think of themselves as heroes or say they're just "doing their job." To them, their action is a natural extension of who they are. There's nothing exceptional about it. When enough people believe an action is heroic, society calls it heroic. At the core, heroes are those whose personal pleasure/pain equation causes them to take action when most of the rest of us wouldn't.
We consciously admire their bravery and lack of self-concern, but what we're really admiring is their pleasure/pain equation. I would say no. Heroism, as commonly used, seems to have some necessary conditions that are not met by contributing to the LabVIEW community. Significant risk of physical injury is one of them. While I'm sure carpal tunnel is quite painful, it doesn't quite meet society's standard.

-

@Yair, I actually thought about that thread when I was writing my post. I looked up the thread but didn't see any references to not wiring the input terminal. That thread focuses on where the terminal should be placed on the block diagram. Any other ideas where it might be? I like to sprinkle my code with debug messages to provide an ordered list of events that occurred during execution. It takes a little more time to set up but it's saved me loads of time figuring out what went wrong, especially when I've got many parallel threads interacting with each other.
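For anyone who wants to see the debug-message idea in a text language, here is a small Python sketch, assuming a single shared log that every parallel loop writes to. `DebugLog`, `note`, and `dump` are hypothetical names, not anything from LabVIEW or from my own code.

```python
import itertools
import threading
import time


class DebugLog:
    """Collects debug messages from many threads in one ordered list."""

    def __init__(self):
        self._lock = threading.Lock()
        self._seq = itertools.count()
        self._entries = []

    def note(self, source, message):
        with self._lock:
            # The sequence number is assigned under the lock, so list order
            # matches the order in which the calls were recorded.
            entry = (next(self._seq), time.monotonic(), source, message)
            self._entries.append(entry)

    def dump(self):
        with self._lock:
            return [f"{seq:04d} {source}: {message}"
                    for seq, _, source, message in self._entries]
```

Each parallel loop calls `note` with its own name, and `dump` gives one interleaved list to read when something goes wrong. The timestamp is stored but not printed here; it is only there in case the spacing between events matters.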

-

Good question. I guess I would say I believe the general perception of hero-ness increases in proportion to the assumed impact of the heroic action. More houses saved means more people affected by the action, hence greater hero-ness. Personally I haven't convinced myself that's a valid way of looking at it, but I think the general perception probably works that way. Here's something else I just thought about... what about the passengers on the 9/11 flight that crashed in PA? By forcing a confrontation they probably prevented the hijackers from crashing into another building and are widely considered heroes. Yet self-preservation was undoubtedly at least part of their motivation for doing it--doing nothing meant certain death. Does their desire for self-preservation make their actions not heroic? Nice to have someone else looking out for me.

-

I would say a heroic action results in an immediate benefit to others. I don't think that's the same as benefitting the "greater good." Consider... Heroic Herb disregards his own safety and runs into a burning building to save the life of Tina the Teacher. Clearly this is a heroic action. The next day Tina the Teacher snaps from the PTSD, turns into Tina the Terrorist, and blows up the elementary school where she teaches, killing herself along with hundreds of children. One can argue Herb's action no longer benefitted the "greater good." Does that make his actions the previous day any less heroic? I haven't read Atlas Shrugged, but I don't see why saving one's own children would be considered selfish while saving a stranger is not. Obviously we are more emotionally tied to our children than to strangers, and we will certainly feel much worse about standing around while our children burn than we would about standing around while a stranger burns. In both cases the act of saving the person removes the guilt of not having acted, so are not both actions selfish? <Philosophical ramblings> For what it's worth, several years ago I adopted a hedonistic viewpoint of human behavior. Not hedonistic as is commonly used--excessively pursuing physical pleasure--but hedonistic in the sense that all decisions are made based on maximizing our pleasure or minimizing our pain. ("Pleasure" and "pain" as I'm using them refer to more than just physical or emotional reactions. They're words I use to cover the larger concepts of "that which we seek" and "that which we avoid.") When faced with a decision, we always choose the option that gives us the maximum immediate return on the pleasure/pain scale.* From this point of view all actions are essentially selfish and there is no such thing as pure altruism. I'm okay with that, especially since (IMO) hedonism does a better job of explaining human behavior than other models.
(*The phrase, "maximum immediate return on the pleasure/pain scale" requires a bit of explanation. Humans have a remarkable ability to anticipate the future and so we commonly make immediate sacrifices in exchange for long term benefits, such as putting a percentage of every paycheck in an emergency savings account. Since we could get more immediate pleasure by spending the money instead of putting it in savings, isn't that a counter-example of "maximizing our immediate return on the pleasure/pain scale?" No, and here's why. Anticipating the future allows us to plan and prepare for it, but it also subjects us to worrying about it if we are not preparing for it. We have reactions now based on future (or possible future) events. The comfort of preparing or being prepared gives us immediate pleasure. The worry from being unprepared gives us immediate pain.) Hedonism explains why, given the exact same set of circumstances, different people will make different decisions. Herb the Hero rushes into the burning building while Carl the Coward stands by watching. Why is that? For Herb, the immediate pain of standing by doing nothing was greater than the immediate pain he felt from the risks of entering the building. What caused his pain? Maybe thinking about people suffering inside. Maybe suppressing his sense of duty to help. Maybe the memory of losing a loved one to a fire. Regardless, he chose the less painful action. Carl had more immediate pain from the idea of entering the building than from doing nothing, so he did nothing. What caused his pain? Maybe he has a fire phobia. Maybe he was severely burned previously and remembers the physical pain from that. Maybe he's afraid of leaving his family without a husband and father. Yet he also chose the less painful action. Is Herb more admirable than Carl? What makes people behave differently while the building burns is that we each apply different factors (and different weights to the factors) to the pleasure/pain equation.
It also explains why some Saturday mornings I go ride my mountain bike while other Saturday mornings I watch Charles in Charge reruns with a bowl of Cocoa Pebbles. My pleasure/pain equation has changed. If you want to influence a person's behavior, figure out the factors and weights they use in their own pleasure/pain equation and address those. (Hedonism also explains why I'm spending time posting this instead of practicing for my CLA exam tomorrow. I get a lot of pleasure out of discussing philosophy, so much so that it overcomes the massive pain of writing. I am a sick, sick man.) </Philosophical ramblings> Can't say, the article didn't give many details. What would have happened if the firefighters hadn't acted as they did? Could the fire have spread to other houses or burned down the neighborhood? Preventing several families from losing their homes due to a spreading fire is hero-worthy in my book. Exposing oneself to dangerous situations due to inadequate training (they were volunteers) or inept command is not.

-

Good question... <Thinking out loud> I don't think so. There are character traits that may make one more prone to perform heroic actions--empathy, selflessness, desire for recognition, etc.--but that doesn't necessarily make them a hero. Society doesn't ordain someone a hero until a heroic action is performed and recognized. In other words, a hero is defined as someone who performed a heroic action. No more, no less. This is a very action-centric view of heroism, but there are many labels people earn only as a result of their actions. A person may have murderous thoughts and desires, but they are not a murderer until they actually kill someone. Psychotic? Probably. Unstable? Possibly. Dangerous? Almost certainly. Murderer? No. I guess the converse question is, is it possible for an heroic action to have been performed by a non-hero?

-

Merged? Like Shaun I assumed the event infrastructure was already based on queues. Since it's pretty straightforward to replicate user event functionality using queues I view events as a higher level abstraction of specialized queues. I'm not sure how you can merge them without giving up some low level control over queues or high level simplicity of the event structure. The prospect of having to create and register a callback vi to handle every fp event doesn't excite me. I like how the event structure simplifies that for me. Personally I think the event implementation is very good with respect to fp events. If we start talking about user events, well... they are not as useful as I had hoped when I originally dug into them several years ago. I know I've mentioned it in other threads and I know I hold a minority opinion, but I don't see any good generalized implementations that take advantage of user events' one-to-many capability while maintaining robust coding practices. On top of that, when a given component receives both queue-based and event-based messages they usually have to be combined somehow so a single loop executes both messages. That's not particularly hard to do, but it does require more code and can become a pain in the neck when it has to be done for every component. (Incidentally, the typical technique of nesting an event structure inside a queue-based message handling case structure isn't, IMO, a particularly robust solution. But I digress...) I agree there is significant functional overlap between queues and user events, but I don't think the APIs should be merged.
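Here is roughly what I mean by replicating one-to-many delivery with queues, sketched in Python since I can't paste a block diagram. It assumes each listener owns an ordinary queue and the broadcaster just copies each message into all of them. `Broadcaster`, `register`, `fire`, and `handle_messages` are hypothetical names for the example; this is not how LabVIEW implements user events internally.

```python
import queue


class Broadcaster:
    """One-to-many delivery built from plain queues (one queue per listener)."""

    def __init__(self):
        self._listeners = []

    def register(self, q=None):
        # A component may pass in its existing input queue, so broadcast and
        # direct messages arrive in the same place.
        q = q if q is not None else queue.Queue()
        self._listeners.append(q)
        return q

    def unregister(self, q):
        self._listeners.remove(q)

    def fire(self, name, data=None):
        for q in self._listeners:
            q.put((name, data))


def handle_messages(inbox, handlers):
    """Single loop that executes every message, whatever its origin."""
    handled = []
    while True:
        name, data = inbox.get()
        if name == "Exit":
            return handled
        handled.append(handlers[name](data))
```

Because a component can register its own input queue, there is nothing to combine afterwards: one loop dequeues both kinds of message. The listener list is not protected by a lock, so this sketch assumes registration happens before the loops start running.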