Posts posted by jgcode

-

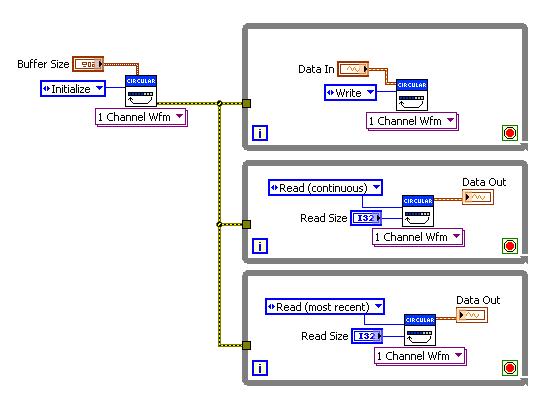

My question is: are these 3 parts OK? Do I need to wire the same data into all 3 parts? The author omits some wires in his images, so I don't know whether I have to wire these components or not.

I agree, the API is not the best - having wrappers around each method (init, read, write) that only exposed the required inputs would be nicer, but you could always do that.

Anyways, to answer your question, you only need to supply the information required for each method e.g. if you are reading from the buffer you do not have to input data.
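LabVIEW code is graphical, so here is a rough Python analogy of the wrapper idea. It assumes the buffer API is a single entry point with a method selector. `buffer_core`, `buffer_init`, `buffer_write` and `buffer_read` are hypothetical names and are not the VIs from the NI example.

```python
from enum import Enum
from typing import List, Optional


class Method(Enum):
    INIT = "init"
    READ = "read"
    WRITE = "write"


# Hypothetical stand-in for the single "do everything" API: one entry point,
# one method selector, and every input exposed whether or not it is used.
_store: List[float] = []


def buffer_core(method: Method, data: Optional[List[float]] = None) -> List[float]:
    if method is Method.INIT:
        _store.clear()
    elif method is Method.WRITE:
        _store.extend(data or [])  # `data` only matters for WRITE
    return list(_store)  # every call returns a copy of the current contents


# Thin wrappers: each one exposes only the inputs its method needs.
def buffer_init() -> None:
    buffer_core(Method.INIT)


def buffer_write(data: List[float]) -> None:
    buffer_core(Method.WRITE, data)


def buffer_read() -> List[float]:
    return buffer_core(Method.READ)  # reading needs no data input
```

Calling `buffer_init()`, then `buffer_write([1.0, 2.5])`, then `buffer_read()` returns `[1.0, 2.5]`; the caller of `buffer_read` never sees a data input at all.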

This image (ref: ni.com) sums up an example best:

-

I agree with Paul. I've used non-default object constants in the past and in the end I thought they were more trouble than they were worth.

I haven't used them in coding, but I know you can do it. I can imagine it would be a nightmare to track down and edit them in a large app.

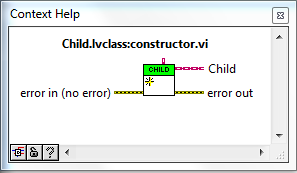

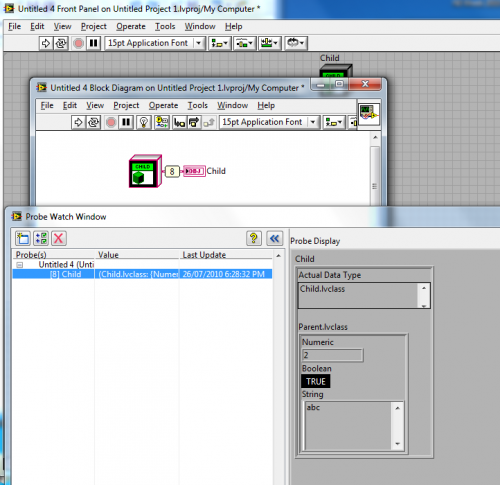

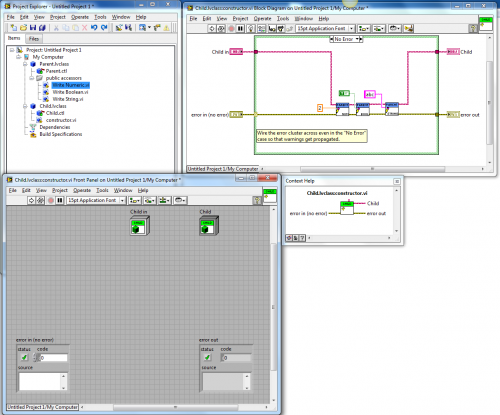

I think it is preferable to initialize the values of the object explicitly (not technically a constructor).

Tomi showed me the above here, and I have been using it ever since.

It does not need a Class input (that input is optional, but it can be used by child classes that need to call it).

The only drawback I can think of is that if you have a plugin architecture and need to call this, you will need to wrap it in a dynamic dispatch (DD) VI anyway.

Is it not a constructor? Well maybe not a real one, but close enough in a LabVIEW context?

Please discuss - does anyone do anything similar, different etc...?
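LabVIEW classes have no real constructors, so the nearest text-language analogy is a static (class-level) Init method. The Python sketch below is only an illustration: `Instrument`, `Scope`, `init` and `init_scope` are hypothetical names, `__init__` stands in for the class's default private data values, and the optional `obj` parameter plays the role of the optional Class input that a child class can wire in.

```python
from typing import Optional


class Instrument:
    def __init__(self) -> None:
        # Default values, analogous to the class's default private data.
        self.name = ""
        self.timeout_ms = 0

    @classmethod
    def init(cls, name: str, timeout_ms: int,
             obj: Optional["Instrument"] = None) -> "Instrument":
        """Static 'Init' method that sets the object's values explicitly.

        `obj` is optional: normal callers omit it and get a fresh object,
        while a child class can pass in its own instance so the parent's
        data is initialised on it.
        """
        if obj is None:
            obj = cls()
        obj.name = name
        obj.timeout_ms = timeout_ms
        return obj


class Scope(Instrument):
    def __init__(self) -> None:
        super().__init__()
        self.channels = 0

    @classmethod
    def init_scope(cls, name: str, timeout_ms: int, channels: int) -> "Scope":
        scope = cls()
        # Reuse the parent's Init by wiring our instance into the optional input.
        Instrument.init(name, timeout_ms, scope)
        scope.channels = channels
        return scope
```

Because `init` is bound statically to `Instrument`, a plugin architecture that needs to pick the right Init at run time would still need a dynamically dispatched wrapper, which matches the caveat above.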

-

I also found this article on NI's site, http://zone.ni.com/d...a/tut/p/id/7188, but it's too hard for me to understand. Does anyone know a simpler way?

Hi Leonardo

That's the link I would have posted too

It doesn't get much easier than that: the code is already written for you.

If you are still stuck, maybe it would help to post some specific questions about the issues you are having, and we can try to help you out with those.

-

Can anyone recommend any good sports bars near the Convention Center (NI Week)?

Walking or cab distance.

I am thinking big-screen TVs, and somewhere that shows Versus.

???

Cheers

-JG

-

Known Issues:

When the plugin is cached, the Getting Started Window (GSW) will not appear, as a VI is still 'open'. I currently do not have a workaround for this, other than using (Ctrl + Shift + X) on a call to the plugin to uncache it, after which the GSW will appear.

I managed to fix this issue now, thanks to Christina's post.

My experiment of caching a Quick Drop Plugin is now complete!

-

Version History (Changelist):

3.3-1 2010 07 26

- Fixed (): Getting Started Window now shows when plugin cached in the background and no other VIs are opened

- Added (): Reset to Original Theme Color button

- Added (): Reset All Settings button

-

The Getting Started Window will not reappear if there are any open or hidden LabVIEW windows. If your background-running VI never opens its front panel, or closes it using the FP.Close method, it should not prevent the return of the GSW.

If your VI closes its window and the GSW is still failing to appear, you may have found a bug where LabVIEW is not checking the window list at a time that it should. If that's the case, PM me and we'll investigate.

Hi Christina,

Thanks for your reply.

It is good to know the exact criteria, as that explains the issue here:

My process does have a GUI so the FP can be opened, therefore I use the FP.Hidden state to hide the window in-between calls.

(I was thinking it may be faster to load and I was also experiencing some issues using FP.Close and memory that I have since fixed)

Anyways, I have changed this around so that FP.Close is called each time, and now the GSW pops up with the process still running in the background!

Thanks heaps!!

-

You could explore some of the application properties; if I'm not mistaken, there's a 'private' or 'system' property that should make it invisible to the GSW.

Or you could launch the GSW yourself. There is a menu launch token for the GSW that brings it to the front.

Try to get Christina into this discussion, since she wrote a lot of code for the GSW.

Ton

Thanks Ton,

I don't want to manage showing the GSW etc.; I am hoping it can all be handled by LabVIEW by flagging that VI to be ignored, or something similar.

I will check out the app props, in the meantime I have contacted Christina.

Cheers

-JG

-

Howdy!

I have run into the following issue when creating a tool for LabVIEW that continually runs in the background (daemon/process VI):

By default, LabVIEW shows the GSW when all other files (project, VIs etc...) are closed.

However with the process running in the background, it does not show the GSW!

Has anyone run into this issue before?

Is there a way to set LabVIEW to 'ignore' my Tool and get the GSW to show normally?

The VI is running in the NI.LV.Editor application instance (the Tool is launched by Quick Drop).

Cheers

-JG

-

Thanks for the info Darren. Since we have almost twice as much time to do the coding, are we expected to produce a more complete application?

I asked the same thing - check out Darren's blog comments for his response.

-

I was counting on the written portion helping me overcome my generally slow coding.

Me too, I am worried I won't be able to get it all down on the BD in that much time!

-

I am coming across an error when my compiled program tries to create a new file on Windows Vista and 7. I think this is due to the new security features in newer Windows versions. Is there a way to get around it as a standard user instead of an administrator? Is there an unprotected directory where I can create a new file and write to it?

Hi Tim

Check out this topic here too.

In it Mads has posted an external link that explains your options.

Cheers

-JG

-

Thanks for posting this, JG. I have written a new post on my blog regarding the changes to the CLA exam.

Well, I am off to read it then.

-

In case you didn't know....

The exam format for the CLA has now changed from 40% written and 60% coding to 100% coding.

The exam is still four hours long, and the new format also requires you to track requirements.

-

(I decided to make this its own topic in case anyone wanted to search on it later etc...)

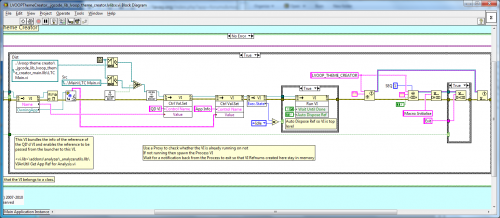

With the help of Darren (well, he did most of the work really) I have been able to cache my Quick Drop plugin.

The reason I wanted to cache the plugin was to decrease the wait to load the plugin each time.

This wait was just long enough to be annoying (and hey, to be honest, I am just not that patient to start with).

The wait is due to the plugin containing a UI, libraries, classes etc.

Here is some side chatter in the Community (darkside) about caching a plugin if you want to check it out.

The plugin uses VIs from the VI Analyser Toolkit to maintain QD's VI reference between LabVIEW application instances (as the reference was becoming corrupt).

There is also some handshaking to keep the launcher in memory, to stop the spawned VI from going out of memory or failing to load the UI (another issue I had). This was the easiest form of handshaking.

So if you are interested please check out the latest version:

Known Issues:

When the plugin is cached, the Getting Started Window (GSW) will not appear, as a VI is still 'open'. I currently do not have a workaround for this, other than using (Ctrl + Shift + X) on a call to the plugin to uncache it, after which the GSW will appear.

If you have any feedback please post.

Check out the increase in speed; quick enough, I say:

[Embedded screencast "Caching a Plugin" (Flash video): http://content.screencast.com/users/jgcode/folders/LAVA%20CR/media/f9b0763c-d66b-4619-9732-ea809ddf773f/Caching%20a%20Plugin.swf]

-

New Version

Version History (Changelist):

3.2-1 2010 07 20

- Fixed (): Caching the plugin caused an error whereby the QD'd VI's reference became corrupted between application instances. This was fixed using VI Analyser functions (mad props to Darren, who sorted this issue out for me).

Known Issues:

When the plugin is cached, the Getting Started Window (GSW) will not appear, as a VI is still 'open'. I currently do not have a workaround for this, other than using (Ctrl + Shift + X) on a call to the plugin to uncache it, after which the GSW will appear.

See this topic for more info on caching.

-

Hate to raise an old post...

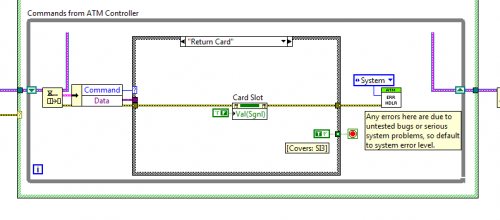

NI even used a Val(Sgnl) PN to cover a requirement in their CLA solution example!

-

It'll take me some time to absorb this post, but I do have a few quick comments.

Please post when you have time! I love reading your stuff.

"You" was intended as a general term to indicate the QSM user, not you--Jon--specifically. I did a very poor job of making that clear.

Sorry, that's my fault; I will try not to take things so literally.

Wrt the internet - that is the one thing I still have trouble grasping.

This is one of the main points where I've not quite been understanding where you've been coming from.

Gees, sometimes I don't even know where I am coming from!

The way I've seen the QSM used, and my main point of contention with it, is using it as a top-level VI within a module and expecting it to provide some sort of structure to the module. It sounds like you've got some other architecture in mind already and are just using the QSM as a way to implement the architecture?

I have found that this architecture is great (there's that word again) for the backbone of my screens (dialogs, data entry etc.) in most of my apps.

I find that using LVOOP I can encapsulate so much, that using a QSM as a Top Level VI can work fine in a small-to-medium size app.

I have found it's pretty clean when I am just (as Justin puts it) sequencing things.

But I have also seen its limitations, and how it can be implemented badly to create garbled code (with the points you raised thus far), and I try to avoid those.

Also, programmers have been creating Large Applications for a long time, before LVOOP was around, so I trust the veterans, and am confident that these design patterns are quite robust and have been used extensively.

On the flipside, I am, as mentioned above, very interested in seeing the new breed of LabVIEW design patterns, most likely implemented in LVOOP (as it really is so powerful), that the community will develop / already have.

I am excited to see these, and there seems to be a big push for them in the community, so hopefully they will start getting fleshed out, shared, standardised and supported real soon.

-

It would have been cool if she belted out a tune at the end.

Maybe they should have got these guys to do it?

-

How do you apply this change? Probably by creating a UserType {Beginner | Advanced} enum and adding it to a supercluster of data that is carried through your app.

Just to be clear, I don't agree with the above programming methodology. To be honest, that's what I used to do when I started using LabVIEW, but I consider it a very bad way to program now (as I am sure I have mentioned before). I guess it is an assumption that gets made when talking about using a QSM etc.

Dialog boxes are shown or not shown during execution based on the enum. Kind of a pain, but not insurmountable. Probably the kind of change you expect to do as a developer.

I may have dialog boxes shown based on the state of possibly multiple classes within an application. In this case, and for a simple example, I guess it would be based on the configuration of the application and an event occurring rather than on an enum, e.g. attendedMode = TRUE AND exceptionOccurred = TRUE.

Q: What do all these requests have in common?

A: None of them affect the test execution logic in any way, yet will likely require changes to your test execution code in order to implement. I have a hard time considering an implementation "great" if it includes these limitations.

But you still need an architecture that sits behind that dialog box - the engine/process that runs it.

And whatever implementation is used, I agree that its function should be solely to present information to the user and possibly allow for user input, not to affect execution logic.

Using your presented example:

If the system is running in "Normal Mode" and an exception occurs that you want to present to the user, then I imagine an implementation could be as follows:

1. Pass Object as an input for the Dialog box

2. Run method to format information to string (possibly do this before passing to Dialog)

3. Get Exception string from Object

4. Display String in Dialog Box

5. Allow the user to enter a Comment in a "Comments Box"

6. If User presses Ok, Set Comment information to Object

7. If User presses Cancel, Set a predefined, default Comment (to indicate it was cancelled by the User) / leave blank etc...

8. Pass out Object that now contains the Exception Information and now a user Comment
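A minimal Python sketch of steps 1 to 8, assuming the exception data lives in a simple class. `ExceptionInfo`, `show_exception_dialog` and `CANCELLED_COMMENT` are hypothetical names, and the user's button press and typed comment are passed in as arguments rather than read from a real front panel.

```python
from dataclasses import dataclass

CANCELLED_COMMENT = "<cancelled by user>"  # hypothetical predefined default comment


@dataclass
class ExceptionInfo:
    code: int
    message: str
    comment: str = ""

    def format(self) -> str:
        # Step 2: format the exception data to a string.
        return f"Error {self.code}: {self.message}"

    def get_exception_string(self) -> str:
        # Step 3: Get method used by the dialog.
        return self.format()

    def set_comment(self, comment: str) -> None:
        # Set method used for user input.
        self.comment = comment


def show_exception_dialog(info: ExceptionInfo, pressed_ok: bool,
                          typed_comment: str) -> ExceptionInfo:
    # Step 1: the object is passed in.
    text = info.get_exception_string()  # Steps 2-3
    print(text)  # Step 4: stand-in for displaying the string in the dialog
    # Step 5: `typed_comment` stands in for the Comments Box, `pressed_ok` for the buttons.
    if pressed_ok:
        info.set_comment(typed_comment)  # Step 6
    else:
        info.set_comment(CANCELLED_COMMENT)  # Step 7
    return info  # Step 8: the object now carries the exception info and a comment
```

The dialog only touches the object through its Get and Set methods, so nothing about how the data is formatted or stored lives in the dialog itself.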

Depending on other state data, the Application logic may decide to:

1. Discard this data

2. Log this data to a network share

3. Log this data locally

Now, if the Application was configured with attendedMode = FALSE, the Application would decide not to show the dialog box in the first place. It would instead run methods to format the Exception data to a string and maybe Set a default Comment to indicate it was not attended etc. But the Application would still be using the same Class methods called by the dialog box, so that functionality is encapsulated and reusable.
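A minimal Python sketch of that application-level decision, assuming the same kind of exception class as before. `Disposition`, `handle_exception`, `UNATTENDED_COMMENT` and the two log lists are hypothetical stand-ins (plain Python lists in place of a network share and a local log file), and the dialog is passed in as a callable so the sketch can run without a UI.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, List


class Disposition(Enum):
    DISCARD = auto()
    LOG_NETWORK = auto()
    LOG_LOCAL = auto()


@dataclass
class ExceptionInfo:
    code: int
    message: str
    comment: str = ""

    def format(self) -> str:
        return f"Error {self.code}: {self.message}"

    def set_comment(self, comment: str) -> None:
        self.comment = comment


UNATTENDED_COMMENT = "<not attended>"  # hypothetical default comment


def handle_exception(
    info: ExceptionInfo,
    attended_mode: bool,
    show_dialog: Callable[[ExceptionInfo], ExceptionInfo],
    disposition: Disposition,
    network_log: List[str],
    local_log: List[str],
) -> None:
    if attended_mode:
        # The dialog only uses the object's Get/Set methods.
        info = show_dialog(info)
    else:
        # No dialog: the application calls the same Set method itself.
        info.set_comment(UNATTENDED_COMMENT)

    record = f"{info.format()} | comment: {info.comment}"
    # What happens to the data is decided here, independently of the dialog.
    if disposition is Disposition.LOG_NETWORK:
        network_log.append(record)  # stand-in for a network share
    elif disposition is Disposition.LOG_LOCAL:
        local_log.append(record)  # stand-in for a local log file
    # Disposition.DISCARD: the record is simply dropped.
```

Swapping the standard dialog for a high-contrast one only changes the `show_dialog` argument; the decision logic and the class methods stay the same.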

Therefore, with all functionality encapsulated, it has nothing to do with the Dialog or its implementation. It's separated.

Put simply, the Dialog should call Get methods to access Object data and Set methods for User input etc...

So all the above options have absolutely nothing to do with how the data is displayed to the user.

And the architecture of the Dialog used (to display the data to the user) has nothing to do with the Application making the decision to:

1. Show a standard dialog box

2. Show a high contrast dialog box OR

3. Do not show a dialog box.

4. Other (expanded for more functionality)

Now I am sure you can implement the Application Logic using advanced design patterns that incorporate LVOOP - and that is what I am really interested in (and what the community is calling for at present).

But I still consider the QSM an important building block of my applications. For example, while implementing the Dialog Box you get a new requirement (for want of a better example) that says "the User Comment must be at least 20 characters long" (as management think this will make the attending user less likely to just press "a" <enter> and carry on).

Therefore when the Dialog is open the User types in a Comment that is 10 Characters long.

Your dialog (e.g. on a Comment Value Change Event) would then:

1. Check the entry

2. Decide whether it was valid (>= 20 CHARS)

3. If valid enable Ok Button

4. If not valid display some text (contextual help) on screen that is a message saying why it failed

In order for the Dialog Box to do either of the above, one way would be for it to queue a message to itself, either to enable the Ok button OR to display a message, based on the result of the validity check of the Comment.

Therefore, using a QSM would be a great way to do this IMO, and it would be an easy way to sequence these methods/commands/states.
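To make the "queue a message to itself" idea concrete, here is a toy single-loop queued state machine in Python. It is a simplified analogy, not the JKI template: states are plain strings on a `collections.deque`, and `CommentDialog`, `MIN_COMMENT_LENGTH` and the state names are hypothetical.

```python
from collections import deque
from typing import Deque

MIN_COMMENT_LENGTH = 20  # the management requirement from the example


class CommentDialog:
    """Toy queued state machine: one loop, one queue of state names."""

    def __init__(self) -> None:
        self.queue: Deque[str] = deque()
        self.comment = ""
        self.ok_enabled = False
        self.help_text = ""

    def on_comment_value_change(self, new_value: str) -> None:
        # Event case: store the new value, then queue a state to itself.
        self.comment = new_value
        self.queue.append("Validate Comment")
        self.run()

    def run(self) -> None:
        while self.queue:
            state = self.queue.popleft()
            if state == "Validate Comment":
                valid = len(self.comment) >= MIN_COMMENT_LENGTH
                # The result of the check decides which state runs next.
                self.queue.append("Enable OK" if valid else "Show Help")
            elif state == "Enable OK":
                self.ok_enabled = True
                self.help_text = ""
            elif state == "Show Help":
                self.ok_enabled = False
                self.help_text = (f"Comment must be at least {MIN_COMMENT_LENGTH} "
                                  f"characters ({len(self.comment)} entered).")
```

A 10-character comment leaves the OK button disabled and sets the contextual help text; a 20-character comment enables OK and clears the help text. All dialog state lives in one object, which is the convenience the single-loop design buys.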

And I also see no difference between using a QSM and splitting this out into two loops in the same VI, whereby a Producer (User Event loop) sends a message to the Consumer (Working Loop). Whilst this may have the advantage of a simpler design, it is more work to implement, and it has limitations in sharing statefulness between the two loops (as they run separately). The JKI QSM implementation can be considered a Producer/Consumer combined in a single loop.
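For comparison, a rough Python sketch of the two-loop alternative, with Python threads standing in for parallel LabVIEW loops and a `queue.Queue` carrying the messages. `producer`, `consumer`, `run_dialog` and the `STOP` sentinel are hypothetical names. The validation state lives only in the consumer loop, so the producer cannot read it without extra sharing, which is the limitation mentioned above.

```python
import queue
import threading
from typing import List

MIN_COMMENT_LENGTH = 20
STOP = None  # sentinel message telling the consumer loop to exit


def producer(events: List[str], q: queue.Queue) -> None:
    # Event loop: turns UI events into messages and keeps no dialog state.
    for comment in events:
        q.put(("Validate Comment", comment))
    q.put(STOP)


def consumer(q: queue.Queue, results: List[bool]) -> None:
    # Working loop: owns the dialog state; the producer cannot see it directly.
    ok_enabled = False
    while True:
        msg = q.get()
        if msg is STOP:
            break
        state, comment = msg
        if state == "Validate Comment":
            ok_enabled = len(comment) >= MIN_COMMENT_LENGTH
        results.append(ok_enabled)


def run_dialog(events: List[str]) -> List[bool]:
    q: queue.Queue = queue.Queue()
    results: List[bool] = []
    worker = threading.Thread(target=consumer, args=(q, results))
    worker.start()
    producer(events, q)
    worker.join()  # results are only read after the consumer has finished
    return results
```

The behaviour matches the single-loop version, but the state had to be split across two loops and an explicit message type and stop sentinel were needed.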

Additionally, as this UI functionality is encapsulated within the QSM, it can be broken down further if required, based on changing requirements etc.

It can also be reused throughout an application (depending on whether it runs in parallel, but considering a standard Dialog), just like any other subVI/method etc.

So maybe in order to implement the above:

1. I start by creating the Dialog using a QSM without the need for any SubVIs, Typedefs or additional classes, as it is so simple and it's quick. The UI works and I don't need to spend that much time on it.

2. Changes occur to requirements and I refactor to make it tighter, using subVIs for reuse, typedef etc..

3. More expanding features means that I need to start using classes or possibly x-controls to encapsulate UI functionality etc...

4. A new requirement means I really need to take it up a notch and do something advanced, e.g. a skinnable UI for Standard and High Contrast views that would need to share an engine/process for functionality reuse etc. Now this may be implemented in a totally different way, but one way could be using multiple QSMs, whereby the original QSM gets stripped of its FP objects and becomes the engine/process in a UI Class that would now have two UIs (both implemented as QSMs).

That's the thing I like: the flexibility of it all when I use the JKI QSM.

If I had started with option 4 when I really only needed option 1 (and, for example, it turns out the application only ever needed option 1), then IMO I would have wasted a lot of time (the customer's and mine) implementing something I didn't need.

I am also not bottlenecked in my approach, as I can refactor the implementation at any time to account for requirement changes.

-


I got mine yesterday and you're right, it looks awesome! Even better than last year's maroon (although I'm a New South Welshman, so anything's better than maroon...)

HA! I'll keep that in mind. I have visions of NSWmen charging like a bull at anything red or maroon.

(I am from the West Coast so no dramas there)

-

....buy one of the Austin Utilikilts....

The Workman skirt would be handy for going onsite with tools.

LVOOP constant with non-default parent values? (in Object-Oriented Programming)

(Just to clarify: the above example uses static connections, with the Class input as optional.)