Posts: 2,397

Days Won: 66

Posts posted by jgcode

-

QUOTE (jdunham @ Oct 17 2008, 02:30 AM)

Even if the min-max is performed on a subarray, you need to check the array going into everything. If you run jgcode's fine VI, I think the caller will often have to make a copy of the entire source array, which you can detect by viewing the buffer allocations. If you took that VI and wired the array in and passed it back out, and did not fork the wire in the caller, you can probably avoid any array copies. Notice I am hedging here because it's hard to find clear documentation on this complex subject.

Would running the above VI and forking data to the subVI in an inplaceness structure guarantee that a copy will not be made?

-

QUOTE (km4hr @ Oct 16 2008, 09:26 PM)

How is this done? Where do I go to find out? I looked around a bit and the best I can tell this requires optional (i.e. expensive) software. Is that correct?

The Application Builder is included in the Professional Development System and the Developer Suite; otherwise it is an add-on.

Log onto ni.com for prices in your area.

-

QUOTE (km4hr @ Oct 16 2008, 11:44 AM)

Aspiring LabVIEW programmer wants to know how to turn a completed VI into an application that can be run by others without leaving it wide open to modification. Is this possible? Is there an "execute-only" mode?

km4hr

If you turn the VIs into an application (i.e. build an executable), users won't be able to get at the code.

-

QUOTE (marp84 @ Oct 16 2008, 08:45 AM)

I have LabVIEW 8.0 Professional Development System. Can I build the notch filter in this software, and if so, how do I build it? Do you know what the lower and higher cut-off frequencies of this filter should be to eliminate the noise from an ECG signal?

Thanks in advance

There are filter VIs located in the Signal Processing>>Filters sub-palette that may help you.

I have also written custom ones easily enough before (e.g. a 4th-order dual-pass Butterworth).
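The post mentions a dual-pass Butterworth; as a smaller, swapped-in illustration of the same dual-pass idea, here is a minimal Python sketch of a second-order IIR notch (coefficients from the widely used RBJ "Audio EQ Cookbook" formulas) run forwards and then backwards, so the phase shifts of the two passes cancel. The function names, the 50 Hz centre frequency, the 500 Hz sample rate and Q = 5 are illustrative assumptions, not values recommended for ECG work, and the record edges are not padded, so the first and last few hundred samples contain start-up transients.

```python
import math


def notch_coefficients(f0, fs, q):
    """Second-order IIR notch (RBJ cookbook form), normalised so that a[0] == 1."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = [1.0 / a0, -2.0 * cos_w0 / a0, 1.0 / a0]  # zeros sit exactly on f0
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a


def biquad(b, a, x):
    """Direct Form I: y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def dual_pass(b, a, x):
    """Filter forwards, then filter the reversed result, so the phase shifts cancel."""
    forward = biquad(b, a, list(x))
    backward = biquad(b, a, forward[::-1])
    return backward[::-1]
```

Usage: `b, a = notch_coefficients(50.0, 500.0, 5.0)` followed by `filtered = dual_pass(b, a, samples)`. Mains hum is 50 Hz in some regions and 60 Hz in others, which is why the centre frequency is a parameter rather than a constant.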

Your ECG question is one for a domain expert, not a programmer.

But you may be able to do some regression testing to see which filter cutoff(s) give you the closest signal representation with adequate noise removal.
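One way to read "regression testing" here is to score candidate cutoffs against a test signal whose clean version is known. The sketch below assumes a synthetic stand-in (a 1 Hz sine plus 40 Hz "noise", not real ECG data), a first-order RC low-pass run dual-pass, and RMS error over the middle of the record as the score; all function names, frequencies and the candidate list are hypothetical.

```python
import math


def lowpass_dual_pass(x, fc, fs):
    """First-order RC low-pass, run forwards then backwards (zero phase)."""
    dt = 1.0 / fs
    rc = 1.0 / (2.0 * math.pi * fc)
    alpha = dt / (rc + dt)

    def one_pass(samples):
        y, out = samples[0], []  # start from the first sample to limit the transient
        for s in samples:
            y += alpha * (s - y)
            out.append(y)
        return out

    return one_pass(one_pass(list(x))[::-1])[::-1]


def rms_error(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))


def best_cutoff(clean, noisy, fs, candidates, margin):
    """Return (cutoff, score) with the lowest RMS error against the clean reference,
    ignoring `margin` samples at each end where edge transients live."""
    core = slice(margin, len(clean) - margin)
    scores = []
    for fc in candidates:
        filtered = lowpass_dual_pass(noisy, fc, fs)
        scores.append((rms_error(filtered[core], clean[core]), fc))
    score, fc = min(scores)
    return fc, score
```

With real recordings the clean reference is rarely available, so the score would have to come from a simulated or annotated signal, and the domain expert still decides what "adequate" noise removal means.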

-

QUOTE (Peter Laskey @ Oct 16 2008, 06:59 AM)

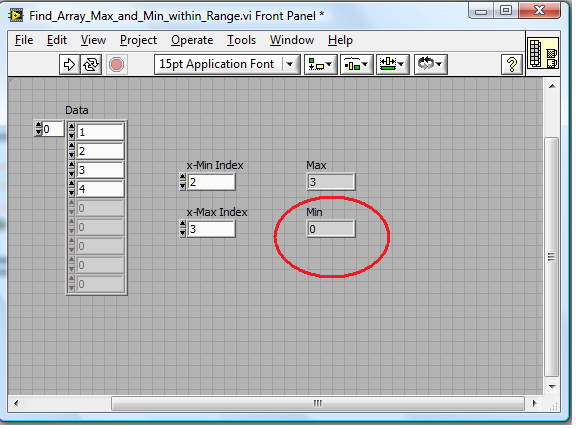

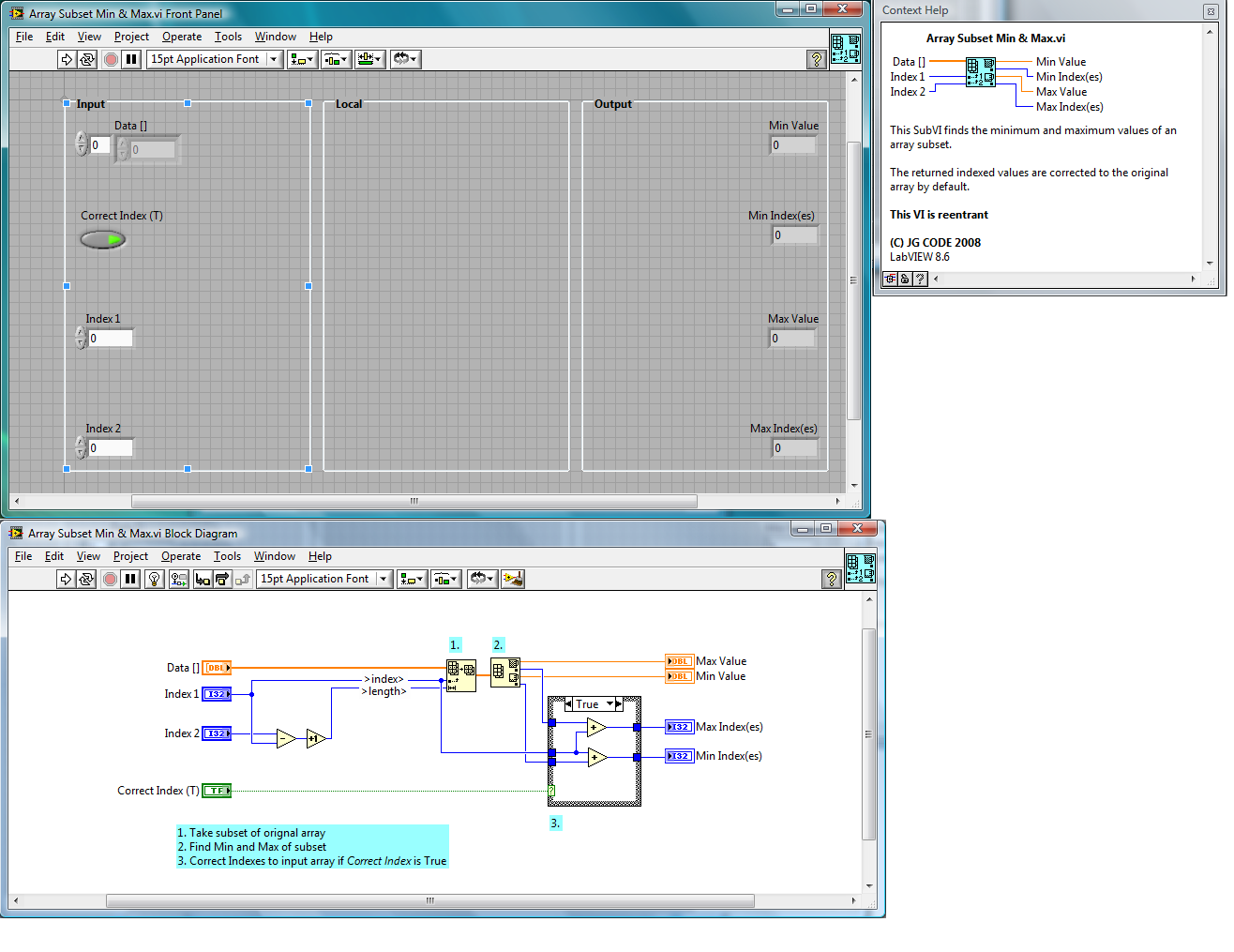

Attached is the SubVI that I wrote to iterate through the values of the array (ignoring values outside the start and end x index values) and storing the maximum and minimum values. The VI uses minimal buffer allocations so it is probably not too hard on the memory manager (versus array subset, array index, and delete from array). The question is, is there a faster way to accomplish this? This is provided you can inexpensively access your graph data from a buffer.

Your VI did not seem to return the correct minimum value?

This VI may help:

Download File:post-10325-1224113464.vi

Coded In LabVIEW 8.6
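For comparison with the attached VI, this is a Python sketch of the same single-pass idea: walk the indices from start to end inclusive, keeping a running minimum and maximum, without building a sub-array. Seeding both running values from the first element in range (rather than from a constant such as 0) avoids one common way such a loop reports the wrong minimum. The function name and the inclusive-index convention are assumptions, and the sketch says nothing about LabVIEW's buffer allocations.

```python
from typing import Sequence, Tuple


def min_max_in_range(data: Sequence[float], start: int, end: int) -> Tuple[float, float]:
    """Return (minimum, maximum) of data[start..end] inclusive, scanning by index
    so that no sub-array copy is made."""
    if not 0 <= start <= end < len(data):
        raise ValueError("need 0 <= start <= end < len(data)")
    lo = hi = data[start]  # seed from real data, not from 0
    for i in range(start + 1, end + 1):
        v = data[i]
        if v < lo:
            lo = v
        elif v > hi:  # lo <= hi always, so a new minimum can never be a new maximum
            hi = v
    return lo, hi
```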

-

QUOTE (LV_FPGA_SE @ Oct 10 2008, 12:08 PM)

How much I/O do you need/want in your project? You could do without any I/O and write a program/application that only involves software. I guess this may be acceptable for a Computer Systems major. You could think about a distributed networked application with pieces running on different computers. Think along the lines of a networked multiplayer game.

A simulated lighting system would be cool?

No need for hardware - just use multiple PCs to simulate hardware.

-

QUOTE (MJE @ Oct 7 2008, 11:28 PM)

Keep in mind that even under UAC in Vista, you will be able to get at config files located in your program directory; however, the file will be mirrored to a user-specific directory (I forget where, too time pressed to look), so you won't actually be writing to the file you think you are. This will be completely transparent to your program, and not noticeable in a one-user environment, but can bring up odd results if you expect changes made to be global for all users, since you're actually reading/writing a user-specific copy of the config file. I think one of the above linked documents went over that; it's a backwards compatibility thing MS implemented to keep a lot of older apps from blowing up on Vista.

Hi MJE,

Cheers, I did read about the file location transparency.

However, in Vista, the LabVIEW Read/Write File functions return an error if you try to read/write a file you do not have admin access to.

So the transparency only comes into play after you enable admin access.

Other programs don't seem to have this problem (i.e. admin rights are not selected), so there must be a way around this?

-

QUOTE (jdunham @ Oct 7 2008, 02:22 AM)

Well, we learned the hard way, and to be honest, we're still doing it the wrong way until our next version, which has to support Vista. Well, what has worked for us is that the configuration INI file is not installed by the installer (actually we do install one to fix the fonts, and that has to be in the program files folder, and it never changes).

For the real INI file with our application settings, the one which will go in the user directory, the software checks for it on every startup and creates it if not found (you may want to prompt the user to set the settings at that point too). I suppose your uninstaller ought to remove that file, which would be the polite thing to do, but I haven't tried that.
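A minimal Python sketch of the check-on-startup approach described above, assuming a per-user folder taken from the `LOCALAPPDATA` environment variable on Windows (falling back to a dot-folder in the home directory elsewhere). The `settings.ini` file name, the section and key names, and the default values are hypothetical.

```python
import configparser
import os
from pathlib import Path

# Hypothetical defaults written the first time the application runs.
DEFAULTS = {"settings": {"sample_rate": "1000", "log_folder": ""}}


def user_config_path(app_name: str) -> Path:
    """Per-user location: %LOCALAPPDATA%\\<app_name> if set, else ~/.<app_name>."""
    base = os.environ.get("LOCALAPPDATA")
    folder = Path(base) / app_name if base else Path.home() / f".{app_name}"
    return folder / "settings.ini"


def load_or_create_config(path: Path) -> configparser.ConfigParser:
    """Read the INI file, creating it from DEFAULTS if it does not exist yet."""
    config = configparser.ConfigParser()
    if path.exists():
        config.read(path)
    else:
        path.parent.mkdir(parents=True, exist_ok=True)
        config.read_dict(DEFAULTS)
        with path.open("w") as f:
            config.write(f)
    return config
```

Because the file is created by the application rather than by the installer, an upgrade that replaces the program folder leaves it alone; removing it on uninstall would still be a separate step.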

So can your LabVIEW.exe still access the config INI file in the program folder, or is this only for XP?

If so, what are your plans for Vista - everything to the Users folder?

-

QUOTE (jdunham @ Oct 6 2008, 01:47 PM)

I also wanted to point out that there is a big benefit to getting your config data out of c:\Program Files\.... If you want to deliver an upgrade to your program, you can let the installer blow away everything in that folder without losing any user settings or configuration state.

Great! Thanks for the tip jdunham :worship: - so I guess I should start doing that for XP too.

Thinking out loud, however: if the installer installs the data files (whether in Program Files or elsewhere), wouldn't the installer know to remove them as well on an uninstall?

Or is there a built-in safeguard in the installer that does not allow such behaviour?

Does anyone know?

If not....time for some testing methinks....

-

QUOTE (LV_FPGA_SE @ Oct 4 2008, 02:41 AM)

You can only have one RT Startup EXE, which is called when the cRIO system boots up. But you can call additional VIs from the EXE dynamically when it is running. So you can have two or more VIs running on a cRIO at the same time. Once a second VI is started, the main VI or RT EXE can stop running and the second VI will continue to run on its own. The second VI of course can start additional VIs dynamically as well. To do this, become familiar with how to call VIs dynamically using the VI server functions. When building the RT EXE you will need to explicitly include any VIs that you call dynamically in the Build Specification for the RT EXE.

Hi LV_FPGA_SE

Are there any caveats to running dynamically called VIs on LV RTOS?

My main question is with respect to memory allocations.

I am worried that dynamically called VIs could chew up contiguous memory for large arrays.

At present I initialise my arrays at bootup and am very cautious not to dynamically increase their size or make copies, especially when the application has to run for days/weeks/years.
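The allocate-once approach can be sketched, by analogy, as a fixed-capacity ring buffer: storage is created once at start-up and new samples overwrite the oldest ones, so the buffer never grows during a long run. This is a Python illustration with a hypothetical class name; it does not describe how the LabVIEW RT memory manager behaves.

```python
from array import array


class PreallocatedRing:
    """Fixed-capacity circular buffer: storage is allocated once at start-up,
    and writes overwrite the oldest sample instead of growing the array."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._buf = array("d", [0.0]) * capacity  # one allocation, never resized
        self._capacity = capacity
        self._next = 0   # slot that will be written next
        self._count = 0  # number of valid samples (<= capacity)

    def push(self, value: float) -> None:
        self._buf[self._next] = value
        self._next = (self._next + 1) % self._capacity
        self._count = min(self._count + 1, self._capacity)

    def latest(self, n: int) -> list:
        """Up to n most recent samples, oldest first (note: this read does copy)."""
        n = min(n, self._count)
        start = (self._next - n) % self._capacity
        return [self._buf[(start + i) % self._capacity] for i in range(n)]
```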

I have not had the need to use dynamic VIs on RTOS - is there a benefit to using them?

The main one I could see is if it freed up resources - but does this actually happen?

Cheers

Jon

-

Thanks for the responses, guys :beer:

Cheers for the links as well.

That VI will work a treat, especially since it's XP & Vista compatible.

As VIPM is a widely distributed LabVIEW product, I am still interested to know if anyone knows how VIPM works around access to the Program Files folder.

Cheers

JG

-

I get two "containers" for a LV application in my taskbar in Vista (XP only has one, which is good).

It has a splash screen that then launches the main VI.

One container is redundant; the other maximises/minimises the application.

Any ideas how to clean this up?

HideRootWindow=True has been tried but does not work.

Cheers

JG

-

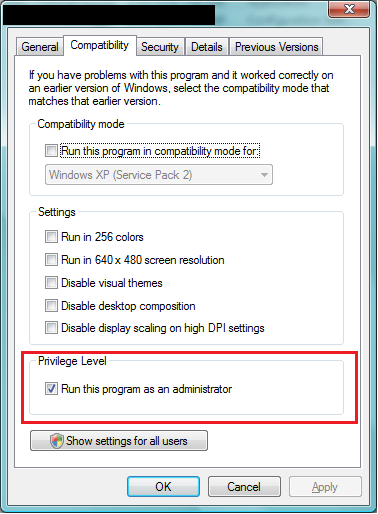

How are developers handling installing on Vista?

I normally like to store private files in the data folder @ C:\Program Files\<application>\data or similar.

However, the application cannot access them without ticking the checkbox in the executable's properties and turning on administrator rights.

Is there any way to get around this with the NI installer?

I have also noticed that VIPM does not have this enabled and it works!

Any suggestions on what to do or any pointers to any information?

There are a few topics on Windows Vista here and here.

NI whitepaper on virtualisation is here and the parent page here

Cheers

JG

-

QUOTE (jlokanis @ Sep 12 2008, 08:57 AM)

Before I totally reinvent the wheel, has anyone created replacements for the one and two button dialogs that do not block the root loop in LabVIEW? The main features I would need them to have are:

Ability to resize to fit message and button texts, like the originals do.

Reentrant (so one does not block another, in case many threads want to call this at the same time, and yes I know this might not always make sense.)

Centers itself on the active calling window (and not the screen, like the 3 button dialog does)

Does NOT block the root loop!

I can almost do this with the 3 button dialog, but it centers itself on the screen and is not reentrant.

thanks!

-John

PJM had a post with a blog reference that did the text/button changes.

I'm not sure whether it fits the other requirements.

I think it came up in the "Sequence structure is bad / Invent the new dialog challenge" series of posts.

Worth asking him or checking it out.

Cheers

JG

-

Following on from Tom's paper chase and internal NI certification discussion, this question is an offshoot that I guess only NI posters can answer, but it is open for discussion:

Do the exam markers actually sit through the NI courses? :question:

One example: I copied NI style from the Intermediate course when I sat the CLD, and got picked up on it.

[More specifically: the use of coercion dots for the variant in the queue data type cluster when making a wrapper VI for the display queue.]

I did not do the data-to-variant conversion, but nor does NI in their course, so I thought it was OK.

But then they penalise for it in the exam - I find that very hypocritical, inconsistent & annoying. :headbang:

-

QUOTE (jgcode @ Aug 20 2008, 07:01 AM)

I hope so - otherwise our Dev Suite subscription won't be here til Q3 - which usually turns into Q4. Don't know about around the globe - but they are so slow in Australia!

Looks like I will have to eat my shorts...

Guess what turned up in the mail today....

...8.6

I am humble enough to give NI a big shout-out apology.

-

QUOTE (LV_FPGA_SE @ Aug 22 2008, 07:45 AM)

In fact the code, more correctly called the bitstream, that is running on the FPGA is more like a digital circuit diagram (think EE, not CS) than any kind of program. So in order to preserve dataflow when converting a LV diagram to FPGA, extra code/circuitry is added so that the circuit implemented on the FPGA behaves like a LV VI. The most common part of this process is the Enable Chain. The enable chain enforces data flow from one portion of the FPGA code/circuit to the next by setting an enable flag whenever the data output of one piece of code/circuit is valid. The next piece of code/circuit will not perform its action until the input data is valid, as stated by the explicit signal from the previous piece of code/circuit.

Due to the mapping of LV code to the FPGA, wires and the data associated with them take up a significant portion of the limited FPGA circuit real estate ("space"), so in LV FPGA programming you always optimize your code by minimizing the amount of data on your diagram. Therefore a sequence structure is preferable to data flow if you don't need the data for something else. In addition, error clusters don't exist in LV FPGA and are not an option. FPGA programmers don't make errors.

With a name like LV_FPGA_SE, I was looking forward to your explanation! :beer:
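To make the enable chain described above concrete, here is a toy, cycle-by-cycle Python model: each stage holds a registered (enable-out, data) pair, and a stage only computes on a clock edge where the previous stage's enable-out is true. It is a simplified software model under those assumptions (each stage fires once, one clock per stage, hypothetical function name), not the circuitry the LabVIEW FPGA compiler actually generates.

```python
from typing import Callable, List, Tuple

Stage = Callable[[int], int]


def run_enable_chain(stages: List[Stage], x: int, max_cycles: int = 100) -> Tuple[int, int]:
    """Clock a chain of stages. A stage computes only on a cycle where its enable-in
    (the previous stage's registered enable-out) is true. Returns (result, cycles)."""
    if not stages:
        raise ValueError("need at least one stage")
    # regs[i] is stage i's registered (enable_out, data) from the previous clock edge.
    regs: List[Tuple[bool, int]] = [(False, 0)] * len(stages)
    for cycle in range(1, max_cycles + 1):
        new_regs = []
        for i, stage in enumerate(stages):
            # The first stage is enabled by the chain input; the others by their predecessor.
            enable_in, data_in = (True, x) if i == 0 else regs[i - 1]
            if enable_in and not regs[i][0]:
                new_regs.append((True, stage(data_in)))  # compute, then raise enable-out
            else:
                new_regs.append(regs[i])  # hold the previous output
        regs = new_regs
        if regs[-1][0]:
            return regs[-1][1], cycle
    raise RuntimeError("chain did not complete within max_cycles")
```

With three stages the result only appears on the third clock, which is the latency this handshaking adds on top of the logic itself.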

-

QUOTE (Aristos Queue @ Aug 22 2008, 06:17 AM)

I've come to the conclusion that if the sequence structure is bad then the state machine is worse. Why? Because in this case the state machine would be nothing more than a stacked sequence structure with more runtime overhead. If a flat sequence structure is considered better than a stacked sequence for readability, then turning the sequence into a case structure is a step backwards. And because the cases run in the same sequential order every time, then you've added the overhead of the while loop and the case selection without any benefit.

While I agree with your other comments, I don't think a state machine should be compared to a stacked sequence at all.

For the following reasons (off the top of my head):

a) It is a design pattern

b) It increases readability (with an enum) - I would say that this is the most important point, seeing as a large part of coding is about reading, especially when doing maintenance/upgrades

c) Dataflow does not run backwards as it does with sequence structures, because it uses USR/SR

d) Its logic is scalable - although it may be sequential in operation now, states can be added later, and the logic does not have to be sequential

e) Its design pattern is scalable - without a doubt an SM + FGV (or whatever nomenclature you wish to use) is one of the best design patterns I have found for creating a module (following the rules of encapsulation, loose coupling, high cohesion etc.)
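As a text-language sketch of points (b) to (d), here is an enum-driven state machine in Python: the `while` loop and `if`/`elif` chain stand in for the while loop and case structure, and the `state` and `data` variables play the role of shift registers. The state names and the acquire/process logic are hypothetical placeholders.

```python
from enum import Enum, auto


class State(Enum):
    INIT = auto()
    ACQUIRE = auto()
    PROCESS = auto()
    SHUTDOWN = auto()
    DONE = auto()


def run_state_machine(samples_per_pass, passes):
    """While loop + case selector; `state` and `data` carry values between iterations."""
    state, data, log = State.INIT, [], []
    remaining = passes
    while state is not State.DONE:
        log.append(state.name)  # readable trace, thanks to the enum names
        if state is State.INIT:
            data, state = [], State.ACQUIRE
        elif state is State.ACQUIRE:
            data = data + list(range(samples_per_pass))  # stand-in for real I/O
            remaining -= 1
            state = State.PROCESS
        elif state is State.PROCESS:
            # Non-sequential transition: loop back until enough passes are done.
            state = State.ACQUIRE if remaining > 0 else State.SHUTDOWN
        elif state is State.SHUTDOWN:
            state = State.DONE
    return data, log
```

The PROCESS state loops back to ACQUIRE, so the order is not fixed the way a stacked sequence's frames are, and adding a state means adding one enum value and one more branch.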

-

QUOTE (jgcode @ Aug 21 2008, 01:46 PM)

QUOTE (shoneill @ Aug 21 2008, 03:50 PM)

Or use the OpenG versions with error inputs and outputs. You DO wire the error terminals, right? It's a better solution than sequence structure and needs less space on the diagram.

Shane.

I know this topic has been moved but...

Dude - I know OpenG is available, as I mentioned it.

It's not a better solution - it's a different solution.

And no, if you are already using the flat sequence, then it doesn't have to take up more space.

-

QUOTE (mross @ Aug 22 2008, 01:46 AM)

You said: "a wire and a corresponding data type and in/out terminals would take more FPGA space than just the enable string of a sequence structure."

Just what is this "space" you are talking about? Screen space? The space of available gates? My first thought is that you mean visual real estate on a block diagram.

Is this text programming? Like I said, I don't savvy FPGA. I thought FPGAs were programmed on a big monitor where wires and sequence structures mean the same thing as they do for regular DAQ equipment and programming. I have no idea what the "enable string of a sequence structure" might be.

Mike

Another great point is that FPGA code uses them a lot.

Check out the demos in the Example Finder, if you can, and you will see that when you are programming nodes on the FPGA, flat sequence structures are used a lot.

-

QUOTE (Raymond Tsang @ Aug 21 2008, 08:53 AM)

Thanks! Got it. But... because I'm taking data every second (or 0.1 sec), every second I have to pass a huge array through a shift register and put in one new entry. Is there a function in the XY graph where I can add a new point in every iteration?

Ray

The default history buffer for a strip chart is 1024 points. That is hardly huge.

I have tried passing 50-100K points without any struggle.

Obviously the user can only see so much resolution anyway!

What is your definition of huge?

Sample rate x "time needed to be displayed" = on-screen buffer size
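The sizing rule above is simple arithmetic: for example, 10 samples/s shown over one hour is 10 × 3600 = 36,000 points. The Python sketch below pairs that calculation with a chart-style history (a `collections.deque` with `maxlen`) that takes one new point per iteration and drops the oldest once full, which is roughly what a chart's history buffer does for you. The class and function names are hypothetical.

```python
import math
from collections import deque


def display_buffer_size(sample_rate_hz, display_seconds):
    """Sample rate x time to be displayed = on-screen buffer size (rounded up)."""
    return math.ceil(sample_rate_hz * display_seconds)


class ChartHistory:
    """Chart-style history: one new point per loop iteration; when full, the
    oldest point is dropped, so memory use stays fixed."""

    def __init__(self, length):
        self.points = deque(maxlen=length)

    def add(self, x, y):
        self.points.append((x, y))
```

Usage: `history = ChartHistory(display_buffer_size(10, 3600))`, then call `history.add(t, value)` once per loop iteration instead of rebuilding the whole array.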

Do you need to write a point at a time to the screen or can you update the screen in chunks?

Do you need to write every point to the screen or can you decimate the array?
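If the array is decimated for display, one option (sketched here in Python, with a hypothetical function name) is min/max decimation: split the data into fixed-size buckets and keep only each bucket's minimum and maximum, in index order, so short spikes still show up on screen. The bucket size would be chosen from the number of points the graph can actually resolve.

```python
from typing import List, Sequence, Tuple


def minmax_decimate(y: Sequence[float], bucket: int) -> List[Tuple[int, float]]:
    """Keep the min and max of each bucket (in index order) as (index, value) pairs,
    so narrow peaks survive the reduction."""
    if bucket < 1:
        raise ValueError("bucket must be >= 1")
    out = []
    for start in range(0, len(y), bucket):
        chunk = range(start, min(start + bucket, len(y)))
        i_min = min(chunk, key=lambda i: y[i])
        i_max = max(chunk, key=lambda i: y[i])
        for i in sorted({i_min, i_max}):  # one point if min and max coincide
            out.append((i, y[i]))
    return out
```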

Best way to find Max & Min in array subset (in LabVIEW General)

QUOTE (jdunham @ Oct 17 2008, 11:08 AM)

Thanks, jdunham.

When passing an array into and out of the subVI, you could still edit the array in the subVI and pass out the same data type but with a different allocation (e.g. by performing a Build Array).
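A loose Python analogy for this concern: both functions below take a list and return a list of the same type, but only the first hands back the caller's own buffer, while the second builds a new one, much as a Build Array inside the subVI would. The function names are hypothetical, and this only illustrates the distinction; it does not show how the LabVIEW compiler decides buffer reuse.

```python
def scale_in_place(values: list, k: float) -> list:
    """Modifies the caller's list and returns the same object (buffer reused)."""
    for i in range(len(values)):
        values[i] *= k
    return values


def append_point(values: list, x: float) -> list:
    """Same type out, but `values + [x]` allocates a brand-new list."""
    return values + [x]
```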

So I am wondering how smart the compiler is.

Isn't the point of inplaceness to guarantee buffer reuse?

I can see the problem if another operation was performed in parallel in the inplaceness structure...

But I would love to know: if the subVI was in an inplaceness structure and no other functions were run on the data in the structure, would buffer reuse be guaranteed? :question: