-

Posts

1,068 -

Joined

-

Last visited

-

Days Won

48

Everything posted by mje

-

It would be news to me if there was a way of doing it. In the past I've wanted to treat blocks of a large file as native file system objects but never found a way of doing it at the operating system level. I figured you're either hanging onto a refnum/handle of the big file and synchronizing I/O operations yourself, or working with a folder holding a collection of files to merge after the fact. Neither was ideal but I chose the latter since I didn't need real-time read access and it's a whole lot less work.

-

Yeah, I saw that idea exchange entry. It broadly falls under the same issue, but this is a bit more specific. I'm fine with the blurriness that can result from the OS scaling the native display, that's expected when there's not an integer multiplier mapping between the scales. At 200% my display isn't blurry, it's just more pixelated, but a fractional scale of 150% or the like will produce those effects which I wouldn't classify as a defect. However there's something special about the mouse cursor-- while the rest of the interface is scaled by the OS as expected, the custom LabVIEW cursors are not, which adversely affects usability. However they can be scaled. Take for example this screenshot of the same bit of code taken via the Windows Step Recorder: Somehow there's an auto-magical cursor scaling in the image that doesn't appear on screen. Shenanigans I tell you. As for DPI, Windows handles that quite elegantly. There's a seamless transition between modern applications and those which the OS is treating as legacy, no fiddling with mouse settings is required. Windows 10 is very impressive for this and is one of the reasons we only plan on supporting high resolutions for our LabVIEW applications under Windows 10.

-

TL;DR: Is there a way to fix the small cursors in LabVIEW on Windows 10 while working with desktops scaled beyond 100%? Now that I routinely work on 4k displays, the legacy IDE and applications created from it are becoming a bit hard to use. The non-system cursors LabVIEW uses are too small-- they don't scale like the rest of the user interface on Windows 10, inclusive of the system provided cursors: That cross on the diagram is smaller than one of the tunnels. To add insult to injury, when I capture a screenshot with the cursor it miraculously scales in the image, so I'm thinking this is something LabVIEW is doing with its cursors that prevents the OS from resizing them (the rest of the IDE scales beautifully with the built-in Windows scaling). Also why I'm posting an image of my screen (don't tell anyone). I can work around this by having the OS flash the cursor position when I lose it. Which happens a lot. But I am worried about complaints that may creep up from users of our applications because the non-system cursors on front panels are similarly unscaled. Is there a way to fix this? I'll preemptively say NG isn't an option, there are reasons I'm still working in LabVIEW 2017.

-

Displaying a transparent PNG programatically

mje replied to Neil Pate's topic in Development Environment (IDE)

Seriously vugie, BitMan is a fantastic library.

-

Yep, I've confirmed that mucks things up good. Looks like I'll have to go hooovahh's route and cache the results of entire strings at a given font setting. Ugly, since the initial render will still be slow, but follow-up renders will be quick.
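A minimal sketch of the whole-string caching idea, written in Python since LabVIEW diagrams don't paste as text. The `measure` callable stands in for the slow call to Get Text Rect.vi, and the `FontKey` tuple layout is an assumption made purely for illustration.

```python
from typing import Callable, Dict, Tuple

# Hypothetical font description: (name, size, bold, italic).
FontKey = Tuple[str, int, bool, bool]
Rect = Tuple[int, int]  # (width, height) in pixels


class TextRectCache:
    """Remember the measured bounds of whole strings per font setting."""

    def __init__(self, measure: Callable[[str, FontKey], Rect]) -> None:
        self._measure = measure  # stand-in for the slow measurement call
        self._cache: Dict[Tuple[FontKey, str], Rect] = {}
        self.misses = 0  # number of times the slow call was actually made

    def get(self, text: str, font: FontKey) -> Rect:
        key = (font, text)
        rect = self._cache.get(key)
        if rect is None:
            # First render of this string at this font: pay the full cost once.
            rect = self._measure(text, font)
            self._cache[key] = rect
            self.misses += 1
        return rect
```

The first render still pays for every distinct string, which matches the trade-off described above; only repeat renders get cheaper.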

-

Given we're talking 7 or 8 bit characters depending on if you care about extended codes, and the first 32 codes aren't printable, I'd go with an array for direct indexing.

-

Come to think about it, this is LabVIEW-- unicode/multi-byte isn't exactly a thing. So there's not a lot of printable characters. The interface uses the same font + size for the whole display, so when it's changed, run Get Text Rect.vi on all possible characters caching the results, incurring a relatively small cost, then the bounds for any string can easily be calculated without making calls to the underlying GDI layer. Should be fast. May need to have the caches keyed by style (bold, italic, none, or both).
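A rough sketch of that per-character cache in Python. It assumes an 8-bit character set, a hypothetical `measure_char` callable standing in for Get Text Rect.vi, and that a string's width is simply the sum of its character widths, which may not hold for every font.

```python
from typing import Callable, Dict, List, Tuple

FIRST_PRINTABLE = 32  # codes 0-31 are not printable
TABLE_SIZE = 256      # 8-bit (extended) character codes

Style = Tuple[bool, bool]  # (bold, italic)


class GlyphWidthCache:
    """Per-style lookup tables of character widths, indexed by character code."""

    def __init__(self, measure_char: Callable[[str, Style], int]) -> None:
        self._measure_char = measure_char  # stand-in for the slow per-call measurement
        self._tables: Dict[Style, List[int]] = {}

    def invalidate(self) -> None:
        """Call when the display font or size changes."""
        self._tables.clear()

    def _table(self, style: Style) -> List[int]:
        table = self._tables.get(style)
        if table is None:
            # Measure every printable code once; non-printable codes stay at 0.
            table = [0] * TABLE_SIZE
            for code in range(FIRST_PRINTABLE, TABLE_SIZE):
                table[code] = self._measure_char(chr(code), style)
            self._tables[style] = table
        return table

    def text_width(self, text: str, style: Style = (False, False)) -> int:
        table = self._table(style)
        # Direct array indexing; characters outside the table are ignored.
        return sum(table[ord(ch)] for ch in text if ord(ch) < TABLE_SIZE)
```

Each style costs 224 measurements up front (codes 32 through 255), after which any string width is a handful of array reads.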

-

I've identified a bottleneck in some of my more text-heavy picture based user interfaces as calls to Get Text Rect.vi. It's adding on the order of 100 - 1000 ms rendering time to my interface which otherwise does things in 10 - 100 ms depending on data density. To make matters worse it gets slower by a factor of 5x or so if a user-defined font is used (anything other than application/system/dialog). User-defined fonts are required to alter text size, so...yeah. S. L. O. W. I'm platform locked so the GetCharABCWidths method springs out, but I'd have to dig a bit to figure out the data structures used in that call as I haven't really dealt with it before. Has anyone tackled this before and can perhaps suggest alternatives?
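For reference, a sketch of the data structure behind the Win32 `GetCharABCWidthsW` call, expressed with Python's `ctypes`. The `ABC` layout and the function signature follow the Windows GDI documentation; the helper names are made up for illustration, and how a device context with the right font selected would be obtained from LabVIEW is not addressed here.

```python
import ctypes
import sys


class ABC(ctypes.Structure):
    """Mirror of the Win32 ABC structure filled in per character."""
    _fields_ = [
        ("abcA", ctypes.c_int),   # spacing added before the glyph (can be negative)
        ("abcB", ctypes.c_uint),  # width of the drawn part of the glyph
        ("abcC", ctypes.c_int),   # spacing added after the glyph (can be negative)
    ]


def advance_width(abc: ABC) -> int:
    """Total horizontal advance of one character: A + B + C."""
    return abc.abcA + abc.abcB + abc.abcC


def query_abc_widths(hdc: int, first: int, last: int) -> list:
    """Hypothetical helper: fetch ABC widths for character codes first..last.

    `hdc` must be a device context handle with the font already selected.
    """
    if sys.platform != "win32":
        raise OSError("GetCharABCWidthsW is only available on Windows")
    fn = ctypes.windll.gdi32.GetCharABCWidthsW
    fn.argtypes = [ctypes.c_void_p, ctypes.c_uint, ctypes.c_uint,
                   ctypes.POINTER(ABC)]
    fn.restype = ctypes.c_int
    buffer = (ABC * (last - first + 1))()
    if not fn(hdc, first, last, buffer):
        raise OSError("GetCharABCWidthsW failed (it only works for TrueType fonts)")
    return list(buffer)
```

One call can fill a whole range of characters, so the per-character cost is far lower than measuring strings one at a time.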

-

Good one, you may be onto something. I'll do some investigating and see what surfaces. Cheers!

-

Now that I'm doing more thinking about this, a VI that crawls the loaded VIs in memory as part of the splash load may do the trick. Dynamically linked VIs and clones would need to be handled differently.

-

Indeed, it's your solution that got me wondering if there's a way to get notification. All the data is clearly available in VI server to do a polling solution, but that would be pretty adverse to performance. A few hundred UIs to poll and it would have to be frequent enough to catch freshly loaded VIs before they're displayed to avoid twitchy behavior. All in I think I'd be back to square one having to modify each VI to keep the panels hidden until the global API has had a crack at transforming the panel, otherwise it could look pretty hack-ish.
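To make the cost of polling concrete, here is a language-neutral sketch in Python. `list_loaded` is a hypothetical stand-in for whatever VI server query enumerates the panels in memory, and `on_new` for the scaling or translation pass; neither is a real LabVIEW API.

```python
from typing import Callable, Iterable, Set


def poll_once(list_loaded: Callable[[], Iterable[str]],
              seen: Set[str],
              on_new: Callable[[str], None]) -> int:
    """One polling pass: handle panels that appeared since the last pass.

    Returns the number of newly handled panels. `seen` is updated in place.
    """
    current = set(list_loaded())  # every pass enumerates everything in memory
    new = current - seen
    for name in sorted(new):
        on_new(name)
    seen |= new
    seen &= current  # forget unloaded panels so a reload is handled again
    return len(new)
```

Every pass walks the full list even when nothing has changed, and a panel loaded just after a pass stays untouched until the next one, which is where the twitchy behavior would come from.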

-

I'm wondering if there's a way to hook into VI server and register for notification any time a front panel is loaded. Not a specific front panel, but any in the owning application instance. I'm thinking of a VI that can run in the background and inspect each front panel that gets loaded to operate on it. Immediate use cases of interest are to apply automated interface scaling and language translation at run-time. I'm brainstorming ideas trying to find something that scales better than having to modify each and every user interface VI in an existing code base to make calls into a new API that is created.

-

I see, yes that makes sense. I get how that's a problem in LabVIEW since there is no lower level representation presented beyond the block diagram. For example when I debug my optimized gcc code I can always peer at the resulting assembly to get an idea of what's going on when code seemingly jumps from line to line or look at registers when a variable seemingly vanishes and never makes its way into RAM. Without a lower level presentation, you're pretty much hosed with respect to debugging if any optimizations are enabled. I withdraw my objection, especially since there's some reference to optimizations in the dialog if I recall. Seriously though, thanks for taking the time to set me straight. Cheers, AQ.

-

I'd expect compilation to be different, how else will all the debug symbols get put in place? But what I don't expect is all the other stuff you said goes on. The text presented to me in the dialog says "Allow debugging." Not "Enable debugging, and constant folding, and loop invariant analysis, and target specific optimizations, and a bunch of other stuff." I expect the checkbox to toggle letting me attach a debugger to the VI to perform various forms of debuggery. Stuff like breakpoints, probes, step execution. Taken directly from the LabVIEW 2017 context help: I've been using LabVIEW since the 90s and had no idea other optimizations were tied to this checkbox until you mentioned it the other day. Nowhere had I ever read it was. It may be obvious to you, someone who's intimately familiar with the innards of LabVIEW, but LabVIEW.exe is a black box to me. That's what I mean by hacked up. There's a discontinuity between the function and indication of that checkbox. The fact that the setting affects things other than debugging means one of two things: the setting shouldn't be used for these other purposes; or the function of the checkbox has not been clearly expressed. I'm not trying to argue against having these optimizations and such. They're good and part of what makes LabVIEW so efficient. But since that checkbox really isn't "Allow debugging" and more "Allow pandora optimization", it may warrant some review of how the information is presented to the user? You'll also be my hero if you can get the word "debuggery" into updated UI or documentation.

-

Well, it's not different at all-- the beauty of naivety! That checkbox is voodoo as far as I'm concerned, and given what you've said I'd argue it's either grossly misnamed or misused. Literally. It says one thing and does a "thousand" other things. You're already at the infinity point. Your point about optimization is completely valid. I don't think user optimizations should be a different class than compiler ones for this context. I'm on board with what you're trying to do: a VI scoped switch to allow code compilation. I'd still argue the debugging flag is the wrong place to go about it. Just because that flag has been hacked before doesn't mean it's the right thing to do again. The argument for scaling comes to mind as well. What if I wanted to do similar things for unit testing, reentrancy, or general mischievousness?

-

To me debugging enabled means the ability to attach a debugger to the code, and toggles all the stuff that needs to be in place to push meaningful information to the debugger. Any other use of the setting seems to be an abuse. I appreciate the case you've presented but to me it's the wrong way to do it. Unless of course some magical API exists that could extend debug functionality. Just kidding. Maybe? Seriously though, I get twitchy anytime a proposal is made to take something that already exists and use it to control something for which it wasn't intended.

-

Splash screen front panel flashes in edit mode before running

mje replied to colinleer's topic in User Interface

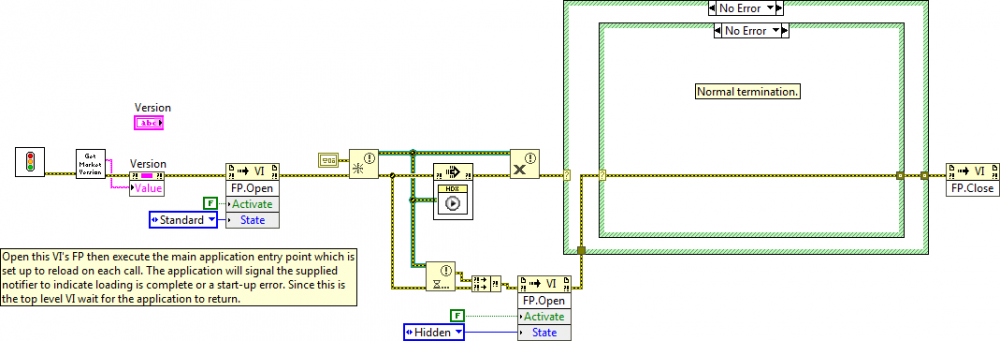

Having the "Show front panel when called/loaded" options cleared from the window appearance properties is very important. For me the basic splash template is:

1. Splash VI initializes itself.
2. Splash VI shows itself.
3. Splash VI creates a notifier to pass to the main application which will indicate loading is complete or an error.
4. Splash VI executes the application entry point. This VI has been configured to reload for each call via the "Call Setup..." context menu when you right click the VI on the splash diagram. This triggers loading the VI hierarchy.
5. Application signals the notifier that was passed in when it's ready to go or if an error has occurred.
6. Splash VI hides itself then waits for the application to return and reports on errors as appropriate.

For example: The outer error frame handles low level errors for which there's really no additional information and the inner error frame will report errors returned from the application.

-
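The splash template in the post above can be sketched in Python with a thread and a one-slot queue playing the role of the notifier. All of the names (`run_splash`, `app_entry`, `show`, `hide`, `report_error`) are made up for illustration; this shows the sequencing only, not how LabVIEW loads a VI hierarchy.

```python
import queue
import threading


def run_splash(app_entry, show, hide, report_error):
    """Show a splash, start the application, hide once it signals readiness."""
    show()
    notifier = queue.Queue(maxsize=1)  # passed to the application
    outcome = {}

    def runner():
        try:
            # The application puts None on the notifier when it is ready,
            # or an exception object if loading failed.
            app_entry(notifier)
        except Exception as exc:  # low level failure the app never reported
            outcome["error"] = exc
            try:
                notifier.put_nowait(exc)  # make sure the splash stops waiting
            except queue.Full:
                pass

    worker = threading.Thread(target=runner)
    worker.start()
    status = notifier.get()  # blocks until the application signals
    hide()
    worker.join()            # wait for the application to return
    error = status if status is not None else outcome.get("error")
    if error is not None:
        report_error(error)
    return error
```

The two error paths loosely mirror the two error frames mentioned above: errors the application reports through the notifier, and lower level failures caught around the call itself.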

I see this issue regularly. I do a lot of work where the "real" UI is just a big picture indicator. One stray coordinate on that blue picture wire will send the Picture to Pixmap VI into a tizzy as that's the first time a flattened 2D map is made of the image data. That VI also has horrible error handling-- which is to say it has none-- it'll just crash LabVIEW with the out of memory error if asked to do something absurd. Some general guidelines for interfaces I write that rely on the Picture to Pixmap VI:

* Constrain dimensions to some reasonable maximum density. I usually do 25 MP or something. Your mileage may vary depending on expected system power and color depth.
* Test VIs which create picture data. Those pictures have 32-bit coordinate space (16-bit for each axis) which allows for some very unreasonable map sizes if you're not careful. Any VIs you use to generate picture data should have their edge cases well defined and tested to make sure you're not trying to draw data which may demand terabytes worth of RAM when converted to a map.
* Be aware of vector vs (bit)map data. The native picture controls and indicators can work fine with pure vector data which will be more tolerant of large map spaces but generally perform really poorly if there's any real data density. Rendering maps is far quicker, but demands the use of the offending Picture to Pixmap VI.
* Cringe with fear that you've been reduced to having to work with the LabVIEW picture API. There's really no good way out if you have to go down this route.
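A small sketch of the size check implied by the first two guidelines, in Python. The 25 MP limit comes from the post; the function names and the rectangle convention (left, top, right, bottom) are assumptions for illustration.

```python
MAX_PIXELS = 25_000_000  # the 25 MP ceiling suggested above


def pixmap_bytes(width: int, height: int, bits_per_pixel: int = 32) -> int:
    """Memory needed for a flattened width x height map, rounded up to bytes."""
    if width < 0 or height < 0:
        raise ValueError("dimensions must be non-negative")
    return (width * height * bits_per_pixel + 7) // 8


def check_pixmap_size(left: int, top: int, right: int, bottom: int,
                      max_pixels: int = MAX_PIXELS) -> tuple:
    """Validate picture bounds before converting to a pixmap.

    Returns (width, height), or raises ValueError if the bounds are empty,
    inverted, or larger than max_pixels.
    """
    width = right - left
    height = bottom - top
    if width <= 0 or height <= 0:
        raise ValueError("picture bounds are empty or inverted")
    if width * height > max_pixels:
        raise ValueError(
            f"{width} x {height} exceeds the {max_pixels} pixel limit")
    return width, height
```

For scale, a 5000 x 5000 map at 32 bits is 100 MB, while a map spanning the full 16-bit range on both axes at the same depth works out to roughly 17 GB, so one stray coordinate is enough to blow past any sensible budget.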

-

DereferenceByName Xnode ( -> operator in C)

mje replied to UnlikelyNomad's topic in Code In-Development

Oh right. I jumped to objects by analogy to the C languages, completely forgetting the hard boundary between clusters and objects in LV.

-

DereferenceByName Xnode ( -> operator in C)

mje replied to UnlikelyNomad's topic in Code In-Development

Property nodes already do this? Granted it requires implementing the property, but I'm generally not a fan of pulling data from class wires via the cluster primitives as it makes it hard to track where data is consumed.

-

This amuses me. The policies I'm exposed to originate from nothing other than malice.

-

IBM Rational Team Concert. Because the bureaucracy hates us. There's an insurgency at my company trying to get the Atlassian/GIT solution traction, but I may have already said too m--

-

At the risk of veering further off topic, we sometimes use an Arduino or the like to bang out a quick proof, but you're right in that these never make it into anything real. The idea is that the microcontrollers that these platforms are based on have many peripherals that can do most of the common operations I need to do in hardware without the need for an FPGA. For most of my applications a 100 MHz 32-bit CPU is serious horsepower when I have the heavy lifting offloaded to the peripheral hardware. When the on board peripherals are insufficient there's literally over a dozen communication options, often with several instances of each, never mind the ability to tap right into the system bus on the more sophisticated µC architectures. Need to upgrade the ADC? Fine, that's a $15 IC that will tap into the SPI bus. Easy. Repeat for a few other obscure needs that the built-in stuff can't handle and when all is said and done I have a board that does exactly what we need and costs maybe $200-$500 per unit at volumes of 10-25. While I have no problem building a single $5,000 NI platform to test something, scaling the volume is sometimes a hard sell. What happens when we need to make 5 more to move from proof to concept? Then 25 more to ship around the world for collaborators to test and bang on for a bit? It's hard to sweep numbers like that under the rug for lower visibility projects, but even for the high ones where it could fly, how do I fit one of those NI boxes in my widget that's supposed to be smaller than any of the NI chassis (except the SOM, obviously)?

-

The only public document I'm aware of discussing long term plans is their road map at http://www.ni.com/pdf/products/us/labview-roadmap.pdf. It is unfortunately light on details. Yes, I think Python is a serious contender. I was shocked when they announced DAQmx support for it on Tuesday which will further strengthen the Python position. For me the relevancy is the relative ubiquity of microcontrollers, the tools to program them, and the cheap cost for low volume PCB manufacturing. I'm hard pressed to find any need in my research group where I'd prefer to use the NI RT platform over rolling our own design with bare metal programming in C. Cortex-M devices are capable as hell and the cost of printing/populating several custom PCBs is usually cheaper than a single NI RT chassis. I'd still go to NI if an FPGA application sprang up, but those are few and far between in my line of work. As for the desktop, LabVIEW has been losing ground for quite some time, but I still touch it from time to time.

-

NG looks like it will be a solid modern development environment though it's premature for me to jump on that bandwagon yet. Nonetheless the long term plans address my major criticisms of the legacy platform should they come to pass-- I'm hoping they materialize soon enough before the platform as a whole fades from relevancy in my research. The fact that you don't see mobs with pitchforks marching on Austin from core partners with a vested interest in the old platform should give some indication of their commitment. While there will be an end for the old environment, I don't expect it will be until NG is well matured.