Everything posted by asbo

-

I'll bite - why?

-

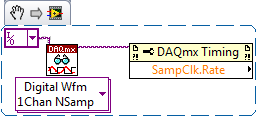

This is one of the first default settings I change. I think icons on the block diagram are unnecessarily large and provide no additional value over the terminals. You're actually the first person I've (knowingly) come across who actively prefers the icons... you're like a LabVIEW unicorn.

-

Okay, so I made a new 2011 icon based off of blawson's style and will probably make another for 2012 when I have some more idle time. Applying these icons is pretty straightforward; I use Resource Hacker, which works for all the 32-bit LV executables I've tried so far (and automatically makes a backup).

1) Open up the victim executable in Resource Hacker.
2) From the menu bar select Action > Replace Icon.
3) Click the Open... button and select your icon of choice.
4) Make sure group 1 is selected in the listbox on the right; this is the ordinal of the main LV icon.
5) Click Replace, save your EXE, and you're done!

LV2011.ico

-

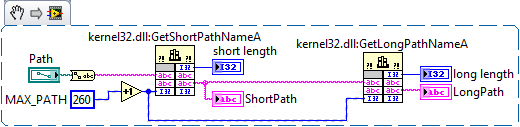

Not sure if this was directed at me, but I'm aware. One thing to keep in mind if you're going to act on the return value: if the buffer is too small, the function returns the *size of the buffer* you need for the string (null terminator included); if the buffer is big enough, it returns the *length of the string* (no null terminator).
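For readers working outside LabVIEW, here is a rough Python sketch of that return-value rule, assuming `GetLongPathNameW` as the target (the same logic should apply to `GetFullPathNameW`). The helper names `interpret_result` and `get_long_path` are made up for illustration, and the 260-character first guess is simply MAX_PATH.

```python
import ctypes
import sys

MAX_PATH = 260  # classic Win32 path limit, used as the first-guess buffer size


def interpret_result(ret, buffer_len):
    """Classify the return value of GetLongPathNameW (hypothetical helper).

    ret == 0           -> the call failed
    ret >= buffer_len  -> buffer too small; ret is the required size in
                          characters, null terminator included
    otherwise          -> success; ret is the string length, no terminator
    """
    if ret == 0:
        return ("error", 0)
    if ret >= buffer_len:
        # Equality should not occur in practice; treating it as "too small"
        # is the conservative choice.
        return ("too_small", ret)
    return ("ok", ret)


def get_long_path(path):
    """Windows-only wrapper (hypothetical) that retries with a larger buffer."""
    if sys.platform != "win32":
        raise OSError("GetLongPathNameW is only available on Windows")
    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    fn = kernel32.GetLongPathNameW
    fn.argtypes = [ctypes.c_wchar_p, ctypes.c_wchar_p, ctypes.c_uint32]
    fn.restype = ctypes.c_uint32
    size = MAX_PATH
    while True:
        buf = ctypes.create_unicode_buffer(size)
        status, value = interpret_result(fn(path, buf, size), size)
        if status == "error":
            raise ctypes.WinError(ctypes.get_last_error())
        if status == "ok":
            return buf.value
        size = value  # required size reported by the API; try again
```

Starting at 260 means the retry branch is rarely taken, which is the same trade-off as over-allocating the output buffer up front.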

-

If you're worried about a tidy block diagram, you don't need either allocation - just wire in 260 to the buffer length terminals and configure the CLN to use that input as the Minimum Length for the output buffer. You'll over-allocate in most cases, but what's a few bytes among friends? Also, you don't need to run most of these utility APIs in the UI thread.

-

Which one are you trying to use, GetFullPathName or GetLongPathName?

-

I could reproduce this in LV2010 SP1 on Windows 7 Professional x64. I could not reproduce it in LV2009f3 or LV2011.

-

Thanks - I always forget to check the NI forums... I'm disappointed blawson doesn't have a 2011 icon, I'd like them all to be a similar style. Guess it might be time to dust off Photoshop.

-

I've been using the attached LabVIEW icons for a while now so that I can tell each version of LabVIEW apart when it's in the taskbar or Alt+TAB menu. Does anyone know who made them originally and whether there exists one for LabVIEW 2011 (or LabVIEW 8.6)? LV2009.ico LV2010.ico

-

I like to think of it as a guiding life principle, actually.

-

That's true - I realized after writing that post that you can always increase or decrease scope and eventually you'll be able to coin something as "hygienic."

-

From a quick Google search, it looks like you're correct, but it dates back to LV7.

-

Off the top of my head, any VI that calls to hardware (particularly for measurement or I/O) or any VI expressly written to return data that is either time-sensitive (e.g., salted hash) or random in nature seems like it would qualify as non-hygienic.

-

Yeah, disable manually and see if it gives you the correct behavior. It's a shot in the dark, but maybe you could try using devcon to change your IP address to a different subnet, so even if it does try to keep talking it'll be on a different subnet - unless your BERT picks that up too ... In a past project, we weren't doing any error testing, but we did have to remove the UUT from the network briefly. We used managed switches and SNMP to pull that off though - maybe it'd be worth upgrading in your case?

-

The commas in your block diagram values *really* threw me off until I remembered you're from Europe.

-

That's a good clarification - programmers who have never had the pain/joy of working directly with pointers may not realize that branching a reference wire does not clone your TCP socket/VISA session/front panel control, it just lets you operate on it in more than one place simultaneously.
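A loose text-language analogy, sketched in Python: by-value data behaves as if each branch had its own copy, while a reference is just another handle to the same resource. The `Session` class is a hypothetical stand-in for a socket or VISA session, not a real API.

```python
import copy


class Session:
    """Hypothetical stand-in for a TCP socket / VISA session refnum."""

    def __init__(self):
        self.is_open = True

    def close(self):
        self.is_open = False


# By-value data: each branch behaves as if it received its own copy.
data = [1, 2, 3]
branch_a = copy.copy(data)
branch_b = copy.copy(data)
branch_a.append(4)  # only branch_a sees the change

# Reference: both "branches" point at the same underlying resource.
session = Session()
ref_a = session
ref_b = session
ref_a.close()  # ref_b now refers to a closed session as well
```

Closing the session through one branch closes it for every branch, which is exactly the surprise the post warns about.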

-

Crafty! I approve.

-

I don't disagree with you, but perhaps we're getting ahead of ourselves with that mental compilation. What a branch in a wire means, literally, is that the same piece of data needs to go to two places. What's the simplest way of accomplishing this? Copy the data. In reality, LabVIEW will optimize away as much of this copying as possible, but fundamentally every wire branch should be viewed as copying data.

-

Interpolate 1D Array Always Coerces Index for Integer Arrays

asbo replied to mje's topic in LabVIEW Bugs

It seems that the IDE and compiler (?) are getting out-of-sync until the compiler catches on, smacks the IDE in the face, and says, "Hey, you can't do that!"

Interpolate 1D Array Always Coerces Index for Integer Arrays

asbo replied to mje's topic in LabVIEW Bugs

If you don't wire an input array, you can drop a constant off the index terminal which doesn't coerce. However, try changing the representation to another non-integer type (fixed-point or single) - still no coercion. Now undo and redo - coercion dot! Report this to NI. It's a bug of some sort, but it may not have any severe consequences.

How to call user32.dll:GetWindowRect? (and why I need to)

asbo replied to MartinMcD's topic in LabVIEW General

The approach is pretty simple: re-create the RECT structure as a cluster, pass it in effectively by reference to the call, and out come your coordinates! Check out the attachment to see it in action. GetWindowRect.LV2009.vi
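For comparison, the same idea sketched in Python with `ctypes`: a structure of four 32-bit integers mirrors the RECT layout (left, top, right, bottom), and it is passed by reference so the call can fill it in. The wrapper name `window_rect` is hypothetical, and the call itself only works on Windows.

```python
import ctypes
import sys


class RECT(ctypes.Structure):
    """Mirror of the Win32 RECT structure: four signed 32-bit integers."""

    _fields_ = [
        ("left", ctypes.c_int32),
        ("top", ctypes.c_int32),
        ("right", ctypes.c_int32),
        ("bottom", ctypes.c_int32),
    ]


def window_rect(hwnd):
    """Return (left, top, right, bottom) for a window handle (Windows only)."""
    if sys.platform != "win32":
        raise OSError("GetWindowRect is only available on Windows")
    user32 = ctypes.WinDLL("user32", use_last_error=True)
    user32.GetWindowRect.argtypes = [ctypes.c_void_p, ctypes.POINTER(RECT)]
    user32.GetWindowRect.restype = ctypes.c_int  # BOOL: nonzero on success
    rect = RECT()
    # byref() plays the role of passing the cluster "by-ref" to the call.
    if not user32.GetWindowRect(hwnd, ctypes.byref(rect)):
        raise ctypes.WinError(ctypes.get_last_error())
    return rect.left, rect.top, rect.right, rect.bottom
```

In LabVIEW terms, the equivalent cluster would hold four I32 elements in that same order.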

Googling that came up with some interesting results... I never used it, but definitely played around with similar tools. I messed around with all sorts of Apogee and 3D Realms games...