Everything posted by eberaud

-

Thanks for the idea, but since I never leave the file open, I will keep it as simple as possible and create an FGV2 with only 2 cases: Read Key and Write Key. Read Key will do Open/Read/Close; Write Key will do Open/Write/Close. That should do the trick.
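For readers who think in text languages, the idea can be sketched in Python. This is only an analogy, not LabVIEW code: a single shared lock stands in for the FGV2, and the helper names `read_key` and `write_key`, the file name `settings.ini`, and the section and key names are all made up for illustration.

```python
import configparser
import os
import tempfile
import threading

# Hypothetical stand-in for the FGV2: one shared lock, so only one
# Open/Read/Close or Open/Write/Close sequence runs at any time.
_ini_lock = threading.Lock()


def read_key(path, section, key, default=None):
    """Open the file, read one key, close the file."""
    with _ini_lock:
        parser = configparser.ConfigParser()
        parser.read(path)  # a missing file simply yields an empty parser
        return parser.get(section, key, fallback=default)


def write_key(path, section, key, value):
    """Open the file, update one key, write everything back, close the file."""
    with _ini_lock:
        parser = configparser.ConfigParser()
        parser.read(path)
        if not parser.has_section(section):
            parser.add_section(section)
        parser.set(section, key, str(value))
        with open(path, "w") as handle:
            parser.write(handle)


# Small demo with an assumed file name and assumed keys.
demo_path = os.path.join(tempfile.mkdtemp(), "settings.ini")
write_key(demo_path, "main", "gain", 2.5)
print(read_key(demo_path, "main", "gain"))
```

Because every access goes through the same lock, a read can never see the file while a write is halfway through rewriting it, which is the failure described in the earlier posts.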

-

Thanks, you confirm my suspicion about the need for a locking mechanism like a semaphore or an LV2 global. I thought I could get by without one; I was wrong.

-

Thanks rolfk, my real code has error handling, but my example doesn't generate any error, so I omitted the error handling on purpose for readability. Taking a step back: all I want to do is rely on a configuration file to store parameters. I have been counting on being able to have several pieces of code asynchronously either writing or reading one parameter (not the same one). Was I wrong to count on this? How do you manipulate your INI files? Do you wrap the INI VIs inside your own API?

-

Thank you everyone. I should have emphasized that my issue is really with INI files. The only reason I am tinkering with the text file VIs is that they are the building blocks used by the INI library. This new screenshot and the attached VI show that relationship. The 2 While loops are equivalent. The text VIs in the top loop are the ones I found inside the INI VIs of the bottom loop. As you can see, both loops encounter the same random rate of failure. In the INI case, the text VI returns an empty string, and therefore the INI VI tells us the key is not found. So my problem is not fixed yet. It seems I need a locking mechanism to prevent reading and writing of the INI file from happening at the same time. Read-Write TXT issue.vi

-

So after troubleshooting a complex piece of code for a few hours, I stripped things down to the screenshot below. Apparently writing and reading a text file at the same time causes the read operation to fail. If you force sequential operations by wiring the error cluster from the Read to the Write OR VICE-VERSA, then things work fine. Does anybody know about this? Obviously my code is way more complicated and the read and write operations are done in different unrelated VIs and in an asynchronous manner. So it seems my only way to fix this is to add a locking mechanism such as a FGV2... However, the file I'm manipulating is actually an INI file and I manipulate it using the native Configuration Data palette, which deep down relies on the R/W Text file VIs. So the FGV2 would need to be added to the native Configuration Data VIs if I wanted to have a generic fix... The attached VI is saved for LabVIEW 2015. Read-Write TXT issue.vi

-

As topics about LabVIEW NXG are going to become more and more numerous, it would be nice to have a proper folder to put them in. Could an admin create a dedicated folder or category wherever it makes the most sense (at their discretion)?

-

I took the time to search for existing posts about this but surprisingly I didn't find anything. Here is what I want to achieve: I have a tiny LabVIEW executable that can perform basic operations on a single file. So far I need to open the executable, and then browse for the file. I would like to have a way to navigate in Windows Explorer while that executable is not running, and when I find a file I want to open in my executable I can right-click the file, and in the Windows context menu I can see my exe in the "Open with" menu. Even better, I would like to associate the special file extension to my executable so that I can simply double-click on it in Windows Explorer. There are probably several steps required to achieve this. Could you guide me through either one or all of them? Or point me to a useful resource? Thanks!

-

You should probably save your VI for an older version of LabVIEW. Not everybody has LabVIEW 2016.

-

I have investigated and fixed memory leaks in the past, but never one of this kind! Usually the symptom is a slow but constant increase in memory, on the order of 1MB every few minutes, which adds up to 100MB in a few hours and 1GB after a few days. When I look at the memory usage in a graph, I see a constant gentle slope and I know I have a leak. Usually the cause is a while loop that keeps opening a reference and never releases it. But this time I had 5 hours with no leak at all, where my application stayed nicely at 356MB, and suddenly it jumped to 1.5GB in a 2s interval! Then it increased by 69MB/s for about 20s before stabilizing at 3GB. Then it regularly jumped back to 2GB for 1s and then back to 3GB for 30s, 2GB for 1s, 3GB for 30s, and so on... How could that be possible? Can I really have a piece of code that manages to allocate 69MB/s? Any comments welcome!

-

Probably a good idea to delete this post now.

-

I'd suggest modifying the mechanical action of the Keep button to "Latch when released" (or "Latch when pressed", doesn't matter). This will reset the button to FALSE as soon as LabVIEW detects that it was set to TRUE by the user. So this will execute the code that populates the 2D array only once each time the user presses the Keep button. Additional comment: don't you want to restart populating the 1D array from scratch each time the Acquire button switches from FALSE to TRUE? Right now you keep appending and never discard the old elements.

-

Have you checked the error description? It is defined as follows: "The application is not able to keep up with the hardware acquisition. Increasing the buffer size, reading the data more frequently, or specifying a fixed number of samples to read instead of reading all available samples might correct the problem."

The issue might come from the fact that you're in continuous sample mode, so the hardware keeps filling a buffer and your Read function is supposed to partially flush it each time. But you only flush 1 sample when the case structure is TRUE, and you don't flush it at all when the case structure is FALSE. So the buffer fills faster than you flush it, eventually becomes full, and generates the error. I'm not 100% sure of my description but it makes sense to me.

Also, the way you generate your array of X values for your graph is weird. You reset your timestamp shift register each time the structure is FALSE. So when it becomes TRUE again, you don't compute the elapsed time since you started the VI; instead you get the elapsed time since the last time the structure was FALSE. Is that by design?
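To make the buffer argument concrete, here is a toy Python simulation. It is not DAQmx code, and the buffer size and per-loop sample counts are invented numbers chosen only to show the effect of reading fewer samples than the hardware produces.

```python
def loops_until_overflow(buffer_size, produced_per_loop, read_per_loop,
                         max_loops=10_000):
    """Return the loop iteration at which the backlog exceeds the buffer,
    or None if it never does within max_loops iterations."""
    backlog = 0
    for iteration in range(1, max_loops + 1):
        backlog += produced_per_loop            # hardware keeps filling the buffer
        backlog -= min(backlog, read_per_loop)  # software removes what it reads
        if backlog > buffer_size:
            return iteration
    return None


# Assumed numbers: 1000-sample buffer, 100 new samples per loop iteration.
print(loops_until_overflow(1000, 100, 1))    # reading 1 sample per loop
print(loops_until_overflow(1000, 100, 100))  # reading as fast as it fills
```

With these made-up values, reading a single sample per iteration overflows the buffer after a handful of iterations, while reading as many samples as are produced never does.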

-

Dear all, My company is hiring a new developer to join our software team. Please see link below for details and to apply. http://www.greenlightinnovation.com/company/careers/articles/253.php

-

Did the mass compile and everything works fine now

-

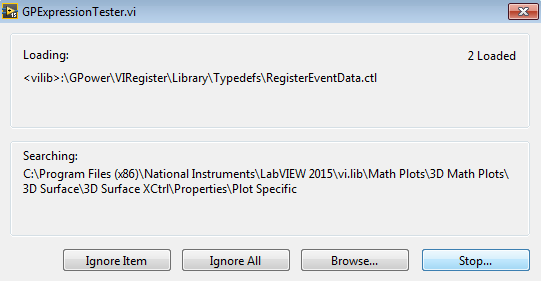

Thanks, that fixed my issue, but now it is stuck on "starting expression tester, please wait" (or something similar) and I need to kill the LabVIEW process after a few minutes

-

This is really nice! Kudos!

-

I started implementing exactly what you're describing, Tim. This RPN stuff is pretty neat.

-

I haven't had great experiences with DLLs in the past, so I wanted to stay away from them.

-

I tried, but no, Eval Formula Node.vi doesn't support min and max. Only the formula node supports it (along with modulo and remainder and others that I also need).

-

A few years ago I wrote my own equation computing algorithm for my company's flagship software. The user writes equations using variable names and constants (for example a=2*b+3), and those equations run continuously every 100ms.

The pros

- The user can add Min and Max expressions inside the equation. For example a=Min(b,c)+2.
- The syntax supports parentheses. For example a=3*(b+c).

The limitations

- You can have several operators but their priority is not respected. For example the result of 1+2*3 will be 9 instead of 7. The user has to write 1+(2*3) or 2*3+1 to get the correct result.
- You can't put an expression inside a "Power" calculation. For example, you can do a+b^c but you can't do a^(b+c). You would need to create a new variable d=b+c and then do a^d, so now you have 2 equations running in parallel all the time instead of 1.
- There is no support (even though it wouldn't be hard to add) for sin, cos, tan, modulo, square root...

I am now thinking of using a built-in LabVIEW feature (or one the community might have created) in order not to reinvent the wheel completely. Surely I am not the only person who needs to compute equations. I looked at vi.lib\gmath\parser.llb\Eval Formula String.vi and it seems to answer 90% of my needs. It is simple to use, but it doesn't support Min and Max expressions, and writing a hybrid system would be complicated. What do people use out there?

If I need to reinvent the wheel, I found interesting resources such as https://en.wikipedia.org/wiki/Shunting-yard_algorithm and https://en.wikipedia.org/wiki/Operator-precedence_parser so I think I can pull it off, but it's going to be very time consuming! Cheers
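As a rough idea of what the shunting-yard route involves, here is a minimal Python sketch (LabVIEW being graphical, a text language is used purely for illustration). It is not the algorithm from my software and not what Eval Formula String.vi does internally. The names `tokenize`, `to_rpn` and `evaluate` are invented, only the right-hand side of an equation is handled, Min and Max take exactly two arguments, and there is no unary minus and no error handling for mismatched parentheses.

```python
import operator
import re

# Operator table: precedence, right-associative?, implementation.
OPERATORS = {
    "+": (1, False, operator.add),
    "-": (1, False, operator.sub),
    "*": (2, False, operator.mul),
    "/": (2, False, operator.truediv),
    "^": (3, True, operator.pow),
}
FUNCTIONS = {"min": min, "max": max}  # two-argument functions only
TOKEN = re.compile(r"\s*(\d+\.?\d*|[A-Za-z_]\w*|[-+*/^(),])")


def tokenize(text):
    """Split an expression into numbers, names, operators and punctuation."""
    tokens, pos = [], 0
    text = text.rstrip()
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def to_rpn(tokens):
    """Shunting-yard: convert infix tokens to reverse Polish notation."""
    output, stack = [], []
    for tok in tokens:
        if tok in OPERATORS:
            prec, right_assoc, _ = OPERATORS[tok]
            while stack and stack[-1] in OPERATORS:
                top_prec = OPERATORS[stack[-1]][0]
                if top_prec > prec or (top_prec == prec and not right_assoc):
                    output.append(stack.pop())
                else:
                    break
            stack.append(tok)
        elif tok.lower() in FUNCTIONS:
            stack.append(tok.lower())
        elif tok == "(":
            stack.append(tok)
        elif tok == ",":
            while stack[-1] != "(":
                output.append(stack.pop())
        elif tok == ")":
            while stack[-1] != "(":
                output.append(stack.pop())
            stack.pop()  # discard the "("
            if stack and stack[-1] in FUNCTIONS:
                output.append(stack.pop())
        else:
            output.append(tok)  # number or variable name
    while stack:
        output.append(stack.pop())
    return output


def evaluate(expression, variables=None):
    """Evaluate an infix expression using a dict of variable values."""
    variables = variables or {}
    stack = []
    for tok in to_rpn(tokenize(expression)):
        if tok in OPERATORS:
            right, left = stack.pop(), stack.pop()
            stack.append(OPERATORS[tok][2](left, right))
        elif tok in FUNCTIONS:
            right, left = stack.pop(), stack.pop()
            stack.append(FUNCTIONS[tok](left, right))
        elif tok in variables:
            stack.append(variables[tok])
        else:
            stack.append(float(tok))
    return stack[0]


print(evaluate("1+2*3"))                               # precedence respected
print(evaluate("a^(b+c)", {"a": 2, "b": 1, "c": 2}))   # expression inside a power
print(evaluate("Min(b,c)+2", {"b": 5, "c": 3}))        # Min inside an equation
```

The three calls at the end correspond to the limitations and features listed above: operator priority, an expression inside a power, and Min used inside an equation. Adding sin, cos or square root would mean extending the function table to handle one-argument functions.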

-

Thanks for the explanation. Yes, bad terminology then...

-

I read Darren's excellent article about creating healthy dialog boxes (http://labviewartisan.blogspot.ca/2014/08/subvi-panels-as-modal-dialogs-how-to.html). I thought using a dynamic call and selecting the option "Load and retain on first call" would prevent the subVI from being loaded into memory when the caller VI is loaded but not running. I created a super light project to test it but didn't get the expected behavior:

- If I don't open the Caller, I don't see any VI in memory (except for the VI that reads the VIs in memory, of course).
- If I open the Caller, I see both the Static Callee and the Dynamic Callee, whereas I expected to see only the Static Callee.

Did I misunderstand how that option works? VIs in memory.zip

-

Any difference between application 64 bits vs 32 bits?

eberaud replied to ASalcedo's topic in LabVIEW General

If at some point you need to integrate external code, you'll likely find out that it only works with 32-bit. So unless your scope is well defined and you know it won't change, you're taking a big risk by moving to LV 64-bit.
