-

Posts

297 -

Joined

-

Last visited

-

Days Won

10

Everything posted by eberaud

-

Also be aware that LabVIEW doesn't much like controls overlaid in front of a graph; it makes the rendering job much harder.

-

Plugins, PPLs and the MGI Solution Explorer

eberaud replied to QueueYueue's topic in Application Design & Architecture

Great presentation, thanks Derek.

-

Thanks for replying. I'll go to the pub directly and see if I can meet fellow CLAs or NI staff!

-

I am attending the CLA summit for the first time next week. I'd just like to check whether anybody here is going as well, and whether you'd be interested in meeting on Sunday evening and then heading to the Sunday night social together.

-

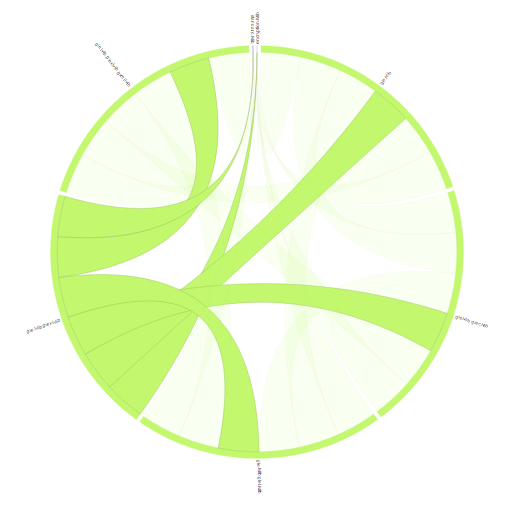

Visualizing dependencies between LabVIEW classes

eberaud replied to crelf's topic in Object-Oriented Programming

Good to know. I'll un-nest them!

-

Visualizing dependencies between LabVIEW classes

eberaud replied to crelf's topic in Object-Oriented Programming

-

Visualizing dependencies between LabVIEW classes

eberaud replied to crelf's topic in Object-Oriented Programming

Truly amazing tool. I now have a very visual way to explain the impact of my code refactoring.

Jon, where can we find the legend for the colors and shapes in the force diagram and dependency wheel? Also, I originally thought the shape of the leaves on the dependency wheel indicated the direction of the dependency, but it now seems to me that it only depends on the size the library takes on the wheel.

-

Hi,

I have contacted NI sales services, but as usual it has been a great frustration, so I will try to get some support here.

Basically, for a project I need 2 CAN ports, and I decided to go with XNET and CompactDAQ. I am trying to choose between 2 solutions:

Solution 1 --> One 4-slot chassis with 2 NI-9862 modules (one port per module)

Solution 2 --> One 1-slot chassis with 1 NI-9860 module (this module has 2 ports)

I am confident that solution 1 will work well, since I already had a project in the past with one 4-slot chassis (cDAQ-9174) and one NI-9862 module. But going with solution 2 would allow me to cut costs significantly. I just want to make sure it will be absolutely seamless and transparent for the software.

Does anybody have experience with the NI-9860? Can it be considered the equivalent of 2x NI-9862 as far as the software is concerned (LabVIEW driver), or does it sacrifice some performance, flexibility, or anything else?

Thanks!

-

Thanks to all. Things are much clearer now!

-

Oooohh

-

Thanks, that makes sense. We only work in an executable environment, so I rarely think about the needs of those who have to run from source.

-

Thanks. Still a bit of confusion: When a VI is started? You don't mean executed, right? To me it's rather "when its front panel is open". Sorry I just find the word "started" very ambiguous here. When would you ever want to do that? I always want to control when a VI runs, either statically as a subvi on a block diagram, or through the proper VI Server method if it's a dynamic call. Do you mean that, for example, you would use a simple "Open FP" invoke node and that will run the VI automatically in addition to opening its front panel? Any other use case?

-

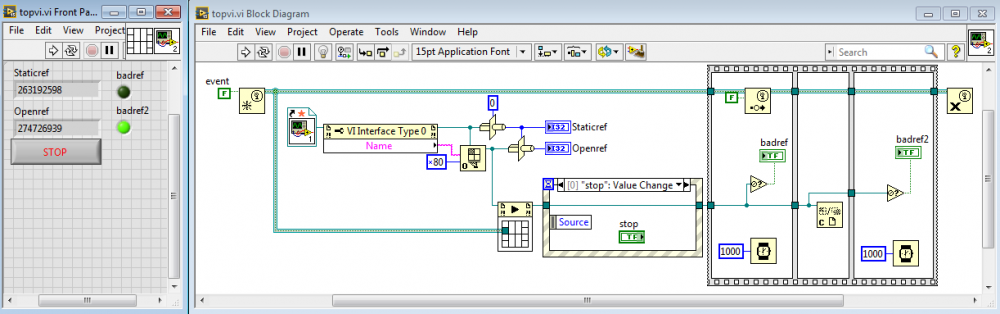

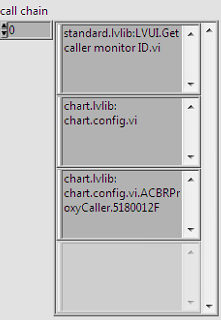

Is it possible to pass a static VI reference into Start Asynchronous Call?

eberaud replied to JKSH's topic in LabVIEW General

Reviving this discussion after being surprised by this behavior: (but now that I think of it I shouldn't have been surprised, it kinda makes sense!)

The reference I get through the Static reference is different from the one I get through Open VI Reference, even though the VI in question is not re-entrant. Does that mean I have 2 references to manipulate the same VI? It seems it's the reference that gets prepared for the asynchronous call and not the VI itself, since the Start Async function gives me an error if I wire Staticref instead of Openref to its input.

While on the ACBR topic, I just noticed that the subvi can't know which VI called it. In my screenshot, the caller of chart.config.vi calls it through an ACBR node, but the call chain shows that chart.config.vi.ACBRProxyCaller.5180012F was the caller...

-

One of the significant stones in my shoe when it comes to understanding LabVIEW is the difference between "Call", "Launch", "Load", and "Open". I'm not adding "Run" to this list, as it is more self-explanatory, at least I believe.

In the Execution window of a VI's Properties, we can see the following options:

"Auto handle menus at launch"
"Run when opened"

In the Customize Appearance window, we can see these other options:

"Show front panel when called"
"Show front panel when loaded"

I'm not asking for an explanation of those specific options, but I'd like a more general understanding of what the verbs "Call", "Launch", "Load", and "Open" mean.

Thanks!

-

That was a very good idea. I never liked those guys.

-

Definitely. The worst thing about the LabVIEW 1- or 2-button dialogs is that they use the root loop, so while one is displayed, many functions such as Open VI Reference just have to wait their turn to access the root loop, causing very undesirable behaviors! A few months ago I tracked down all those dialogs and replaced them with my own standard dialog box.

-

The factory pattern is great and I like to use it all over the place. I think your approach is good, and the variant method definitely works; I've seen it many times before. However, it requires you to be extra diligent, since a slight mismatch between what was put into the variant and the type used to cast it back into usable data would generate an error that could be tough to troubleshoot down the road. Unless you use a typedef, modifying the data type on one side won't automatically modify the other side.

One alternative would be to make the initialization data itself an object. You could have an abstract class "initialization data", and then child classes such as "InitDataClassA" and "InitDataClassB". Look at the decorator pattern, it might give you some ideas. Of course you're adding another layer of LVOOP, so there is a complexity trade-off.
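LabVIEW being graphical, here is a rough text-language analogy of the "initialization data as an object" idea, written in Python. It is only a sketch: `InitData`, `Device`, `ClassA`, `ClassB`, their fields, and `factory` are hypothetical names chosen for illustration, not anything from the original poster's code.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class Device(ABC):
    """Abstract product returned by the factory (hypothetical)."""

    @abstractmethod
    def describe(self) -> str:
        ...


class ClassA(Device):
    def __init__(self, port: str):
        self.port = port

    def describe(self) -> str:
        return f"ClassA on {self.port}"


class ClassB(Device):
    def __init__(self, address: int, timeout_ms: int):
        self.address = address
        self.timeout_ms = timeout_ms

    def describe(self) -> str:
        return f"ClassB at {self.address} (timeout {self.timeout_ms} ms)"


class InitData(ABC):
    """Abstract 'initialization data' parent class."""

    @abstractmethod
    def create(self) -> Device:
        """Build the concrete object that this data describes."""


@dataclass
class InitDataClassA(InitData):
    port: str  # typed field instead of an opaque variant

    def create(self) -> Device:
        return ClassA(self.port)


@dataclass
class InitDataClassB(InitData):
    address: int
    timeout_ms: int

    def create(self) -> Device:
        return ClassB(self.address, self.timeout_ms)


def factory(init: InitData) -> Device:
    # Dynamic dispatch on the init-data class selects the concrete product,
    # so there is no variant to cast back and no type mismatch to chase.
    return init.create()
```

With this shape, changing the fields of `InitDataClassB` breaks its own `create` method immediately, rather than surfacing later as a conversion error on the receiving side.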

-

This thread is not a question; I just wanted to share the experience I gained today while troubleshooting our application:

Symptom: Engine A encounters an error (expected, so no problem so far) and displays it to the user. Engine B, which is totally unrelated to engine A, freezes, and only comes back to life after the user acknowledges the error message from engine A.

Consequence: The software engineer (aka me) is pulling his hair out and yelling "what the h*** is going on in here?" Then he does some diligent troubleshooting and finds the culprit.

Explanation: Engine A calls the "Simple Error Handler" VI, which itself calls the "General Error Handler" VI. This VI analyzes the error and opens a pop-up when there is an error to display. Engine B calls a subvi which calls a subvi...........which calls a subvi which calls "General Error Handler". This subvi doesn't have any error, but still calls "General Error Handler" because it knows that if there is no error, "General Error Handler" will simply return without doing anything.

Problem: "General Error Handler" is not reentrant, meaning that while it's busy waiting for the pop-up it opened to be closed, it can't be used by the sub-sub...subvi of engine B. Therefore engine B is frozen.

Conclusion: Those error handlers are a great quick tool for creating super basic applications, but not appropriate at all for large, professional applications.

I'm pretty sure some of you will think "Well duh, we've known that since LabVIEW 1.0!".
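The blocking described above can be imitated in a text language. The Python sketch below is only an analogy, under the assumption that a non-reentrant VI behaves like a function guarded by a single lock; `general_error_handler`, `Popup`, and `run_demo` are made-up names, and nothing here is LabVIEW's actual implementation.

```python
import threading
import time

# One shared instance, one caller at a time: the lock stands in for
# the non-reentrant VI's single data space.
_handler_lock = threading.Lock()


class Popup:
    """Hypothetical stand-in for the modal error dialog."""

    def __init__(self):
        self.shown = threading.Event()
        self.acknowledged = threading.Event()


def general_error_handler(error, popup):
    with _handler_lock:
        if error:
            popup.shown.set()
            popup.acknowledged.wait()  # held until the user dismisses it
        # With no error the handler returns at once, but only after it has
        # managed to acquire the lock.


def run_demo(ack_delay=0.2):
    """Return how long engine B was stuck in its no-error call."""
    popup = Popup()
    timings = {}

    def engine_a():
        general_error_handler(True, popup)

    def engine_b():
        start = time.monotonic()
        general_error_handler(False, popup)
        timings["b_wait"] = time.monotonic() - start

    a = threading.Thread(target=engine_a)
    b = threading.Thread(target=engine_b)
    a.start()
    popup.shown.wait()        # engine A is now inside the handler
    b.start()
    time.sleep(ack_delay)     # the user takes a while to click OK
    popup.acknowledged.set()
    a.join()
    b.join()
    return timings["b_wait"]
```

Calling `run_demo(0.2)` returns a wait close to the acknowledgment delay, even though engine B had no error to report; that is the freeze seen in the application.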

-

proposal Migration of OpenG Projects to LabVIEW Project Libraries?

eberaud replied to jgcode's topic in OpenG General Discussions

Old thread, but it explains exactly what I've been working on for the last 3 weeks! I understand the concept of loading VIs into memory while in the dev environment. But what about building an executable? Let's say I have some VIs in a lvlib that are not used anywhere in a certain application I'm going to build. But since other VIs in that lvlib are being called, I see the lvlib and all its members in the dependencies. The unused VIs won't be loaded into memory, but are they going to be included in the executable?

-

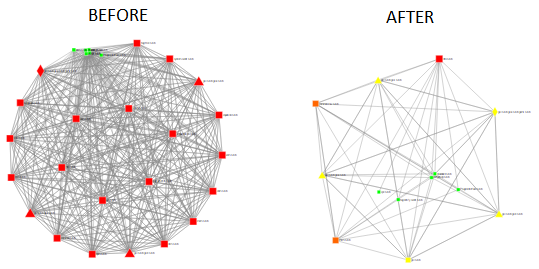

I started a new thread because I didn't want to hijack the great article about dependencies between classes: https://lavag.org/topic/19421-visualizing-dependencies-between-labview-classes/

Historically our code has contained numerous circular dependencies, where a member of library A would call a member of library B, and another member of library B would call another member of library A. As you know, this situation isn't great for at least 2 reasons (but I'd love to hear even more reasons from you):

1) There is no way to load just a basic library in a small project without loading almost all the source code of the application

2) There is no hope of being able to switch to Packed Project Libraries one day

After many days, I managed to refactor the code and got rid of almost all of the circular dependencies. I'd like to have a visual way to show the difference between the "before" and "after". The VI Hierarchy tool does show that, but there is just way too much going on to really make sense of what we're seeing. I'd like a similar tool that would only show the dependencies between libraries, without showing the details of the libraries' content.

Do you know of such a tool?

Thanks!
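For what it's worth, the library-level view described above boils down to collapsing VI-to-VI calls into library-to-library edges. Here is a minimal Python sketch of that reduction. It assumes the caller/callee pairs have already been exported somehow as qualified names of the form `Library.lvlib:Name.vi` (how to obtain them is left out), and `library_of`, `library_graph`, and `circular_pairs` are made-up helper names. It only reports direct A <-> B cycles, not longer chains.

```python
from collections import defaultdict


def library_of(vi_name):
    """Owning library of a qualified name such as 'A.lvlib:foo.vi'."""
    return vi_name.split(":", 1)[0]


def library_graph(vi_calls):
    """Collapse (caller, callee) VI pairs into library -> libraries edges."""
    graph = defaultdict(set)
    for caller, callee in vi_calls:
        src, dst = library_of(caller), library_of(callee)
        if src != dst:  # calls inside one library are not dependencies
            graph[src].add(dst)
    return dict(graph)


def circular_pairs(graph):
    """Library pairs that depend on each other in both directions."""
    pairs = set()
    for src, targets in graph.items():
        for dst in targets:
            if src in graph.get(dst, ()):
                pairs.add(tuple(sorted((src, dst))))
    return sorted(pairs)


# Illustrative data only: a "before" with a mutual dependency and an
# "after" where library B no longer calls back into library A.
before = [
    ("A.lvlib:a1.vi", "B.lvlib:b1.vi"),
    ("B.lvlib:b2.vi", "A.lvlib:a2.vi"),
    ("A.lvlib:a1.vi", "A.lvlib:a2.vi"),
]
after = [
    ("A.lvlib:a1.vi", "B.lvlib:b1.vi"),
    ("A.lvlib:a1.vi", "A.lvlib:a2.vi"),
]
```

Comparing `circular_pairs(library_graph(before))` with the same call on `after` gives a compact before/after summary, and the graph dictionaries could be fed to any graph-drawing tool.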

-

LabVIEW and side libraries (NI-XNET, NI-VISA, etc.)

eberaud replied to eberaud's topic in LabVIEW General

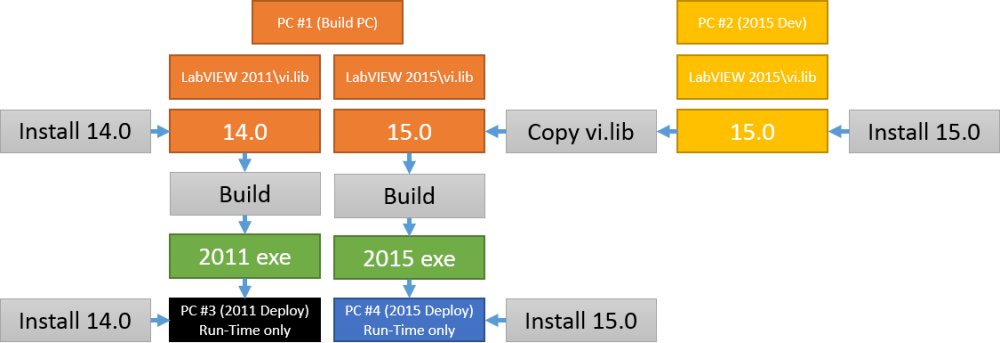

Thank you Rolfk! Seems like our best move is to use 2 PCs (whether through virtual machines or not). We do have to guarantee the quality, as those builds go to customers.

-

LabVIEW and side libraries (NI-XNET, NI-VISA, etc.)

eberaud replied to eberaud's topic in LabVIEW General

Thanks, I think you understood my question, but I thought I would post a picture to be a bit more explicit about what I intend to do:

-

Dear all,

My team and I are in the process of migrating our application from LV2011 to LV2015. During this process, we need to be able to make 2015 builds for testing purposes while continuing to build 2011 releases for our current customers. We have a dedicated build PC, and I initially wanted to use it to build both versions.

The issue is: version 15.0 of many drivers such as NI-CAN, NI-XNET, and NI-VISA doesn't support LV2011. When I installed version 15.0, the libraries were actually removed from the vi.lib folder of LV2011. The thing is, for the LV2015 version I do want to use the latest libraries (15.0).

Before trying to reach a solution, I would like to understand one thing about those libraries. When I install them, they do 2 things:

(A) Install the libraries in the vi.lib folder to be used by the development environment (Development PC)

(B) Install some resource files to be used by the run-time engine (Deployment PC, aka customer PC)

Here is the question: Can I use version 14.0 for (A) and 15.0 for (B)? I'd guess not, but I like to dare ask naive questions, you never know...

If I want to keep my ideal scheme (14.0 for LV2011 and 15.0 for LV2015), the 2 solutions I see would be:

1) I copy the folder from the vi.lib of another computer which has 15.0 installed to the LV2015 folder of the build PC which has 14.0 installed (so I don't install 15.0 per se)

2) OBVIOUSLY, I get a second build PC

Have you ever had to deal with those issues?

Thanks!