Posts: 4,996

Days Won: 311

Posts posted by ShaunR

-

39 minutes ago, Rolf Kalbermatter said:

I just can install the shared library with the 32 and 64 bit postfix alongside each other and be done, without having to abandon support for 8.6!!

Installing both binaries huh? Don't blame you. It takes me forever to get VIPM to not include a binary dependency so I can place the correct bitness at install time with the Post Install (64bit and 32bit have the same name. lol)

-

2 hours ago, hooovahh said:

I do occasionally have correspondence with him. He is aware of the spam issue and we've talked about turning on content approval. He seems to want to update the forums and just has limited bandwidth to do so. Until that happens I'll just keep cleaning up the spam that gets through.

The longer it is unresolved, the less likely users will bother to return. I used to check the forums every day. Now it is every couple of weeks. Soon it will be never.

Some might see that as a bonus

-

18 hours ago, Rolf Kalbermatter said:

It's a valid objection. But in this case with the full consent of the website operator. Even more than that, NI pays them for doing that.

The objection is that I (as a user) do not have end-to-end encryption (as advertised by the "https" prefix) and there is no guarantee that the traffic is not decrypted, logged and analysed before going on to the final server. And that's not just a single server, it's all servers behind Cloudflare, which would make data mining correlation particularly useful to adversaries.

Therefore I refuse to use any site that sits behind Cloudflare and my Browsers are configured in such a way that makes it very hard to access them so that I know when a site uses it. If I need the NI site (to download the latest LabVIEW version for example) then I have to boot up a VM configured with a proxy to do so. I refuse to use the NI site and the sole reason is Cloudflare.

So now you know how you can get rid of me from Lavag.org - put it behind Cloudflare.

-

On 8/14/2024 at 10:37 AM, Rolf Kalbermatter said:

Now, spending substantial time on their forum is another topic that could spark a lot of discussion

Any site that uses Cloudflare is completely safe from me using it. As far as I'm concerned it is a MitM attack.

-

1 hour ago, Rolf Kalbermatter said:

Maybe there is an option in the forum software to add some extra users with limited moderation capabilities. Since I was promoted on the NI forums to be a shiny knight, I have one extra super power in that forum and that is to not just report messages to a moderator but to actually simply mark them as spam. As I understand it, it hides the message from the forum and reports it to the moderators who then have the final say if the message is really bad. Something like this could help to at least make the forum usable for the rest of the honorable forum users, while moderation can take a well deserved night of sleep and start in the morning with fresh energy. 🤗 It only would take a few trusted users around the globe to actually keep the forum fairly neat (unless of course a bot starts to massively target the forum. Then having to mark one message a time is a pretty badly scaling solution).

Not exactly a software solution though. I wrote a plugin for my CMS that uses Project Honeypot so it's not that difficult and this is supposed to be a software forum, right?

The problem in this case, however, seems to be that it's an exploit; it needs a patch. Demoting highly qualified (and expensive) software engineers to data entry clerks sounds to me like an accountant's argument (leverage free resource). I'd rather the free resource was leveraged to fix the software or we (the forum users) pay for the fix.

The sheer chutzpah of NI to make you a no-cost employee to clear up their spam is, to me, astounding. What's even more incomprehensible is that they have also convinced you it's a privilege.

-

Port 139 is used by NetBIOS. The person in the video is using port 8006 which is not used by any other programs.

-

12 hours ago, hooovahh said:

Also just so others know, you don't have to report every post and message by a user. When I ban an account it deletes all of their content so just bringing attention to one of the spam posts is good enough to trigger the manual intervention.

By now, you really shouldn't have to be deleting them manually.

If it's an exploit then it should have been patched already (within 24hrs is usual). If it's just spam bots beating CAPTCHA then maybe we can help with a proper spam plugin (coding challenge?). This is a software engineering forum and if we can't stop bots posting after a week then what kind of software engineers are we?

It's also quite clear to me that this is no more than a script kiddie. You can watch the evolution of the posts where originally they had unfilled template fields that, as time went on, became filled.

-

1 hour ago, LogMAN said:

They probably went to bed for a few hours, now they are back. Is CAPTCHA an option for posting new messages?

They are signing up when new registrations are disabled. It looks like an exploit.

-

3 hours ago, ensegre said:

well, NSV are out of cause here first because it's a linux distributed system, second because of their own proven merits 😆...

The background is this, BTW. We have 17 PCs up and running as of now, expected to grow to 40ish. The main business logic, involving the production of control process variables, is done by tens of Matlab processes, for a variety of reasons. The whole system is a huge data producer (we're talking of TBs per night), but data is well handled by other pipelines. What I'm concerned with here is monitoring/supervision/alerting/remediation. Realtiming is not strict, latencies of the order of seconds could even be tolerated. Logging is a feature of any SCADA, but it's not the main or only goal here; this is why I'd be happy with a side Tango or whatever module dumping to a historical database, but I would not look in the first place into a model "first dump all to local SQLs, then reread them and merge and ponder about the data". I'd think that local, in-memory PV stores, local first level remediation clients, and centralized system health monitoring is the way to go.

As for the jenga tower, the mixup of data producers is life, but it is not as if EPICS or Tango come without a proven reliability pedigree! And of course I'd choose only one ecosystem; I'm at the stage of choosing which.

ETA: as for redis I ran into this. Any experience?

I've no experience with Redis.

For monitoring/supervision/alerting, you don't need local storage at all. MQTT might be worth looking at for that.

Sorry I couldn't be more helpful, but it looks like a cool project.

-

13 hours ago, ensegre said:

Reviving this thread. I'm looking for a distributed PV solution for a setup of some tens of linux PCs, each one writing some ten of tags at a rate of a few per sec, where the writing will mostly be done by Matlab bindings, and the supervisory/logging/alerting whatnot by clients written in a variety of languages not excluding LV. OSS is not strictly mandatory but essentially part of the culture.

I would be looking at REDIS, EPICS and Tango-controls (with its annexes Sardana, Taurus) in the first place, but I haven't yet delved into them in order to compare their merits. In fact I had a project where I interfaced with Tango some years ago, and I contributed to cleaning up the official set of LV bindings then. As for EPICS, linux excludes the usual Network Shared Variables stuff (or the EPICS i/o module), but I found for example CALab which looks spot on. Matlab bindings seem available for the three. The ability to handle structured data vs. just double or logical PVs may be a discriminant, if one solution is particularly limited in that respect.

Has anyone recommendations? Is anyone aware of toolkits I could leverage onto?

My tuppence is that anything is better than Network Shared Variables. My first advice is to choose one or two, not a jenga tower of many.

What's the use-case here? Is the data real-time (you know what I mean) over the network? Are the devices dependent on data from another device or is the data accumulated for exploitation later? If it's the latter, I would go with SQLite locally (for integrity and reliability) and periodic merges to MySQL [Maria] remotely (for exploitation). Both of those technologies have well established API's in almost all languages.
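As a rough illustration of the "log locally, merge periodically" idea, here is a minimal Python sketch. It rests on several assumptions: the `samples` schema, the `merged` flag and every function name are hypothetical, and a second SQLite database stands in for the remote MySQL/MariaDB server so that the example runs with the standard library only.

```python
import sqlite3


def open_local(path=":memory:"):
    """Open the node-local store (hypothetical schema)."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS samples ("
        " id INTEGER PRIMARY KEY, tag TEXT, ts REAL, value REAL,"
        " merged INTEGER NOT NULL DEFAULT 0)"
    )
    return db


def open_central(path=":memory:"):
    """Open the central store; SQLite stands in for MySQL/MariaDB here."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS samples ("
        " node TEXT, local_id INTEGER, tag TEXT, ts REAL, value REAL,"
        " PRIMARY KEY (node, local_id))"
    )
    return db


def log_sample(local, tag, ts, value):
    """Write one sample to the local store inside its own transaction."""
    with local:
        local.execute(
            "INSERT INTO samples (tag, ts, value) VALUES (?, ?, ?)",
            (tag, ts, value),
        )


def merge(local, central, node):
    """Copy not-yet-merged rows to the central store, then flag them locally."""
    rows = local.execute(
        "SELECT id, tag, ts, value FROM samples WHERE merged = 0 ORDER BY id"
    ).fetchall()
    if not rows:
        return 0
    with central:
        # OR IGNORE makes a retried merge harmless if the flag update was lost.
        central.executemany(
            "INSERT OR IGNORE INTO samples (node, local_id, tag, ts, value)"
            " VALUES (?, ?, ?, ?, ?)",
            [(node, *row) for row in rows],
        )
    with local:
        local.executemany(
            "UPDATE samples SET merged = 1 WHERE id = ?",
            [(row[0],) for row in rows],
        )
    return len(rows)
```

Each node would call `log_sample` as data arrives and run `merge` on a timer. Because the central key is `(node, local_id)`, repeating a merge after a network failure should not duplicate rows.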

-

4 minutes ago, Mads said:

Oh, I knew. I just like to poke dormant threads (keeps the context instead of duplicating it into a new one) until their issue is resolved. Only then can they die, and live on forever in the cloud😉

I can't even find threads I posted in last week. It's a skill, to be sure.

-

46 minutes ago, Mads said:

The "LVShellOpen"-helper executable seems to be the de-facto solution for this, but it seems everyone builds their own. Or has someone made a template for this and published it?

Seems like a good candidate for a VIPM package.

(The downside might be that NI then never bothers making a more integrated solution, but then again such basic features have not been on the roadmap for a very long time 🤦‍♂️...)

-

10 hours ago, mcduff said:

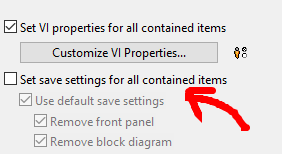

Excuse my ignorance and stupidity, but I never really understood the following settings in the Compile Settings. I always leave them as the default value. Do those settings remove both Front and Diagram from VIs in the EXE? I know the setting may affect debugging an EXE file and possibly some of the tools to reconstruct VIs from an EXE, but should I be checking or not checking those options in an EXE? Thanks

These are for shared libraries.

-

Quote

- Use default save settings: Saves the VIs using default save settings. The default save setting for the VIs you add to the Exported VIs and Always Included listboxes on the Source Files page is to remove the block diagram. The default for all other VIs is to remove the block diagram and the front panel. Remove the checkmark from this checkbox to change the default settings for each item you select in the Project Files tree.
- Remove front panel: Removes the front panel from a VI in the build. Removing the front panel reduces the size of the application or shared library. If you select yes, LabVIEW removes the front panel, but Property Nodes or Invoke Nodes that refer to the front panel might return errors that affect the behavior of the source distribution. LabVIEW enables this option if you remove the checkmark from the Use default save settings checkbox.
  Note: If you execute the Run VI method after placing a checkmark in the Remove front panel checkbox, the Run VI method will return error 1013.
- Remove block diagram: Removes the block diagram from a VI in the build. LabVIEW enables this option if you remove the checkmark from the Remove front panel checkbox. If you remove the front panel, you also remove the block diagram. As a result, if you place a checkmark in the Remove front panel checkbox, a checkmark automatically appears in the Remove block diagram checkbox.

-

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

As examples, why should the following routines have a GUI?

Because I can immediately test the correctness of any of those VI's by pressing run and viewing the indicators.

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

But debugging is generally a very short stage in the lifetime of a VI.

Nope. That's just a generalisation based on your specific workflow.

If you have a bug, you may not know what VI it resides in and bugs can be introduced retrospectively because of changes in scope. Bugs can arise at any time when changes are made and not just in the VI you changed. If you are not using blackbox testing and relying on unit tests, your software definitely has bugs in it and your customers will find them before you do.

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

If I need to debug my VI, I will use a Front Panel during the debugging session.

Again. That's just your specific workflow.

The idea of having "debugging sessions" is anathema to me. I make a change, run it, make a change, run it. That's my workflow - inline testing while coding along with unit testing at the cycle end. The goal is to have zero failures in unit testing or, to put it another way, unit and blackbox testing is the customer! Unlike most of the text languages, we have just-in-time compilation - use it.

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

Once I’m happy that the VI works well

I can quantitatively do that, without running unit tests, by using a front panel. What's your metric for being happy that a VI works well without a front panel? Passes a unit test?

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

A VI may exist in a codebase for 30 years, out of which it may be debugged for a total of 1 hour.

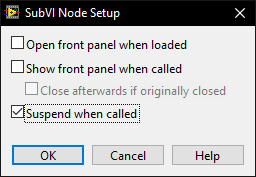

It may be in the codebase for 30 years but when debugging I may need to use the suspend (see below) to trace another bug through that and many other VI's.

There is a setting on subVI's that allows the FP to suspend the execution of a VI, so you can modify the data and run it over and over again while the rest of the system carries on. This is an invaluable feature and it requires a front panel.

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

Our applications are bloated. They carry around lots of FP-related state data and/or settings for each and every VI that is part of the application, even for the VIs that will never show their Front Panel to the user once the EXE is built. This is extremely wasteful in terms of memory and disk usage

This is simply not true and is a fundamental misunderstanding of how exe's are compiled.

Can't wait for the complaint about the LabVIEW garbage collector.

On 7/6/2024 at 12:23 PM, Petru_Tarabuta said:

Diagram-only VIs would constitute a significant improvement

We'll agree to disagree.

-

ECL Version 4.5.0 was released with CoAP support.

Next on the hit-list will be QUIC (when it's supported by OpenSSL properly).

I'm also thinking of opening up the Socket VI's as a public API so people can create their own custom protocols.

-

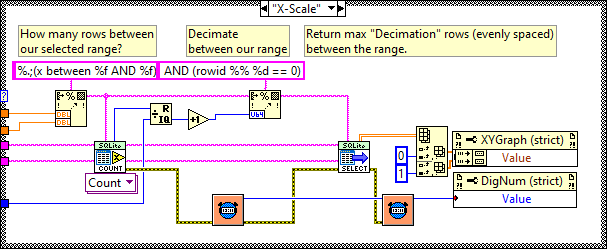

You want a table per channel.

If you want to decimate, then use something like (rowid %% %d == 0), where %d is the decimation number of points (the %% is an escaped % in the format string, so the SQL that reaches SQLite reads rowid % N == 0). The graph display will do bilinear averaging if there are more points than the number of pixels it can show, so don't bother with that unless you want a specific type of post analysis. Be aware of aliasing though.
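For readers who prefer text code, the modulo-on-rowid decimation can be sketched in Python with the standard `sqlite3` module. This is only an illustration under assumptions: the table name `channel_0`, the `value` column and the way the step is derived from a target point count are all hypothetical and not taken from the LabVIEW example.

```python
import sqlite3


def decimated(db, table, lo, hi, max_points):
    """Return roughly max_points rows from the rowid range [lo, hi]."""
    if not table.isidentifier():
        # Table names cannot be bound as SQL parameters, so validate first.
        raise ValueError("unexpected table name")
    span = hi - lo + 1
    step = max(1, -(-span // max_points))  # ceiling division, never below 1
    sql = (
        f"SELECT rowid, value FROM {table} "
        "WHERE rowid BETWEEN ? AND ? AND rowid % ? == 0"
    )
    return db.execute(sql, (lo, hi, step)).fetchall()


def make_demo_db(n_rows=1000):
    """Build an in-memory database with one table for one channel."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE channel_0 (value REAL)")
    db.executemany(
        "INSERT INTO channel_0 (value) VALUES (?)",
        [(float(i),) for i in range(n_rows)],
    )
    db.commit()
    return db
```

Calling `decimated(db, "channel_0", lo, hi, 100)` with the zoomed range keeps the result set near the display width. Picking every Nth row does no filtering, so the aliasing caveat still applies.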

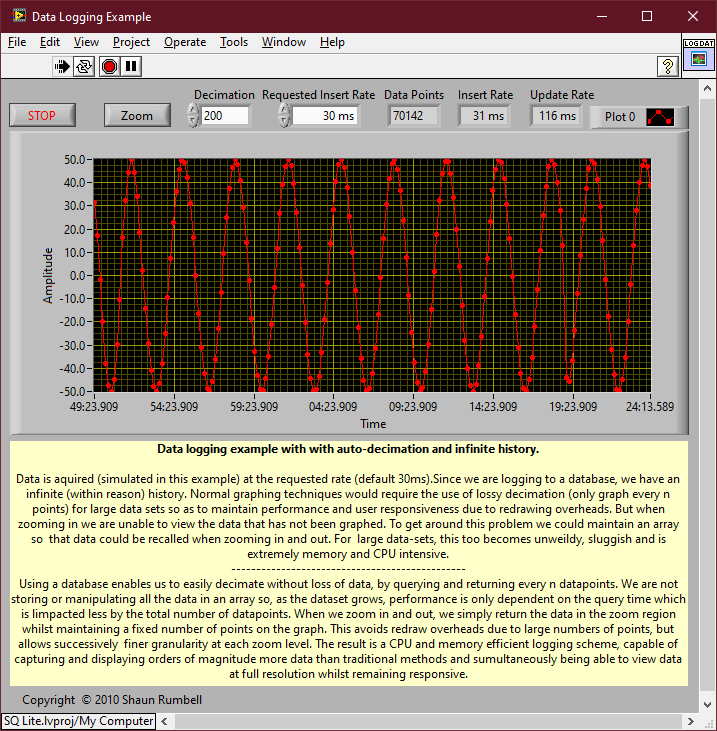

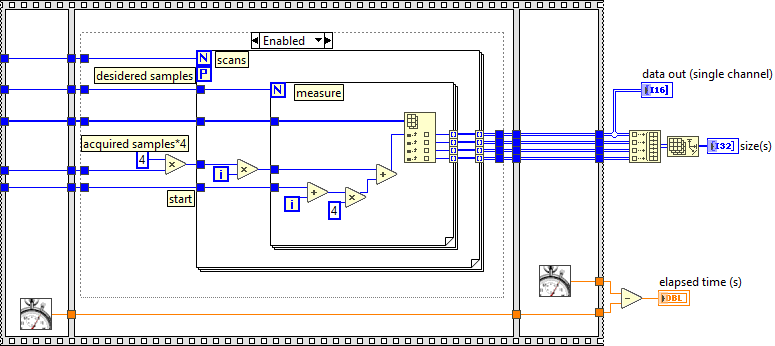

The above is a section of code from the following example. You are basically doing a variation of it. It selects a range and displays Decimation number of points from that range but range selection is obtained by zooming on the graph rather than a slider. The query update rate is approximately 100ms and it doesn't change much for even a few million data points in the DB.

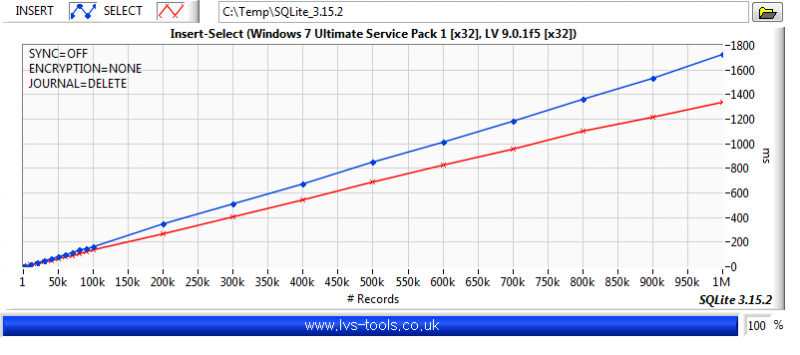

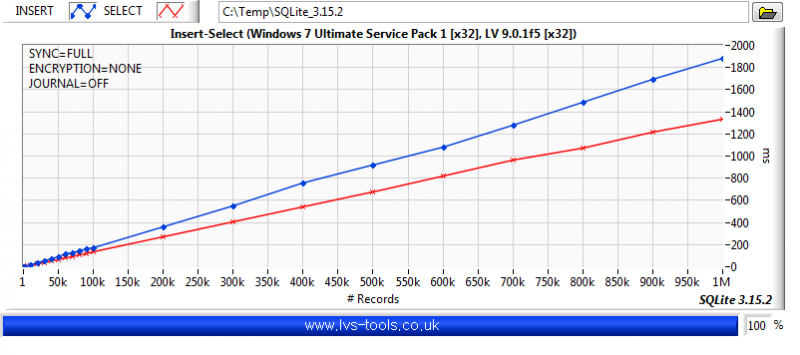

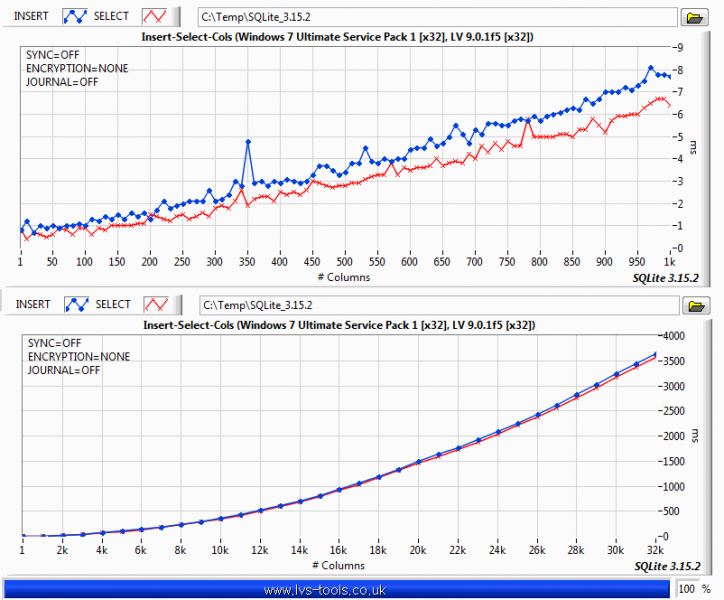

It was a few versions ago but I did do some benchmarking of SQLite. So to give you some idea of what affects performance:

-

I'm not sure what you mean by "not fully transparent" but if you want to get rid of the border you can do something like this.

-

13 minutes ago, Rolf Kalbermatter said:

For someone used to program to the Windows API, this situation is nothing short of mind boggling.

Not so much mind boggling - I used to support VxWorks. It's not just Apple OS's though. Linux is similar. The same mind-set pervades both ecosystems. I used to support Mac, Linux and Windows for my binary based products because LabVIEW made it look easy. Mac was the first to go (nobody used it anyway) then Linux went (they are still in denial about distribution).

-

On 5/15/2024 at 5:13 PM, infinitenothing said:

The problem is that some day the customer will buy a new Apple laptop and that new laptop will not support LV2023. We need maintenance releases of LabVIEW RTE to keep it all working.

It doesn't have to. Just back-save to a version that supports the OS then compile under that version. If you are thinking about forward compatibility then all languages gave up looking for that unicorn many years ago.

54 minutes ago, Rolf Kalbermatter said:

It seems they are going to make normal ordering of perpetual licenses possible again.

That is excellent news.

-

This wouldn't be much of an issue since you could always use an older version of LabVIEW to compile for that customer. However, LabVIEW is now subscription based, so hopefully you have kept copies of your old LabVIEW installation downloads.

-

Anyone interested in QUIC? I have a working client (OpenSSL doesn't support server-side ATM but will later this year).

I feel I need to clarify that when I say I have a working client, that's without HTTP3 (just the QUIC transport). That means the "Example SSL Data Client" and "Example SSL HTTP Client TCP" can use QUIC but things like "Example SSL HTTP Client GET" cannot (for now).

If you are interested, then now's the time to put in your use-cases, must haves and nice-to-haves.

I'm particularly interested in the use-cases as QUIC has the concept of multiplexed streams so may benefit from a complete API (similar to how the SSH API has channels) rather than just choosing between TLS/DTLS/QUIC as it now operates.

-

2 hours ago, Bruniii said:

Thanks for trying!

How "easy" is it to use GPUs in LabVIEW for this type of operation? I remember reading that I'm supposed to write the code in C++, where the CUDA API is used, compile the DLL and then use the LabVIEW toolkit to call the DLL. Unfortunately, I have zero knowledge of basically all these steps.

There is a GPU Toolkit if you want to try it. No need to write wrapper DLL's. It's in VIPM so you can just install it and try. Don't bother with the download button on the website; it's just a launch link for VIPM and you'd have to log in.

One afterthought. When benchmarking you must never leave outputs unwired (like the 2d arrays in your benchmark). LabVIEW will know that the data isn't used anywhere and optimise to give different results than when in production. So you should at least do something like this:

On my machine your original executed in ~10ms. With the above it was ~30ms.

-

Nope. I can't beat it. To get better performance I expect you would probably have to use different hardware (FPGA or GPU).

Self auto-incrementing arrays in LabVIEW are extremely efficient and I've come across the situation previously where decimate is usually about 4 times slower. Your particular requirement requires deleting a subsection at the beginning and end of each acquisition so most optimisations aren't available.

Just be aware that you have a fixed number of channels and hope the HW guys don't add more or make a cheaper version with only 2.

-

Post the VI's rather than snippets (snippets don't work on Lavag.org) along with example data. It's also helpful if you have standard benchmarks that we can plug our implementation into (sequence structure with frames and getmillisecs) so we can compare and contrast.

e.g
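A text-language analogue of such a plug-in benchmark might look like the Python sketch below. It is not the LabVIEW harness itself: `benchmark` and `drop_edges_and_decimate` are hypothetical names, the candidate function is only a placeholder, and `time.perf_counter` plays the role of the millisecond timer. The result of every run is consumed so that the work being timed is actually used.

```python
import time


def benchmark(func, data, repeats=5):
    """Time func(data) several times; return (best_ms, checksum)."""
    best_ms = float("inf")
    checksum = 0
    for _ in range(repeats):
        start = time.perf_counter()
        result = func(data)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        best_ms = min(best_ms, elapsed_ms)
        # Consume the output so the timed work is not left unused.
        checksum = sum(result)
    return best_ms, checksum


def drop_edges_and_decimate(samples, trim=2, step=4):
    """Placeholder candidate: trim both ends, then keep every step-th item."""
    return samples[trim:len(samples) - trim:step]
```

Anyone proposing an alternative would pass their own function to `benchmark` with the same example data, so timings and checksums can be compared like for like.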

unzip.vi fails to unzip lvappimg (in Linux)

I have the binaries as arrays of bytes in the Post Install. I convince VIPM to not include the binary dependency and then write out the binary from the Post Install. You can check in the Post install which bitness has invoked it to write out the correct bitness binary. Not sure if that would work on Linux though.
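The same idea, expressed as a small Python sketch rather than a VIPM Post Install VI: both binaries are carried as byte arrays and the one matching the caller's bitness is written out. Everything here is illustrative. The payloads are placeholders, and `EMBEDDED`, `caller_bitness`, `write_library` and the file name `mylib.dll` are hypothetical.

```python
import struct
from pathlib import Path

# Placeholder payloads; a real installer would embed the actual library bytes.
EMBEDDED = {
    32: b"placeholder 32-bit library",
    64: b"placeholder 64-bit library",
}


def caller_bitness():
    """Pointer size of the running process, in bits."""
    return struct.calcsize("P") * 8


def write_library(target_dir, name="mylib.dll", bitness=None):
    """Write the embedded binary that matches the requested or detected bitness."""
    bits = caller_bitness() if bitness is None else bitness
    if bits not in EMBEDDED:
        raise ValueError(f"no embedded binary for {bits}-bit")
    path = Path(target_dir) / name
    path.write_bytes(EMBEDDED[bits])
    return path
```

Because both variants share one file name on disk, only the variant for the invoking bitness is ever written.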