
Posts: 323

Days Won: 6


Everything posted by GregFreeman

-

Crashing Inheritance Dialog

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

I think I did. But the problem was that LabVIEW didn't actually crash; it just hung, and I had to Ctrl+Alt+Delete out of it. Does that still generate the crash report you're looking for? When I get a chance, I will try to narrow down the class/directory that is causing the issue. I can then zip it up and send it to NI if that's helpful. It may get cumbersome if I need to attach all my classes.

Not necessarily an answer to your question, but if I had that many cases, I would start to rethink my architecture. It looks like you are handling too much in a single loop; you may want to consider more dedicated processes. The only case I can think of that might legitimately have this many cases is a message mediator that handles/routes all messages in the system.
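As a rough illustration of the message-mediator idea, here is a minimal Python sketch, assuming each dedicated process owns an inbox queue and registers for the message types it handles. The `Mediator` class and its methods are hypothetical and not taken from any LabVIEW framework.

```python
import queue
from collections import defaultdict


class Mediator:
    """Routes each message to the inbox of every process registered for its type."""

    def __init__(self) -> None:
        # message type -> inboxes of the dedicated processes that handle it
        self._routes: dict[str, list[queue.Queue]] = defaultdict(list)

    def register(self, msg_type: str, inbox: queue.Queue) -> None:
        self._routes[msg_type].append(inbox)

    def route(self, msg_type: str, payload: object) -> int:
        """Deliver the message and return how many inboxes received it."""
        inboxes = self._routes.get(msg_type, [])  # .get avoids creating empty routes
        for inbox in inboxes:
            inbox.put((msg_type, payload))
        return len(inboxes)


# Each dedicated process handles a few message types instead of one loop handling all of them.
daq_inbox: queue.Queue = queue.Queue()
logger_inbox: queue.Queue = queue.Queue()

mediator = Mediator()
mediator.register("start_acquisition", daq_inbox)
mediator.register("log", logger_inbox)
mediator.register("start_acquisition", logger_inbox)  # the logger also records starts

delivered = mediator.route("start_acquisition", {"rate_hz": 1000})
```

Only the mediator knows the full routing table; each process's loop stays small.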

-

Dealing with State in Message Handlers

GregFreeman replied to AlexA's topic in Application Design & Architecture

You may not find your answer here, but you may find some of the discussion relevant, especially at the end of page 1 and on page 2.

I am seeing a weird issue where my inheritance dialog doesn't load and LabVIEW just freezes. The dialog opens, but it only shows the default "vehicle, truck," etc. in the tree. I will try a repair tomorrow.

I assumed it might be project corruption, so I reverted to a version from a couple of days ago, but that didn't work. The strange thing is that if I create a new project and drag my RT target from the bad project into it, the inheritance dialog works. But as soon as I save the new project and restart LabVIEW, I run into the same issue. I get this in the dialog when LabVIEW restarts:

The last time you ran LabVIEW, internal warning 0x1472AD65 occurred in objparser.cpp.

Right now I'm wary of testing with other projects to see if they have the same problem, for fear they will run into the same issue; I'm trying to minimize the number of projects affected by this. Has anyone run into a similar issue before? I have also attached the dumps from the crash in case anyone from R&D wanders by and sees this.

Edit: I just tried to reproduce this by creating a project from scratch and haven't been able to yet. If I can, I will post that project.

Edit 2: I started adding files/folders one at a time to a blank project and found the one that's causing the crash: my "classes" folder, which holds most of my classes. I doubt anyone can directly help me from this point; there must be something in one of those class files that's causing the problem.

lvlog2013-06-16-15-14-32.txt
lvlog2013-06-16-15-01-56.txt

-

Are You a Humble Programmer?

GregFreeman replied to brian's topic in Application Design & Architecture

Saying I'm guilty of number 2 is an understatement.

Our general consensus on NSVs: for critical data, don't use them. For non-critical data, sure, use them (but you already have other communication set up for the critical data, so why not just use that?). I don't know the exact number, but at some point we have noticed NSV issues when a system has a large number of them. I recently wrote an object-based TCP client/server, so I just use that for everything now. Once it's written, you don't have to spend time writing TCP communication again. IMO your time is better spent doing something like this to avoid potential headaches down the road, and scrapping NSVs altogether.
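The post doesn't show the object-based TCP client/server itself. As a sketch of the kind of reusable core such a library usually wraps, here is length-prefixed message framing over TCP in Python, with a loopback echo server for demonstration. The 4-byte big-endian length header is an assumption, not necessarily the author's protocol.

```python
import socket
import struct
import threading

HEADER = struct.Struct("!I")  # assumed framing: 4-byte big-endian payload length


def send_msg(sock: socket.socket, payload: bytes) -> None:
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; TCP may deliver them in several chunks."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed connection")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> bytes:
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


def echo_server(listener: socket.socket) -> None:
    """Accept one client and echo each framed message until it disconnects."""
    conn, _ = listener.accept()
    with conn:
        try:
            while True:
                send_msg(conn, recv_msg(conn))
        except ConnectionError:
            pass  # client closed the connection


listener = socket.create_server(("127.0.0.1", 0))  # port 0: let the OS pick a free port
port = listener.getsockname()[1]
threading.Thread(target=echo_server, args=(listener,), daemon=True).start()

with socket.create_connection(("127.0.0.1", port)) as client:
    send_msg(client, b"setpoint=42")
    reply = recv_msg(client)
listener.close()
```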

-

I have a config file that configures a specific process in my application. As far as I can see, I have two options to help with encapsulation. One is to let the process that uses the file handle it: that process's class would have read config, save config, etc. methods, which would not be public. Or, I could wrap up the functionality in its own class, give its methods community scope (a friend can call community-scoped methods but not protected ones), and make the process that uses it a friend. Any pros/cons of each approach? Or am I splitting hairs?
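A minimal Python sketch of the second option, assuming a JSON file and a hypothetical `AcquisitionProcess` as the only intended caller. Python has no friend or community scope, so leading underscores only approximate LabVIEW's access control here.

```python
import json
import tempfile
from pathlib import Path


class ProcessConfig:
    """Option B: config file I/O lives in its own class, used only by the owning process."""

    def __init__(self, path: Path) -> None:
        self._path = path

    def _load(self) -> dict:
        # Underscore = "internal" by convention only; nothing enforces it at run time.
        if not self._path.exists():
            return {"rate_hz": 1000}  # hypothetical default
        return json.loads(self._path.read_text())

    def _save(self, settings: dict) -> None:
        self._path.write_text(json.dumps(settings))


class AcquisitionProcess:
    """Hypothetical process: the only intended caller of ProcessConfig's internal methods."""

    def __init__(self, config: ProcessConfig) -> None:
        self._config = config
        self.settings = config._load()

    def change_rate(self, rate_hz: int) -> None:
        self.settings["rate_hz"] = rate_hz
        self._config._save(self.settings)


with tempfile.TemporaryDirectory() as tmp:
    cfg_path = Path(tmp) / "acq.json"
    proc = AcquisitionProcess(ProcessConfig(cfg_path))
    first_rate = proc.settings["rate_hz"]  # default, since no file exists yet
    proc.change_rate(500)
    reloaded_rate = AcquisitionProcess(ProcessConfig(cfg_path)).settings["rate_hz"]
```

One trade-off this makes visible: the separate class can be tested, or its file format changed, without touching the process, at the cost of one more class to maintain.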

-

You're in Austin; just walk over to building C and drop it in someone's mailbox. But seriously, when I have stuff like this, I go the online route so that I can work on other things until they get back to me. Although the AE support is decent, it's usually a case of "try these 10 things" (which you already have), "let me check the internal knowledge bases," and then it gets escalated. I'd prefer to let them just crank on it and push it through the necessary channels without a phone conversation.

-

Variants in Object messages and jitter

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

I think I may have been too quick to post to the forums. It looks like this might be a development-environment issue, because I am not running into it when the code is deployed.

Variants in Object messages and jitter

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

Well, I'm having trouble getting it to reproduce on Windows in a similar fashion. AQ, I can release the code to NI (although I can't release it publicly) if someone would find it worth taking a look at. It can be reproduced easily by switching a Diagram Disable structure on/off in my code, as long as someone has the same cRIO setup that I have, since the affected loop reads data from the FPGA. I know this is a long shot that you guys most likely won't have time for, but if someone is bored...

Variants in Object messages and jitter

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

AQ, I don't think anyone thought it would actually work... but I was grasping at straws and willing to try anything. Eventually I hope to get something that reproduces this on a smaller scale, and I'll post it. I suspect it may be tough to reproduce reliably on Windows, but maybe not. This may also be some odd anomaly of running the RT code in the development environment rather than deployed. Thanks, Mike. I'll take a look.

So I ran into an interesting problem and workaround the other day. I haven't had time to reproduce it or do any benchmarking, but hopefully I will at some point. I wanted to see if anyone had thoughts on this. I have a message object that carries a variant, and this object gets sent via a user event. What I was seeing was "serious" jitter (I know serious is relative, but 10-20 ms) in a loop parallel to the one actually sending the data. This jitter was causing my DMA FIFO to overflow. (It should be noted that the parallel loop suffering the jitter was already running at a fast rate, a 10 ms period.) Using Diagram Disable structures, I finally narrowed down the cause of the jitter to sending an object with a cluster in the variant. Per my coworker's suggestion, I tried flattening the cluster to a string first and putting that string in the variant instead, and the jitter went away. Can anyone think of why this might be?
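LabVIEW's Flatten To String and Unflatten From String have no direct text-language equivalent. As a loose Python analogy, `struct` can pack a cluster-like record into one opaque byte string before it goes into a message and unpack it on the receiving side. The record layout is hypothetical, and this only shows the shape of the workaround; it doesn't explain the jitter.

```python
import struct
from typing import NamedTuple

# Hypothetical cluster layout: U16 channel, U32 sample count, DBL gain.
# ">" = big-endian with no padding, matching LabVIEW's default flattened byte order.
RECORD = struct.Struct(">HId")


class ChannelCluster(NamedTuple):
    channel: int
    samples: int
    gain: float


def flatten(c: ChannelCluster) -> bytes:
    return RECORD.pack(c.channel, c.samples, c.gain)


def unflatten(blob: bytes) -> ChannelCluster:
    return ChannelCluster(*RECORD.unpack(blob))


original = ChannelCluster(channel=3, samples=1024, gain=2.5)
message = {"type": "channel_update", "data": flatten(original)}  # opaque string in the "variant"
received = unflatten(message["data"])
```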

-

My counterargument would be: then don't hire that person (assuming they wouldn't be willing to learn to understand them).

Stop using Lossy Enqueue Element and this problem will go away.
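For reference, when the queue is full, Lossy Enqueue Element discards the element at the front of the queue to make room for the new one. Python's `collections.deque` with `maxlen` drops elements the same way, which makes the silent data loss easy to see in a small sketch:

```python
from collections import deque

queue_size = 3
lossy_queue: deque[int] = deque(maxlen=queue_size)  # when full, append() drops from the left (front)

dropped = []
for sample in range(1, 6):
    if len(lossy_queue) == queue_size:
        dropped.append(lossy_queue[0])  # record what is about to be discarded, like an "overflow?" flag
    lossy_queue.append(sample)

remaining = list(lossy_queue)
```

Samples 1 and 2 are gone without any error at the consumer, which is why lossy enqueue can make a problem look like it lies elsewhere.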

-

I disagree with Darin a little. I think there are valid times when you should comment on what the code is doing. Sometimes what is self-documenting to you is not as self-documenting to others. I run into this commonly with bit manipulation and bitwise functions, and lately even more so with FPGA code. Often, if someone had just left a comment like "this checks if bit 4 changed," I would understand quickly, instead of trying to read the ANDs, ORs, and Scale By Power Of 2 functions that my mind doesn't yet process as easily. Maybe this is my own shortcoming, but I still think it's worth noting. The problem is deciding when something is self-documenting in general, such that the majority of programmers would agree, versus self-documenting only to yourself (code reviews, anyone?). I find myself looking at my own code and thinking "that makes sense," forgetting that it took me 3 hours to get it to work. Anyway, this is just one example, and I think it touches on the same thing Cat is saying above. One thing I always document is the random "increment" or "decrement" function. C'mon, we've all seen this: the code works, yet we wonder wth that is there for!
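As an illustration of the kind of comment the post asks for, here is a Python sketch of a "did bit 4 change?" check, assuming bits are counted from 0 (so bit 4 has the mask 0x10). The function name is hypothetical.

```python
BIT_4 = 1 << 4  # bit 4, counting from bit 0 (mask 0x10); a left shift is what Scale By Power Of 2 does to integers


def bit4_changed(previous: int, current: int) -> bool:
    """Return True if bit 4 differs between two readings."""
    # XOR leaves 1s only where the readings differ; AND with the mask keeps just bit 4.
    return bool((previous ^ current) & BIT_4)
```

The one-line docstring says what the two operators together mean, which is the part that is hard to read off a diagram of ANDs and XORs.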

-

I heard an interesting idea at the CLA Summit, although I haven't tried it myself, so I don't know how well it works. Each time they launch the app, they monitor the change in Windows memory usage from the start to the end of loading and log it to a file. Then, each time the application is opened, memory usage is monitored and compared to the value logged from the previous run in order to calculate the percentage loaded. I think this is what was done, anyway, but I may be a bit off base. I suppose if some other process were affecting memory at the same time, it could skew the results. But it was an interesting concept nonetheless.
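A minimal Python sketch of the percentage calculation as described, assuming memory readings in MB are obtained elsewhere (the OS query is not shown) and that the previous run's total growth was logged to a file. `percent_loaded` is a hypothetical helper, not the presenters' code.

```python
def percent_loaded(start_mb: float, current_mb: float, previous_total_delta_mb: float) -> float:
    """Estimate load progress from memory growth relative to the last run's total growth."""
    if previous_total_delta_mb <= 0:
        return 0.0  # no usable history (e.g. first launch)
    growth = current_mb - start_mb
    pct = 100.0 * growth / previous_total_delta_mb
    # Clamp to 0-100: run-to-run variation or other processes can push the raw estimate out of range.
    return max(0.0, min(100.0, pct))
```

At the end of each load, `current_mb - start_mb` would be written back to the log as the new reference value.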

-

Is this a safe use of to more specific class?

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

Many thanks.

Is this a safe use of to more specific class?

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

Thanks, this makes sense. Would you care to elaborate on this a bit more? I'm all for learning other ways to implement things, especially if it means trading run-time checking for compile-time checking.

I am attaching a simplified example, but first I'll describe where I'm running into this problem. Let's say I have a class "Dialog," and from that I have a child class "Channel Config Dialog." The Dialog class accepts Dialog Pages and puts them in its private data. The Channel Config Dialog accepts Channel Config Dialog Pages (a subclass of Dialog Page), but wants to reuse the fact that its parent already carries around pages. So there is a protected set method defined in the Dialog class that allows the Channel Config Dialog to put Channel Config pages into the parent Dialog class's private data.

I do this by having a static method in the child class, call it "Set Pages," which only accepts Channel Config Dialog Pages on its connector pane. This method then calls the protected method of the parent class to set the pages in the parent data. However, now the pages are carried around on a parent Dialog Page wire, so if I want to call a Channel Config Dialog Page method, I'm out of luck. So I was using To More Specific Class, assuming it's fairly safe because I have set up the connector pane of the child class's "Set Pages" method to not accept any Dialog Page type other than the one it expects.

What I can't determine is whether this is OK or whether it points to a bad design decision and there is a better way. If you are confused, hopefully the attached code helps.

config.zip
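A rough Python analogue of this design, with hypothetical class names mirroring the post. The parent stores pages typed as `DialogPage`; the child's `set_pages` is the only way pages get in, so the downcast in `get_pages` (the To More Specific Class step) should never fail. Python doesn't check type hints at run time, so the sketch adds an explicit `isinstance` check where LabVIEW's connector pane would reject the wrong wire type at edit time.

```python
from collections.abc import Sequence
from typing import cast


class DialogPage:
    def title(self) -> str:
        return "Page"


class ChannelConfigDialogPage(DialogPage):
    def channel_count(self) -> int:
        return 8  # hypothetical channel-specific method


class Dialog:
    def __init__(self) -> None:
        self._pages: list[DialogPage] = []

    def _set_pages(self, pages: Sequence[DialogPage]) -> None:
        # "Protected" by convention only: meant to be called from subclasses.
        self._pages = list(pages)

    def _get_pages(self) -> list[DialogPage]:
        return list(self._pages)


class ChannelConfigDialog(Dialog):
    def set_pages(self, pages: Sequence[ChannelConfigDialogPage]) -> None:
        # The only path by which pages enter this object, so it narrows the accepted type.
        for page in pages:
            if not isinstance(page, ChannelConfigDialogPage):
                raise TypeError("ChannelConfigDialog accepts only ChannelConfigDialogPage")
        self._set_pages(pages)

    def get_pages(self) -> list[ChannelConfigDialogPage]:
        # The downcast ("To More Specific Class"); safe because set_pages is the only writer.
        pages = self._get_pages()
        if not all(isinstance(p, ChannelConfigDialogPage) for p in pages):
            raise TypeError("unexpected page type")  # should be unreachable
        return cast(list[ChannelConfigDialogPage], pages)


dialog = ChannelConfigDialog()
dialog.set_pages([ChannelConfigDialogPage(), ChannelConfigDialogPage()])
counts = [page.channel_count() for page in dialog.get_pages()]

try:
    dialog.set_pages([DialogPage()])  # a plain parent page is refused
    rejected = False
except TypeError:
    rejected = True
```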

-

Ah, yes, another OO architecture question

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

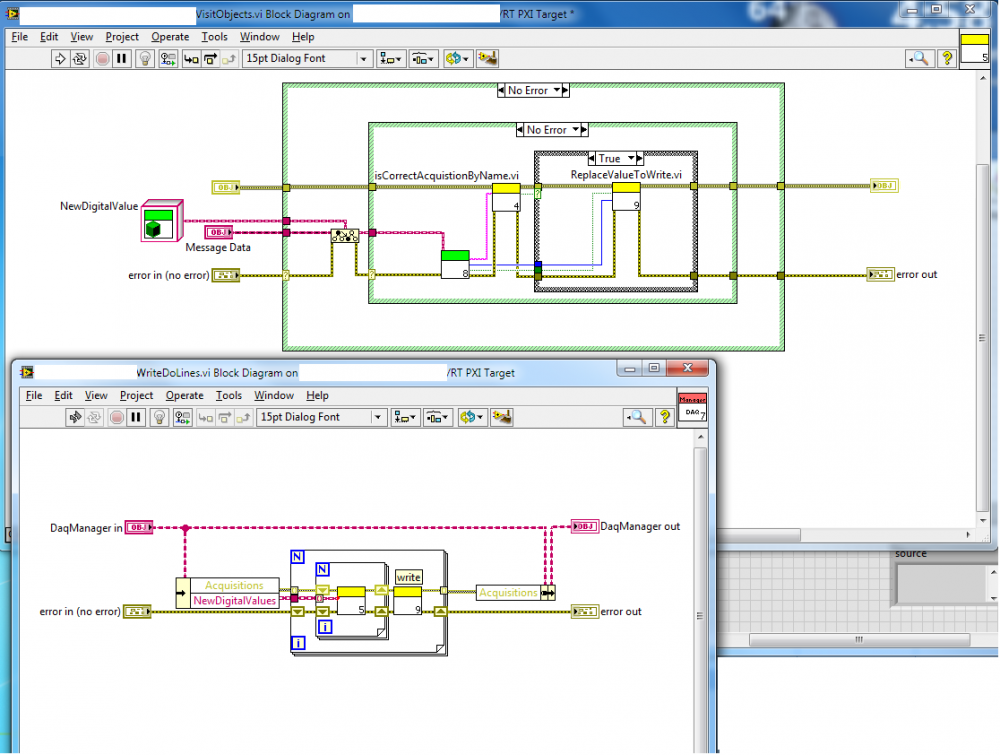

This is what I have, and something seems wrong. I am not visiting in any way. VI 5 is the one shown in the back; I haven't made icons yet.

Ah, yes, another OO architecture question

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

This is what I went with: using the class that already has the reference as the visitor. I have dug into AQ's example on NI's site and am having a little trouble understanding it, mostly because it doesn't look like the wiki examples (although those are in text languages). It looks to me basically like the command pattern (I understand they are similar). I don't see anywhere that a "visitable" object is being given a visitor, unless I'm just not thinking about it correctly, which is most likely the case. It seems to me some of this may stem from the fact that we can determine at run time whether an object matches a specific type (by using To More Specific Class), so there is no need to have all the polymorphic functions for the different visitable types (i.e., the second dispatch). For instance, in his class that does the incrementing, he just does a cast, and if there is an error because the object isn't the correct type, he doesn't call the increment function. I may be off base here, but I am continuing on and getting this to work, and I can come back and adjust as needed later. In the meantime, any further clarification is always appreciated.
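For comparison, a Python sketch of the two styles the post contrasts: the textbook visitor, where each element's `accept` calls back the matching `visit_*` method (the second dispatch), and a run-time type check that simply skips non-matching elements. The class names are hypothetical, and this is not a reproduction of AQ's example.

```python
from abc import ABC, abstractmethod


class Message(ABC):
    @abstractmethod
    def accept(self, visitor: "Visitor") -> None: ...


class CounterMessage(Message):
    def __init__(self) -> None:
        self.count = 0

    def accept(self, visitor: "Visitor") -> None:
        visitor.visit_counter(self)  # second dispatch: the element picks the visitor method


class TextMessage(Message):
    def __init__(self, text: str) -> None:
        self.text = text

    def accept(self, visitor: "Visitor") -> None:
        visitor.visit_text(self)


class Visitor(ABC):
    @abstractmethod
    def visit_counter(self, msg: CounterMessage) -> None: ...

    @abstractmethod
    def visit_text(self, msg: TextMessage) -> None: ...


class IncrementVisitor(Visitor):
    """Classic double dispatch: one visit method per visitable type."""

    def visit_counter(self, msg: CounterMessage) -> None:
        msg.count += 1

    def visit_text(self, msg: TextMessage) -> None:
        pass  # nothing to increment


def increment_by_type_check(messages: list[Message]) -> None:
    """Single-dispatch alternative: test the run-time type and skip anything else."""
    for msg in messages:
        if isinstance(msg, CounterMessage):  # analogous to a successful To More Specific Class
            msg.count += 1


messages: list[Message] = [CounterMessage(), TextMessage("hi"), CounterMessage()]
for m in messages:
    m.accept(IncrementVisitor())
increment_by_type_check(messages)
counts = [m.count for m in messages if isinstance(m, CounterMessage)]
```

The double-dispatch version makes every visitor handle every element type (enforced by the abstract methods), while the type-check version is shorter but silently ignores types it doesn't know about.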

Ah, yes, another OO architecture question

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

I like this idea. The way I have it now, the DAQmx Task reference is wrapped up in an "Acquisition" class, separate from the incoming array of messages to be iterated on. So would it be kosher to add a "Get Visitor" method to that Acquisition class, which returns the appropriate visitor with the DAQmx Task reference added to the visitor's private data? This visitor is then used to iterate over all the messages, gathering the required info, and then writes to the Task at the end. It seems like this would be an OK way of doing it. Or maybe I should make the Acquisition class the visitor, since it already has the task reference.
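A minimal Python sketch of the "Get Visitor" idea, with hypothetical names throughout. `FakeTask` stands in for the DAQmx Task reference and makes no real DAQmx calls; the visitor gathers values from every message and writes once at the end.

```python
class FakeTask:
    """Stand-in for a DAQmx task reference (hypothetical; records writes instead of doing I/O)."""

    def __init__(self) -> None:
        self.writes: list[dict[str, float]] = []

    def write(self, values: dict[str, float]) -> None:
        self.writes.append(values)


class SetpointMessage:
    def __init__(self, channel: str, value: float) -> None:
        self.channel = channel
        self.value = value

    def accept(self, visitor: "OutputVisitor") -> None:
        visitor.visit_setpoint(self)


class OutputVisitor:
    """Gathers setpoints from every message, then writes them to the task once."""

    def __init__(self, task: FakeTask) -> None:
        self._task = task
        self._pending: dict[str, float] = {}

    def visit_setpoint(self, msg: SetpointMessage) -> None:
        self._pending[msg.channel] = msg.value  # a later message for the same channel wins

    def finish(self) -> None:
        self._task.write(dict(self._pending))
        self._pending.clear()


class Acquisition:
    def __init__(self) -> None:
        self._task = FakeTask()

    def get_visitor(self) -> OutputVisitor:
        # Acquisition owns the task, so it hands out a visitor holding that reference.
        return OutputVisitor(self._task)


acq = Acquisition()
visitor = acq.get_visitor()
for msg in [SetpointMessage("ao0", 1.5), SetpointMessage("ao1", 0.0), SetpointMessage("ao0", 2.0)]:
    msg.accept(visitor)
visitor.finish()
```

Making `Acquisition` itself the visitor would work the same way; `get_visitor` just keeps the gathering state out of the long-lived class.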

Due to the limited time I have to write this, I am going to try to be concise and hope that enough clarity comes with it for you to refute.

a) Let's say I have a DAQ Output class (hey, whadda ya know, I do!). Then I want to create child classes for NI DAQmx DO or a third-party card's AO. It doesn't make sense, IMO, to have a DAQ Output child class that doesn't have a "Write to DAQ Card" method. So I want to enforce this, mostly from an it-makes-sense-in-this-scenario point of view. If you want to use my DAQ Output class, you had better have some method for actually writing to an output! I don't care if it's a boolean for digital lines or a double for analog lines, but if you aren't writing to an output with the new child class, it most likely shouldn't be a child class of DAQ Output in the first place. Now, I could separate these classes out, scrap the DAQ Output parent class, and have a Digital Out class and an Analog Out class. That way the connector panes would have a boolean or a double, respectively. But then I have to add a must-override "write" method to each class, when it seems (again, maybe just to me) that this should be enforced in a higher-level DAQ Output class, so that I am not continually redefining a must-override method just because of different connector panes. This may be the point where you say, yes, you should.

b) I'm not 100% sure, but maybe you can infer from my description above. Both?

c) "Or is this 'must implement' VI something that a caller of the child class would use. If it is just something that the user of the class would use, why is it of concern to the parent class whether that VI gets written or not"... This, except in this case it seems that it is of concern to the parent, because the parent is a DAQ Output. And why would you create a child class of DAQ Output that doesn't write to the output of a DAQ device, no matter what the data type is?
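In Python, `abc.abstractmethod` provides the "must override" enforcement. Because Python doesn't require overrides to share a signature, one way to keep the requirement in the parent is a single `write(value)` that takes a generic value, with each child validating its own type (roughly what a variant input would do in LabVIEW). This is a sketch that sidesteps, rather than solves, LabVIEW's rule that dynamic-dispatch overrides must have matching connector panes; the class names are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Optional


class DAQOutput(ABC):
    """Parent class: every concrete output must implement write()."""

    @abstractmethod
    def write(self, value: object) -> None:
        """Write one value; each child decides which types it accepts."""


class DigitalOut(DAQOutput):
    def __init__(self) -> None:
        self.last: Optional[bool] = None

    def write(self, value: object) -> None:
        if not isinstance(value, bool):
            raise TypeError("DigitalOut expects a bool")
        self.last = value  # a real child would call its card's driver here


class AnalogOut(DAQOutput):
    def __init__(self) -> None:
        self.last: Optional[float] = None

    def write(self, value: object) -> None:
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError("AnalogOut expects a number")
        self.last = float(value)


try:
    DAQOutput()  # the abstract parent cannot be instantiated
    abstract_blocked = False
except TypeError:
    abstract_blocked = True

digital, analog = DigitalOut(), AnalogOut()
digital.write(True)
analog.write(2.5)
```

The cost of this shape is that a wrong data type is caught at run time rather than by the connector pane at edit time.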

-

Ah, yes, another OO architecture question

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

Exactly what my coworker and I decided this morning: buffer all the messages, and every TBD number of ms, compile them all and write them.
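A minimal Python sketch of buffer-and-flush, assuming string messages and an injectable clock so the timing can be tested. The 50 ms period is a placeholder for the post's "TBD", and `BufferedWriter` is a hypothetical name.

```python
import time
from typing import Callable


class BufferedWriter:
    """Collects messages and writes them as one batch once `period_s` has elapsed."""

    def __init__(self, write_batch: Callable[[list[str]], None], period_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._write_batch = write_batch
        self._period_s = period_s
        self._clock = clock
        self._buffer: list[str] = []
        self._last_flush = clock()

    def add(self, msg: str) -> None:
        self._buffer.append(msg)
        if self._clock() - self._last_flush >= self._period_s:
            self.flush()

    def flush(self) -> None:
        if self._buffer:
            self._write_batch(self._buffer)
            self._buffer = []  # start a new list so the written batch is not mutated later
        self._last_flush = self._clock()


now = 0.0
batches: list[list[str]] = []
writer = BufferedWriter(batches.append, period_s=0.05, clock=lambda: now)  # 50 ms placeholder
writer.add("a")
now = 0.02
writer.add("b")
now = 0.06
writer.add("c")  # 60 ms since the last flush: a, b and c are written together
```

This version only checks the timer when a message arrives; a loop that also needs to flush during quiet periods would call `flush()` from a timeout as well.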