Everything posted by smarlow

-

working with values of a table

smarlow replied to rb767's topic in Application Design & Architecture

Here is yet another simplified version based on a classic listbox editor idea. It has the following features: the "table" values are stored in a 2D DBL array; editing is done using a multi-column listbox; DBL controls edit the individual row elements; the listbox updates when the DBL control values change; and the DBL values are returned and the table value file is updated when the VI is closed.

Subvi_WCS_listbox_classic.vi

working with values of a table

smarlow replied to rb767's topic in Application Design & Architecture

Here is a program that uses a multi-column listbox instead of a table. The selected row is highlighted, and the values in the row are output to the DBL indicators on the front panel. Selecting a row updates the output values. Using the "Set" buttons generates new values, and also updates the row selection. A cluster is used for the shift register data to clean up the diagram. The diagram has a case structure with the "0" case handling the file read initialization. The "0" case code should be placed in a subVI to get rid of the case structure, but I wanted to keep the code to a single VI.

Subvi_WCS_listbox.vi

working with values of a table

smarlow replied to rb767's topic in Application Design & Architecture

Here is another simplified version. Not as fancy as hooovahh's, but should do the job. From your last question it sounds like you might want to take a look at a multi-column listbox if you want to manually select rows of data in tabular form.

Subvi_WCS_sm.vi

working with values of a table

smarlow replied to rb767's topic in Application Design & Architecture

Can you post the code? I don't quite understand the description of the problem.

-

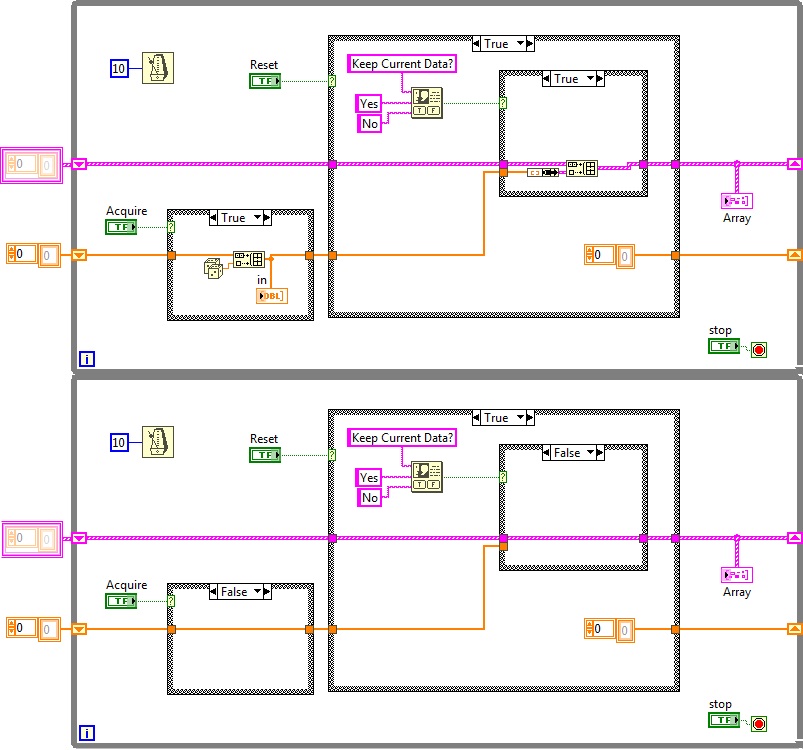

Manudelavega has a good point that you are appending new data to old with each cycle of the Acquire button, so that the data just keeps growing. When you save each row to the 2D array, it will be mixing the runs on each line, and also padding previously saved 1D rows with zeros. LabVIEW expands 2D arrays to accommodate the largest row, and pads all other smaller rows with zeros. If you expect each test run to have different lengths, then a 2D array is not a good choice for saving the data. Why not save the test runs you want to keep to a file? Isn't that the objective anyway, to permanently save your valuable data? If you want to save to memory, and test runs are different lengths, you should bundle each run into a cluster and store the data as a 1D array of clusters of 1D data arrays. Take Manudelavega's advice and change your save boolean to a latch type. Also add some code to reset your acquired data to empty. An event structure, rather than multiple case structures, would probably serve you well in this regard.
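For anyone who finds it easier to see the padding problem in text code, here is a rough Python analogy. It is only a sketch: `Run` and `pad_to_rectangle` are hypothetical names made up for this illustration, the helper merely imitates the zero-padding a rectangular 2D array forces, and the list of `Run` objects plays the role of the 1D array of clusters.

```python
from dataclasses import dataclass, field
from typing import List


def pad_to_rectangle(rows, fill=0.0):
    """Imitate a rectangular 2D array: every row is padded to the longest row."""
    width = max((len(r) for r in rows), default=0)
    return [list(r) + [fill] * (width - len(r)) for r in rows]


@dataclass
class Run:
    """One test run, like a cluster holding a single 1D data array."""
    samples: List[float] = field(default_factory=list)


# Three runs of different lengths, stored as a 1D list of "clusters".
runs = [Run([1.0, 2.0, 3.0]), Run([4.0]), Run([5.0, 6.0])]

# The same data forced into a rectangular 2D layout picks up padding zeros.
padded = pad_to_rectangle([r.samples for r in runs])

for run, row in zip(runs, padded):
    print(len(run.samples), "real samples ->", row)
```

In the padded version you can no longer tell a real 0.0 reading from filler, which is why the cluster approach is the safer one when run lengths differ.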

-

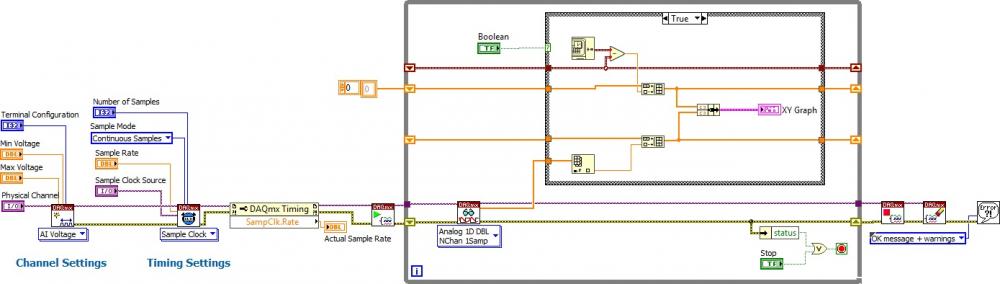

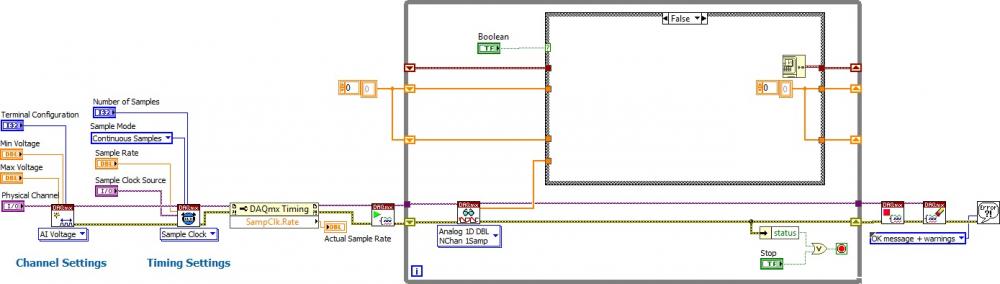

A few problems with your algorithm. You need to read the DAQ task continuously to keep the buffer from overflowing (error -200279). Move the DAQmx Read outside the case structure. That will clear the buffer even if you don't use the readings. Also, get rid of the metronome timing function and let the DAQmx Read function time your loop for you. If you don't want the old points from the last Boolean=true phase, reinitialize your shift registers with empty arrays to clear the old data when your Boolean=false. Move the graph update into your Boolean=true case to retain data in the graph from the last run when Boolean=false. Hope this helps. Here is the solution:

I should also add here (after re-examination) that the DAQmx Read should be changed to NChan NSamp for multiple channels, and 1Chan NSamp for one channel, if you set up the task as 100 samples per channel at 1000 S/s (your defaults). In your example, and my pics, you are only reading 1 sample out of the buffer for each channel. Also, if you run in continuous samples mode, you will need to wire the "Number of Samples" control to the Number of Samples input of the Read function to force it to wait for the number of requested samples. Otherwise use the "Finite Samples" mode.
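The loop structure described above can be sketched in Python without any hardware. Everything here is a made-up stand-in: `SimulatedDevice` is a hypothetical class that only mimics a driver buffer of fixed size, and `run_loop` is not DAQmx code. The point is just the ordering: read on every iteration, keep the block only when the Boolean is true, and reset the accumulated data when it is false.

```python
from collections import deque


class SimulatedDevice:
    """Hypothetical stand-in for a DAQ task with a finite driver buffer."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buffer = deque()
        self.next_value = 0

    def acquire(self, n):
        """Hardware side: push n new samples, failing if the buffer is full."""
        if len(self.buffer) + n > self.capacity:
            raise OverflowError("buffer overflow: samples were not read in time")
        for _ in range(n):
            self.buffer.append(float(self.next_value))
            self.next_value += 1

    def read(self, n):
        """Software side: remove and return n samples from the buffer."""
        return [self.buffer.popleft() for _ in range(n)]


def run_loop(device, keep_flags, samples_per_read):
    """Read every iteration; accumulate only while the flag is True."""
    kept = []
    for keep in keep_flags:
        device.acquire(samples_per_read)
        block = device.read(samples_per_read)  # always drain the buffer
        if keep:
            kept.extend(block)   # the Boolean=true case
        else:
            kept = []            # reset, like reinitializing the shift register
    return kept


device = SimulatedDevice(capacity=300)
kept = run_loop(device, [True, True, False, False, False, True], 100)
print(len(kept), kept[0], kept[-1])
```

If the `read` call were moved inside the `if keep:` branch, the three false iterations would fill the 300-sample buffer and the next `acquire` would raise, which is the simulated version of the overflow error.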

-

Since they are already acquiring signals with the USB-6366 DAQmx device, I'll assume they have it connected to a PC. What is the motivation for disconnecting the USB-6366 from the computer and connecting it to the cRIO? Are there space limitations? Are they trying to fit everything in a box? If not, why not just transfer the data from the USB DAQ device to the cRIO via a TCP connection? What is it you are really trying to do? Are you doing calculations and/or control based on data collected from both devices? Could you not also transfer the cRIO data to the PC? I guess the question is really why are you trying to get rid of the PC? Another option that might be possible, if there are space considerations, is to buy a small PC like an Intel NUC. That could run the code collecting data from the USB DAQ, and transfer it to the cRIO via a TCP connection. Since the USB device is non-deterministic anyway, you are not losing anything except a little more latency time added to repackage the data and send it out over the TCP connection. All of these options sound complicated versus just buying the cRIO modules needed to replace the USB device. As the others have stated, trying to communicate via USB raw is a big waste of time, since it would cost far more than just replacing the USB hardware.

-

My "company" at the moment is NASA.

-

33% of a potential market sounds great, until you realize it is one out of three, and the salient customer descriptor is "Big". Charging higher and higher prices to fewer and fewer deep-pocketed customers is not a long-term strategy for survival. It looks suspiciously like Keysight, which used to be Agilent, which was once HP... Well, you get the picture. For years HP tried to make HP-VEE into the next LabVIEW. Now it appears that NI is trying to make LabVIEW into the next HP-VEE. Unless they open up all the power of it to many more devices and hardware platforms, I feel it may become a novelty language used by the few data acquisition and test engineers that still have jobs.

-

There are a lot of Linux users here, that is true. But Windows still predominates by a wide margin, and the Python training bootcamp last year was mostly Windows machines and MacBooks.

-

Yes, I agree. But the younger generation doesn't seem to care much what it was designed for, and new libraries and bindings are appearing all the time to do all sorts of things. That is the point I was trying to make. The young folks like it because it is free to install and use. Never mind that it is a poor choice for the type of work LabVIEW is designed for, or that the true cost of it shows up later trying to get all the libraries to do something useful. The first time I saw Python and played around with it, I thought to myself "well this is a giant leap backward into the past", or "a command line interpreter? Are you kidding". I felt like it was 1982 and I was writing a GW-BASIC program with EDLIN. I was impressed by its ability to dynamically reshape complex data types and large amounts of data, but other than that it looked like a big pile of crap to me. Again, the kids don't seem to care, nor do they mind spending hours trying to get that pile of stuff working together as a unified development system of some sort. At least that is what I am seeing where I work. It has already almost completely obliterated MATLAB here. All it is going to take is for someone to create a distribution that is specifically designed for data acquisition. There are examples: https://github.com/Argonne-National-Laboratory/PyGMI, and the Qwt library is specifically designed for control/dynamic plotting. I found a pretty good YouTube video that explains what is available, and the work being done in that area:

-

Google Trends for "Learn Python"

Google Trends for "Learn LabVIEW"

I agree with another poster that it feels like LabVIEW is headed for the retirement home. Probably because that is where all the expert users are heading soon. Our LV user group is full of grey-haired old folks, while the yearly Python "boot camp" is full of 10 times as many bright-eyed kiddies. It doesn't take a math genius (although most of us are) to see what is happening. How can we stop the slow demise? The answer is, we can't. Only NI can do anything about it. I'd post a list of what they can do, but they won't do it.

-

Multiple Client, Single Server Configuration

smarlow replied to stefanusandika's topic in LabVIEW General

@stefanusandika I have attached a simple example of a multiple queue program architecture. The Main.vi creates three named queues. There are three copies of a reentrant subVI that generate three sine waves 45 degrees out of phase to simulate your "internal process" subVIs. The subVI enqueues a value from the sine wave every 100 ms. The Main VI reads these three queues and graphs the values. Notice I set the wait period to 200 ms on the dequeue function calls. This is longer than the 100 ms between enqueue operations in the subVIs.

Queue.llb
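For readers without LabVIEW handy, the same three-queue layout can be approximated in Python with the standard `queue` and `threading` modules. This is only an analogy, not a translation of Queue.llb: the names `sine_producer` and `run_main`, the queue names, and the 10-degree step per sample are all assumptions made for the sketch. What it keeps from the description is one queue per producer, a 100 ms enqueue period, and a dequeue timeout of twice that.

```python
import math
import queue
import threading
import time


def sine_producer(q, phase_deg, n_points, period_s):
    """Stand-in for one copy of the reentrant 'internal process' subVI."""
    for i in range(n_points):
        q.put(math.sin(math.radians(10 * i + phase_deg)))
        time.sleep(period_s)


def run_main(n_points=5, period_s=0.1):
    """Stand-in for Main.vi: create the queues, start producers, drain queues."""
    phases = {"sine_0": 0, "sine_45": 45, "sine_90": 90}
    queues = {name: queue.Queue() for name in phases}
    workers = [
        threading.Thread(
            target=sine_producer,
            args=(queues[name], phase, n_points, period_s),
        )
        for name, phase in phases.items()
    ]
    for w in workers:
        w.start()

    received = {name: [] for name in phases}
    timeout_s = 2 * period_s  # dequeue timeout longer than the enqueue period
    # Keep reading until every producer has finished and every queue is empty.
    while any(w.is_alive() for w in workers) or any(
        not q.empty() for q in queues.values()
    ):
        for name, q in queues.items():
            try:
                received[name].append(q.get(timeout=timeout_s))
            except queue.Empty:
                pass  # timed out on this queue; try again on the next pass
    return received


if __name__ == "__main__":
    for name, values in run_main().items():
        print(name, [round(v, 3) for v in values])
```

Because the queues are unbounded here, nothing is lost if the reader falls behind; the data just piles up until it is read.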

Multiple Client, Single Server Configuration

smarlow replied to stefanusandika's topic in LabVIEW General

Stefan: If you can't tolerate the loss of any data that is collected between reads, then a Queue is the way to go. There are several examples in the LabVIEW Help. Select Help>>Find Examples from the LabVIEW VI menu. Use Search from the Find Examples dialog and search for "queue". The "Simple Queue.vi" shows how to use a queue to share data between loops, but the same method can be used to share data between VIs. Just make sure your Main VI reads the queues fast enough to clear them, or you could get an overflow. Examine the "Queue Overflow and Underflow.vi" example to learn about overflow. Good luck!

Multiple Client, Single Server Configuration

smarlow replied to stefanusandika's topic in LabVIEW General

Is there any particular reason why you would use TCP and LocalHost communications, such as having pre-compiled internal process VIs that are in a different scope than the Main VI? If not, one of the best ways to do this is with Notifiers (or queues, but I prefer Notifiers). If all you want in the Main VI is the latest data, and you don't care about old data being overwritten, the Notifier is the way to go. Each of the 15 subVIs would poll the hardware continuously and send data to its own notifier. The Main VI waits on notification from the internal process subVI's notifier and gets the latest update when it arrives. Set the "ignore previous" input to the "Wait on Notification" function to TRUE to make sure you get the latest update when you call it. With this architecture, you don't need to send requests to the internal process VIs; you just wait for the latest update. As long as your update rate on the "Send Notification" end is faster than your longest tolerable latency period, you'll get the data within the time you need it.

If you want to send requests, you can use a Notifier in the opposite direction. Call "Wait on Notification" in your internal process VIs and wait forever (-1). Send Notification from the Main VI to make a request for data, and use a separate return Queue or Notifier to send the data back to the Main VI. This type of architecture is similar to the way the Actor Framework works, at which you also might want to take a look.
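The "latest value only" behavior of a notifier can be imitated in Python with a `threading.Condition`. This is a simplified sketch, not LabVIEW's implementation: the `Notifier` class and its `send` and `wait` methods are hypothetical names, and the `ignore_previous` handling is an approximation (with it off, `wait` returns right away if anything was ever sent; with it on, it waits for a notification that arrives after the call).

```python
import threading


class Notifier:
    """Holds only the most recent value; older values are overwritten."""

    def __init__(self):
        self._cond = threading.Condition()
        self._value = None
        self._count = 0  # number of notifications sent so far

    def send(self, value):
        """Overwrite the stored value and wake up any waiters."""
        with self._cond:
            self._value = value
            self._count += 1
            self._cond.notify_all()

    def wait(self, timeout=None, ignore_previous=False):
        """Return the latest value, or None on timeout.

        timeout=None waits forever, similar to wiring -1 in LabVIEW.
        """
        with self._cond:
            seen = self._count if ignore_previous else 0
            arrived = self._cond.wait_for(lambda: self._count > seen, timeout)
            return self._value if arrived else None


notifier = Notifier()
notifier.send(1)
notifier.send(2)                   # overwrites 1; nothing is queued up
latest = notifier.wait(timeout=0.1)

# Simulate a subVI sending a fresh update shortly after we start waiting.
threading.Timer(0.05, notifier.send, args=(3,)).start()
fresh = notifier.wait(timeout=2.0, ignore_previous=True)
print(latest, fresh)
```

The contrast with a queue is that the value 1 is simply gone once 2 is sent, which is fine when only the newest reading matters.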

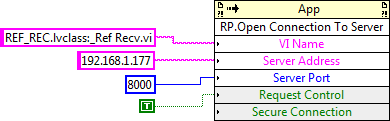

With almost no browser choices that still support the LabVIEW plugin for remote front panels, my colleagues and I have opted to just use the LabVIEW RTE to view remote panels. This is especially important for the RT targets we are running (RIO and Pharlap machines). We have a solution that works: using the App class "RP.Open Connection To Server" method. This little function makes it possible to create a launcher applet that launches a remote front panel in a LabVIEW RTE window, no browser needed. However, the problem we have is that we have no control over how the new RP window is launched. For example, we'd like the window to come up maximized, but can't seem to find the tools (LabVIEW, Win API, or otherwise) that would allow us to control this new RP window that gets created. The LabVIEW RTE just seems to throw the window up on the screen in a random location. If anyone has done this, gained control of the new window launched by the App class "RP.Open Connection To Server" method, we would be eternally grateful if you gave us a clue. Thanks in advance.

-

LabVIEW EXE Running on a $139 quad-core 8" Asus Vivotab tablet

smarlow replied to smarlow's topic in LabVIEW General

I put an enum on the front panel to see how it would respond, and it seems to work fine. I have actually worked with LabVIEW and touch screens quite a bit in the past. I have done automation apps in which LabVIEW was used to program the touchscreen MMI. To make the job easier, I created a bunch of custom controls with oversized inc/dec controls, large font text, etc., and my own VI-based onscreen keyboard controlled with mouse events. Of course the on-screen keyboard in Windows has improved since then (the old XP OSK was not resizable, and the keys were too small to use with fingers on a touch panel). One of the problems I've noticed with the Windows touch keyboard is that it does not re-position the application on the screen to keep the field of entry visible above the keyboard, so you can't see what you are typing if the field is at the bottom of the screen. My Android tablet and my kids' iPad always keep the field in view automatically. Windows also does not bring up the keyboard automatically when the cursor is placed into an entry field, even in their own browser. You have to touch a button on the task bar. Perhaps this is a setting. Being a Win 7 user who refused to upgrade previously, I am not used to Win 8.1 or 10. I suppose I should Google it.

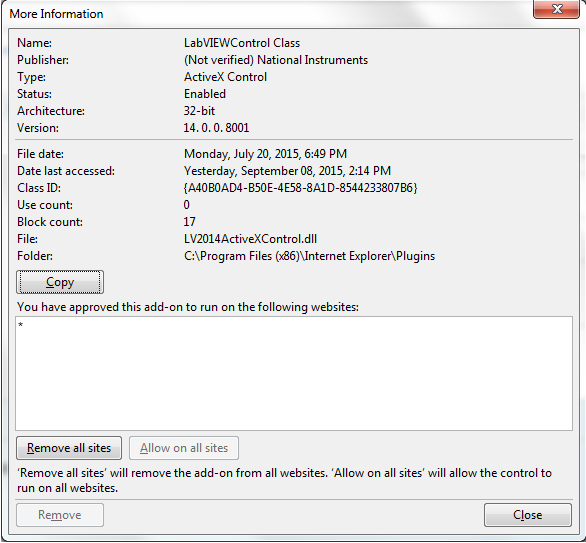

We have a LabVIEW 2014 SP1 RT system here that we need to access remotely, and have been using IE11 with no issues for the last year or so. A recent Windows 7 update over the holiday weekend busted the LV2014ActiveXcontrol.dll plugin. It comes up as "Not Verified" (see pic). You can see from the info dialog that IE is blocking it (17 attempted uses were blocked). We tried running regsvr32 from an admin command prompt with no effect. Has anyone else seen this issue? A LV2012 version of the plugin comes up as "verified". Did the latest Win 7 update block unverified plugins? Our Windows updates are rolled out by the IT department as a group policy, and we are unable to roll them back. If anyone has a solution to this problem, we would appreciate knowing what it is. Thanks.

-

LabVIEW EXE Running on a $139 quad-core 8" Asus Vivotab tablet

smarlow replied to smarlow's topic in LabVIEW General

Hooovahh: I get your point. There are issues unique to these devices, such as DAQ hardware, horsepower, etc. I guess I was just a bit unsure about where to post this thread, and had the thought of a dedicated category.

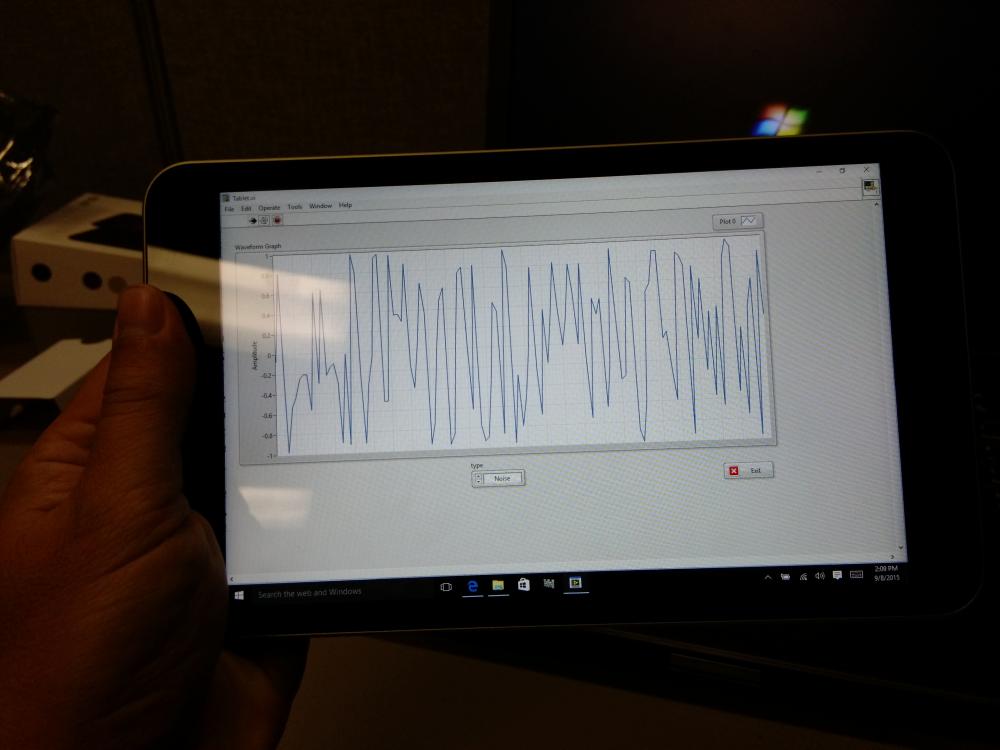

Hello everybody. I just wanted to show LabVIEW running on an Asus Vivotab 8 tablet. The tablet was purchased in a good deal for $139 (now gone), but can be purchased from Microsoft for $149. It runs the Intel Atom Z3745 "Bay Trail" quad-core CPU, has 2GB of RAM (most other value tablets only have 1GB of RAM), and 32GB of flash, plus a micro SD slot and USB OTG. The unit I purchased came loaded with Win 8.1, but I believe the units being sold by MS come with Win 10 preloaded. I downloaded the LabVIEW 2014 SP1 runtime engine directly to the tablet and installed it with no issues. I then updated the tablet to Windows 10. I used my Win 7 Pro laptop to create a simple application that throws some white noise into a waveform graph and compiled it to an executable. I transferred the application to the Vivotab 8 using a micro SD card, and ran the app directly from the SD card. It runs the LabVIEW runtime like a champ. Very fast loading (although it is a simple app) and fast screen updates, virtually no difference from my laptop. So if you want a great tablet that will run LabVIEW for around $150, this puppy will do it. Shouldn't there be a forum category devoted to LabVIEW on tablets & phones? There is a PDA category, but that seems a bit dated.

-

LabVIEW snippet PNGs are being sanitized

smarlow replied to Phillip Brooks's topic in Site Feedback & Support

Old post, but I am having this problem, too. How does one post a snippet anyway? I've tried attaching them to the post, but they get resized, and the embedded code is stripped away. Not a very good feature for the premier independent LabVIEW web forum. Is there some other way to add a PNG to the message without attaching it, or clicking the "add to post" button? Here is an example of a snippet added using the attachment feature of the "More Reply Options" page. I also used the "Add to Post" link next to the attached file: Why does it shrink the image all the way down to a thumbnail? Most monitors today are huge, with wide aspect ratios, and plenty of resolution. Why not upload the PNG "as is"? Most snippets are below one screen in size, and any that are larger will get "resized" for display by the browser. Also tried the "Advanced Uploader": Being able to share snippets without the code getting stripped would be a good thing.

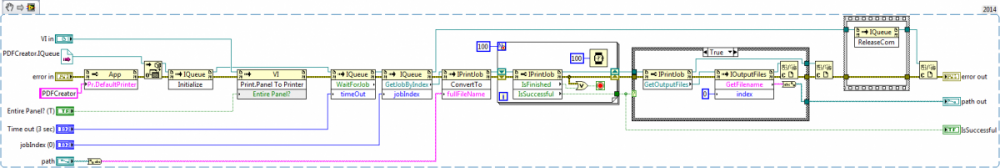

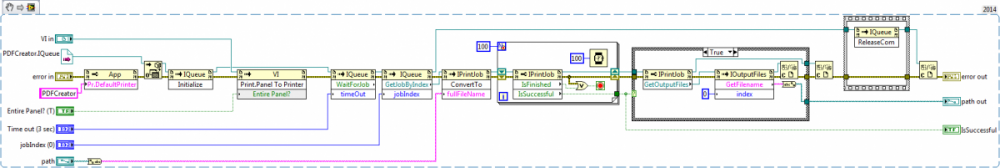

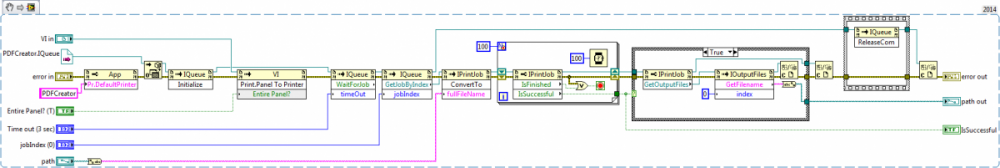

This is an old thread, but rather than start a new one, I thought I would reply here with some code. I wrote a subVI that uses the COM interface of the latest version of PDF Creator (2.1). It takes a VI ref as an input and prints the front panel to a PDF programmatically. It also sets the file name so there is no pop-up. You will need to install PDF Creator 2.1 and enable Auto-Save manually in the profile settings. All I can say is it works for me. If it doesn't work for you, you might have to fiddle with it. But, hey, it's free!

Print VI panel to PDF.vi