Posts posted by Justin Goeres

-

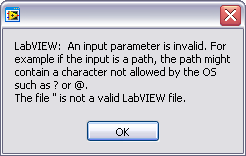

I suspect I'm seeing the same problem as mentioned previously in this thread, but my symptomology is a bit different and the fix mentioned in that thread doesn't apply to my situation.

I have an EXE built in LV85. It's fairly complicated, with a couple dozen classes, several levels of inheritance, a couple VI server-spawned VIs, an external DLL or two, and some various config files (and these all come from a handful of .lvlib files along the way). When I run the built application from the Destination Directory specified in the Build Spec, everything is fine.

However, when I move the EXE (and all its components) to another directory, I get the following error dialog on launch:

Now, the really frustrating thing is that after displaying that dialog, the application goes on to run just fine.

The problem does not appear to be related to the particular directory I copy the app to, as I've reproduced the problem in multiple directories on multiple computers and even tried building the app on multiple computers just to make sure it's not something weird on my development machine.

Does anyone have any insight on this? What could the RTE (or the app) be looking for that throws that error in anything but the original Build Destination directory?

-

QUOTE(rkanders @ Oct 2 2007, 02:45 PM)

Same here. I did have fairly frequent crashes and LV recovered *.proj file.

Interesting that this gets posted today, because I noticed the same thing for the first time this morning. I, too, have had a few crashes and have probably recovered the .lvproj file a time or two. When I went to build an EXE today (one of several specs in this project), all the Source Files I'd selected before had been removed from the Build Spec. I checked a couple other Build Specs in the project and the same was true of them. I didn't check everything exhaustively.

-

QUOTE(george seifert @ Sep 27 2007, 08:25 AM)

Wow, not much going on there that you'd think would cause a problem.

You said it's happened in a few other VIs, too. Did they all look basically like this one?

Just as a shot in the dark, if you add an initializing value to the shift register in the top loop (i.e. wire something to the left side of it), does that make the problem go away? Over the years I've managed to confuse LabVIEW a few times when I didn't initialize a shift register and LabVIEW apparently couldn't infer its type properly.

-

QUOTE(george seifert @ Sep 27 2007, 07:33 AM)

When I try to duplicate a case in my case structure, LV crashes big time. :thumbdown: I tried it several times on one case and it crashed every time. Then I tried another case and it worked. I suspected that it happens if there's a control in the case so I removed the control and it duplicated fine. This has happened in at least 3 different VIs now. At least I'm ready for it now and make sure to save before trying it. This is definitely a bad bug. Anyone else seen this?

I just tried this with a simple Case structure (T/F selector) and a DBL control terminal, and I was not able to reproduce it.

Can you post more info about what's going on in/around the structure? Maybe a screenshot of (some portion of) the code?

-

QUOTE(LV Punk @ Sep 25 2007, 12:12 PM)

The example code would actually just be the Advanced Serial Write and Read.vi from the LabVIEW examples. I have it running in "Read" mode on the receiver and "Write" mode on the transmitter.

Actually, using the VI Server and a queue was exactly where I started. I've been peeling back the layers ever since.

QUOTE(shoneill @ Sep 25 2007, 12:20 PM)

If you keep the Baud high, but lower the frequency of the data transmission (you're doing a simulation, right?), do you receive everything OK?

Yes, a high baud rate but a lower transmission frequency makes the problem go away. In fact, you can gradually increase the transmission speed and see the system get more and more sensitive to smaller and smaller interruptions. It's fun, in that oh-look-this-is-really-broken kind of way.

QUOTE

If you reduce the packet size to 8 Bytes, do you receive everything OK?

I have not tested 8 bytes specifically, but I have noted that shorter test messages seem to be more resistant to problems. So I think the answer is somewhere in the neighborhood of yes.

QUOTE

a link to the adapter I usually use, I'm pretty happy until now. I tried some Moxa (good reputation) but they bombed on me - on multiple occasions. They apparently don't support software flushing of their buffers! 64 Bytes of buffer. Really not much. I've tried to search for the 19Qi, but I've yet to find anything of use (like Specs). Maybe it's missing the HW buffer......

Thanks for the link. I've been reasonably happy with Keyspan over the years, but a USB->RS232 adapter is one of those things that sometimes you just need to pony up and buy the really good one, so you can stop worrying about it. Perhaps it's time to step up and get a new one.

-

QUOTE(Aristos Queue @ Sep 25 2007, 08:13 AM)

Sorry, Justin... Ben is right. When you're playing "hide the dots" on an RT system, order of terminals does matter.

Is that a Note in the docs somewhere, then?

(and yes, I am too lazy to look, thank you very much.)

You're all just piling on because I can't tell the difference between bits and bytes in that other thread, aren't you? Perhaps I'm losing my mystique.

-

Congratulations on your new high-speed, ultra-high bandwidth, massively parallel fuzzy-logic data acquisition and control system! :beer:

Don't forget to send in the registration card, so you can make use of the warranty! You did buy the extended warranty, right? I hear it's totally worth the extra money for those things. They malfunction a lot.

-

QUOTE(shoneill @ Sep 25 2007, 07:25 AM)

Oh, for Pete's sake... I hate it when I do that. I even used the capital B on purpose, not realizing I was doing the math entirely wrong.

(Problem is still unsolved, but at least I've had my daily dose of reminder that I'm still not the smartest person in the room :headbang: )

QUOTE(Louis Manfredi @ Sep 25 2007, 07:20 AM)

If you are working with a computer with a built in serial port, try changing the hardware buffering settings under the Advanced... control for setting the serial port. Not sure if that trick might also help with a USB based port.

My USB adapter supports this (which leads me to believe the UART is in the adapter), but changing its value had no effect.

QUOTE(eaolson @ Sep 25 2007, 07:32 AM)

which offers a few suggestions. There's also a software buffer, I think. Have you tried increasing the size of that with VISA Set I/O Buffer Size?

Yep, tried that, too. It really seems to be the hardware buffer that's overrunning.

QUOTE

I've also had trouble with one USB-to-serial adapter. What brand are you using?

It's a Keyspan 19Qi. I'm using driver version 3.2, which is the latest (although it's dated 2002). Unfortunately this is the only USB adapter I have -- but it has performed admirably in many other projects, so I'm fairly trusting of it (and I know how hard it is to find one to trust). However, I don't recall that I've ever used it with flow control.

QUOTE(Ben @ Sep 25 2007, 07:33 AM)

You are reading ALL of the bytes at the port every time and NOT just a subset, CORRECT?

Yes, but thanks for asking. Given that I can't reliably multiply and divide by 8, that's a fair question.

-

QUOTE(Ben @ Sep 25 2007, 07:00 AM)

Could you put a scope on the lines and make sure this is not a noise or bad ground issue?

Sadly, no. That's due to lack of breakouts for the cable and lack of a scope.

QUOTE

Hardware handshaking should flag the transmitter to stop transmitting until CTS goes high again.

What I can tell you is that the handshaking definitely seems to be working, in the sense that the transmitter and the receiver each time out if the other isn't running. But I guess if there's a noise issue then the problem would be that the handshake hiccups every so often....

-

I have two computers connected via a null modem cable. One is the receiver for my serial data (where my application runs), while the other is simulating a sensor I will eventually have to talk to. The sensor continuously broadcasts updated readings (so this is not a query-response situation, it's more like drinking from a firehose). Each message from the sensor is about 20 bytes long, and the sensor wants to send around 500 messages per second (so we're around 10 kB/s here).

The serial ports on both computers are set to 115200N81 (although slower speeds still exhibit the problem; see below).

Here is the problem: Any significant use of the receiving computer (switching applications or even just clicking in the window's title bar) causes the receiving computer to return a "VISA Error -1073807252 (0xBFFF006C): An overrun error occurred during transfer. A character was not read from the hardware before the next character arrived." I have replicated the problem with both my laptop (USB->RS232 adapter) on the receiving end and with a desktop machine (old-school RS232 on the motherboard). This happens even with the simplest of serial read/write code (e.g. NI's examples).

I was able to find a pretty good overview of the meaning of the error in this page on the Developer Zone. Basically, it looks to me like the speed of the data stream is overrunning the capacity of the UART in the receiving computer before Windows comes around to service the IRQ and move the data out of there. I have tried all of the suggestions on the NI page without success (switching to RTS/CTS handshaking seemed to help a little, but the error still occurred). Reducing the baud rate to 9600 (laptop receiver) helped quite a bit, but the error still occurs with moderate abuse of Windows.
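To put rough numbers on the overrun theory, here is a small Python sketch of the arithmetic. It uses the figures from this post (20-byte messages, 500 messages per second, 115200 or 9600 baud, N81 framing, which works out to 10 bits on the wire per byte). The 16-byte FIFO depth is an assumption (typical of 16550-class UARTs), not something I've confirmed for either machine, and `serial_budget` is just an illustrative name.

```python
from dataclasses import dataclass


@dataclass
class SerialBudget:
    capacity_bytes_per_s: float  # most bytes the line can carry per second
    demand_bytes_per_s: float    # bytes the sensor wants to send per second
    utilization: float           # demand / capacity (>1 means the line is too slow)
    fifo_fill_ms: float          # time for an unserviced FIFO to fill at line rate


def serial_budget(baud, msg_bytes=20, msgs_per_s=500,
                  bits_per_byte=10, fifo_bytes=16):
    """Estimate line capacity and how long the receiver has to service the UART.

    bits_per_byte=10 models N81 framing (1 start + 8 data + 1 stop bit).
    fifo_bytes=16 is an ASSUMED hardware FIFO depth, not a measured value.
    """
    capacity = baud / bits_per_byte
    demand = msg_bytes * msgs_per_s
    fifo_fill_ms = fifo_bytes / capacity * 1000.0
    return SerialBudget(capacity, demand, demand / capacity, fifo_fill_ms)


if __name__ == "__main__":
    for baud in (115200, 9600):
        b = serial_budget(baud)
        print(f"{baud} baud: capacity {b.capacity_bytes_per_s:.0f} B/s, "
              f"utilization {b.utilization:.2f}, "
              f"FIFO fills in {b.fifo_fill_ms:.2f} ms")
```

Under those assumptions the 10 kB/s stream takes up most of a 115200 baud line, and a 16-byte FIFO that nobody services fills in well under 2 ms, which is not much slack if Windows is busy doing something else. At 9600 baud the line can only carry 960 bytes per second, so the sender cannot actually deliver the full message rate at that setting.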

So I'm bringing my problem here. My questions are as follows:

- Is there any way to mitigate this in software? Given that the sensor is just broadcasting data freely, I can't tell it to just wait for me to catch up.

- Is this really normal, for the UART on a modern laptop (and a modern desktop) to overrun at only Ten Kilobytes Per Second??? :headbang:

- Where (probably) is the UART in the laptop system? Is it on the motherboard, or is it in the USB->RS232 adapter? I've consulted all the documentation I have for both, and I can't find a clear answer.


-

QUOTE(ned @ Sep 19 2007, 09:55 AM)

I'm going to be a voice of dissent here and suggest that this is sufficiently basic that it doesn't need to be documented. You run a wire to the N terminal and you don't get a broken wire, so it must be valid LabVIEW code. What other value, other than the actual value of N, could possibly be a reasonable value on that wire?

I think there are a couple things at play here that make this behavior interesting, and not "sufficiently basic that it doesn't need to be documented." One is what Ben said, namely that you can run a wire that appears to just be "through" the N terminal, but its value changes on the way past the N. The other is that there are apparently a bunch of us around here, representing dozens of people-years of LabVIEW experience, that weren't hip to this little gem. I think those two factors alone point to the behavior being not at all "sufficiently basic." As I pointed out in the first post, all of this is terribly obvious once you see it, but there's something about the behavior that's a bit outside the box and may not be obvious even after drawing 10,000+ For Loops.
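For anyone who thinks better in text than in wires, here is a rough Python analogy of the behavior. It assumes the behavior in question is that a For Loop runs for the smaller of the value wired to N and the length of any auto-indexed input array, and that reading N inside the loop gives that actual count. `for_loop_iterations` is a made-up helper for illustration, not anything in LabVIEW.

```python
def for_loop_iterations(wired_n, *auto_indexed_arrays):
    """Return the iteration count a For Loop would actually run.

    ASSUMPTION: the loop runs for the smallest of the value wired to N
    (if any) and the lengths of all auto-indexed input arrays.
    Pass wired_n=None to model an unwired N terminal.
    """
    limits = [len(a) for a in auto_indexed_arrays]
    if wired_n is not None:
        limits.append(max(wired_n, 0))  # a negative count means zero iterations
    if not limits:
        raise ValueError("need a wired N or at least one auto-indexed array")
    return min(limits)


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0]
    # 10 goes into the N terminal, but the value read inside the loop
    # is the actual count, limited by the 3-element array.
    print(for_loop_iterations(10, data))
```

In that analogy, the value on the wire going in (10) and the value coming out on the inside of the loop are different, which is the "changes on the way past the N" part.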

Now, I don't think it should be in the Release Notes or anything (since it's apparently been this way for 10 years or more), but I think Ben's comment alone would be a worthwhile Note in the documentation. On the other hand, stuff like this clearly gives geeks like us something to get excited about, so maybe it's better left for us to discover :thumbup: .

Just to add a final snark: I'd question what other features of LabVIEW you think are "sufficiently basic that [they don't] need to be documented." Perhaps NI should remove the documentation of the Add and Multiply nodes from the LabVIEW help? They're awfully basic, and the order of the inputs doesn't even matter!

-

QUOTE(crelf @ Sep 13 2007, 07:08 AM)

What's truly delicious is the amount of RAM it uses for large arrays! :thumbup:

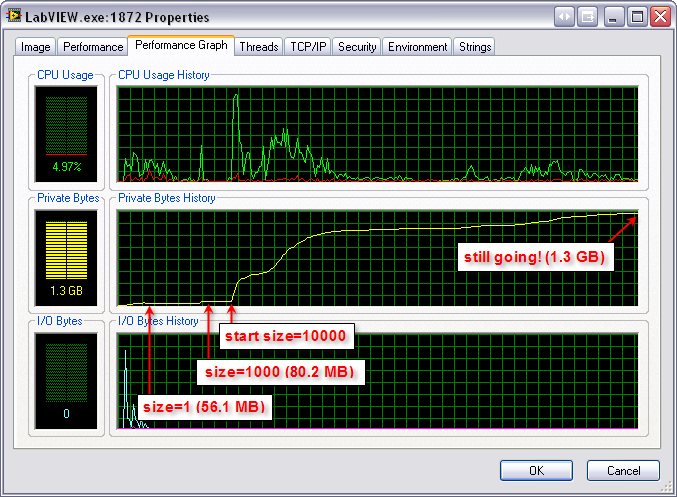

This is the process info for Example.vi in my previous post:

http://lavag.org/old_files/monthly_09_2007/post-2992-1189696774.png

All arrays are DBL.

Array of 1 element: 56.1 MB (roughly LabVIEW's baseline usage on my machine with this .lvproj open)

Array of 1000 elements: 80.2 MB

Array of 10000 elements: 1.3 GB and climbing when I killed the process

It should be noted that my test machine has (only) 1GB of RAM, so it entered Swap Hell a minute or so into the calculation (where the curve goes pretty flat at around 1.0 GB).

-

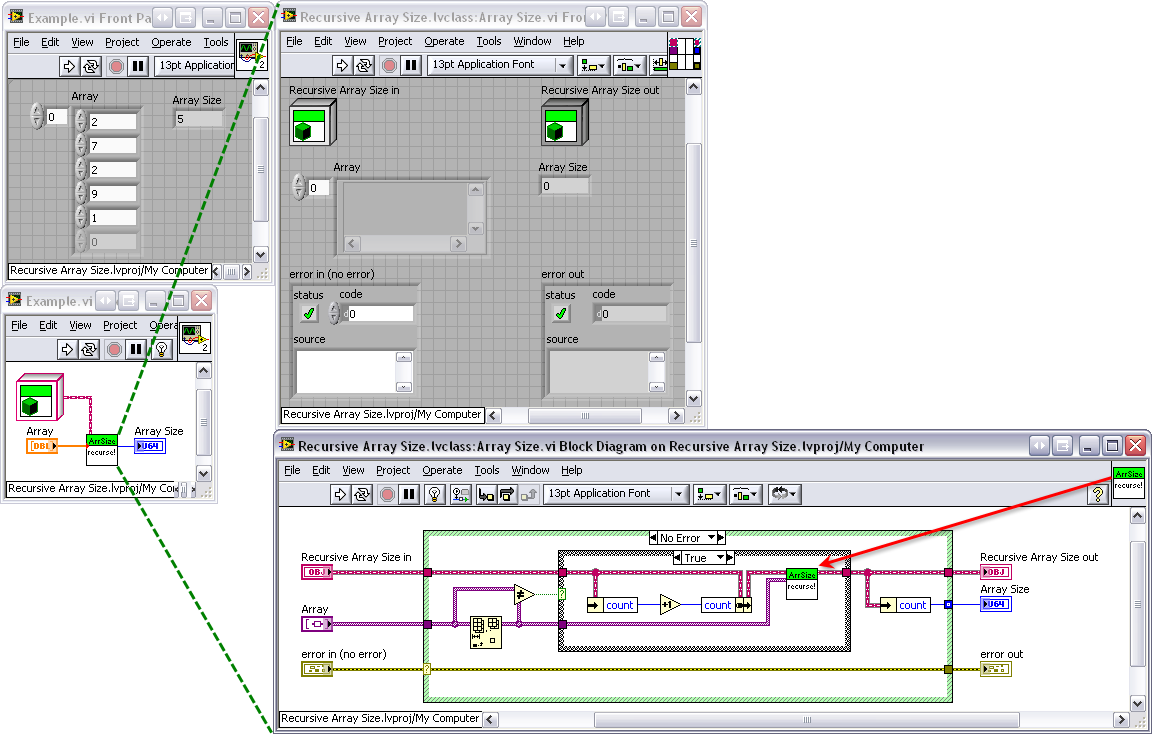

This technically breaks the "no subVIs" rule, but I gave myself a pass because it uses recursion in LV85. It also takes a variant as an input.

EDIT: Yeah, yeah, the error wire should go into the recursive call. -5 points.

-

First, welcome to the board! :beer:

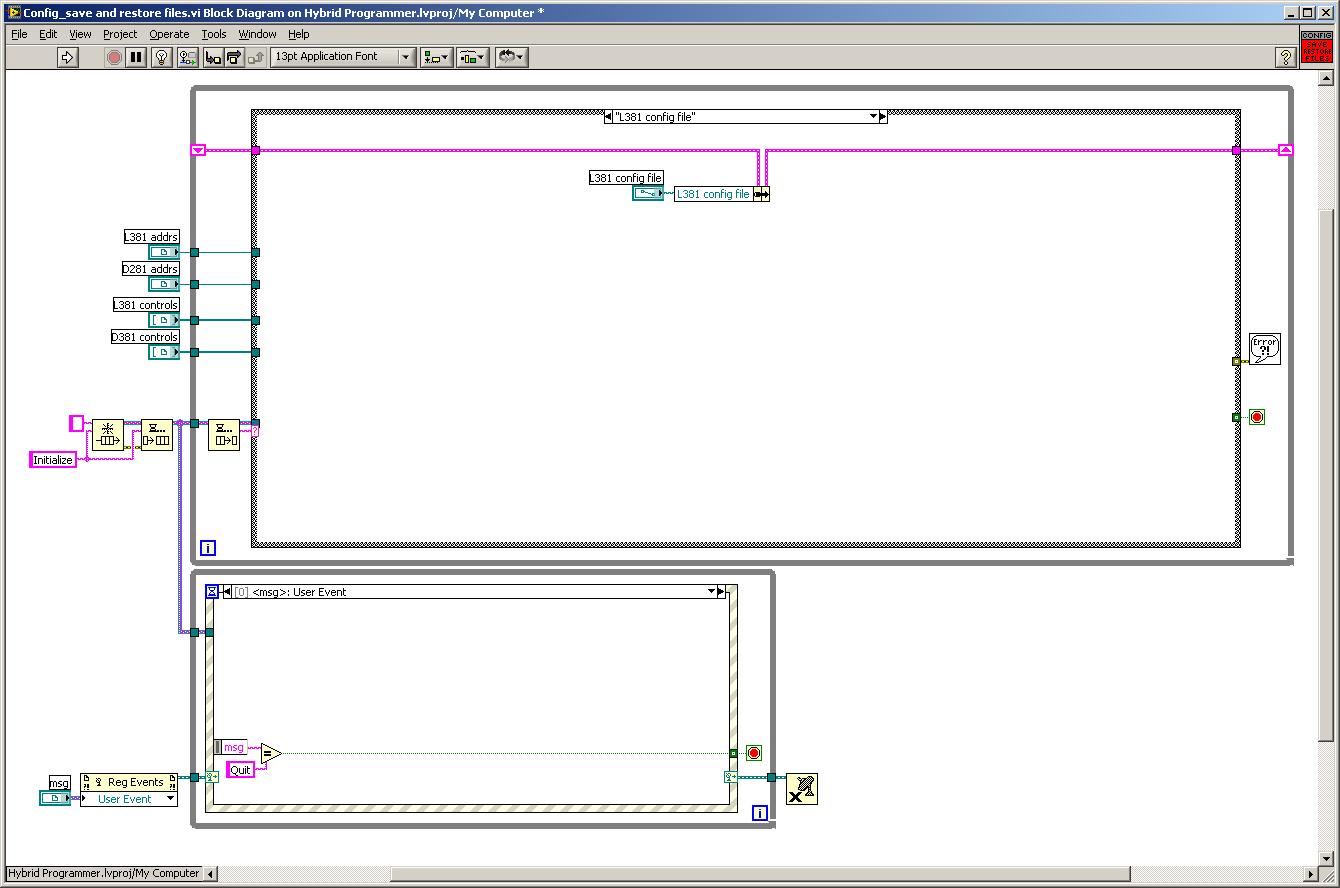

Second, I've run into a "missing/misplaced files" problem very similar to what you're describing. Please see this thread in the LabVIEW 8.5 Buglist forum for more details.

I have not, however, seen the problem you describe with the inclusion of .lvlibs. I see them being included just fine, until the Installer breaks everything. Can you describe that symptom a little more specifically? Maybe I'm just misunderstanding.

-


QUOTE(Thang Nguyen @ Sep 12 2007, 11:31 AM)

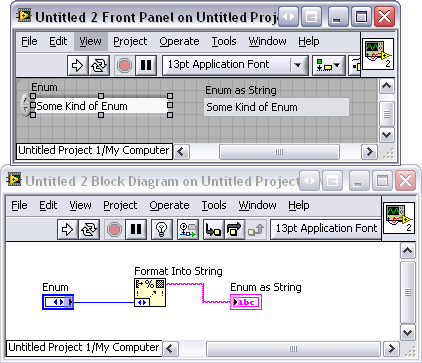

Welcome to The Cool Kids' Club:

http://lavag.org/old_files/monthly_09_2007/post-2992-1189622895.png

Format Into String is in your String palette.

BTW, there's a more versatile Get Strings from Enum function in the OpenG Data Tools library. It takes a variant as input, so you're not limited to using it with just one enum.

-

QUOTE(crelf @ Sep 12 2007, 08:06 AM)

There's a better way?

This gives me an idea for kind of a cool thing to do. Not a Coding Challenge exactly, but more like a Cleverness Festival! :beer:

We could pick an interesting trivial problem (Array Size is a really good one) and challenge LAVA members to find original (not optimal) ways to solve it. We could constrain it with a few simple rules like

- Must not use any subVIs except those provided in vi.lib or OpenG.

- All code must fit in a block diagram window of a certain size (and no stacked sequences!)

- All code must obey basic LabVIEW style conventions

or whatever. I'm just brainstorming here. Might be fun to see what's lurking in the corners of our brains.


-

QUOTE(ragglefrock @ Sep 12 2007, 08:38 AM)

I'm a user of basic Apple programs (or I was until my last Mac gave up the ghost), and an engineer. I don't particularly want "integral control over everything"; I just want to solve problems. That's what has made programs like iPhoto and iTunes so successful. They really help (the targeted) users solve problems with photo/music management. I don't think it's that LabVIEW developers want so much control over everything (but see below). It's that our problem space is (or seems to be) much broader than that served by applications like iTunes or iPhoto. Add to that the fact that we're accustomed to "rolling our own" solutions to VI/project management (see my earlier post about the Olden Days), and you end up with a sort of inherent distrust of anything NI does that comes and mucks up our status quo.

QUOTE(Jim Kring @ Sep 12 2007, 09:36 AM)

B) Do LabVIEW user really want integral control over everything?

All I ask for is enough control over everything such that all bugs in the development environment have workarounds. I'm looking at you, LabVIEW 8.5 Build Specification.

-

QUOTE(mross @ Sep 12 2007, 06:58 AM)

I'm using Firefox 2.0.0.6 on WindowsXP, and the original image link behaves correctly when I click on it. About half the text links also behave correctly, but a couple of them just give me the thumbnail (although I'm using GreaseMonkey with Greased Lightbox for image display).

-

I just realized that calling that indicator "% complete" in the example code was an absolutely braindead choice... :headbang:

-

As a side note to all this, the receipt is from Thursday night. Everyone should stay for Thursday night.

Also,

drapesdrano (?!?)

It is pitch dark. You are likely to be eaten by a grue.

in LAVA Lounge

Posted

Did anyone else around here grow up on Text Adventures/Interactive Fiction like I did? If so, you and MC Frontalot might have something to talk about.