Posts posted by JKSH


The way I see it, XControls are for cases where you want to create a custom interactive "widget", and you plan to embed multiple copies of this widget in other front panels. (Unfortunately, it doesn't always work well, but that's a different topic.) So, do you want multiple copies of your custom box? If you don't, then you definitely don't need an XControl. If you do, then I'd consider it.

Also, does it have to be an overlaid box? Would you consider a separate pop-up dialog altogether?

-

On 1/31/2017 at 6:55 AM, Shleeva said:

I put a Feedback Node in the red rectangle and wired 0 to the Initializer Terminal and the cycle error got fixed so I was able to run the VI, but the graph wouldn't even move forward, so it doesn't work properly. And when I put an additional Feedback Node in the green box with 0 wired to the Initializer Terminal, I got a new error

This is just a guess, but I think the Control and Simulation (C&S) loop treats your code as a continuous-time model, and does some conversion behind-the-scenes to produce a discrete-time model for simulation. A feedback node breaks time continuity, so it's quite possible that the feedback nodes would interfere with the C&S loop's ability to solve your equations.

I don't have the toolkit installed so I can't play with it, but I'm not sure whether the C&S Loop can solve simultaneous differential equations as complex as yours.

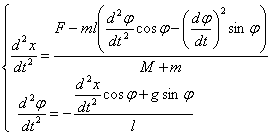

I would still do some calculus and algebra to make your equations more simulation-friendly first, before feeding them into LabVIEW. For example, it's pretty easy to get an expression for x. See:

- Finding dx/dt: http://www.wolframalpha.com/input/?i=Integrate+A-B*(d^2y%2Fdt^2*cos(y)+-+(dy%2Fdt)^2*sin(y))+with+respect+to+t

- Finding x: http://www.wolframalpha.com/input/?i=Integrate+A*t-B*(dy%2Fdt*cos(y))+%2B+C+with+respect+to+t

...where y = φ, A = F/(M+m), B = m*l/(M+m), C = constant of integration (related to initial conditions).
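Carrying both integrations through gives a closed form for x. This is a sketch that assumes F (and therefore A) is constant and uses the equation behind the first Wolfram Alpha link; D is a second constant of integration that I've introduced, which also depends on the initial conditions. The key step is recognising that the bracketed term is an exact derivative:

```latex
\begin{aligned}
\frac{d^2x}{dt^2} &= A - B\left(\frac{d^2\varphi}{dt^2}\cos\varphi - \left(\frac{d\varphi}{dt}\right)^2\sin\varphi\right)
  = A - B\,\frac{d}{dt}\!\left(\frac{d\varphi}{dt}\cos\varphi\right) \\
\frac{dx}{dt} &= A t - B\,\frac{d\varphi}{dt}\cos\varphi + C \\
x &= \frac{A}{2}t^2 - B\sin\varphi + C t + D
\end{aligned}
```

If x, φ and their first derivatives are all 0 at t = 0, then C = 0 and D = 0, so x depends only on t and φ.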

If you still want to try to get the C&S loop to process your equations unchanged, try asking at the official NI forums and see if people there know any tricks: http://forums.ni.com/

-

On 1/28/2017 at 0:52 PM, Tico_tech said:

1. I compared the distance from bottom to first edge; shown in Right_image.png and Left_image.png. The lower the difference the better the alignment (in theory).

Is this a good approach? Is there a better process for doing this?

That's a simple but valid approach, assuming that both sensors are very close together and face the same direction at all times.

The technical term for alignment is "image registration" (registration is usually the first step of "image fusion", i.e. combining the aligned images). The Advanced Signal Processing toolkit contains an example for doing image fusion -- do you have it installed?

-


On 1/28/2017 at 9:36 AM, parsec said:

I am experiencing a very odd issue when running a VI for a long period of time in the dev environment. After some time the VI goes into this slow stepthrough mode, reminiscent of how subvis are highlighted when an error is found.

The video shows that the nodes that get highlighted are those that take references as inputs. So, I'm guessing that your reference(s) became invalid somehow. I'm not sure why no error messages pop up though -- did you disable those?

Wire the error outputs of those highlighted nodes into a Simple Error Handler subVI. What do you see?

On 1/28/2017 at 9:36 AM, parsec said:

Additionally, I am encountering an issue where the main VI takes a long time to close, often crashing labview when the abort button is used. The two issues seem to be related.

It's hard to say without seeing your code in detail.

Anyway, may I ask why you use the abort button? That can destabilize LabVIEW. It's safer to add a proper "Stop" button to your code.

-

On 1/27/2017 at 4:38 PM, Shleeva said:

the Simulation loop in LabView has built-in ODE solvers (like Runge-Kutta) for different equation orders, and can include a fixed or a variable time step

That's quite cool.

Note that your system is non-linear (due to the sine and cosine functions). Do you know if those built-in ODE solvers cope well with non-linear systems?

On 1/27/2017 at 4:38 PM, Shleeva said:

That's what I tried doing next, and it did allow me to solve for φ, but it returns some weird values. And I also need to solve for x somehow, and it repeats the error in φ.

It's best to get it working for φ alone first (meaning you need to make sure all your values are non-weird), before you even consider solving for both x and φ at the same time.

One quick and dirty technique is to take your "solved" φ variable, pass it through differentiator blocks to calculate (d^2 x / d t^2), and then pass that through integrator blocks to find x. This will avoid the "member of a cycle" problem, but might cause errors to accumulate.

On 1/27/2017 at 4:38 PM, Shleeva said:

The initial conditions for x and φ should both be 0, but I just don't know how to set them in this loop.

See http://zone.ni.com/reference/en-XX/help/371894H-01/lvsim/sim_configparams/

-

8 hours ago, Jordan Kuehn said:

Looking at the equations suggests to me that the issue is not the code, but the equations themselves. They rely on each other cyclically...

That's fine in mathematics. Writing equations like this provides a concise yet complete way of describing a system. However, this format doesn't lend itself nicely to programmers' code.

On 1/25/2017 at 5:30 AM, Shleeva said:

I have to model and simulate a gantry crane in LabView for my engineer's thesis.

...

I'd really appreciate a fast and simple answer

The easiest answer for you is one that you already understand.

So tell us: What techniques have you learnt in your engineering course for solving differential equations in LabVIEW (or in any other software environment, like MATLAB or Mathematica)?

On 1/25/2017 at 5:30 AM, Shleeva said:

Here are the final equations for the model:

Since I'm not really big on all the modeling stuff like transfer functions etc. I used the simplest way I know to model this - by using summation, gain, multiplication and integrator blocks in a simulation loop and then wiring all the necessary parameters.

I haven't used the Control & Simulation loop before so I don't know what features it has for solving differential equations.

However, the first thing I'd try is to substitute the top equation into the bottom equation, and see if that allows you to solve for φ.

If not, then my analysis is below.

--------

Those are Differential Equations, which describe "instantaneous", continuous-time relationships. You can't wire them up in LabVIEW as-is to solve for x and φ.

I would first convert them into Difference Equations, which describe discrete-time relationships. Difference Equations make it very clear where to insert Feedback Nodes or Shift Registers (as mentioned by Tim_S), which are required to resolve your "member of a cycle" problem.

You'll also need to choose two things before you can simulate/solve for x and φ:

- Your discrete time step, Δt. How much time should pass between each iteration/step of your simulation?

- Your initial conditions. At the start of your simulation, where are your components, and how fast (and in which direction) are they moving?
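To make the Feedback Node / Shift Register idea concrete, here is a minimal sketch in Python (not LabVIEW) of turning a second-order differential equation into difference equations using forward Euler. It uses a hypothetical stand-in equation, a simple pendulum d²φ/dt² = -(g/l)·sin φ, rather than your crane model. Each new value is computed only from the previous step's values, which is exactly what a Shift Register or Feedback Node holds; `dt`, `phi0` and `omega0` are the two choices listed above.

```python
import math


def simulate_pendulum(phi0, omega0, dt, steps, g=9.81, length=1.0):
    """Forward-Euler difference equations for d2phi/dt2 = -(g/length)*sin(phi).

    The pendulum equation is an illustrative stand-in, not the gantry crane model.
    Returns a list of (t, phi) samples, starting at t = 0.
    """
    phi, omega = phi0, omega0          # initial conditions (angle, angular velocity)
    samples = [(0.0, phi)]
    for k in range(1, steps + 1):
        # Acceleration from the *previous* step's angle (the "shift register" value).
        alpha = -(g / length) * math.sin(phi)
        # Tuple assignment: both updates use the previous step's phi and omega.
        phi, omega = phi + omega * dt, omega + alpha * dt
        samples.append((k * dt, phi))
    return samples
```

For example, `simulate_pendulum(phi0=0.1, omega0=0.0, dt=0.01, steps=500)` simulates 5 seconds. Forward Euler is the simplest scheme and its error accumulates; a smaller Δt or a Runge-Kutta solver (like the ones built into the C&S loop) reduces that drift.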

-

8 hours ago, Filipe Altoe said:

(I hate reading EULAs)

This isn't related to EULAs or LabVIEW FPGA, but you might like this site: http://tosdr.org/

-


1 hour ago, RayR said:

Do others have trouble posting at times? Maybe I take too long to write my post? Often, when I click on "Submit Reply" I see a message "Saving", but the post does not make it..

Curious...

I've experienced this a few times in recent weeks. I then become unable to post on the original page, so I have to copy + paste my comments into a new tab.

Running Google Chrome 55.0.2883.87 m, and I often take a long time to post too.

-


On 1/18/2017 at 5:40 PM, ASalcedo said:

In PC3: CPU ussage is about 45-50%.

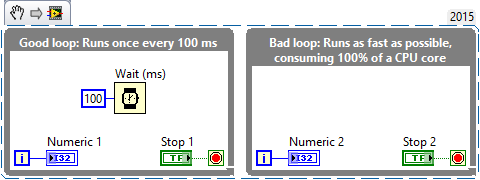

PC 3 has 2 cores, right? 50% CPU usage means that one of your cores is running at 100%. Jordan is probably correct: You have at least one while loop running wild.

Run Resource Monitor on PC 2 too. I suspect you'll find your application using 25% CPU there (1/4 cores).

On 1/18/2017 at 5:42 PM, ASalcedo said:

How can I see if a while is missing timing?

Look at your code. For each loop, tell us: How frequently does the code inside the loop run? (Is it... once every second? Once every 10 ms? Once every time a queued item is received?)

Anyway, here's a small note about loop timing:


On 1/18/2017 at 5:40 PM, ASalcedo said:

LAN 1 and 2 is about 90%.

You haven't answered the questions asked by ShaunR and hooovahh: Are your Ethernet ports 100 Mbps or 1000 Mbps? Since you are using GigE cameras, you need to use Gigabit (1000 Mbps) Ethernet ports.

On 1/18/2017 at 5:40 PM, ASalcedo said:

Memory ussage is about 40%.

This should be fine, if you don't run anything else on the PC.

-

Run your application on PC 2 and PC 3. Launch Resource Monitor.

Are you hitting the limit of any resource(s)?

-

On 1/6/2017 at 4:50 PM, shoneill said:

Never inherit a concrete class from another concrete class

One thing's not clear to me: Does that statement mean, "You should not inherit from a concrete class, ever", or does it mean "you should not make a concrete class inherit from a concrete class, but you can let an abstract class inherit from a concrete class"?

Also, before we get too deep, could you explain, in your own words, the meaning of "concrete"?

On 1/7/2017 at 1:53 AM, planet581g said:

shoneill, Mercer said something prior to the sentence you quoted that is important to include in his statement about inheritance - specifically that it is "general" advice.

I can think of a recent example where inheritance was a useful tool, but I broke from that convention. However, I would say that generally (most of the use cases I encounter) I would not want concrete classes inheriting from other concrete classes.

Agreed, I'd call it a "rule of thumb" instead of saying "never".

An example where multi-layered inheritance makes sense, which should be familiar to most LabVIEW programmers, is LabVIEW's GObject inheritance tree. Here's a small subset:

- GObject
  - Constant
  - Control
    - Boolean
    - Numeric
      - NamedNumeric
        - Enum
        - Ring
      - NumericWithScale
        - Dial
        - Knob
    - String
      - ComboBox
  - Wire

In the example above, green represents abstract classes, and orange represents concrete classes. Notice that concrete classes are inherited from by both abstract and concrete classes. Would you ("you" == "anyone reading this") organize this tree differently?
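For readers outside LabVIEW, here's a rough Python analogue of part of that tree, using `abc` to mark abstract classes. Which classes are abstract here is my own assumption for illustration (roughly: you can't drop a bare "Control" or "NamedNumeric" onto a front panel), not a statement of how LabVIEW implements them. It shows both directions: an abstract class (`NamedNumeric`) inheriting from a concrete one (`Numeric`), and a concrete class (`ComboBox`) inheriting from another concrete one (`String`).

```python
from abc import ABC, abstractmethod


class GObject(ABC):
    """Abstract root of this simplified hierarchy."""

    @abstractmethod
    def kind(self) -> str:
        """Name of the object type."""


class Control(GObject):
    """Still abstract: does not implement kind()."""


class Numeric(Control):
    """Concrete: can be instantiated directly."""

    def __init__(self, value=0.0):
        self.value = value

    def kind(self) -> str:
        return "Numeric"


class NamedNumeric(Numeric):
    """Abstract class inheriting from a concrete class (adds an abstract method)."""

    @abstractmethod
    def item_names(self) -> list:
        """Names of the enumerated items."""


class Enum(NamedNumeric):
    """Concrete again: implements the remaining abstract method."""

    def __init__(self, names, value=0):
        super().__init__(value)
        self.names = list(names)

    def item_names(self) -> list:
        return self.names

    def kind(self) -> str:
        return "Enum"


class String(Control):
    """Concrete: a plain string control."""

    def __init__(self, text=""):
        self.text = text

    def kind(self) -> str:
        return "String"


class ComboBox(String):
    """Concrete class inheriting from another concrete class."""

    def __init__(self, text="", items=()):
        super().__init__(text)
        self.items = list(items)

    def kind(self) -> str:
        return "ComboBox"
```

Trying to instantiate `Control()` or `NamedNumeric()` raises `TypeError`, while `Numeric()`, `Enum([...])`, `String()` and `ComboBox()` work.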

On 1/6/2017 at 4:50 PM, shoneill said:

How about only ever calling concrete methods from within the owning class, never from without?

What's the rationale behind this restriction?

-


8 hours ago, ShaunR said:

It's not as straightforward as that. The issues are fairly straight forward to resolve for any one version

This small part is straightforward enough, no? The process of making LabPython 64-bit friendly is orthogonal to the process of dealing with compatibility breaks in 3rd party components. I myself have not used LabPython (or even Python), so I wasn't commenting (and won't comment) on how the project should/shouldn't be carried forward.

Rolf suggested a quick and dirty fix for a specific issue (i.e. 64-bit support), which exposes a large gun that a user could shoot themselves in the foot with. I suggested an alternative quick and dirty fix that hides this gun, and asked what he (and anyone else reading this thread) thought of the idea.

8 hours ago, ShaunR said:

Rolf has already lost the battle for keeping it current, requiring significant effort with almost every release.... Why should Rolf shoulder the problems caused by the intransigence of a completely different language that is nowhere near as disciplined as he or NI are?

Unless Rolf has a business need in one of his projects, this is just a millstone caused by open source altruism. We can hope he finds the time and energy to update it but I suspect he would have done so already if that was the case. This is Rolfs very polite way of saying "here are all the problems I know about, fix it yourself if you are good enough".

Understood.

OK, it's now recorded here for posterity: 2 different suggestions on how to achieve 64-bit support, for anyone who's happy to work on LabPython.

8 hours ago, ShaunR said:

If I was in his shoes, I would make it 64 bit compliant, make all the CLFNs orange nodes and tell the users they have to use a specific version of Python or patch it themselves (it's open source after all). Then freeze it for others to champion.

That sounds sensible.

8 hours ago, ShaunR said:

since the Linux community don't see any problem with breaking ABIs at the drop of a hat and that backwards compatibility is a fairy tale;

Such blanket statements are a bit unfair...

- https://www.python.org/dev/peps/pep-0384/

- https://community.kde.org/Policies/Binary_Compatibility_Issues_With_C%2B%2B

- https://en.wikipedia.org/wiki/Linux_Standard_Base

- http://forums.ni.com/t5/Machine-Vision/IMAQdx-14-5-breaks-compatibility-with-IMAQdx-14-0/m-p/3168479

Granted, what's now in place in the Python and Linux worlds probably isn't enough to smooth things out for LabPython, but snarky remarks help nobody.

-

4 hours ago, rolfk said:

- More changes need to be made to the code to allow it to properly work in a 64 bit environment. Currently the pointer to the LabPython private management structure which also maintains the interpreter state is directly passed to LabVIEW and then treated as a typed log file refnum. LabVIEW refnums however are 32 bit integers, so a 64 bit pointer will not fit into that. The quick and dirty fix is to change the refnum to a 64 bit integer and configure all CLNs to pass it as a pointer sized variable to the shared library. But that will only work fro LabVIEW 2009 on onwards which probably isn't a big issue anymore. The bigger issue is that a simple integer will not prevent a newby user to wire just about anything to the control and cause the shared library to crash hard when it tries to access the invalid pointer..

Would you consider encapsulating the 64-bit "refnum" in an LVClass that the user can pass around?

-

1 minute ago, MikaelH said:

is this a new feature?

The reduced contrast is intentional on NI's part. There is an Idea Exchange post calling for its reversal: https://forums.ni.com/t5/LabVIEW-Idea-Exchange/Restore-High-Contrast-Icons/idi-p/3363355

-


18 hours ago, ShaunR said:

Note:

That is the BSD-2 Clause licence. There is also the BSD-3 Clause liicence which adds a clause disavowing the use of the providers' name for promotional and/or endorsement of derivative works.

And for those who like compact lists:

- https://www.tldrlegal.com/l/freebsd (2-clause, GPower's article)

- https://www.tldrlegal.com/l/bsd3 (3-clause, Shaun's highlight)

- https://tldrlegal.com/license/4-clause-bsd (4-clause, the original BSD license)

-


11 hours ago, ShaunR said:

There is also the btowc which I could do char by char

From earlier posts, it sounds to me like btowc() is simply a single-char version of mbsrtowcs(). Good to check, though.

11 hours ago, ShaunR said:

and LabVIEW has a Text->UTF8 primitive

You'd then still need to convert UTF-8 -> UTF-16 somehow (using one of the other techniques mentioned?)

11 hours ago, ShaunR said:

Don't forget I don't care about the system encoding. Only from LabVIEW strings to UTF16 regardless of how the OS sees it.

As @rolfk said, LabVIEW simply uses whatever the OS sees.

-

On 9/12/2016 at 2:37 PM, ShaunR said:

Even so. isn't that what iconv does? I'm just trying to get a feel for the equivalencies and trying to avoid bear traps because Linux isn't my native habitat.

Yes, iconv() is designed for charset conversion.

Possible bear trap (I haven't used it myself): A quick Google session turned up a thread which suggests that there are multiple implementations of iconv out there, and they don't all behave the same.

At the same time, I guess ICU would be overkill for simple charset conversion -- it's more of an internationalization library, which also takes care of timezones, formatting of dates (month first or day first?) and numbers (comma or period for separator?), locale-aware string comparisons, among others.

On 9/13/2016 at 10:29 PM, rolfk said:

wchar_t on Unix systems is typically an unsigned int, so a 32-bit unicode (UTF-32) character, which is technically absolutely not the same as UTF-16. The conversion between the two is however a pretty trivial bit shifting and masking for all but some very obscure characters (from generally dead or artificial languages like Klingon).

Also btowc() is only valid for conversion from the current mbcs (which could be UTF-8) set by the C runtime library LC_TYPE setting. Personally for string conversion I think mbsrstowc() is probably more useful, but it has the same limit about the C runtime library setting, which is process global so a nasty thing to change.

Thinking about it some more, I believe @ShaunR does want charset conversion after all. This thread has identified 2 ways to do that on Linux:

- System encoding -> UTF-32 (via mbsrtowcs()) -> UTF-16 (via manual bit shifting)

- System encoding -> UTF-16 (via iconv())
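The "manual bit shifting" step of the first route can be sketched as follows, in Python for brevity. It assumes the input is already a sequence of Unicode code points (which is typically what mbsrtowcs() yields on Linux, where wchar_t is 32 bits wide). Code points in the Basic Multilingual Plane pass through unchanged; anything above U+FFFF is split into a surrogate pair. The function name is hypothetical.

```python
def utf32_to_utf16(code_points):
    """Convert Unicode code points (UTF-32 values) to a list of UTF-16 code units."""
    units = []
    for cp in code_points:
        if cp < 0 or cp > 0x10FFFF or 0xD800 <= cp <= 0xDFFF:
            raise ValueError(f"not a valid Unicode scalar value: {cp:#x}")
        if cp <= 0xFFFF:
            units.append(cp)                    # BMP: a single, unchanged code unit
        else:
            v = cp - 0x10000                    # leaves a 20-bit value
            units.append(0xD800 | (v >> 10))    # high surrogate carries the top 10 bits
            units.append(0xDC00 | (v & 0x3FF))  # low surrogate carries the bottom 10 bits
    return units
```

For example, U+1F600 becomes the pair 0xD83D, 0xDE00. The iconv() route does the same job in a single call, but as noted above, iconv implementations don't all behave identically.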

On 9/13/2016 at 5:09 PM, rolfk said:

All in all using Unicode in a MBCS environment is a pretty nasty mess and the platform differences make it even more troublesome.

Hence the rise of cross-platform libraries that behave the same on all supported platforms.

On 9/13/2016 at 6:43 PM, ShaunR said:

With Mac support. Well. No-one has bought a Mac licence so I only care for completeness and will drop it is it looks like to much hassle.

The toolkit used to support Mac but I quietly removed it because of the lack of being able to apply the NI licence. So it still works, but I don't offer it


Do you have the NI Developer Suite? My company does, and we serendipitously found out that LabVIEW for OS X (or macOS, as it's called nowadays) is part of the bundle. We simply wrote to enquire about getting a license, and NI kindly mailed us the installer disc just like that.

-


5 hours ago, ShaunR said:

Is there a good reason to use a third party library like that rather than btowc which is in glibc?

Ah, I misread your question, sorry. I thought you wanted codepage conversion, but you just wanted to widen ASCII characters.

No extra library required, then.
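For pure 7-bit ASCII input, "widening" just means zero-extending each byte to a 16-bit code unit, because ASCII code points are identical in UTF-16. A minimal Python sketch (the function name is hypothetical) that produces little-endian UTF-16 bytes:

```python
def widen_ascii_to_utf16le(data: bytes) -> bytes:
    """Zero-extend each 7-bit ASCII byte into one little-endian UTF-16 code unit."""
    if any(b > 0x7F for b in data):
        raise ValueError("input is not pure 7-bit ASCII")
    out = bytearray()
    for b in data:
        out += bytes((b, 0x00))  # low byte first, then a zero high byte
    return bytes(out)
```

Bytes above 0x7F depend on the codepage, which is where btowc() (and its dependence on the C runtime locale setting) comes into play.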

-

ICU is a widely-used library that runs on many different platforms, including Windows, Linux and macOS: http://site.icu-project.org/

Its biggest downside is that its data libraries can be quite chunky (20+ MB for the 32-bit version on Windows). However, I don't see this as a big issue for modern desktop computers.

-

8 hours ago, Neil Pate said:

I just wanted to know if people still have a use for Action Engines (enough so to actually make one) these days.

I still use AEs for very simple event logging. My AE consists of 2 actions:

- "Init" where I wire in the log directory and an optional refnum for a string indicator (which acts as the "session log" output console)

- "Log" (the default action) where I wire in the message to log.

Then, all I have to do is plop down the VI and wire in the log message from anywhere in my application. The AE takes care of timestamping, log file rotation, and scrolling the string indicator (if specified).

Having more than 2 actions or 3 inputs makes an AE much less pleasant to use -- I find myself having to pause and remember which inputs/outputs are used with which actions. I've seen a scripting tool on LAVA that generates named VIs to wrap the AE, but that means it's no longer a simple AE.
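For readers who don't use LabVIEW, the pattern is roughly "one function that keeps private state between calls", where the state plays the role of the AE's uninitialized shift register. Below is a loose Python analogue of the 2-action logger; the function name, the `session.log` file name, and the use of a plain list as a stand-in for the string indicator are all my own assumptions, and log file rotation is left out.

```python
import datetime
import os

# Persists between calls, like the AE's uninitialized shift register.
_state = {"log_dir": None, "console": None}


def event_logger(message="", action="Log", log_dir=None, console=None):
    """Action-engine-style logger with two actions: 'Init' and 'Log' (the default)."""
    if action == "Init":
        _state["log_dir"] = log_dir
        _state["console"] = console      # optional "session log" output (a list here)
        return
    if action != "Log":
        raise ValueError(f"unknown action: {action}")
    if _state["log_dir"] is None:
        raise RuntimeError("call event_logger(action='Init', log_dir=...) first")
    stamp = datetime.datetime.now().isoformat(timespec="seconds")
    line = f"{stamp}  {message}"
    path = os.path.join(_state["log_dir"], "session.log")
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")
    if _state["console"] is not None:
        _state["console"].append(line)
```

After one `event_logger(action="Init", log_dir=...)` call at startup, the rest of the application only ever calls `event_logger("some message")`, which is what keeps the 2-action version pleasant to use.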

-

6 hours ago, Neil Pate said:

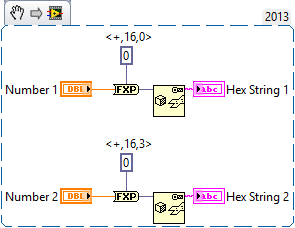

This is the bit that is making my brain hurt... I have done some reasearch and it turns out that fixed point multiplication does not really care about the format of the number, only the bit-width. So you can use exactly the same algorithm to scale <+16,0> and <+16,3>. Anybody know if this is correct?

Correct.

A few useful notes:

- Your 16-bit fixed point number is stored in memory exactly like a 16-bit integer*.
- Fixed point multiplication == Integer multiplication. The same algorithms apply to both; only the position of the binary point in the result differs (the product has as many fractional bits as both operands combined).
- The algorithms for integer multiplication don't depend on the value of the number. By extension, they don't depend on the representation of the number either.
  - e.g. Multiplying by 2 is always equivalent to shifting the bits one place to the left (assuming no overflow).

(* LabVIEW actually pads fixed point numbers to 64 or 72 bits, but I digress.)

Anyway, to illustrate:

| Bit pattern (MSB first) | Representation | Value (decimal) |
|---|---|---|
| 0000 0010 | <+,8,8> (or equivalently, U8) | 2 |
| 0000 0010 | <+,8,7> | 1 |
| 0000 0010 | <+,8,6> | 0.5 |
| 0000 0100 | <+,8,8> (or equivalently, U8) | 4 |
| 0000 0100 | <+,8,7> | 2 |
| 0000 0100 | <+,8,6> | 1 |
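The same idea in code: a minimal Python sketch (the helper names are hypothetical) that treats an unsigned LabVIEW-style <+,word length,integer word length> number as its raw integer bit pattern. Multiplication is a plain integer multiply of the raw patterns; only the interpretation of the result changes. The sketch assumes a full-precision result, where word lengths add and integer word lengths add, and ignores overflow/saturation and signed formats.

```python
def fxp_value(raw: int, word_len: int, int_len: int) -> float:
    """Interpret an unsigned raw bit pattern as <+,word_len,int_len>."""
    frac_bits = word_len - int_len
    return raw / (1 << frac_bits)


def fxp_multiply(raw_a, fmt_a, raw_b, fmt_b):
    """Multiply two unsigned fixed-point numbers given as raw ints and (word_len, int_len).

    Returns (raw_product, (word_len, int_len)) for the full-precision product.
    """
    raw_product = raw_a * raw_b                       # exactly an integer multiplication
    fmt = (fmt_a[0] + fmt_b[0], fmt_a[1] + fmt_b[1])  # so fractional bits add up too
    return raw_product, fmt
```

For example, 0000 0010 as <+,8,7> (value 1) times 0000 0100 as <+,8,6> (value 1) gives raw 8 as <+,16,13>, i.e. 3 fractional bits, so the value is 8/8 = 1. The raw multiply is identical to what you'd do for U8 × U8.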

6 hours ago, Neil Pate said:

Almost totally unrelated to LabVIEW, and is not really just a question more of just a ramble.

That's borderline blasphemy...

You can redeem yourself by getting LabVIEW involved:


-

On 8/15/2016 at 7:03 PM, drjdpowell said:

The current LabVIEW version for this JSON package is 2011, but I am considering switching to either 2013 or maybe even 2015. What versions do people need?

I'm using JSON LabVIEW with a 2013 project, but I don't need the latest and greatest library.

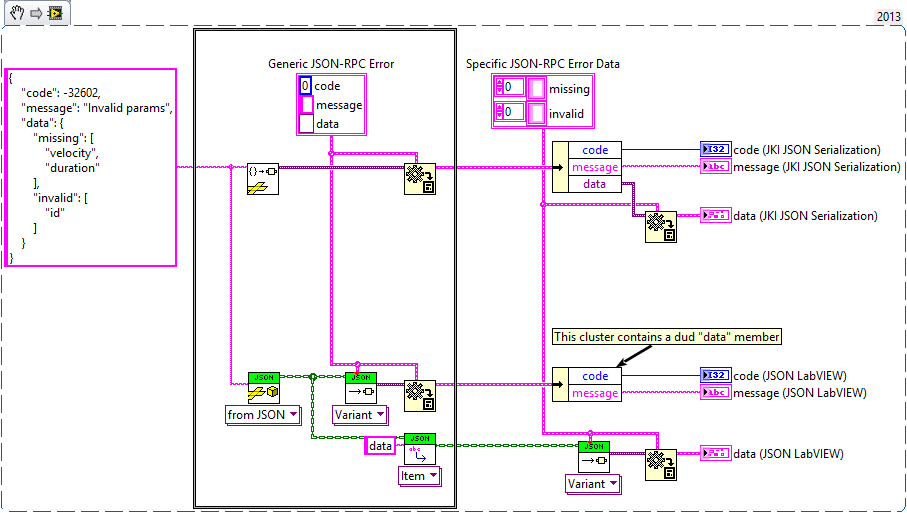

There is one feature I would love to see added though: Support for non-explicit variant conversion. JKI's recently-open-sourced library handles this very nicely:

The black frame represents a subVI boundary.

Several months ago I implemented a JSON-RPC comms library for my project, based on JSON LabVIEW. Because the "data" member can be of any (JSON-compatible) type, I had to expose the JSON Value.lvclass to the caller, who then also had to call Variant JSON.lvlib:Get as Variant.vi manually to extract the data. This creates an undesirable coupling between the caller and the JSON library.

It would be awesome if Variant JSON.lvlib:Get as Variant.vi could use the structure within the JSON data itself to build up arbitrary variants.

-

On 7/27/2016 at 10:53 PM, Phillip Brooks said:

This article does a good job of narrating the author's journey from being an OOP enthusiast to an FP advocate, and helps us understand his feelings and frustrations. However, it does a poor job of illustrating OOP's actual shortcomings/pitfalls.

Let's have a closer look at the author's arguments.

Inheritance: The Banana Monkey Jungle Problem

This is a strange example. It's like an OpenG author who decides to reuse some OpenG code (say, Cluster to Array of VData__ogtk.vi) by copying the VI into his project folder, and then is gobsmacked to discover that he needs to copy its subVIs and their subVIs too. This shouldn't be so surprising, should it…?

Anyway, it sounds like his main gripe here is with excessive dependencies (which is influenced by code design too, not just the paradigm), yet his stated solution to this problem is "Contain and Delegate" (a.k.a. composition). Sure, that eliminates the class inheritance hierarchy, but I don't see how that helps reduce dependencies. It feels like he's trying to shoehorn the "You need composition!" message into every problem he listed, just so that he can argue later that inheritance is flawed.

Inheritance: The Diamond Problem

Yes, this is a real issue that can trip up the unwary. However, the author claims that the only viable way to model a diamond relationship is to do away with inheritance ("You need composition!"). He disingenuously ignores the numerous inheritance-friendly solutions that can be found in various languages.
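As one example of an inheritance-friendly answer: Python resolves the diamond with a deterministic method resolution order (C3 linearization), and cooperative `super()` calls follow that order so the shared base class runs only once. A minimal sketch with made-up class names:

```python
class Device:
    def describe(self):
        return ["Device"]


class Scanner(Device):
    def describe(self):
        return ["Scanner"] + super().describe()


class Printer(Device):
    def describe(self):
        return ["Printer"] + super().describe()


class Copier(Scanner, Printer):  # the "diamond": two paths back to Device
    def describe(self):
        # super() follows Copier's MRO: Scanner -> Printer -> Device
        return ["Copier"] + super().describe()
```

`Copier().describe()` returns `["Copier", "Scanner", "Printer", "Device"]`; `Device` appears once. Other languages take different routes, e.g. virtual inheritance in C++, or single class inheritance plus interfaces in Java.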

Inheritance: The Fragile Base Class Problem

Yes, this is a real pitfall. This is his most solid point against inheritance.

Inheritance: The Hierarchy Problem

His main point here is, and I quote, "If you look at the real world, you’ll see Containment (or Exclusive Ownership) Hierarchies everywhere. What you won’t find is Categorical Hierarchies…. [which have] no real-world analogy".

I'd like to hear from someone who has implemented a HAL (or someone who's a taxonomist). Would you agree or disagree with the author's claim?

Encapsulation: The Reference Problem

This is another bizarre example. His logic is akin to, "An LVClass lets you have a DVR as a data member, and it lets you initialize this member by passing an externally-created DVR into its initialization VI. Therefore, an external party can modify LVClass members without using an LVClass method. Therefore, LVClasses don't support encapsulation. Furthermore, attempting to prevent external modification is inefficient (and perhaps impossible). Therefore, the whole concept of encapsulation in OOP is unviable."

This is a huge stretch, IMHO.

Polymorphism

His main point here is, "You don't need OOP to use polymorphism". This is true, but it is not a shortcoming of OOP.

Also, the author paints a picture of interfaces-vs-inheritance. They are not mutually exclusive; they can be complementary as they serve different use cases.

-


A-ha. How very sneaky. I wonder if this is a bug, and the dialog is supposed to say "The feature you just selected is only available in VIPM Pro Edition. Please choose from the following options" instead of "Error"...

Anyway, this wasn't what I hoped to hear, but at least the cause is confirmed now. Thanks for testing this for me, @LogMAN!

storing a value

in Application Design & Architecture


Hi, I wanted to clarify:

Why?