Posts posted by Rolf Kalbermatter

-

On 12/15/2023 at 5:28 PM, Jack Parmer said:

This is exactly the kind of feedback we're looking for - thank you!

In terms of a test sequencer / test orchestrator, the UI that we've been contemplating would allow you to import full Flojoy test apps (or Python scripts) as blocks on the canvas, then wire these "super blocks" together in parallel or series. The last block might generate a report and upload it to a data store. Do you think that this would be sufficient for batch test orchestration, or should we consider a different UI?

You may want to reconsider advertising this as a test sequencer competitor. Wiring together blocks is NOT a test sequencer by a long stretch. If that were the case there would be no TestStand, since LabVIEW has been able to do that for 35 years. Users of applications like TestStand expect a lot more than that: maintenance of test limits, possibly with a database backend, easy logging of results to databases, simple log and report file generation, and easy adaptation of test conditions and test flow during production. TestStand and similar applications generally still require a lot of work to get a full test application together, but they no longer require any programming for at least these sorts of things. I'm not a fan of the often hidden configuration for these things, but this is the added value proposition of TestStand and friends that its users expect. Yet another flow chart application is not going to cut it for any of them.

In terms of LabVIEW, there is obviously still a long way to go for Flojoy to get near to being a real contender in data flow programming. But I do definitely applaud you guys for the effort so far, and for the short time you have been working on this thing, it already looks quite useful. The comparison with Node-RED looks pretty apt. I always found that rather limited in many ways compared to LabVIEW. A little like a Visio flow chart with some added execution logic behind it. Potentially useful but not quite able to implement more complex program logic that has many parameters flowing from a lot of nodes to even more other nodes. Some would probably say that this is an advantage, as the complexity of a typical LabVIEW program can get overwhelming for a beginner. But I would rather have a bit too much complexity at the tips of my fingers than run into all kinds of limitations after moving from simple node flow charts to real programs.

-

AFAIK, documentation about this part of LabVIEW is only available as part of the cRIO C module development toolkit. And you had to sign a special agreement to get that. The part number is 779020-03; it currently costs € 6200 and has a delivery time of 85 days according to the NI order system, but it has no product page anymore and it is questionable whether you can find anyone at NI who even knows what an MDK agreement is. AFAIK it also included an NDA section.

The whole Elemental IO was developed for cRIO module drivers but its functionality was never really finished.

-

17 minutes ago, Neon_Light said:

Hello RolfK,

Thank you again for the great help. The terminology is not completely clear to me. Although the 9074 does not run Linux but VxWorks, I try to make a quick compare between Linux to make it easier to understand for me.

- In this screen from MAX I did click next and did install the recommended software. Is this what you mean with "the base system"? This is the same in linux as apt-get upgrade?

- I did not update the BIOS with the button on the left. Updating the bios would be going from Ubuntu 20 to Ubuntu 22?

Do I need to do a BIOS update to install and test the new version of RT Main ?

For non-Linux systems and Linux systems before 2018 or 2019 you simply go into NI MAX and install the NI CompactRIO base image for the LabVIEW version you are using to target the controller.

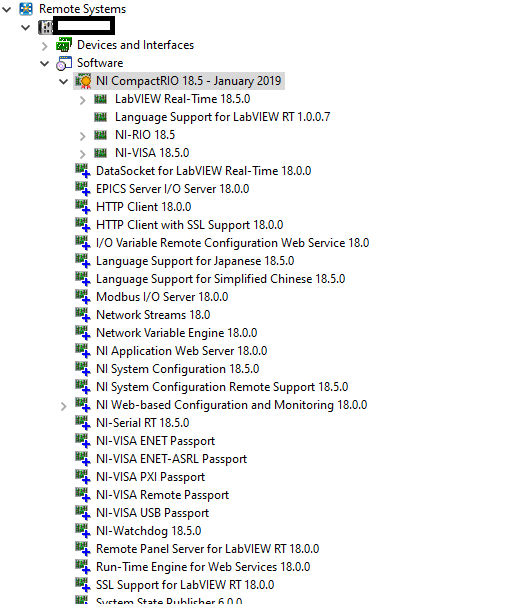

Here I installed CompactRIO 18.5, which is the version needed for the LabVIEW 2018 SP1 development system. There is a lot more installed on this controller, but most of it is not really needed and will get removed in the final system setup.

There is no "apt-get upgrade" on any NI cRIO system at all. For targets that do not run NI Linux, everything needs to be done from within NI MAX.

And in fact the base setup for the correct runtime system needs to be done even for NI Linux targets from your host machine.

Once the base system is installed on NI Linux systems, you can then use opkg (not apt-get) on the command line through an SSH link to update the base packages with possible bugfixes from the NI servers.

-

On 12/1/2023 at 10:50 AM, Neon_Light said:

Hello all,

At work there is an old 9074 which is running test software. There is also a 9148 connected to the 9074. I changed the old software and tested it in simulation; in simulation it works fine.

What I already did do:

- I got RAD working, so I can restore the old software

- I did install the packages needed for Labview 2018

- I found that I can use MAX to install new software: clicking cRIO --> Software --> Add/Remove Software gives me the option to install the LabVIEW 2018 recommended packages. I did this; it removed some files and added a lot of new files.

Now I need to deploy the new RT main to the cRIO, and there are a lot of actions I need to perform. Can someone please tell me the basic steps I need to take to deploy the new software and test it on the real hardware? Is it as simple as hitting the run button or do I need to stop the old RT main first? Is action 3 above enough, or did I have to remove the old software?

If you upgraded the cRIO base system to LabVIEW 2018 I do not see how the old rtexe could still be running. It means the chassis got restarted several times, much of it probably got wiped for good, and even if the old rtexe were still there, it could not load into the new 2018 runtime that is now on the system.

So yes for first tests it should be as simple as opening the main VI and pressing the Run button. Even if you had an old version of the application deployed to the target and set to run automatically (which would have to be done in LabVIEW 2018) LabVIEW will tell you that there is already a deployed application running on the target and that it needs to terminate that in order to upload the new VI code and then run it.

-

On 12/1/2023 at 2:07 PM, Neon_Light said:

Can I remove the LOCK file without serious consequences ?

That's the Unix way of creating inter-application mutexes. There are functions in Unix that atomically check for this file, create it if it doesn't exist, and return a status that indicates whether the file already existed (another process got the lock first) or was created (we are allowed to use the locked resource). If that process then doesn't remove the lock file when it shuts down, for instance because it crashed unexpectedly, the file remains on disk and prevents other instances of the process from starting.

If you are sure that no other process is currently running, that could have created this lock, you can indeed delete it yourself.
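For illustration, here is a minimal sketch of that pattern using the POSIX `open()` call with `O_CREAT | O_EXCL`. The function names `acquire_lock_file` and `release_lock_file` are made up for this example, and nothing here is taken from the application that created the LOCK file in question; it only shows the general mechanism.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Hypothetical helper: try to take the lock by creating the file.
 * Returns 1 if we created it (we own the lock), 0 if it already existed
 * (another process holds it, or a crashed process left it behind),
 * and -1 on any other error. */
static int acquire_lock_file(const char *path)
{
    /* O_CREAT | O_EXCL makes "check and create" one atomic step. */
    int fd = open(path, O_WRONLY | O_CREAT | O_EXCL, 0644);
    if (fd >= 0) {
        close(fd);
        return 1;
    }
    return (errno == EEXIST) ? 0 : -1;
}

/* Hypothetical helper: release the lock by deleting the file.
 * A process that crashes never gets here, which is how stale
 * lock files come about. */
static int release_lock_file(const char *path)
{
    return unlink(path);
}
```

Deleting a stale lock file by hand has the same effect as the `unlink()` call that the crashed process never got to make.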

-

But those ASM source code files are just some glue code that was initially needed for linking CINs. CINs originally used platform specific methods to use object files as loadable modules. They then had to be able to link back to the LabVIEW kernel to call those manager functions and there was no easy method to do that in standard C. CallLVRT was the calling gate that each of those functions had to go through when trying to call a LabVIEW manager function. Referencing the internal LV function table required assembly code at that point, and there were also some initialization stubs that were required. These assembly files were normally not really compiled as there were some link libraries too that did this, but I guess with 2.5, the release was a bit rushed and there were definitely files released in the cintools directory that were a bit broader than strictly needed.

With LabVIEW 5 for 32-bit platforms (2000/95/98/NT) NI replaced this custom call gate to the LabVIEW kernel with a normal module export table. On these platforms the labview.lib link library replaced the CallLVRT call gate with a simple LoadLibrary/GetProcAddress interface. With LabVIEW 6i, which sacked Watcom C and the corresponding Windows 3.1 release, the need for any special assembly files, at least for the Windows platform, was definitely history.

There were strictly speaking 3 types of external code resources. CINs which could be loaded into a CIN node, LSBs which were a special form of object file that could be loaded and linked to by CINs (it was a method to have global resources that were used by multiple CINs) and then there were DRVRs which were similar to CINs but specifically meant to be used by the Device interface nodes. DRVRs were never documented for people outside NI but were an attempt to implement an interface for non-Macintosh platforms that resembled the Macintosh Device Manager API. In hindsight that interface was notoriously troubled. Most Macintosh APIs were notorious for exposing implementation private details to the caller, great for hackers but a total pita for application developers as you had to concern yourself with many details of structures that you had to pass around between APIs. And when Apple changed something internal in those APIs it required them to make all kinds of hacks to prevent older applications from crashing, and sometimes they succeeded in that and sometimes they did not! 😀

serpdrv was a DRVR external code resource that translated the LabVIEW Device Interface node calls to the Windows COMM API.

-

Never heard anything about the complete source code being accidentally released. And I doubt that actually happened.

They did include many of the headers for all kinds of APIs in 2.5 and even 3.0, but those are just the headers, nothing more. Lots of those APIs were and still are considered undocumented for public consumption and in fact a lot of them have changed or were completely removed or at least removed from any export table that you could access.

Basically, what was documented in the Code Interface Reference Manual was and is written in stone and there have been many efforts to make sure those APIs don't change. Anything else can be and often has been changed, moved, or even completely removed. The main reason to not document more than the absolutely necessary exported APIs is twofold.

1) Documenting such things is a LOT of work. Why do it for things that you consider not useful or even harmful for average users to call?

2) Anything officially documented is basically written in stone. No matter how much insight you get later on about how useless or wrong the API was, there is no way to change it later on or you risk crashes from customer code expecting the old API or behavior.

Those .lib libraries are only the import libraries for those APIs. On Linux systems the ELF loader tries to search all the already loaded modules (including the process executable) for public functions in its export tables and only if that does not work will it search for the shared library image with the name defined in the linker hints and then try to link to that.
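As a rough illustration of resolving a function by name alone on Linux, without naming the module that exports it, the following sketch does a manual lookup with `dlopen(NULL, ...)` and `dlsym()`. This is not what the ELF loader runs internally, only an explicit version of the same idea; the helper name `find_loaded_symbol` is invented for this example, and on older glibc versions the program has to be linked with `-ldl`.

```c
#include <dlfcn.h>
#include <stddef.h>

typedef size_t (*strlen_fn)(const char *);

/* Hypothetical helper: look up a function by name among the modules that
 * are already loaded. dlopen(NULL, ...) returns a handle for the main
 * program; dlsym() on that handle also searches the shared objects that
 * were loaded at program start. */
static void *find_loaded_symbol(const char *name)
{
    void *self = dlopen(NULL, RTLD_NOW);
    if (!self)
        return NULL;
    void *sym = dlsym(self, name);
    dlclose(self);   /* only drops our reference, nothing gets unloaded */
    return sym;
}
```

A caller would cast the result to the expected prototype, for instance `strlen_fn fn = (strlen_fn)find_loaded_symbol("strlen");`, and check it for NULL before calling it.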

On Windows there is no automatic way to have imported functions link to already loaded modules just by function name. Instead the DLL has to be loaded explicitly and at that point Windows checks if that module is already loaded and simply returns the handle to the loaded module if it is in memory. The functions are always resolved against a specific module name. The import library does something along these lines and can be generated automatically by Microsoft compilers when compiling the binary modules.

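    #include <windows.h>
    /* MgErr, UHandle, noErr and loadErr are LabVIEW types and error codes */

    HMODULE gLib = NULL;

    /* Find the already loaded module that exports the LabVIEW manager functions */
    static MgErr GetLabVIEWHandle()
    {
        if (!gLib)
        {
            gLib = LoadLibraryA("LabVIEW.exe");
            if (!gLib)
            {
                gLib = LoadLibraryA("lvrt.dll");
                if (!gLib)
                {
                    /* trying to load a few other possible images */
                }
            }
        }
        if (gLib)
            return noErr;
        return loadErr;
    }

    /* Stub with the same name and signature as the real export */
    MgErr DSSetHandleSize(UHandle h, size_t size)
    {
        MgErr (*pFunc)(UHandle h, size_t size);
        MgErr err = GetLabVIEWHandle();
        if (!err)
        {
            pFunc = (MgErr (*)(UHandle, size_t))GetProcAddress(gLib, "DSSetHandleSize");
            if (pFunc)
            {
                return pFunc(h, size);
            }
        }
        return err;
    }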

This is basically more or less what is in the labview.lib file. It's not exactly like this but gives a good idea. For each LabVIEW API (here the DSSetHandleSize function) a separate obj file is generated and they are then all put into the labview.lib file.

Really not much to be seen in there.

In addition the source code for 3.0 only compiled with the Apple CC, Metrowerks for Apple, Watcom C 9.x and the bundled C compiler for SunOS. None of them had ever heard anything about 64-bit CPUs, which were still some 10 years in the future. And none was even remotely able to compile even C89 conformant C code. The LabVIEW source code made a lot of effort to be cross platform, but the 32-bit pointer size was deeply ingrained in a lot of code and required substantial refactoring to make 64-bit compilation possible for 2009. The code as is from 3.0 would never compile in any recent C compiler.

-

1 hour ago, ShaunR said:

I love Rolf's eggcorns. I never point them out because he knows my language better than I do.

Interesting word 😀. Learned a new thing and that this was only invented in 2003.

As to knowing English better than you do, you definitely give me more praise than I deserve.

-

20 minutes ago, ShaunR said:

Don't have that problem with dynamic typing.

Typecasting is the "get out of jail" card for typed systems. This one seem familiar?

    linger Lngr={0,0};
    setsockopt(Socket, SOL_SOCKET, SO_LINGER, (char *)&Lngr, sizeof(Lngr));
    DWORD ReUseSocket=0;
    setsockopt(Socket, SOL_SOCKET, SO_REUSEADDR, (char*)&ReUseSocket, sizeof(ReUseSocket));

Of course it looks familiar. When not programming LabVIEW (or the occasional Python app) I program mainly in C. It's easy to typecast and easy to go very much ashtray. C tries to be strictly typed and then offers typecasting where you can typecast a lizard into an elephant and back without any compiler complaints 😀. Runtime behavior however is an entirely different topic 🤠

-

7 hours ago, Porter said:

Linux 32-bit support has been axed. There is no 32-bit LabVIEW for linux since 2016 if I'm not mistaken.

I was just recently trying to find out where the cutoff point is. In my memory 2016 was the version that stopped with 32-bit support. But looking on the NI download page it claims that 2016 and 2017 are both 32-bit and 64-bit.

But that download page is riddled with inconsistencies.

For 2017 SP1 for Linux you can download a Full and Pro installer which are only 64-bit. Oddly enough the Full installer is larger than the Pro.

The 2017 SP1 Runtime installer claims to support both 32-bit and 64-bit!

For 2017 and 2016 only the Runtime installer is downloadable plus a Patch installer for the 2017 non-SP1 IDE, supposedly because they had no License Manager integration before 2017 SP1 on non-Windows platforms. Here too it claims the installer has 32-bit and 64-bit support.

So if the information on that download page is somewhat accurate, the latest version for Linux that was still supporting 32-bit installation was 2017. 2017 SP1 was apparently only 64-bit.

Of course it could also be that the download page is just indicating trash. It's not the only thing on that page that seems inconsistent.

-

1 minute ago, ShaunR said:

Oddly specific. I would want a method of being able to coerce to a type for a specific line of code where I thought it necessary (for things like precision) but generally...bring it on. After all. In C/C++ most of the time we are casting to other types just to get the compiler to shut up.

Easy typecasting in C is the main reason for a lot of memory corruption problems, after buffer overflow errors, which can be also caused by typecasting. Yes I like the ability to typecast but it is the evil in the room that languages like Rust try to avoid in order to create more secure code.

Typecasting and secure code are virtually exclusive things.
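A small sketch of why a cast that compiles cleanly can still mean something very different at runtime. It assumes `float` is a 32-bit IEEE 754 value, which holds on all common desktop platforms but is not guaranteed by the C standard; the function names are made up for this example.

```c
#include <stdint.h>
#include <string.h>

/* Value conversion: the compiler converts 1.0f to the integer 1. */
static uint32_t convert_value(float f)
{
    return (uint32_t)f;
}

/* Reinterpretation: the same four bytes read as an integer.
 * memcpy is used here because dereferencing (uint32_t *)&f would
 * compile without complaint but break C's aliasing rules. */
static uint32_t reinterpret_bits(float f)
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits);
    return bits;
}
```

For `1.0f` the first function returns 1 and the second returns 0x3F800000. A pointer cast gives you the second behavior while often looking like the first, and the compiler will not warn about the difference.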

-

On 11/12/2023 at 4:00 PM, dadreamer said:

As shown in the code above, when the termination is activated, the library reads data one byte at a time in a do ... while loop and tests it against the term char / string / regular expression on every iteration. I can't say how good that PCRE engine is as I never really used it.

It's the same that LabVIEW adopted many years ago when they added the PCRE function to the string palette in addition to their own older Match Pattern function that was obviously Perl inspired (and likely generated with Bison Yacc), but more limited (not necessarily a bad thing, PCRE is a real beast to tame 😀). Not sure about the exact version used in LabVIEW but since it appeared around LabVIEW 8.0 I think, it would be likely version 6.0 or maybe 5.0, although I'm sure they upgraded that in the meantime to a newer version and maybe even PCRE2.
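The byte-at-a-time read with a termination character described in the quote can be sketched roughly as follows. This is not the code of the library under discussion; the byte source callback and all names are assumptions made for this example, and only the simple single character case is shown, not the string or regular expression variants.

```c
#include <stddef.h>

/* Hypothetical byte source: returns 1 and stores a byte, or 0 at end of data. */
typedef int (*read_byte_fn)(void *ctx, unsigned char *out);

/* Read one byte at a time until term is seen, the buffer is full, or the
 * source runs dry. The terminator is stored too. Returns the bytes stored. */
static size_t read_until_term(read_byte_fn read_byte, void *ctx,
                              unsigned char term,
                              unsigned char *buf, size_t cap)
{
    size_t n = 0;
    unsigned char b;
    while (n < cap && read_byte(ctx, &b)) {
        buf[n++] = b;
        if (b == term)
            break;
    }
    return n;
}

/* Simple in-memory source for trying the loop without any hardware. */
struct mem_source { const unsigned char *data; size_t len, pos; };

static int mem_read_byte(void *ctx, unsigned char *out)
{
    struct mem_source *s = ctx;
    if (s->pos >= s->len)
        return 0;
    *out = s->data[s->pos++];
    return 1;
}
```

Testing every single byte is what makes termination handling expensive, and it only gets more so when each iteration has to run a regular expression match instead of one comparison.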

-

On 8/19/2022 at 3:44 PM, AutoMeasure said:

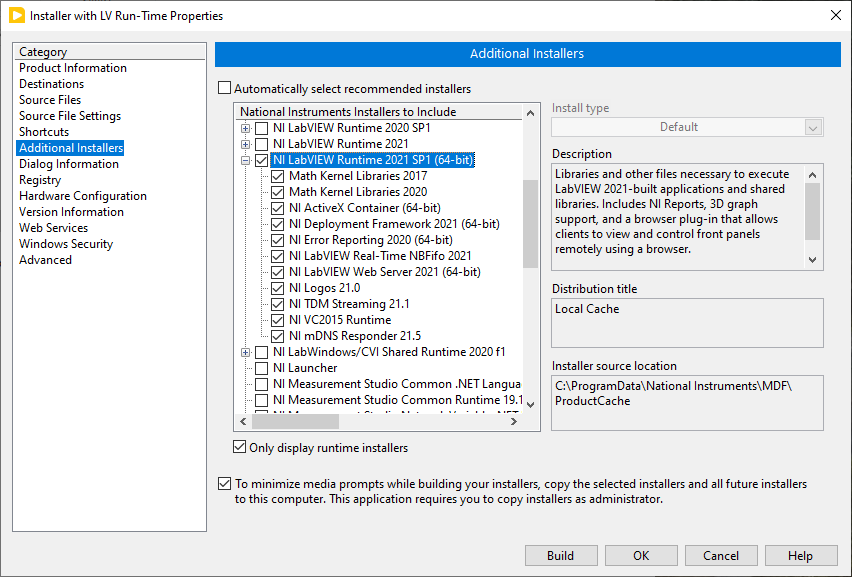

Hello Martin, thanks very much! Back in 2008 I ceased the practice of including LabVIEW Run-Time (and other drivers) in my app installer, and have been installing those separately. I forgot about the run-time components selection, thanks for pointing that out, I'll try that. For the app today, I don't need any of the checked subcomponents below.

You actually will need the NI VC2015 Runtime or another compatible Microsoft Visual C Runtime. Since the Visual C Runtime 2015 and higher is actually backwards compatible, it is indeed not strictly needed for Windows 10 or 11 based systems since they generally come with a newer one. However that is not guaranteed. If your Windows 10 system is not meticulously updated, it may have an older version of this Runtime Library installed than your current LabVIEW 2021 installation requires. And that will not work; it's only backwards compatible, meaning a newer installed version can be used by an application compiled towards an older version, not the other way around. You mentioning that the system will be an embedded system makes me think that it is neither a state of the art latest release, nor that it will be regularly updated.

-

20 hours ago, Dave21 said:

I am trying to setup calling some simple python scripts from LabVIEW 7.1 using labpython 1.2-2. I installed labpython and python 2.7, but cannot get the script to work.

Keep getting errors like PYTHON New Session__ogtk.vi->PYTHON Symple Execute__ogtk.vi or LabVIEW: LabVIEW cannot initialize the script server.

in PYTHON Test Script Node__ogtk.vi

I assume I don't have something configured. Are there instructions or can someone let me know what I am missing?

Also tried installing python 2.4 - same issue.

Never mind - Resolved issue by pointing to the correct directory to load the dll. On 64-bit systems the directory is syswow64.

LabPython needs to know which Python engine to use. It has some heuristics to try to find one but generally that is not enough.

But as Neil says: for some things, old-timers can have their charm, but in software you are more and more challenging the Gods by using software that is actually 20 years old or more!!!

-

3 minutes ago, ShaunR said:

None of that is a solution though; just excuses of why NI might not do it.

That's because I don't have a solution that I would feel comfortable sharing. It either ends up as a not so complicated one-off solution for a specific case, or as some very complicated, more generic solution that nobody in his right mind would ever consider touching, even with a 10 foot pole.

-

6 minutes ago, ShaunR said:

What I think we can agree on is that the inability to interface to callback functions in DLL's is a weakness of the language. I am simply vocalising the syntactic sugar I would like to see to address it. Feel free to proffer other solutions if events is not to your liking but I don't really like the .NET callbacks solution (which doesn't work outside of .NET anyway).

Unfortunately one of the problems with letting an external application invoke LabVIEW code, is the fundamentally different context both operate in. LabVIEW is entirely stackless as far as the diagram execution goes, C is nothing but stack on the other hand. This makes passing between the two pretty hard, and the possibility of turnarounds where one environment calls into the other to then be called back again is a real nightmare to handle. In LabVIEW for Lua there is actually code in the interface layer that explicitly checks for this and disallows it, if it detects that the original call chain originates in the Lua interface, since there is no good way to yield across such boundaries more than once. It's not entirely impossible but starts to get so complex to manage that it simply is not worth it.
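The kind of check mentioned above, refusing a second entry while a call from the other environment is still active, can be sketched as a simple guard. This is not the LabVIEW for Lua code, just an illustration of the idea; all names are hypothetical and the global flag makes it single threaded only.

```c
#include <stddef.h>

enum { CALL_OK = 0, CALL_REENTRANT = -1 };

/* Set while a call that came in from the foreign environment is active. */
static int g_inside_foreign_call = 0;

typedef int (*host_fn)(void *arg);

/* Entry point used by the foreign side (for example a script interpreter)
 * to run host code. A second entry while the first one is still active
 * is refused rather than attempted. */
static int call_into_host(host_fn fn, void *arg)
{
    if (g_inside_foreign_call)
        return CALL_REENTRANT;
    g_inside_foreign_call = 1;
    int result = fn(arg);
    g_inside_foreign_call = 0;
    return result;
}

/* A host function that (wrongly) tries to come back in through the gate. */
static int nested_attempt(void *arg)
{
    (void)arg;
    return call_into_host(nested_attempt, NULL);
}

/* A well behaved host function. */
static int plain_work(void *arg)
{
    return *(int *)arg + 1;
}
```

Calling `call_into_host(nested_attempt, NULL)` returns `CALL_REENTRANT` instead of nesting further, while ordinary calls such as `plain_work` keep working before and after.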

It's also why .Net callbacks result in locked proxy callers in the background, once they were invoked. LabVIEW handles this by creating a proxy caller in memory that looks and smells like a hidden VI but is really a .Net function and in which it handles the management of the .Net event and then invokes the VI. This proxy needs to be protected from .Net garbage collection so LabVIEW reserves it, but that makes it stick in a locked state that also keeps the according callback VI locked. The VI also effectively runs out of the normal LabVIEW context. There probably would have been other ways to handle this, but none of them without one or more pretty invasive drawbacks.

There are some undocumented LabVIEW manager functions that would allow calling a VI from C code, but they all have one or more difficulties that make them a poor choice for normal LabVIEW users, even if this is carefully hidden in a library.

-

1 hour ago, ShaunR said:

Of course. Currently we cannot define an equivalent to the "Discard?" output terminal. They'd have to come up with a nice way to enable us to describe the callback return structure.

And that is almost certainly the main reason why it hasn't been done so far. Together with the extra complication that filter events, as LabVIEW calls them, are principally synchronous. A perfect way to make your application blocking and possibly even locking if you start to mix such filter events back and forth.

Should LabVIEW try to prevent its users from shooting themselves in their own feet?

No of course not, there are already enough cases where you can do that, so one more would not be a catastrophe. But that does not mean that it MUST be done, especially when the implementation for that is also complex and requires quite a bit of effort.

-

It's a general programming problem that exists in textual languages too, although without the additional problem of tying components together in the same strict manner as with LabVIEW strict typedefs used in code inside different PPLs.

But generally you need to have a strict hierarchical definition of typedefs in programming languages like C too, or sooner rather than later you end up in header dependency hell as well. More modern programming languages tried to solve that by dynamic loading and linking of code at runtime, which has its own difficulties.

LabVIEW actually does that too but at the same time it also has a strict type system that it enforces at compile time, and in that way mixes some of the difficulties from both worlds.

One possible solution in LabVIEW is to make classes for all your data and pass them around like that. That's very flexible but also very heavy handed. It also easily destroys performance if you aren't very careful how you do it. One of the reasons LabVIEW can be really fast is that it works with native data types, not some more or less complex data type wrappers.

-

38 minutes ago, ShaunR said:

There could be no argument against inclusion, IMO.

Maybe there isn't, other than that you and me and anyone else using LabVIEW never really were asked when they did their "reliable independent" programmer questioning. Trying to draw conclusions from a few 1000 respondents that were not carefully selected among various demographic groups is worse than a guesstimate.

Also most LabVIEW programmers may generally have better things to do than filling out forms to help some questionable consulting firms draw their biased conclusions to sell to the big world as their wisdom. 😀

-

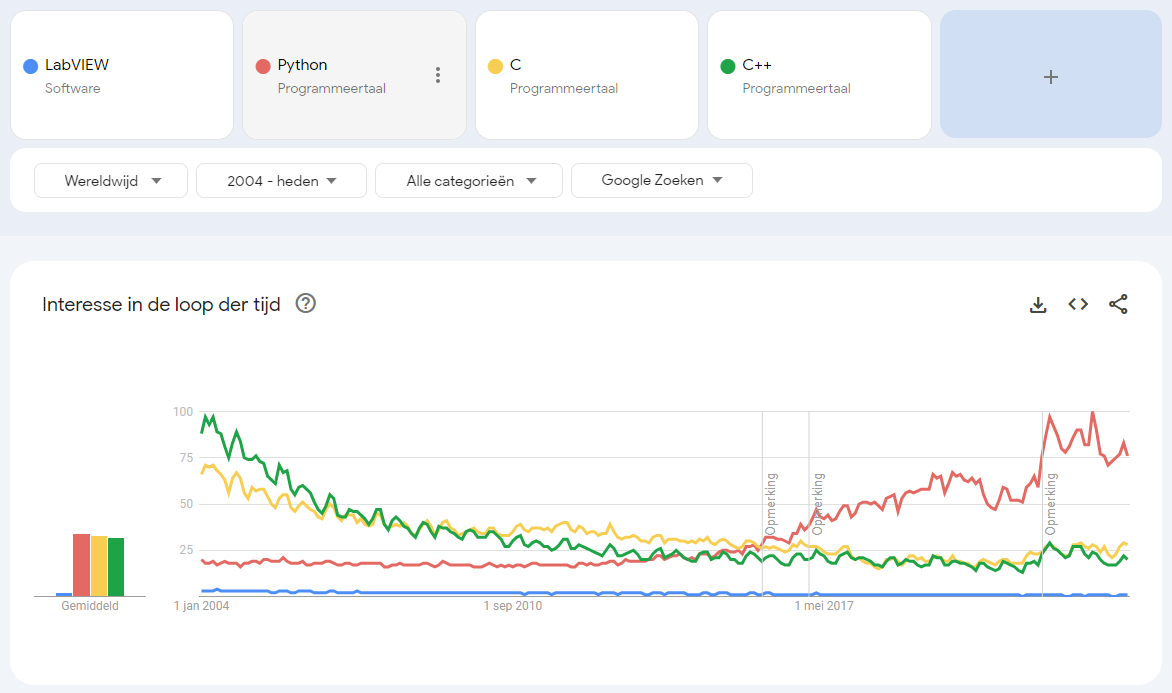

In Google Trends LabVIEW is considered a software, while the others are considered programming languages. Many programming language comparisons don't consider LabVIEW as they see it as a vendor software. On the other hand, G is usually associated with something else. So LabVIEW falls through the cracks regularly.

On the other hand, your statistics website is also not quite consistent. I would consider Delphi also more the IDE than a programming language. The language would really be (Object) Pascal.

And HTML/CSS as programming feels also a bit off, can I enter Microsoft Word and Excel too, please? Or LaTeX for that matter? 😀

Rust on the other hand for sure is going to grow for some time.

-

It is more complex than that. First it is a trend so it is relative to some arbitrary maximum.

Second if you combine multiple items the trend suddenly looks a little different. Interesting that C started lower than C++ back in 2004 but seems to be now slightly higher than C++. It's obvious that LabVIEW always has been a minor player in comparison to these programming languages.

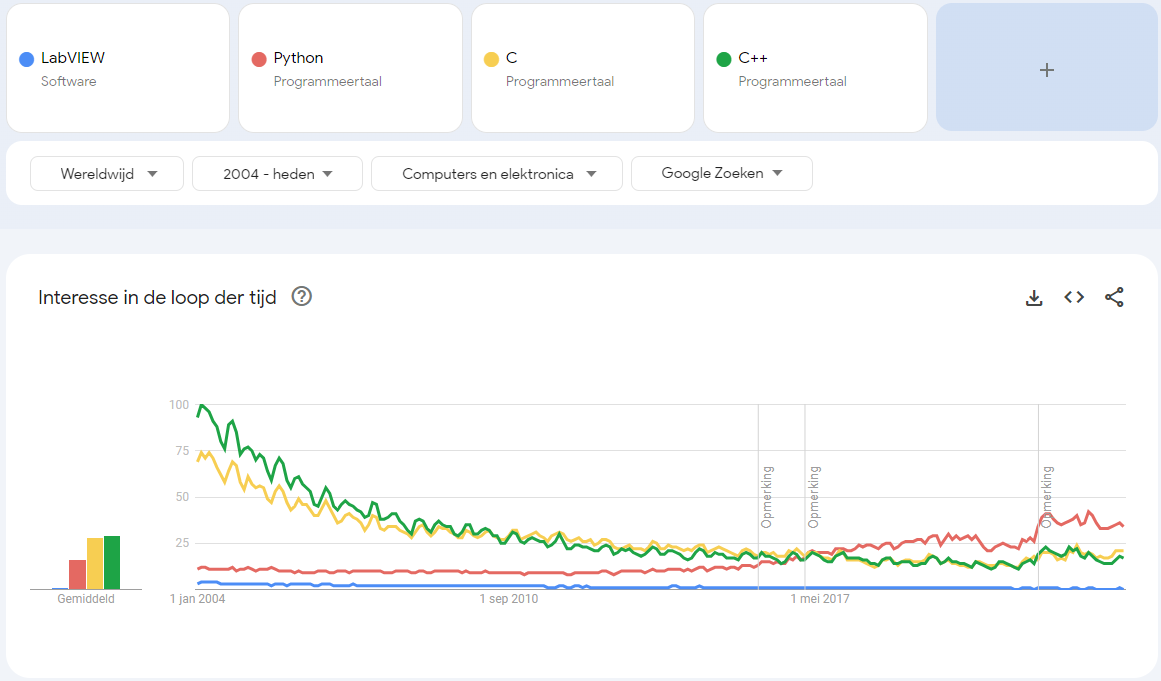

And if you limit it to computers and electronics as search categories it looks again different:

-

4 hours ago, hooovahh said:

Every year I have to ping Michael about adding the new version of LabVIEW to the selectable drop down. It is something on the admin side, not moderator side. I'm guessing he just wanted to save getting bothered, and added 2024 when he added 2023, believing that there would be a LabVIEW 2024 eventually.

I'm fairly sure his belief will be proven right. What it will bring in terms of new features will be anyone's guess.

-

18 hours ago, Bryan said:

This also appears to have been released back in 2016 - so I'm not sure if there are any compatibility issues that may arise with later versions of LabVIEW (e.g. LabVIEW 2024 as shown under your avatar).

LabVIEW 2024? Where did you get that??😁 I sense someone from a movie from Christopher Nolan has been posting here. 😀

-

On 11/3/2023 at 9:49 PM, smarlow said:

Maybe separate the front panel and diagram into different files/entities so that the GUI can be used with other programming languages in addition to G.

That's what LabWindows/CVI was. And yes, the whole GUI library from LabWindows/CVI was under the hood the LabVIEW window manager from ca. LabVIEW 2.5! My guess (not hindered by any knowledge of the real code) is that at least 95% of the window manager layer in LabVIEW 2023 is still the same. And I'm not expecting anyone from NI to chime in on that, but that LabWindows/CVI used a substantial part of the LabVIEW low level C code is a fact that I know for sure. But look where that all went! LabWindows/CVI 2020 was the latest version NI released, and while they have mostly refrained from publicly commenting on it, there were multiple semi-private statements from NI employees to clients that further development of LabWindows/CVI has not just been put in the refrigerator but definitely has been stopped.

As to AI as promoted by OpenAI and friends at this point: It has very little to do with real AI. It's however a very good hype to raise venture capital for many useless things. Most of it will be never ever talked about again after the inventor went off with the money or it somehow vaporized in other ways. It feels a lot like Fuzzy Logic some 25 years ago. Suddenly everybody was selling washing machines, coffee makers and motor controllers using Fuzzy Logic to achieve the next economical and ecological breakthrough. At least there was a sticker on the device claiming so, but if the firmware contained anything else than an on-off controller was usually a big question. People were screaming that LabVIEW was doomed because there was no Fuzzy Logic Toolkit yet (someone in the community created eventually one but I'm sure they sold maybe 5 or so licenses if they were really lucky). Who has heard for the last time about Fuzzy Logic?

Sure AI, once it is real AI, has the potential to make humans redundant. In the '90s of the last century there was already talk about AI, because a computer could make some model based predictions that a programmer first had to enter. This new "AI" is slightly better than what we had back then, but an LLM is not AI, it is simply a complex algorithmic engine.

labVIEW2018 build EXE reports an error!

in LabVIEW General

Posted · Edited by Rolf Kalbermatter

More importantly the path to the DAQNavi.dll definitely is wrong! Yes it tries to find the DLL, which might or might not be there.

No, it should NOT try to copy it into your application build.

Any DLL located in the System folder got installed there by its respective installer. And should be placed there by that installer on any system you want to run an application that uses that DLL.

LabVIEW has a particular treatment of the DLL library name in the Call Library Node. If the path specified there is only the shared library name without any path, it will let the OS search for the DLL and Windows will automatically find it in the correct System directory. And the Application Builder will treat such a library name as an indication to NOT include the DLL in the build. This CAN be important for custom DLLs that get installed in the System folder. It is ABSOLUTELY paramount to do for Windows standard DLLs. If the path is not the library name alone, the Application Builder will try to copy the DLL to the build folder too, and for Windows DLLs that means that the application will try to load that "private" copy of the DLL, and that goes catastrophically wrong for most Windows DLLs, as they rely on being located in the canonical System directory or all hell breaks loose.

Try to edit all Call Library Nodes that use that DLL to only specify the DLL name alone without any path. The path usually looks like reverting back to full path, but that is a sort of quirk in the Call Library Node dialog. LabVIEW generally will attempt to update the path to where it actually got the DLL loaded from, yet it still stores the former user entered path in the VI itself.
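If you keep a list of the library names entered in your Call Library Nodes, the "name only, no path" rule is easy to check mechanically. The helper below is purely hypothetical and has nothing to do with how LabVIEW or the Application Builder implement this; it merely encodes the rule as described above.

```c
#include <string.h>

/* Hypothetical helper: returns 1 if spec is a file name only (no directory
 * part), else 0. Both '\\' and '/' count as separators, and a ':' as in
 * a drive prefix such as "C:" also counts as a path component. */
static int is_bare_library_name(const char *spec)
{
    if (spec == NULL || spec[0] == '\0')
        return 0;
    return strpbrk(spec, "\\/:") == NULL;
}
```

With this, `"DAQNavi.dll"` passes, while `"C:\\Windows\\System32\\DAQNavi.dll"` is flagged as an entry that would make the Application Builder copy the DLL into the build.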

And are you using 64-bit LabVIEW 2018? Or a 32-bit Windows system? Otherwise that path is anyhow wrong. On a 64-bit Windows system, C:\Windows\System32 is for 64-bit DLLs. Unless your LabVIEW system is 64-bit too, that DLL in that folder definitely should not be the right one for your LabVIEW system. If the according installer put a 32-bit DLL in there, which would be pretty hard thanks to Windows path virtualization, this same Windows path virtualization will make it impossible for LabVIEW to reference that DLL there.