Posts posted by Rolf Kalbermatter

Posts: 3,785 · Days Won: 243

-

2 hours ago, ShaunR said:

Yes. But he will be populating the array data inside the DLL, so if he memcopies u64s into the array it's likely little endian (on Intel). When we MoveBlock out, the bytes may need manipulation to get them into big endian for the type cast. Ideally, the DLL should handle the endianness internally so that we don't have to manipulate in LabVIEW. If I'm wrong on this then that's a bonus.

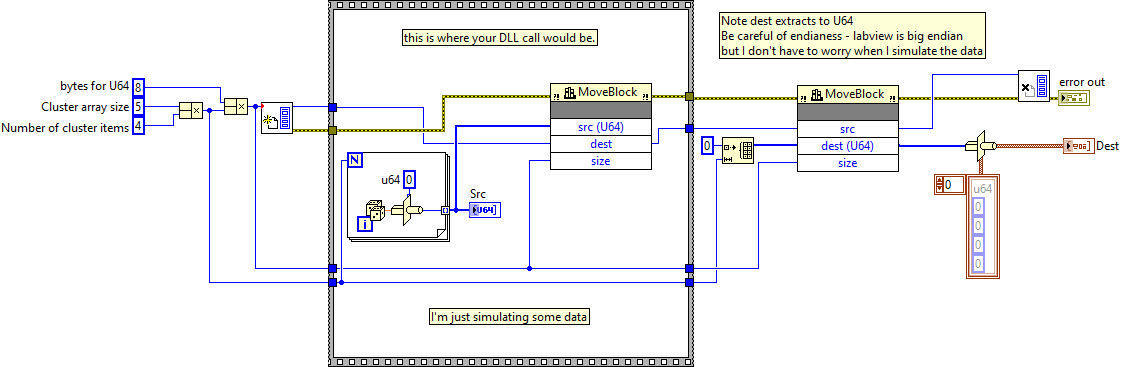

No! The DLL operates on native memory, just as LabVIEW itself does. There is no endianness disparity between the two. Only when you start to work with flattened data (with the LabVIEW Typecast, not a C typecast) do you have to worry about LabVIEW's preferred Big Endian format. The issue is specific to the LabVIEW Typecast (and to the old flatten functions that did not let you choose the endianness). LabVIEW started on Big Endian platforms, and hence its flatten format is Big Endian. A fixed format is needed so that LabVIEW can read and write flattened binary data independent of the platform it runs on. All flattened data is endian-denormalized on import, which means it is converted to whatever endianness the current platform uses, so that LabVIEW can work on the data in memory without having to worry about the original byte order. It is normalized again when the data is exported to a flattened format. But all the numbers you have on your diagram are always in the native format for that platform!

Your assumption that LabVIEW somehow always operates in Big Endian format would be a performance nightmare, as it would need to convert every numeric value every time it wants to do some arithmetic or similar on it. That would be terribly slow! Instead, LabVIEW defines an external flattened format for data (which happens to be Big Endian) and only does the swapping whenever that data crosses the boundary of the currently running process, that is, when streaming data over some byte channel, be it file I/O, the network or a memory byte stream.

And yes, when writing a VI to disk (or streaming it over the network, to download it to a real-time system for instance), all numeric data in it is in fact normalized to Big Endian, but when it is loaded into memory everything is converted back to whatever endianness is appropriate for the current platform.
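To make the distinction concrete, here is a minimal C sketch (not LabVIEW's actual implementation; all function names are hypothetical). Copying memory byte for byte, which is what memcpy and MoveBlock do, never reorders bytes, so the value reads back unchanged on any host. Only an explicit flatten/unflatten step converts to and from a Big Endian byte stream at the boundary.

```c
#include <stdint.h>
#include <string.h>

/* Copy a value byte for byte, as memcpy or MoveBlock would: the bytes stay in
   the host's native order, so reading them back yields the same number. */
uint64_t native_roundtrip(uint64_t value)
{
    unsigned char raw[sizeof value];
    uint64_t out;
    memcpy(raw, &value, sizeof value);
    memcpy(&out, raw, sizeof out);
    return out;
}

/* Flatten: write the value most significant byte first (Big Endian),
   independent of the host byte order. */
void flatten_u64_be(uint64_t value, unsigned char out[8])
{
    for (int i = 7; i >= 0; --i) {
        out[i] = (unsigned char)(value & 0xFF);
        value >>= 8;
    }
}

/* Unflatten: rebuild the native value from a Big Endian byte stream. */
uint64_t unflatten_u64_be(const unsigned char in[8])
{
    uint64_t value = 0;
    for (int i = 0; i < 8; ++i)
        value = (value << 8) | in[i];
    return value;
}
```

The shifts operate on values, not on memory, so the flatten functions give the same byte stream on little and big endian hosts; that is the property a fixed external format needs.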

And even if you use Typecast, it will only swap elements if the element size on the input side doesn't match the element size on the output side, for instance from a Byte Array (or String, which unfortunately is still just syntactic sugar for a Byte Array) to something else. Try a Typecast from an (u)int32 to a single-precision float: LabVIEW won't swap bytes, since the element size on both sides is the same! That even applies to arrays of (u)int32 to arrays of single-precision floats (or between (u)int64 and double-precision floats). Yes, it may seem unintuitive when swapping happens and when it doesn't, but it is actually very sane and logical.
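The rule above can be emulated in C as a rough sketch (hypothetical function names; this models the described behaviour and is not NI code). Same element size means a plain bit reinterpretation; bytes to a wider element means the bytes are read as flattened, Big Endian data.

```c
#include <stdint.h>
#include <string.h>

/* Same element size (uint32 -> float): the Typecast behaves like a plain
   bit reinterpretation, with no byte swapping. Assumes 32-bit IEEE 754 float. */
float typecast_u32_to_sgl(uint32_t bits)
{
    float f;
    memcpy(&f, &bits, sizeof f);
    return f;
}

/* Different element size (bytes -> uint32): the byte stream is treated as
   flattened data, i.e. Big Endian, regardless of the host byte order. */
uint32_t typecast_bytes_to_u32(const unsigned char bytes[4])
{
    return ((uint32_t)bytes[0] << 24) | ((uint32_t)bytes[1] << 16) |
           ((uint32_t)bytes[2] << 8) | (uint32_t)bytes[3];
}
```

For example, the bytes 3F 80 00 00 give the integer 0x3F800000, and reinterpreting that integer as a float gives 1.0 on any IEEE 754 platform.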

Quote

I think this can also be done directly by MoveBlock using Adapt to Type (for a CLFN) instead of the Typecast, but I think you'd need to guarantee Big Endian and use a For Loop to create the cluster array (speed?).

Indeed, and no, there is no problem with endianness here at all. The only things you need to make sure of are that the array of clusters is pre-allocated to the size needed to copy the elements into, and that you keep track of three different sizes here:

1) the size of the uint64 array, let's call it n

2) the size of the cluster array, which must be at least (n + e - 1) / e (integer division, i.e. n / e rounded up), with e being the number of u64 elements in the cluster

3) the number of bytes to copy, which will be n * 8
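The three sizes can be sketched in C as follows, assuming a hypothetical cluster of three u64 elements (e = 3). The cluster array is pre-allocated (and zeroed) to the rounded-up count, and exactly n * 8 bytes are copied in native byte order, with no endianness handling at all.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical cluster of three u64 elements (e = 3). */
typedef struct {
    uint64_t a, b, c;
} Cluster3;

/* The byte copy below relies on the cluster being a plain run of u64s. */
_Static_assert(sizeof(Cluster3) == 3 * sizeof(uint64_t), "no padding expected");

#define ELEMS_PER_CLUSTER (sizeof(Cluster3) / sizeof(uint64_t))

/* Number of clusters needed to hold n u64 values: (n + e - 1) / e. */
size_t clusters_needed(size_t n)
{
    return (n + ELEMS_PER_CLUSTER - 1) / ELEMS_PER_CLUSTER;
}

/* Copy n u64 values into a freshly allocated, zero-filled cluster array.
   Returns NULL on allocation failure; the caller frees the result. */
Cluster3 *u64_to_clusters(const uint64_t *src, size_t n, size_t *count_out)
{
    size_t count = clusters_needed(n);
    Cluster3 *dst = calloc(count ? count : 1, sizeof *dst);
    if (dst == NULL)
        return NULL;
    memcpy(dst, src, n * sizeof(uint64_t));  /* n * 8 bytes, native order */
    *count_out = count;
    return dst;
}
```

With n = 7 this allocates 3 clusters; the last one holds the 7th value followed by two zero-filled elements, which is why the array has to be pre-allocated rather than sized to exactly n * 8 bytes.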

-

2 hours ago, ShaunR said:

You can forget about that comment about endianness. MoveBlock is not endianness aware and operates directly on native memory. Only if you incorporate the LabVIEW Typecast do you have to consider LabVIEW's Big Endian preference. For Flatten and Unflatten you can nowadays choose which endianness LabVIEW should use, and the same applies to Binary File I/O. TCP used to have an unreleased FlexTCP interface that worked like Binary File I/O, but they never released it, most likely figuring that using Flatten and Unflatten together with TCP Read and Write actually does the same.

PS: A little nitpick here: the size parameter for MoveBlock is defined as size_t. This is a 32-bit unsigned integer in 32-bit LabVIEW and a 64-bit unsigned integer in 64-bit LabVIEW.
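Since the real MoveBlock lives in the LabVIEW runtime (declared in extcode.h), here is a hypothetical stand-in with the same parameter shape, to show two points: the byte count is a size_t, and the order is source first, destination second, which is the reverse of memcpy. In a Call Library Function Node, the matching choice for the size parameter would be an unsigned pointer-sized integer. Using memmove in the stand-in is my own choice; no assumption is made here about whether the real MoveBlock tolerates overlapping ranges.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in shaped like MoveBlock(ps, pd, size):
   ps = source, pd = destination, size = number of bytes as size_t.
   Note the argument order is the reverse of memcpy(dst, src, n). */
void move_block_standin(const void *ps, void *pd, size_t size)
{
    memmove(pd, ps, size);  /* memmove: safe even for overlapping ranges */
}
```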

-

1 hour ago, bna08 said:

I don't work with a DLL in C#. The DLL is in C++/CLI which allows working with managed and unmanaged data at the same time. Therefore, in this case I pass a 64-bit pointer to data buffer allocated with DSNewPtr (as suggested by ShaunR above) by calling one of the DLL functions as UInt64 value to the DLL where I use memcpy to fill the memory pointed to by the LabVIEW pointer.

Basically:

- allocate a buffer in LabVIEW with DSNewPtr

- pass this pointer to the .NET DLL by calling my .NET DLL function SetExternalDataPointer(UInt64 lvPointer)

- use memcpy in the DLL to copy the data to lvPointer address

- read the data in LabVIEW by calling GetValueByPointer.xnode

That's hardly efficient, as you actually copy the memory buffer at least twice (but most likely three times): likely once in the .NET function you call, then with memcpy() in your C++/CLI wrapper, and then again with your GetValueByPointer.xnode. Basically you created a complicated solution to supposedly make something performant, but made it anything but performant.

If your C++/CLI DLL instead provides a function where the caller can pass in the pre-allocated array as an actual array (of bytes, integers, doubles, apples or whatever) and request to have the data copied into it, you are already done: no pointer voodoo on the LabVIEW diagram and at least one memory copy less.

-

1 hour ago, bna08 said:

Thank you, Rolf. However, what do you mean by "pass it as an Array as Array Data Pointer"? Pass where? Into which function? I am calling .NET DLL functions with calls via .NET Invoke Node in LabVIEW. Passing as Array Data Pointer is possible when calling unmanaged DLL functions via Call Library Function which I cannot use in my case, or can I?

So far it's all guessing. You haven't shown us an example of what you want to do, nor the corresponding C# code that would do the same. It depends a lot on how this mysterious array data pointer by reference is actually defined in the .NET method. Is it a full .NET object, or an IntPtr?

-

17 hours ago, bna08 said:

In my application I need to get a few hundred bytes from a .NET C++/CLI DLL as fast as possible. This would be achievable by initializing an array in LabVIEW and then passing its pointer to the DLL so it can copy the data to the LabVIEW array directly. As far as I know this cannot be done. Or is there another way?

Currently, I am achieving this behavior by using IMAQ GetImagePixelPtr.vi which allocates a small IMAQ image (array of pixels) and returns pointer to this image. Afterwards, I pass the pointer to the DLL, write my data to it and read the values back in LabVIEW. Is this too much of a hack? It seems to work OK.

It's definitely a hack. But if it works, it works; it may just be a really nasty surprise for anyone having to maintain that code after you move on. It would rank very high on my list of obscure coding practices.

Shaun's solution is definitely a lot cleaner, without abusing an IMAQ image to achieve your goal.

But!!! Is this pointer passed inside a structure (cluster)? If it is passed directly as a function parameter, there really is no reason to try to outsmart LabVIEW. Simply allocate an array of the correct size and configure the parameter as Type: Array (with the correct data type), Pass: Array Data Pointer, and you are done. If you want to keep this array in memory to avoid having LabVIEW allocate and deallocate it repeatedly, just keep it in a shift register (or feedback node) and loop it through the Call Library Node. The LabVIEW optimizer will then always attempt to reuse that buffer whenever possible (and if you don't branch that wire anywhere out of the VI or to functions that want to modify it, it ALWAYS can).
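On the C side, the "Array Data Pointer" pattern looks roughly like the sketch below. The export name FillSamples and its parameters are hypothetical: the caller owns and pre-allocates the buffer, passes its length, and the function only writes into it, so the same buffer can be handed in again on every call, as with the shift register.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical DLL export: the caller owns and pre-allocates `buffer`
   (LabVIEW: Type Array, Numeric 8-byte double, Pass Array Data Pointer)
   and passes its element count in `length`. Nothing is allocated here. */
int32_t FillSamples(double *buffer, int32_t length, double offset)
{
    if (buffer == NULL || length < 0)
        return -1;                       /* report an error instead of crashing */
    for (int32_t i = 0; i < length; ++i)
        buffer[i] = offset + (double)i;  /* stand-in for the real data source */
    return length;                       /* number of elements written */
}
```

On Windows the function would additionally need to be exported from the DLL (for example with __declspec(dllexport)). The design choice is that ownership never leaves LabVIEW, so there is no pointer to free and no extra copy out of a foreign buffer.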

-

On 11/28/2022 at 5:57 PM, drjdpowell said:

Edit: actually, even a thread switch shouldn't take 40 milliseconds, but give it a try anyway.

The thread context switch itself, to the UI thread and back again, should not and will not take that long; it's more in the range of tenths of microseconds. But the UI thread may be busy doing your front panel drawing, or just about anything else that is UI related or needs to run in the only single-threaded protected context available in LabVIEW, and then the context switch to the UI thread has to arbitrate for it. That means the LabVIEW code simply sits there and waits until the UI thread finally becomes available again and can be acquired by this code clump.

-

17 hours ago, Francois Aujard said:

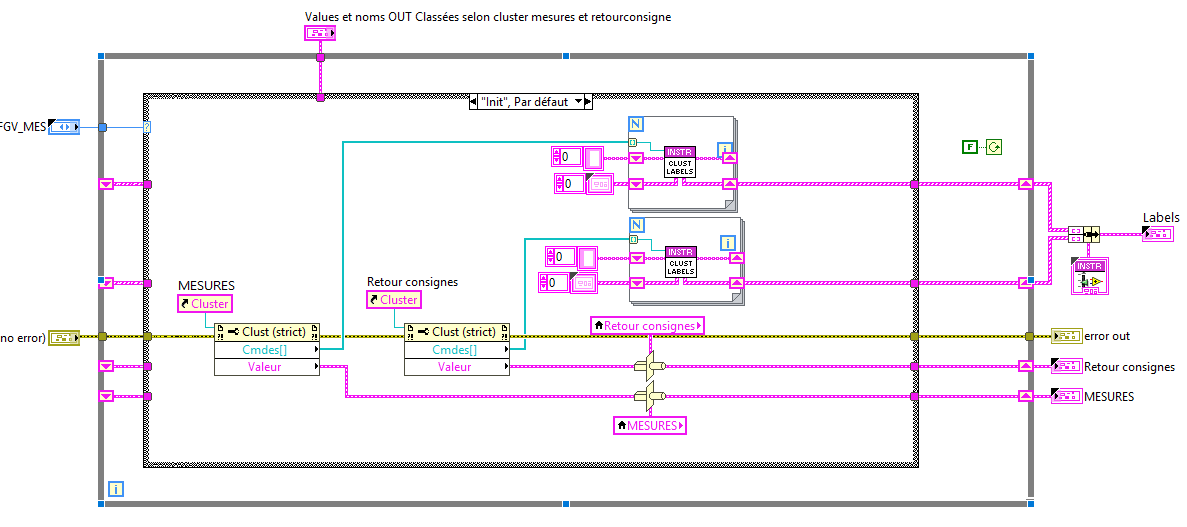

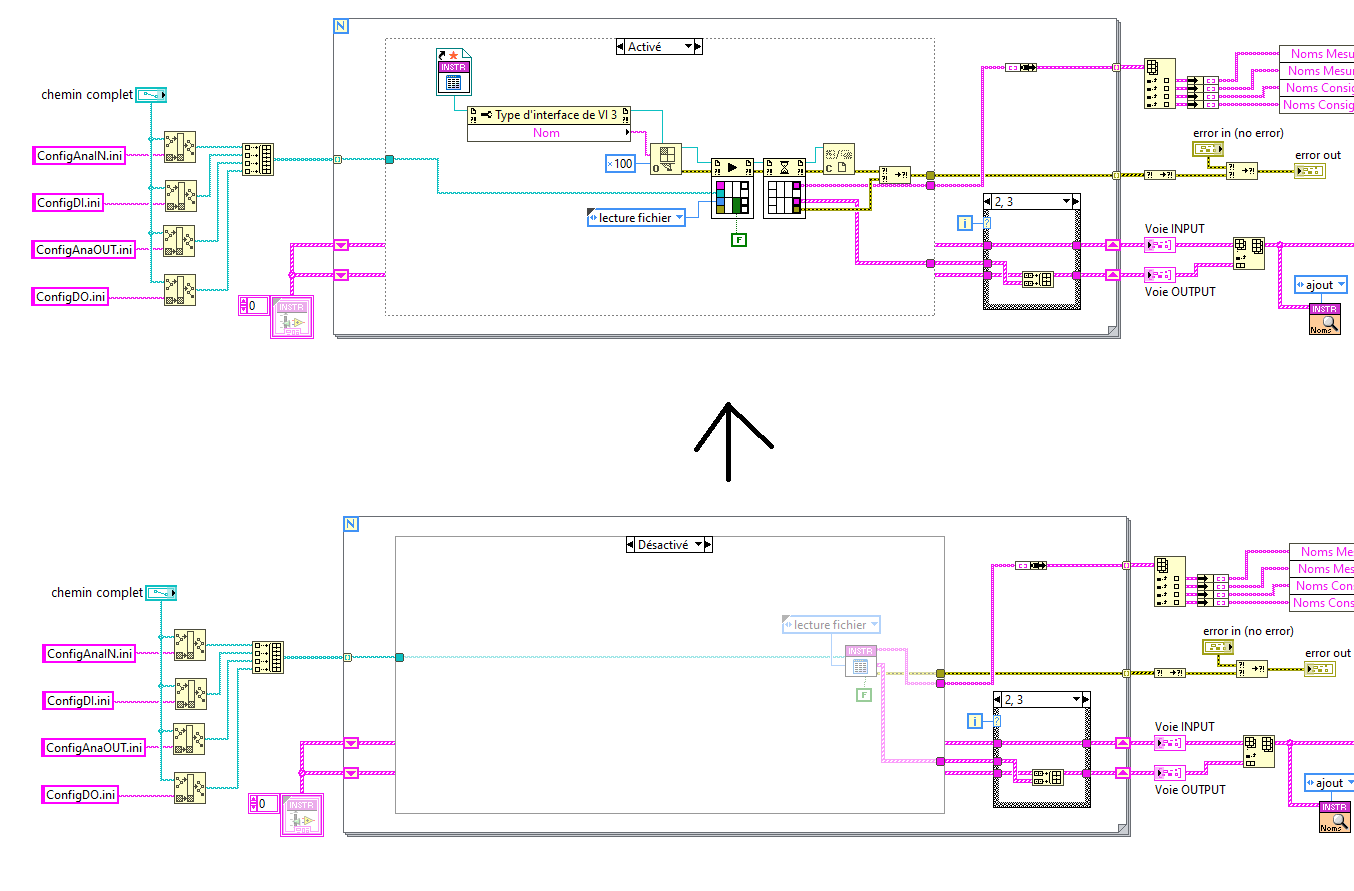

@ensegre I have found my problem 🙂. The initialisation of a FGV use property node. I put it in other VI and this solve my problem :

I use this VI a lot of years, with windows 7 and with labview 2017 and labview 2013. It's strange I have problem now. Never see the problem before, and I think that I have no visible problem before. 🤔

What is "CLFN" in your sentence : "CLFN configured for UI thread, for instance"

UI Element Property Nodes ALWAYS execute in the UI thread. This applies to VI Server nodes operating on front panel and control refnums (and almost certainly on diagram refnums too, but that would be pure scripting, so if you do anything time critical there, you are definitely operating in very strange territory).

CLFN is the Call Library Function Node. These calls can be configured to run either reentrant (in any thread) or in the UI thread. If a call is set to run in the UI thread and it is a lengthy function (for instance waiting for some event in the external code), it will occupy the UI thread and block it for everybody else, including your nice happy property nodes!
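As a rough analogy only (LabVIEW's scheduler is not implemented like this), the POSIX C sketch below uses a single mutex to stand in for the one UI thread. A "lengthy CLFN" holds it for 50 ms, and a "property node" that needs the same context has to wait for the whole duration, even though acquiring the lock itself is cheap. All names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdatomic.h>
#include <time.h>

/* One mutex plays the role of LabVIEW's single UI thread. */
static pthread_mutex_t ui_thread = PTHREAD_MUTEX_INITIALIZER;
static atomic_int lengthy_call_started = 0;

/* A "CLFN configured for the UI thread" doing lengthy external work. */
static void *lengthy_ui_call(void *arg)
{
    (void)arg;
    pthread_mutex_lock(&ui_thread);
    atomic_store(&lengthy_call_started, 1);
    struct timespec delay = {0, 50L * 1000 * 1000};  /* 50 ms of work */
    nanosleep(&delay, NULL);
    pthread_mutex_unlock(&ui_thread);
    return NULL;
}

static double now_ms(void)
{
    struct timespec t;
    clock_gettime(CLOCK_MONOTONIC, &t);
    return t.tv_sec * 1000.0 + t.tv_nsec / 1e6;
}

/* Returns how long a "property node" waited to get the UI thread, in ms. */
double property_node_wait_ms(void)
{
    pthread_t worker;
    pthread_create(&worker, NULL, lengthy_ui_call, NULL);
    while (!atomic_load(&lengthy_call_started))
        ;  /* spin until the lengthy call owns the UI thread */
    double start = now_ms();
    pthread_mutex_lock(&ui_thread);  /* blocks until the lengthy call is done */
    double waited = now_ms() - start;
    pthread_mutex_unlock(&ui_thread);
    pthread_join(worker, NULL);
    return waited;
}
```

The measured wait is dominated by the time the other user holds the shared context, not by the switch itself, which is the point being made about the 40 ms delays.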

Now don't run off and change all CLFNs to run reentrant! If the underlying DLL is not written to be multithreading safe (and quite a few are not), you can end up with all sorts of weird results, from totally wrong computations to outright crashes!

So your VI may have worked for many years by chance. But as we all had to learn from those too-good-to-be-true returns on investments, past results are no guarantee for the future! 🙂

-

3 hours ago, Neil Pate said:

This is not the same as polymorphism as the connector pane is not changing. We could not write any normal LabVIEW code that had this same behaviour.

No, we can't, and neither can NI for VIs. But these nodes are built in, and the C code behind them can do exactly that, and regularly does. The same goes for custom pop-up menus on nodes: you can't do that for VIs.

Pretty much all light yellow nodes (maybe with an exception here or there where a VI pretends to be a node) are built in. There is absolutely no front panel or diagram for these, not even a hidden one. They are implemented directly as LabVIEW internal objects with a huge C++ dispatch table for pop-up menus, loading, execution, undo, drawing, etc. The code behind them is directly C/C++. It used to be all pure C code in LabVIEW prior to about 8.0, with a hand-crafted assembly dispatcher, but it most probably eventually got fully objectified. Managing hundreds of objects with dispatch tables that each contain up to more than 100 methods, by way of an Excel spreadsheet with command line tools to generate the interface template code, is not something anyone wants to do of their own free will. But in the beginnings of LabVIEW it was the only way to get it working. Experiments with C++ at that time made the executable balloon to several times the size it had with the standard C compiler, and made it run considerably slower. The fact that it is all compiled as C++ nowadays is likely still responsible for some of the more than 50 MB the current LabVIEW executable weighs, but who cares about disk space nowadays, as long as the execution speed is not worse than before.
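For readers who have not seen this style of plain-C "object" before, here is a heavily simplified, hypothetical sketch of a per-node-type dispatch table. It is not LabVIEW's internal code; the struct, method names and pop-up text are invented for illustration. Each built-in node type fills in one table of function pointers, and generic editor/runtime code only ever calls through that table.

```c
#include <stdio.h>
#include <string.h>

typedef struct Node Node;

/* Hypothetical per-type dispatch table: one instance per node type. */
typedef struct {
    const char *type_name;
    int (*execute)(Node *self);
    void (*draw)(const Node *self, char *out, size_t out_len);
    const char *(*popup_menu)(const Node *self);
} NodeMethods;

struct Node {
    const NodeMethods *methods;  /* points at the table of this node's type */
    int input_a, input_b;
};

static int add_execute(Node *self) { return self->input_a + self->input_b; }

static void add_draw(const Node *self, char *out, size_t out_len)
{
    snprintf(out, out_len, "[%s]", self->methods->type_name);
}

static const char *add_popup(const Node *self)
{
    (void)self;
    return "Replace|Description";  /* invented menu text */
}

const NodeMethods ADD_NODE_METHODS = {"Add", add_execute, add_draw, add_popup};

/* Generic runtime code: it knows nothing about the "Add" node specifically. */
int run_node(Node *n) { return n->methods->execute(n); }
```

Scale this up to hundreds of node types and well over 100 entries per table, and it becomes clear why generating the boilerplate from a spreadsheet was attractive.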

-

11 minutes ago, drjdpowell said:

I haven't used them yet, but I thought LabVIEW Interfaces are like LabVIEW Classes, just with no private data.

I have to admit that I haven't used them yet either! And you could be right about that. They definitely need a VI for each method, as there is no such thing as a front-panel-only LabVIEW VI (at least not for developers outside NI 🙂). I would however expect them all to be set to "Must Override" by default, if it is even possible to disable that.

-

19 hours ago, drjdpowell said:

Yes, the new Interfaces will takeover much of the work previously done with abstract parent classes.

Just to be clearer here: in Java you actually have three types of classes (interfaces, abstract classes and normal classes).

Interfaces are like LabVIEW interfaces: they define the method interface but have no other code components. A derived class MUST implement an override for every method defined in an interface.

Normal classes are the opposite: all their methods are fully implemented, although some may be empty do-nothing methods if the developer expects them to be overridden.

Abstract classes are a bit of both. They implement some methods but also declare abstract methods that a derived class MUST override.

If you have a LabVIEW class with some of its methods marked with the "Must override" checkbox, you have something close to a Java abstract class, but not quite. In Java, abstract classes can't be instantiated, just as interfaces can't be instantiated, because some (or all) of their methods are simply not implemented. LabVIEW "abstract" classes are fully instantiable, since there is an actual implementation for every method, even if it is usually empty for "Must override" methods.

-

On 11/1/2022 at 6:06 PM, PyLabVIEW73 said:

When I try to run an open and read my SQLite Database I keep getting an error 7 that it can't find the shared library it needs. Strange because the files are in the exact directory where the error code says it is looking for them.

Not sure what I am doing wrong. Any help would be greatly appreciated! I know there was some discussion regarding the library locations on a Linux RT quite a few years back.

That's usually a dependency error. Shared libraries are often not self-contained but reference other shared libraries from other packages, and to make matters worse sometimes require minimum versions or even specific versions of them. A package should normally declare such dependencies, and unless you use special command line options to tell the package manager to suppress dependency handling, it should attempt to install them automatically.

But errors do happen even for package creators, and they might have forgotten to include a dependency. Another possibility is that you used the root account when installing it, making the shared library effectively accessible only to root. On Linux it is not enough to verify that a file is there; you also need to check its access rights. A LabVIEW executable runs under the local lvuser account on the cRIO. If the file's access rights don't include both the read and execute flags for at least the local user group, your LabVIEW application can't load and execute the shared library, no matter that it is there.
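A minimal POSIX C sketch of the access-rights check described above (the function name is hypothetical). access() tests permissions for the real user and group of the calling process, so running it as lvuser answers "could this account read and execute the library?". The extra headers are only there for the permission demonstration used with it.

```c
#include <stdbool.h>
#include <unistd.h>
#include <stdlib.h>    /* mkstemp, used when demonstrating the check */
#include <sys/stat.h>  /* chmod, used when demonstrating the check */

/* A shared library can only be loaded if the account running the program
   may both read and execute it; access() checks exactly that. */
bool library_is_loadable_by_me(const char *path)
{
    return access(path, R_OK | X_OK) == 0;
}
```

A file with mode 0644 fails this check (no execute bit for anyone) while 0755 passes. For the missing-dependency case, running `ldd` on the library on the target lists any shared library it cannot resolve as "not found".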

-

On 11/11/2022 at 2:18 PM, Antoine Chalons said:

Why is this option "not recommended"?

Well, my guess is that it is normally a lot safer to compile everything than to trust that the customer did a mass compile before the build. That automatic compile "should" only take time, and not somehow stumble over things that, for whatever strange reason, don't cause the mass compile to fail. That's at least the theory. That it doesn't work out like that in your case is not a good reason to recommend that everyone do it otherwise.

-

27 minutes ago, codcoder said:

Hi,

We have a PXI-system with a PXIe-8821 controller running LabVIEW RT (PharLap). On this controller we have a deployed LabVIEW developed application. So all standard so far.

From time to time the application ends up in an erroneous state. Since the overall system is relatively complex and used as a development tool for another system, it is tedious to detect and debug each situation that leads to an error. So the current solution is to regularly reboot the controller. Both at a fixed time interval and when the controller is suspected to be in an erroneous state.

To achieve this remotely we send a reboot command by spoofing the HTTP POST command sent when clicking on the "restart button" in NI's web based configuration GUI. I was surprised that this worked, but it does, and it does so pretty well.

But there is an issue: we have an instrument that can get upset if the reference to it isn't closed down properly. And since this reboot on system level harshly close down our application this has increasingly started to become an issue.

So I have two questions:

- Is there a way to detect that a system reboot is imminent and allowing the LabVIEW application to act on it? Closing down references and such.

- Is there another way to remotely reboot a controller? I am aware of the built in system vi's but those are more about letting the controller reboot itself. What I'm looking for is a method to remotely force a reboot.

Any input is most welcome!

I would attack it differently. Send that reboot command to the application itself, let it clean everything up and then have it reboot itself or even the entire machine.
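A hypothetical C sketch of that approach: the remote side sends a "REBOOT" command to the application itself, which closes its instrument session first and only then asks the system to restart. The command string, the state struct and the reboot hook are all invented for illustration; in LabVIEW RT the final step would be one of the built-in system VIs mentioned in the question.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical application state. */
typedef struct {
    bool instrument_open;
    int reboot_requests;  /* counts calls to the platform reboot hook */
} AppState;

static void close_instrument(AppState *s)
{
    /* Real code would close the instrument driver session here. */
    s->instrument_open = false;
}

static void request_system_reboot(AppState *s)
{
    /* Placeholder for the platform's reboot call; deliberately a no-op. */
    s->reboot_requests++;
}

/* Returns false if the command was not understood. */
bool handle_command(AppState *s, const char *cmd)
{
    if (strcmp(cmd, "REBOOT") == 0) {
        close_instrument(s);       /* clean up first ... */
        request_system_reboot(s);  /* ... then restart */
        return true;
    }
    return false;
}
```

The ordering is the whole point: because the application receives the request, it can guarantee the instrument reference is closed before anything shuts it down.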

-

1 hour ago, Francois Aujard said:

Not always happen, sometimes... perhaps once in 20 or 30...

That is still a VERY high frequency, if it is true that you don't continuously write to that file.

-

3 hours ago, LogMAN said:

This functionality is a post LabVIEW 8.0 feature. The original config file VIs originate from way before that. They were redesigned to use queues instead of LVGOOP objects, but things that were supposedly working were not all changed. Also using the "create or replace" open mode on the Open File node has the same effect.

Still, something else is going on here too. The config file VIs do properly close the file, which is equivalent to flushing the file to disk (save for some temporary Windows caching). Unless you save this configuration over and over again, it would be VERY strange if the small window for corruption that such caching opens always coincided with the exact moment the system power fails. Something is happening in this application's code that the OP has not yet reported.
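For comparison, here is a POSIX C sketch of what "flushed to disk" means at the C level (the function name is hypothetical). fflush pushes the C library buffer to the OS, and fsync asks the OS to push its cache to the storage device; only after that does the data survive a power loss. On Windows the corresponding OS call is FlushFileBuffers.

```c
#include <stdio.h>
#include <unistd.h>

/* Write a whole config file, flush the C library buffer (fflush) and the
   OS cache (fsync), then close. Returns 0 on success, -1 on any failure. */
int save_config(const char *path, const char *contents)
{
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;
    int ok = fputs(contents, f) >= 0 && fflush(f) == 0 && fsync(fileno(f)) == 0;
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}
```

Without the fsync step, a close alone leaves exactly the short OS-caching window described above; with a file that is written only occasionally, that window is small.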

-

On 9/21/2022 at 3:59 PM, Francois Aujard said:

In fact with IMAQdx, I want to create a 15s frame buffer, at a rate of about 60fps. A priori the image data I'm trying to store must be a reference. So it can't work. If I convert the reference to a Variant I have the same problem. And if I flatten it into a string, my execution time becomes too long...

Does anyone have an idea or an advice?

IMAQ Vision images are by reference, not by value like other LabVIEW data types, and their identifying attribute is the name used when creating the IMAQ Vision image. Making a variant of the image doesn't change the fact that this image is still a reference to the same memory area that the IMAQ refnum refers to.

So if you want to create a buffer of 15 s at 60 images per second, you would have to create 900 separate images (15 s × 60 fps). That's a lot of memory unless you use old low-resolution cameras.
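The by-value storage that a single image reference can't provide boils down to a ring of pre-allocated frame slots. The C sketch below is hypothetical (not an IMAQdx API): every new frame is copied into the next slot, overwriting the oldest one. The 1920x1080 8-bit mono frame size used in the arithmetic is an assumption chosen only to show the order of magnitude.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical ring of pre-allocated frame slots. */
typedef struct {
    unsigned char *pixels;  /* slot_count * frame_bytes, allocated once */
    size_t frame_bytes;
    size_t slot_count;
    size_t next;            /* slot the next frame goes into */
    size_t stored;          /* number of valid frames, <= slot_count */
} FrameRing;

int ring_init(FrameRing *r, size_t slot_count, size_t frame_bytes)
{
    r->pixels = malloc(slot_count * frame_bytes);
    r->frame_bytes = frame_bytes;
    r->slot_count = slot_count;
    r->next = r->stored = 0;
    return r->pixels ? 0 : -1;
}

/* Copy one frame by value into the next slot, overwriting the oldest. */
void ring_push(FrameRing *r, const unsigned char *frame)
{
    memcpy(r->pixels + r->next * r->frame_bytes, frame, r->frame_bytes);
    r->next = (r->next + 1) % r->slot_count;
    if (r->stored < r->slot_count)
        r->stored++;
}

/* age 0 = newest frame; age must be smaller than r->stored. */
const unsigned char *ring_get(const FrameRing *r, size_t age)
{
    size_t slot = (r->next + r->slot_count - 1 - age) % r->slot_count;
    return r->pixels + slot * r->frame_bytes;
}

void ring_free(FrameRing *r)
{
    free(r->pixels);
    r->pixels = NULL;
}
```

With 900 slots of 1920 × 1080 bytes each, the ring needs 1,866,240,000 bytes, roughly 1.9 GB, before any color or higher bit depth, which illustrates the memory concern.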

-

Your request is VERY open ended. LabVIEW is used by many semiconductor manufacturers for testing their chips, especially in their development departments, often in combination with TestStand. But how those chip pins are accessed varies a lot. Some projects I worked on used rather complex PXI setups with all kinds of programmable power supplies, relay multiplexers, and digital and analog I/O. Others mainly used programmable SMUs (Source Measure Units) for the characterization of semiconductor parts. In some cases it is as "simple" as using a JTAG probe to access the on-board JTAG interface.

If you are talking about a tester to identify chips, I would go with COTS solutions rather than trying to invent my own. It's simply too complex a topic to try to build your own.

-

On 10/3/2022 at 6:51 PM, ensegre said:

So yes, this is actually OT because it is not about Hybrid Xnodes or whatever...

Are you sure the Elemental IO nodes are not actually Hybrid XNodes under the hood? There used to be a toolkit on how to create Elemental IO nodes, which you could get after signing an NDA, swearing on your mother's health never to talk about it to anyone, and doing a secret voodoo dance. It was required for anyone designing their own C Series modules for a cRIO chassis who wanted to provide an API to access that module. With the current state of green NI, it may however be pretty much impossible to get that anymore.

-

You can either have those purple VIs use explicit array-of-objects inputs and outputs on the upper connector pane terminals, but then they are not class methods, simply VIs. Or you can create a new class that has the array of objects as one of its private data elements. Then, instead of just appending the objects to the array in the shift register, you call a method of that class to add the object to its internal object array. Then you can make your purple methods part of that new object collection class.

-

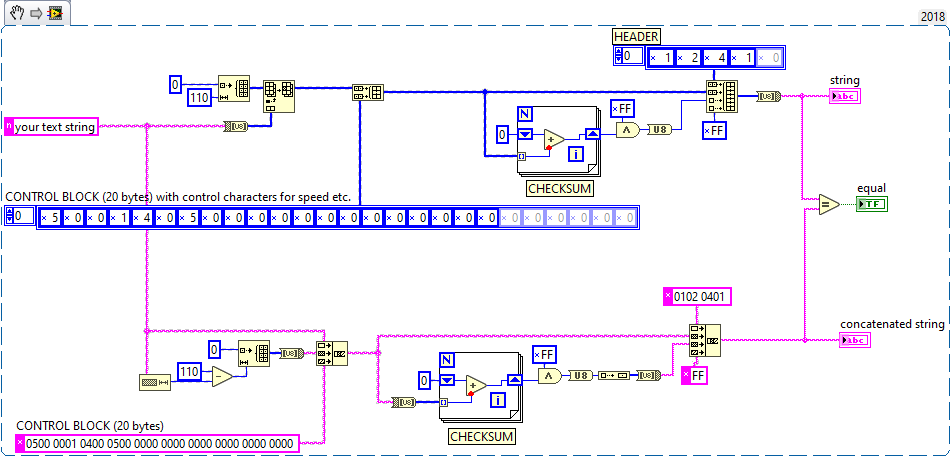

My Portuguese is absolutely non-existent but the documentation seems pretty clear.

You need to send a binary stream to the device with a specific header and length.

Try to play with the display style of the string.

First you need a string of four bytes; if you enable Hex Display you can enter "0102 0401" here.

Then follows the actual string you want to display, padded to 110 characters by appending 00 bytes (in hex). Then follows the epilog with the speed indication and whatever else, which you again want to enter as a Hex Display string. Concatenate everything into one long string and send it off. The most difficult part would be calculating the checksum; everything else is simply putting together the right bytes, either as ASCII characters or as hex code.

This shows two ways to build such a string to send.

"your text string" is the string you want to send.

The CONTROL BLOCK part needs to be constructed further by you to control the speed etc. of the display message.

Notice the glyphs on the left side of the strings and numeric values indicating the display style: n for normal string display, x for hexadecimal display.
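The same byte layout can be sketched in C. The 4-byte header 01 02 04 01 and the 110-byte zero-padded text field come from the description above; the control block (speed etc.) and the checksum are left to the caller, because their exact format and the checksum algorithm come from the device manual and are not known here. The function name is hypothetical.

```c
#include <stddef.h>
#include <string.h>

#define TEXT_FIELD_LEN 110

/* Builds: header (01 02 04 01) + text padded with 0x00 to 110 bytes +
   caller-supplied control block (assumed to already include the checksum).
   Returns the total length, or 0 if the text is too long or `out` too small. */
size_t build_display_packet(const char *text,
                            const unsigned char *control, size_t control_len,
                            unsigned char *out, size_t out_cap)
{
    static const unsigned char header[4] = {0x01, 0x02, 0x04, 0x01};
    size_t text_len = strlen(text);
    size_t total = sizeof header + TEXT_FIELD_LEN + control_len;
    if (text_len > TEXT_FIELD_LEN || total > out_cap)
        return 0;
    memcpy(out, header, sizeof header);
    memset(out + sizeof header, 0x00, TEXT_FIELD_LEN);  /* 00 padding */
    memcpy(out + sizeof header, text, text_len);
    memcpy(out + sizeof header + TEXT_FIELD_LEN, control, control_len);
    return total;
}
```

For "HELLO" and a 3-byte control block this yields 117 bytes: the header, H E L L O, 105 zero bytes, then the control block.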

-

22 hours ago, Francois Aujard said:

It sounds weird to me. Somehow you seem to open a file refnum in that configuration VI without closing it. How that could happen with the NI Config VIs is unclear to me.

When you call a VI through the Call Asynchronous node, it is in fact started as its own top-level VI. This means that LabVIEW's resource cleanup rules apply to it separately. Once a top-level VI goes idle (stops executing), LabVIEW will garbage collect any refnums that were opened during its execution in any of its subVIs. So the refnums you see being open will go away as soon as that asynchronous call stops executing.

But how the standard INI file VIs could cause this is pretty unclear to me. The NI Config Open VI opens the file, parses its contents, stores them in the object wire and then closes the file. The NI Config Close VI only opens it for writing when it determines that the configuration contents were modified AND the boolean to save changes is set to true, and then closes it again. If you set that boolean to false, it will never open the file at all.

This makes me believe one of two things:

1) you do something else with the file in your configuration VI and don't properly close the refnum

2) you or someone else has modified the NI Config Open VI in your LabVIEW installation, so that it no longer properly closes the config file after reading its contents

The only other possibility I can think of is a badly corrupted LabVIEW installation or some weird Windows configuration that keeps files open in the background despite the application having requested to close them. But the fact that making the VI asynchronous "fixes" it contradicts the weird Windows configuration theory: it's simply LabVIEW again requesting to close that file handle as soon as the LabVIEW file refnum is garbage collected. This is the same thing that happens when your code explicitly closes the file refnum.
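The NI Config Open/Close behaviour described in this post can be modelled in a few lines of C (a hypothetical model, not NI's code): the file is opened once at Open (read, parsed, closed) and at Close only when the contents were modified AND saving was requested. In every path each open is paired with a close, which is why a refnum left open points at other code.

```c
#include <stdbool.h>

/* Hypothetical model; open_count counts how often the file gets opened. */
typedef struct {
    bool modified;
    int open_count;
} ConfigData;

void config_open(ConfigData *c)
{
    c->open_count++;  /* open for reading, parse into memory, close */
    c->modified = false;
}

void config_set_key(ConfigData *c) { c->modified = true; }

void config_close(ConfigData *c, bool write_if_changed)
{
    if (c->modified && write_if_changed) {
        c->open_count++;  /* open for writing, write, close */
        c->modified = false;
    }
}
```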

-

My own class hierarchies seldom go beyond 3 levels. I try to keep them flat, and with interfaces, which don't quite count as classes, it is even easier. I usually had a base class that was pretty much nothing more than an interface, but before LabVIEW had interfaces this was the best I could do. I also regularly put some common code in there, so strictly speaking it is more an abstract class than an interface, but I digress.

A lot of my LabVIEW classes are in fact drivers that allow different devices or hardware interfaces to be treated the same. For these you seldom end up with more than 3 or 4 hierarchy levels, including the base class (interface).

And then I use composition a lot, which doesn't really count as inheritance, so I don't count it here. If you added that in, things would get a lot deeper, as there are typically several levels of composition.

In a project I have been helping with on the sidelines, and on which I'm going to be working a lot more in the future, there are sometimes deeper levels. Some of them easily approach 9 levels nowadays. The system uses one PPL per class and loads them all dynamically. It works on Windows and NI Linux. Aside from the pretty well known issues of being very strict about never opening more than one project (each class/PPL needs its own project to work like this) and keeping the paths very strictly consistent (with symbolic links mapping the current architecture tree of dependent packed libraries for Windows/Linux into a common path), this works quite well. It's a pain in the ass if you try to take shortcuts and quickly do something here and there, but if you can stick to the process flow it actually works.

As long as you do this only for Windows, things are fairly simple. PPL loading in LabVIEW for Windows is fairly forgiving in many aspects. On NI Linux, however, things go awry fast and can get very ugly if you are not careful. The entire PPL handling on non-Windows platforms seems to have been debugged only to the point where it started to work, not to the point where it works reliably even when you don't follow the unwritten rules strictly.

For instance, we noticed that if you rebuild a higher-level PPL/class under Windows, LabVIEW will happily keep loading the child classes that depend on it. Don't try that on NI Linux! LabVIEW will simply refuse to load the child class, as it seems to remember that it depended on a different version of the parent than what is there now, and simply bails out during load. So whenever you rebuild a higher-level parent class, you also need to rebuild the entire dependency chain. The MGI Solution Explorer is an indispensable tool to keep this manageable.
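Which classes make up "the entire dependency chain" can be computed mechanically. The C sketch below is a hypothetical illustration (it is not how the MGI Solution Explorer works): given each class's parent index, it lists the changed class followed by all of its descendants in breadth-first order, so every parent is rebuilt before its children. It assumes a tree without cycles and an `order` buffer with room for `count` entries.

```c
#include <stddef.h>

/* parent[i] is the index of class i's parent, or -1 for a root.
   Writes the rebuild order (changed class first, then all descendants,
   parents before children) into `order` and returns its length. */
size_t rebuild_order(const int parent[], size_t count, int changed, int order[])
{
    size_t n = 0;
    order[n++] = changed;
    /* Breadth-first: append the direct children of the class at `head`. */
    for (size_t head = 0; head < n; ++head) {
        for (size_t i = 0; i < count; ++i) {
            if (parent[i] == order[head])
                order[n++] = (int)i;
        }
    }
    return n;
}
```

For a hierarchy Base (0) with children Driver (1) and Other (3), and DeviceX (2) derived from Driver, rebuilding Driver yields Driver, DeviceX, while rebuilding Base yields Base, Driver, Other, DeviceX.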

-

On 10/31/2022 at 2:56 PM, hooovahh said:

I'm convinced most of the users of my software are illiterate. The good users of my software know the common troubleshooting techniques, and will blindly run through turning it off and on again before telling me. Then if "The red screen" comes up again they'll tell me, or take a picture with their phone. Honestly I prefer this over the "I don't know what it said".

I know such users too. But I have also come across another kind of user, or rather not come across them often. We write an application, install it and test it, and the customer seems happy. Years later they call us because they want a new feature or extension, and you go there to discuss it, take a look at the application and start it up, and almost the first thing that happens is that you see an error message when running a quick test. And the resident operator then comes and tells you: "Ahh yes, that dialog. You first have to start this other terminal program or whatever and do this and that in there, and then you can start this application and all works well."

"You mean you are doing this all this time already like this?"

"Yes sure, it works, so why bother any further about it?"

"Ahh right, ok!"

And when you then look at the code, you can quickly see where things must go wrong and sometimes even wonder how it could ever have worked, but these people experimented and came up with a workaround that I would never even have dreamed could work. If they had called, it would have been a one- or two-hour fix, but they never called.

-

14 minutes ago, Dan Bookwalter N8DCJ said:

quick question

Are the fpga and realtime bundled with any NI products , i have a copy of all the serial numbers from our one facility , but , there doesnt seem to be any specific to fpga/realtime , but , they do use them. So , i was curious if it is included in somethign else ?

Dan

It depends on the license. If you purchase a normal single seat license these things are usually separate products that also come with their own serial number. If you have a Software Developer Suite license such as what Alliance Members can purchase, but there are other Developer Suite licenses too, then one single license number can be for pretty much all of NI Software or at least a substantial subset of them. So you really need to know what sort of license the serial number belongs to.

Pointer to LabVIEW array for .NET DLL

in LabVIEW General

Posted · Edited by Rolf Kalbermatter

That loop looks nice, but I prefer to use Initialize Array. 😀

But I'm pretty sure the generated code is in both cases pretty similar in performance. 😁