Everything posted by gb119 (Posts: 317; Days Won: 7)

-

In the specific example I had in mind, sending the same value to the input again would cause the instrument to reset, whilst not changing the input doesn't, so 'No Change' is different from 'the same again'. You can then either expose the "No Change" option to the end user, which might be more confusing, or do what I normally end up doing: have separate enums/rings for the UI and the underlying driver. But then you have to keep them consistent, otherwise it gets very confusing(!). (There are other strategies too, of course, like maintaining state in the caller, in the subvi, or possibly in the hardware.) In the subvi, I'd argue that code that detects sentinel values, e.g. by looking for NaN in floats or default values for rings, would be less transparent than each control having an IsWired? property node that is only true if that control was wired when the subvi was called. Bounds testing on control values is then just about making sure it's a valid value, and doesn't get overloaded with a "value was supplied" meaning as well.
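Python has no IsWired? either, but a common idiom gets close: use a private sentinel object as the default and test it with `is`, so "not supplied" can never collide with a legal value (unlike NaN or a ring's default). A minimal sketch; `FakeInstrument`, `write_mode` and `set_mode` are hypothetical stand-ins for a driver whose mode write resets the hardware:

```python
class FakeInstrument:
    """Hypothetical instrument: every mode write resets it, even if the value is unchanged."""

    def __init__(self) -> None:
        self.mode = "sine"
        self.reset_count = 0

    def write_mode(self, mode: str) -> None:
        self.mode = mode
        self.reset_count += 1  # "the same again" still triggers a reset


# A unique object no caller can pass by accident: it plays the role of IsWired? == False.
_NOT_WIRED = object()


def set_mode(instrument: FakeInstrument, mode=_NOT_WIRED) -> None:
    """Write the mode only if the caller actually supplied one."""
    if mode is _NOT_WIRED:
        return  # "No Change": nothing is sent, so no reset
    instrument.write_mode(mode)  # any supplied value, including the current one, is sent
```

Calling `set_mode(inst)` leaves the instrument alone, whereas `set_mode(inst, "sine")` resets it even though the mode is already `"sine"`. Checking that `mode` is a valid value stays a separate concern.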

-

I'd quite like this feature for cases where the natural type for an input is a ring or enum, but you also want "don't change" as the default option. Yes, you can have "don't change" as one of the enum/ring values, but that then gives an ugly API. I have certain instrument-driver-type subvis where the instrument has a mode (e.g. a waveform shape) and some common parameters (amplitude, offset, duty cycle). One could have separate subvis for each of these parameters, but it's also nice to have just the one VI that changes only the parameters that were wired up and leaves alone those that weren't.
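In Python terms, the "don't change" default can live outside the enum: `None` as the default for every parameter keeps the enum itself clean. A sketch, assuming a function generator with loosely SCPI-like commands; the `Shape` values and command strings are illustrative assumptions, so check the instrument's manual for the real ones:

```python
from enum import Enum
from typing import List, Optional


class Shape(Enum):
    """Waveform shapes; the values are assumed command tokens, not from a real manual."""
    SINE = "SIN"
    SQUARE = "SQU"
    TRIANGLE = "TRI"


def configure_waveform(shape: Optional[Shape] = None,
                       amplitude: Optional[float] = None,
                       offset: Optional[float] = None,
                       duty_cycle: Optional[float] = None) -> List[str]:
    """Build commands only for the parameters the caller supplied ("wired").

    None is the "don't change" default, so it never has to appear in Shape itself.
    """
    commands: List[str] = []
    if shape is not None:
        commands.append(f"FUNC {shape.value}")
    if amplitude is not None:
        commands.append(f"VOLT {amplitude}")
    if offset is not None:  # 0.0 is a legal offset, so test for None, not truthiness
        commands.append(f"VOLT:OFFS {offset}")
    if duty_cycle is not None:
        commands.append(f"FUNC:SQU:DCYC {duty_cycle}")
    return commands
```

This works because `None` is never a legal value for any of these parameters; where it could be, a private sentinel object is the usual alternative.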

-

HDF5 is used quite widely in large-facility research (synchrotrons, neutron sources and suchlike). It's a format that supports a virtual directory structure containing metadata attributes and multi-dimensional array datasets. Although it's possible to browse the file and discover the names and locations of all of the data in it, it's generally easier if you have some idea of where in the virtual file system the data you are interested in is kept. There are a couple of LabVIEW packages out there that provide an interface to HDF5 files and read and write native LabVIEW data types. They work well enough in my experience, although I haven't personally used HDF5 files in anything other than proof-of-concept code with LabVIEW.

-

One of the things I wanted to be able to do was to read from both ends of the buffer, i.e. to have a way to get the most recent n items for n less than or equal to the size of the buffer. So I tweaked the read buffer XNode template so that a negative offset means reading backwards from the current buffer position (i.e. Python style). This, of course, breaks LabVIEW array indexing semantics, but for this particular situation it makes some degree of intuitive sense to me. Attached is my hacked template: XNode Template.vi
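A minimal Python sketch of the same idea, for a plain circular buffer rather than the XNode template (the class and method names are made up for illustration): non-negative offsets count forwards from the oldest item, negative offsets count back from the newest, as with Python sequence indexing.

```python
class RingBuffer:
    """Fixed-capacity circular buffer that overwrites its oldest item when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._data = [None] * capacity
        self._capacity = capacity
        self._next = 0   # slot that the next push will write to
        self._count = 0  # number of valid items, never more than capacity

    def push(self, item) -> None:
        self._data[self._next] = item
        self._next = (self._next + 1) % self._capacity
        self._count = min(self._count + 1, self._capacity)

    def read(self, offset: int, n: int) -> list:
        """Return n items, oldest first.

        offset >= 0 counts forwards from the oldest item (LabVIEW-style indexing);
        offset < 0 counts back from the newest item, so read(-n, n) gives the n most recent.
        """
        start = offset if offset >= 0 else self._count + offset
        if n < 0 or start < 0 or start + n > self._count:
            raise IndexError("requested range is outside the buffered data")
        oldest = (self._next - self._count) % self._capacity
        return [self._data[(oldest + start + i) % self._capacity] for i in range(n)]
```

After pushing 0 to 5 into a buffer of capacity 4, `read(0, 4)` gives `[2, 3, 4, 5]` and `read(-2, 2)` gives the two newest items, `[4, 5]`.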

-

I really liked this, so I couldn't resist a minor hack: I added a search method that lets you specify a regular expression (and also made the method selector a typedef to make it easier to add even more methods). The only downside is that I can't inline the Search Filter 1D Array of strings, because I've added an XNode in there in the form of the PCRE search VI, so it'll have cost a little performance. Find References XNode 1.0.2.1.zip
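In text form, a regular-expression search filter over a 1D array of strings boils down to something like the following Python sketch. `search_filter` is a hypothetical name, and Python's `re` syntax differs from PCRE in some corners. Compiling the pattern once, before the loop, keeps the per-element cost down:

```python
import re
from typing import Iterable, List


def search_filter(names: Iterable[str], pattern: str) -> List[str]:
    """Keep only the strings in which the regular expression finds a match."""
    regex = re.compile(pattern)  # compile once up front rather than per element
    return [name for name in names if regex.search(name) is not None]
```

For example, `search_filter(["Numeric", "Numeric 2", "String"], r"^Numeric( \d+)?$")` keeps the two numeric labels.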

-

Hmmm, that's odd, because I'm running Python 2.7.10 and 2.7.11 with the LabPython VIPM packages just fine when I installed Python from Python.org. Installing the same versions from Anaconda tended to cause LabVIEW to crash, but I had been assuming that was something to do with which version of Visual Studio had been used to build the two sets of binaries. Do the changes you've made in the LabPython codebase potentially allow Python 3.x to be used, or is it going to continue to be just a Python 2.x thing? In an ideal world, I guess one might replace LabPython with something that looked more like an IPython/Jupyter client that could talk to Jupyter kernels, allowing multithreaded LabVIEW to play with non-thread-safe Python modules. But in an ideal world, I'd have the time to look at trying to do that...

-

LabVIEW and Python, especially numpy and scipy

gb119 replied to Nikodem Czechowski's topic in Calling External Code

LabPython only supports Python modules that are thread-safe (this is because LabVIEW is multi-threaded, but Python has places where it isn't, related to the global interpreter lock). Unfortunately numpy and scipy are not thread-safe, so it's easy to trip over this and crash LabVIEW. Newer versions of Anaconda also seem to have problems with LabPython (Anaconda 2.3 onwards, I think), but vanilla Python from www.python.org seems OK.
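Within a single Python process, a common way to keep non-thread-safe code on one OS thread is to funnel every call through one dedicated worker thread. A minimal pure-Python sketch of that pattern; it does not fix LabPython itself, and `fragile_square` is a hypothetical stand-in for a call into a module such as numpy:

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# A pool with exactly one worker: every submitted call runs on that one thread, in order.
_interpreter_thread = ThreadPoolExecutor(max_workers=1)


def fragile_square(x: int):
    """Stand-in for a call into a module that must always run on the same thread."""
    return x * x, threading.get_ident()


def call_on_one_thread(fn, *args):
    """Submit fn to the dedicated thread and block the caller until it has finished."""
    return _interpreter_thread.submit(fn, *args).result()


def run_demo(n_callers: int = 8):
    """Many caller threads share the single worker; returns (result, worker thread id) pairs."""
    results = []
    lock = threading.Lock()

    def caller(x: int) -> None:
        value = call_on_one_thread(fragile_square, x)
        with lock:
            results.append(value)

    threads = [threading.Thread(target=caller, args=(i,)) for i in range(n_callers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Every entry returned by `run_demo()` carries the same thread id, showing that the parallel callers never touched the fragile code from their own threads.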

-

Python 2.7 works with LabPython on LabVIEW up to (at least) 2014, 32-bit only, BUT there is something about the Anaconda builds that causes LabVIEW to crash on recent 2.7 releases. Running plain vanilla Python as downloaded from python.org works fine. One gets the feeling that LabPython in its current form is not going to be viable for very much longer as both languages continue to develop (Python 3.x and 64-bit code are not supported, for example, and the script node interfaces are not well supported with public documentation). Probably a better solution would be to build an interface between LabVIEW and a Jupyter (IPython) kernel process, but I know I don't have time to work on anything this complicated :-( Any Python module that is not thread-safe will also cause problems. Unfortunately that includes numpy, but sometimes one can avoid tripping over the non-thread-safe bits if one is cautious about which bits of numpy one tries to call.

-

So I'm still (slowly) looking at what it would take to connect LabVIEW to an IPython kernel. As well as the 0MQ binding (which I'd seen but never played with), and JSON serialisation support, it looked like being able to sign things with HMAC-SHA256 might be useful. So I've created a little SHA-256 library (pure G to make it OS and bitness agnostic). I might put it in the code repository if it seems generally useful. university_of_leeds_lib_sha_256_library-1.0.0.2.vip
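For anyone curious what the HMAC layer on top of a SHA-256 implementation involves, here is a sketch of the RFC 2104 construction in Python, using `hashlib.sha256` where a pure-G library would supply its own hash, plus how the Jupyter wire protocol uses it: the signature is the HMAC-SHA256 hex digest over the serialized header, parent header, metadata and content, in that order. Using plain `json.dumps` for serialization is an assumption here; the signature must be computed over exactly the bytes that are actually sent.

```python
import hashlib
import hmac
import json

SHA256_BLOCK_SIZE = 64  # bytes; HMAC hashes or zero-pads the key to this length


def hmac_sha256_hex(key: bytes, message: bytes) -> str:
    """HMAC-SHA256 per RFC 2104: H((K ^ opad) || H((K ^ ipad) || message))."""
    if len(key) > SHA256_BLOCK_SIZE:
        key = hashlib.sha256(key).digest()  # long keys are hashed first
    key = key.ljust(SHA256_BLOCK_SIZE, b"\x00")  # then zero-padded to one block
    inner_key = bytes(b ^ 0x36 for b in key)
    outer_key = bytes(b ^ 0x5C for b in key)
    inner_hash = hashlib.sha256(inner_key + message).digest()
    return hashlib.sha256(outer_key + inner_hash).hexdigest()


def sign_jupyter_message(key: bytes, header: dict, parent_header: dict,
                         metadata: dict, content: dict) -> str:
    """Signature over the four serialized frames, concatenated in wire order."""
    frames = [json.dumps(part).encode("utf-8")
              for part in (header, parent_header, metadata, content)]
    return hmac_sha256_hex(key, b"".join(frames))


def verify(key: bytes, message: bytes, signature: str) -> bool:
    """Receiver-side check, using a constant-time comparison."""
    return hmac.compare_digest(hmac_sha256_hex(key, message), signature)
```

The hand-built version can be checked against published test vectors (RFC 4231) or against Python's own `hmac` module before porting the same steps to G.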

-

I have the impression that Jupyter is very much focused on textual languages: I think most of the existing clients expect to send text to their kernels and get back a mixture of text and graphics. LabVIEW doesn't really lend itself to this paradigm. You could, of course, write an interpreter in LabVIEW that took textual input and did stuff with it, but frankly there are probably easier things to do. Where it did occur to me that Jupyter might be interesting for LabVIEW developers would be in something along the lines of LabPython, which, although it works great, does have issues with both 64-bit platforms and non-thread-safe Python modules (notably numpy!). I have a feeling that Python 3.x is not supported either. A LabVIEW-based client for Jupyter kernels might be a way around this by decoupling the scripting language from the LabVIEW executable, allowing mix-and-match of 32-bit/64-bit, newer versions of Python, alternative language bindings etc. It just needs someone who understands the LabVIEW script node interface, the C bindings to 0MQ, and the Jupyter protocol, which rules me out on, erm, 3 counts :-( !

-

It's on my list to overhaul the UpdateState ability and ensure the version numbers are sanitized for a future release, but whilst I'm happy that folk are using the code, the XNode versions started out as a 'proof of concept' to show that it could be done... Unfortunately the update will be delayed by small things like teaching undergraduates :-)

-

Hmmm, not sure what has happened there. I'd have to go back over those versions, but I don't recall that I made a change to the state type of the control which is where I'd expect to have to use the UpdateState ability. Unless that was the point where I jumped from LabVIEW 8.6.1 to LabVIEW 2012, in which case the version jump might be causing the problem.

-

I guess, as the proponent of the "other" method of using templates in XNodes, I'd say it's a trade-off between work done in preparing the template and work done in the XNode ability. But mainly, when I started playing with XNodes we didn't have inline subvis (I think, or they were very new and I didn't trust them inside an XNode). Working out which thing to copy from the template can just be a convention that there is one node object at the top level of the template; tagging the tunnels just made it easier to keep track of what got wired where... I've been toying with the idea of setting up an LVClass that implements all of the XNode abilities as dynamic dispatch methods of the class. An XNode with an instance of a child of this class as its state control, and whose abilities just wrapped calls to the relevant methods, would be a sort of "Universal" XNode whose behaviour was controlled by the class. Setting up a new XNode would then just involve making a new copy of the Universal XNode, setting the default value of the state to be an instance of a new child class, and then filling in the child class methods. The advantage is that you can edit a class with regular LV tools, so it all becomes a bit easier... One could even write an intermediate generic templating XNode class, perhaps one that got information about which controls needed to be adapted to type from the control captions. Implementing a new templated XNode would then just mean creating a new child class, adding the template and going...
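The "Universal XNode" idea maps onto a familiar object-oriented shape: a fixed shell whose abilities only forward to whatever behaviour object sits in its state. A Python sketch of that architecture, where every class name, the ability subset, and the "caption ending in `*` adapts to type" convention are hypothetical illustrations rather than the real XNode ability set:

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class XNodeBehaviour(ABC):
    """Hypothetical base class: one overridable method per XNode ability."""

    @abstractmethod
    def get_terms(self) -> List[str]:
        """Names of the node's terminals."""

    def adapt_to_inputs(self, wired_types: Dict[str, str]) -> Dict[str, str]:
        """Resolve terminal types from what is wired; default is no adaptation."""
        return {}

    @abstractmethod
    def generate_code(self, term_types: Dict[str, str]) -> str:
        """Describe the code the node should expand into."""


class UniversalXNode:
    """Fixed shell: its abilities just forward to the behaviour held in its state."""

    def __init__(self, state: XNodeBehaviour) -> None:
        self.state = state  # a new XNode means a new child class, not new ability code

    def get_terms(self) -> List[str]:
        return self.state.get_terms()

    def adapt_to_inputs(self, wired_types: Dict[str, str]) -> Dict[str, str]:
        return self.state.adapt_to_inputs(wired_types)

    def generate_code(self, term_types: Dict[str, str]) -> str:
        return self.state.generate_code(term_types)


class CaptionTemplatedBehaviour(XNodeBehaviour):
    """Intermediate generic class: terminals whose caption ends in '*' adapt to type."""

    def __init__(self, captions: List[str]) -> None:
        self.captions = captions

    def get_terms(self) -> List[str]:
        return [c.rstrip("*") for c in self.captions]

    def adapt_to_inputs(self, wired_types: Dict[str, str]) -> Dict[str, str]:
        adaptive = [c.rstrip("*") for c in self.captions if c.endswith("*")]
        # All adaptive terminals take the type of the first adaptive input that is wired.
        chosen = next((wired_types[t] for t in adaptive if t in wired_types), None)
        return {t: chosen for t in adaptive} if chosen else {}


class AddNode(CaptionTemplatedBehaviour):
    """Concrete node: only the template captions and the code generation are new."""

    def __init__(self) -> None:
        super().__init__(["x*", "y*", "x+y*"])

    def generate_code(self, term_types: Dict[str, str]) -> str:
        return f"Add({term_types.get('x', 'variant')})"
```

Wiring an `I32` to `y` of `UniversalXNode(AddNode())` adapts all three terminals to `I32`. The shell never changes; only the child class does, which is the part that could be edited with ordinary class tools.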

-

Yes, that would mean implementing LZW compression in native LabVIEW, which seemed like a lot of work... :-)
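For a sense of the core algorithm involved, here is a basic LZW encoder/decoder sketch in Python. It deliberately leaves out what a real file format layers on top (for GIF, that is variable-width codes, clear and end-of-information codes, and bit packing), and the function names are illustrative:

```python
from typing import List


def lzw_compress(data: bytes) -> List[int]:
    """Basic LZW: dictionary starts with all 256 single bytes; codes are unbounded ints."""
    table = {bytes([i]): i for i in range(256)}
    current = b""
    codes: List[int] = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in table:
            current = candidate  # keep extending the longest known string
        else:
            codes.append(table[current])
            table[candidate] = len(table)  # learn the new string
            current = bytes([byte])
    if current:
        codes.append(table[current])
    return codes


def lzw_decompress(codes: List[int]) -> bytes:
    """Rebuild the same dictionary on the fly while decoding."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # Special case: the code refers to the entry that is being built right now.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(out)
```

Repetitive input such as `b"TOBEORNOTTOBEORTOBEORNOT"` comes out as fewer codes than input bytes, and decompression restores it exactly.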

-

Complex Numbers .... coding ... need help

gb119 replied to Dan Bookwalter N8DCJ's topic in LabVIEW General

Or wire the angle to a cos and a sin function, and then feed them both to the real and imaginary inputs of a real/imaginary-to-complex function ($e^{i\theta} = \cos\theta + i\sin\theta$).
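The same construction in Python, checked against `cmath.exp`: the real part comes from the cosine and the imaginary part from the sine. If a magnitude is involved as well, `cmath.rect(r, theta)` does the polar-to-complex step in one call.

```python
import cmath
import math


def unit_phasor(theta: float) -> complex:
    """e^{i*theta} assembled from cos (real part) and sin (imaginary part)."""
    return complex(math.cos(theta), math.sin(theta))


def phasor(r: float, theta: float) -> complex:
    """r * e^{i*theta}; cmath.rect performs the polar-to-complex conversion directly."""
    return cmath.rect(r, theta)
```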

-

OK, you're not going to get much help just posting an abstract problem without showing what you have tried already. You should post a copy of the VI that you're trying to get working. Also, it will help if you give more context to your problem: what do these points represent? Why do you need them in that particular matrix? Folk on this forum are generally happy to help with real test and measurement problems, but we also get asked to help with homework for classes. The way you present your problem makes it seem more like you want us to do your homework for you than solve a real problem.

-

I went the second route of hierarchy (base -> type of instrument -> specific), but now rather wish I'd split it into one hierarchy that did the data transport and command formatting (handling different terminators, SCPI vs non-SCPI, instruments that respond by repeating the command back to you, etc.) and a separate measurement-function hierarchy. Currently my base class is too full of things that are not fully generic (like commands to help prepare SCPI command strings). That said, the core idea of writing instrument drivers as classes does work really well, and makes it significantly faster to implement drivers for new instruments where the manufacturer has made an incremental change that adds extra functionality.
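The split described above can be sketched as two hierarchies joined by composition: the measurement classes hold a transport object instead of inheriting from one. A Python sketch, in which all class names are hypothetical, `FakeTransport` replaces a real bus, and the SCPI-style query strings are illustrative only:

```python
from abc import ABC, abstractmethod
from collections import deque
from typing import Deque, List


class Transport(ABC):
    """Moves command strings to and from the instrument; knows nothing about measurements."""

    @abstractmethod
    def write(self, command: str) -> None: ...

    @abstractmethod
    def read(self) -> str: ...

    def query(self, command: str) -> str:
        self.write(command)
        return self.read()


class FakeTransport(Transport):
    """In-memory transport that owns the terminator handling."""

    def __init__(self, replies: List[str], terminator: str = "\n") -> None:
        self.terminator = terminator
        self.sent: List[str] = []
        self._replies: Deque[str] = deque(r + terminator for r in replies)

    def write(self, command: str) -> None:
        self.sent.append(command + self.terminator)

    def read(self) -> str:
        reply = self._replies.popleft()
        return reply[: len(reply) - len(self.terminator)]  # drop the terminator


class EchoingTransport(Transport):
    """Wraps another transport for instruments that repeat each command before replying."""

    def __init__(self, inner: Transport) -> None:
        self.inner = inner

    def write(self, command: str) -> None:
        self.inner.write(command)

    def read(self) -> str:
        return self.inner.read()

    def query(self, command: str) -> str:
        self.write(command)
        echo = self.read()
        if echo != command:
            raise IOError(f"expected echo {command!r}, got {echo!r}")
        return self.read()


class Multimeter:
    """Measurement hierarchy: knows what to ask, not how the bytes travel."""

    def __init__(self, transport: Transport) -> None:
        self.transport = transport

    def read_voltage(self) -> float:
        return float(self.transport.query("MEAS:VOLT:DC?"))


class MultimeterWithCurrent(Multimeter):
    """An incrementally improved model only adds its new function."""

    def read_current(self) -> float:
        return float(self.transport.query("MEAS:CURR:DC?"))
```

Because terminators and echoing live in the transport objects, the same `MultimeterWithCurrent` works unchanged over a `"\r\n"`-terminated, echoing link.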

-

I'm also in the "definitely don't break vis unless you really have to" camp, with a caveat of "unless the user asks you to". There is a problem with being overwhelmed by warnings, but I wonder whether some UI that let the developer selectively make warnings 'break' VIs might work - so I could choose to let race conditions break things, or choose other warnings to cause breakage. That sort of 'soft' breakage might be helpful.
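Python's `warnings` module already works roughly this way, which gives a concrete picture of the 'soft breakage' idea: every warning is reported, but chosen categories can be promoted to errors. A sketch in which `RaceConditionWarning`, `StyleWarning` and `build` are hypothetical names standing in for LabVIEW's warning types and compile step:

```python
import warnings
from typing import List, Sequence, Tuple, Type


class RaceConditionWarning(UserWarning):
    """Hypothetical category the developer may choose to make 'breaking'."""


class StyleWarning(UserWarning):
    """Hypothetical, less serious category."""


def build(problems: Sequence[Tuple[Type[Warning], str]],
          breaking: Sequence[Type[Warning]]) -> Tuple[bool, List[str]]:
    """Return (succeeded, messages); categories listed in `breaking` stop the build."""
    with warnings.catch_warnings(record=True) as shown:
        warnings.simplefilter("always")  # report every warning...
        for category in breaking:
            # ...but these categories become exceptions (later filters take precedence).
            warnings.simplefilter("error", category)
        try:
            for category, message in problems:
                warnings.warn(message, category)
        except Warning as exc:
            return False, [str(exc)]
        return True, [str(w.message) for w in shown]
```

With `breaking=(RaceConditionWarning,)` a race-condition warning fails the build while style warnings are merely listed; with an empty `breaking` list everything is reported and nothing breaks.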

-

Could also make it check and fix reentrancy, execution settings and access scope. Another thing on my wishlist, if I ever get a chance to implement it, would be a tool that makes the on-disk layout of a class match the project folder layout, though I'm not sure how one would tie this into revision control systems...

-

I'm seeing a slightly weird (and inconvenient) bug with LabPython... I have a Windows 7 64-bit machine with 32-bit LabVIEW 2012 and 32-bit LabVIEW 2013, and both 64-bit and 32-bit Python 2.7 installed (the former I use standalone; the latter is just for linking to some LabVIEW code that makes use of LabPython). My 32-bit python27.dll therefore lives in C:\Windows\SysWOW64\ and I point LabPython to it (for both versions of LabVIEW), and I can see in both labview.ini files that the correct key has been added. On LabVIEW 2012, everything works absolutely fine. On LabVIEW 2013, if I load a VI containing the Python script node, it complains via a debug window message that it can't load lvpython.dll. This is usually followed by a fatal exception, which is not a massive surprise! If I use the LabVIEW VI API to LabPython, then I don't get load errors, and LabVIEW crashes about as much as it normally does. The only other LabVIEW 2013 setups that I've played with are 32-bit Windows 7 machines running 32-bit LabVIEW 2013 only, and they seem fine. My current workaround is to load the VI that creates a session in the VI API mode of LabPython operation, thus forcing the DLL to load, which then seems to let me load and use the script node (which sort of makes sense, as Windows will use the already loaded DLL rather than loading it again). But it's a little messy, and doesn't explain why LabVIEW 2012 works just fine... Any thoughts, anyone, as to what's going on?

-

There used to be a similar problem with XControls in classes (and, I guess, libraries): loading the library would load the facade, which would lock the library, which would stop you removing the XControl from the library. NI eventually made it impossible to add XControls in ways that would deadlock the project like this, but I guess as XNodes don't exist there are no checks on putting them into libraries and classes :-)...

-

OK, this is what I did (but not what I'm going to do...). I installed the package with a blank palette, then created the palette file in LabVIEW. Although LabVIEW doesn't offer .xnode as a type of VI that you can add to a palette, if you try it, it does what you'd hope. I then copied the palette file back to my package source folder and set it up to be a special target. Rebuild the package and it's good to go. The slight complication is that one could do this for free in VIPM 201x for x some number less than 4, but when I tried it recently I triggered the "give JKI the money they so obviously deserve" dialog (unfortunately I find it difficult to justify doing this to the UK taxpayer, as releasing packages to the community doesn't obviously advance the specific research they're paying me to do). There's also a slightly complicated algorithm for selecting the icon and description that I think changes between versions; certainly every time I think I've nailed it, something changes and it breaks again. The better way, which Michael and Jack suggested after I raised this on VIShots Live, is to use a merge VI that I can add to the palette. That's what the next release will do (when I've added petrification to all the XNodes).

-

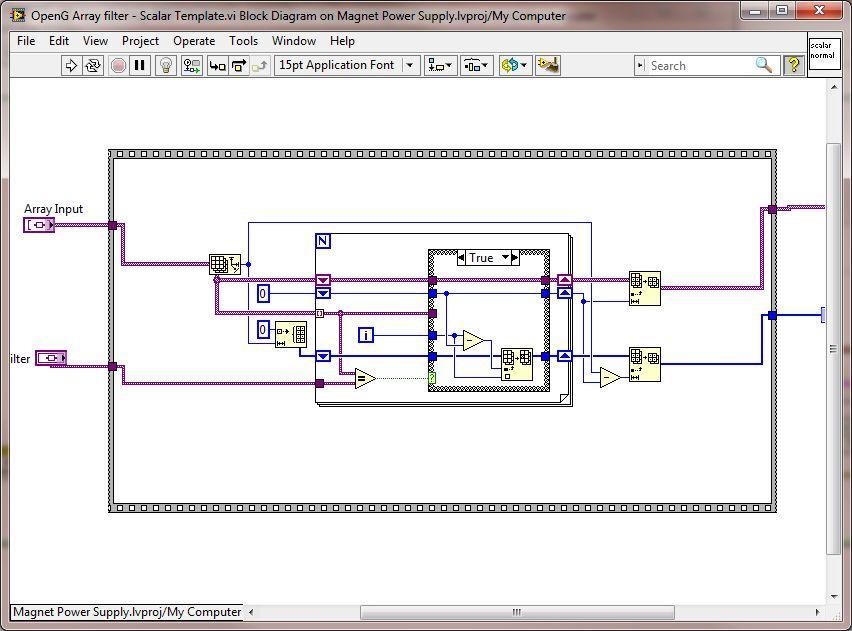

There certainly was a bug in filtering arrays of clusters with a scalar cluster in that version. If you aren't able to upgrade to the newer VIPM, then you have a couple of options:

1) Manually edit the template VI for that case (you'll find them in `<user.lib>\_LAVAcr\OpenG Array XNodes\OpenG Filter Array`): you need to set the comparison mode of the equality test to compare aggregates (see attached image).
2) Download the latest source from GitHub and unpack the zip over the installed files. This will get you the latest XNodes (but you probably want the latest Scripting Tools library as well, which will need to be manually unpacked in the same way). The GitHub repository is at: https://github.com/gb119/LabVIEW-Bits

The second way is preferred, as you get the current version with the Petrify XNode feature (but only for the filter array so far!).

-

Errr, Jack, that's not me ! This is me.... (how sad am I for checking the LinkedIn profile....)