Tim_S

Members | Posts: 875 | Days Won: 17


Everything posted by Tim_S

-

I've not tried what you're proposing; however, it seems you are going to be sending a lot of data straight to disk. RAID, of whatever flavor, won't help with that by itself, though it may let you link enough hard drives to have the space you need. I believe your bottleneck is the hard drives' ability to write data fast enough. Anything with very high bandwidth may also be designed for a computer room and not a shop floor (15k RPM drives do not survive long when exposed to 108 °C temperatures instead of a nice air-conditioned environment). Tim
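A quick way to see whether the drives can keep up is to work out the sustained write rate and the total storage a run needs, then compare the rate against the sustained write speed actually measured on the target drive. A minimal sketch; every number in it (64 channels, 16-bit samples, 1 MS/s, an 8-hour shift, 1 TB drives at about 100 MB/s) is a hypothetical placeholder, and file-format overhead is ignored.

```python
def write_rate_bytes_per_s(channels: int, sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Sustained rate the disk must absorb (raw samples only, no file overhead)."""
    return channels * sample_rate_hz * bytes_per_sample


def storage_bytes(rate_bytes_per_s: int, duration_s: int) -> int:
    """Total data written over a run of the given length."""
    return rate_bytes_per_s * duration_s


def drives_for_capacity(total_bytes: int, drive_capacity_bytes: int) -> int:
    """Drives needed just to hold the data (integer ceiling division)."""
    return (total_bytes + drive_capacity_bytes - 1) // drive_capacity_bytes


def keeps_up(rate_bytes_per_s: int, sustained_write_bytes_per_s: int) -> bool:
    """True if the measured sustained write speed covers the required rate."""
    return sustained_write_bytes_per_s >= rate_bytes_per_s


# Hypothetical setup: 64 channels x 1 MS/s x 2 bytes, 8-hour run,
# 1 TB drives that sustain roughly 100 MB/s of sequential writes.
rate = write_rate_bytes_per_s(64, 1_000_000, 2)
total = storage_bytes(rate, 8 * 3600)
print(rate, total, drives_for_capacity(total, 10**12), keeps_up(rate, 100_000_000))
```

With these placeholder figures the run needs several drives' worth of space, but a single drive's write speed is the part that falls short, which is the bottleneck described above.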

-

Best Practices in LabVIEW

Tim_S replied to John Lokanis's topic in Application Design & Architecture

Sadly, I quite understand this...

-

Found this a while back, though I think I like the .NET implementation better. Tim

EDIT: It helps to attach the file before posting...

wguid.zip

-

Do you have Vision Builder? You would have to do a fair amount of poking about, but it's a very powerful tool for determining what you need to do (plus you can export LabVIEW code). People may be able to offer some suggestions if you can post an image of what you're trying to work with. That being said, image processing can take a lot of time-devouring playing about and tweaking, both in the software and in the physical setup of your camera and light source. Tim

-

Update manager

Tim_S replied to Jubilee's topic in Application Builder, Installers and code distribution

I assume you're talking about updating the LabVIEW development or run-time environment; if so, the answer is no. If you are talking about an application written in LabVIEW, then you may want to look at VIPM. Tim

-

Medium to large applications are going to have multiple pieces, each of which can be a small application (e.g., UI, security, PLC communication, DAQ). Each one, depending on the requirements, can use a different model. For example, I really like the JKI state machine for UI, but wouldn't think of using it for DAQ or communication where a producer/consumer model could be better. Tim
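In LabVIEW, producer/consumer is usually two parallel While Loops connected by a queue. As a rough, language-neutral sketch of the same structure, here it is in Python with `threading` and `queue.Queue`. The doubling in the consumer is only a placeholder for real processing or logging, and the `None` sentinel is one assumed way of shutting the consumer down.

```python
import queue
import threading

SENTINEL = None  # assumed shutdown marker sent by the producer


def producer(q: queue.Queue, samples: list) -> None:
    """Acquisition side: push data as it arrives, never waiting on processing."""
    for s in samples:
        q.put(s)
    q.put(SENTINEL)


def consumer(q: queue.Queue, results: list) -> None:
    """Processing side: drain the queue at its own pace until the sentinel."""
    while True:
        item = q.get()
        if item is SENTINEL:
            break
        results.append(item * 2)  # placeholder for real processing


def run(samples: list) -> list:
    q: queue.Queue = queue.Queue()
    results: list = []
    threads = [
        threading.Thread(target=producer, args=(q, samples)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


print(run([1, 2, 3]))  # [2, 4, 6]
```

The point of the split is that a slow consumer only lets the queue grow; it never stalls acquisition, which is why the pattern suits DAQ and communication better than a UI-style state machine.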

-

It would be appreciated if you mentioned that this is a homework assignment. The forums get a number of people who expect help with homework without putting in any effort, so it is good to see you post some code showing you've tried something. Unfortunately, I'm not understanding what you are getting hung up on. Your post reads as though you're unclear on what you're doing, so I would recommend sitting down with pen and paper and laying out how you want to solve the assignment you've been given. (I like to use flowcharts.) I expect this will clear up some of your questions. Tim

-

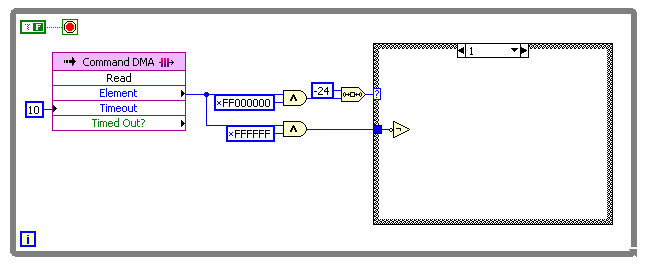

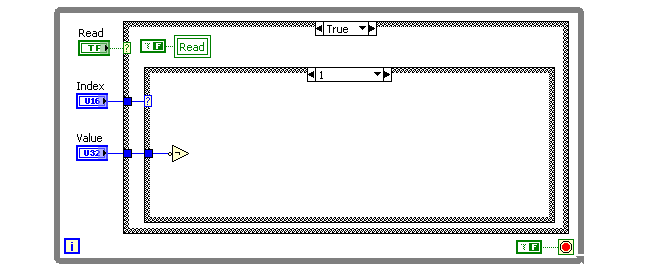

I really appreciate the responses. I should mention that I'm using LabVIEW 8.6 for my current effort, as it appears there have been significant changes to FPGA between 8.6 and 2010.

Those are two interesting approaches. I think the DMA would be less effort on the PC side (send and forget, versus needing to check the boolean?) and would allow setting multiple values at once, but it would require sending two values (index and value) or reserving bytes in each element for the index and the value. I tried compiling each (a DMA FIFO 63 elements long of U32 versus the front panel) and didn't see much difference in resource usage.

Okay, globals are still to be avoided. It appears memory is what I should have used in a one-write-location, multiple-read-location situation. Globals, unfortunately, are nice in that it's clear what data I'm trying to access. The only ways I can see to make it clear what is being accessed in memory are 1) to create single-element memory blocks for every piece of internal data to be stored, 2) to create an enumerated typedef to provide the indices of the data in the block, or 3) to create a subVI to read each data element. (I'm liking 1 or 2...)

How is a Target Only FIFO different from a DMA FIFO? I've not been able to find anything on NI's website or in the help files. Tim
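Option 2 and the "reserve bytes for index and value" idea can be combined. A minimal sketch, assuming a 16-bit index in the upper half of each U32 DMA element and a 16-bit value in the lower half; the `Param` names are hypothetical stand-ins for an enumerated typedef, and the Python only models what the FPGA side would do with each element it pulls from the FIFO.

```python
from enum import IntEnum


class Param(IntEnum):
    """Hypothetical parameter names, playing the role of an enumerated typedef."""
    SETPOINT = 0
    GAIN = 1
    TIMEOUT_MS = 2


def pack(index: Param, value: int) -> int:
    """Host side: 16-bit index in the upper half, 16-bit value in the lower half."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("value must fit in 16 bits")
    return (int(index) << 16) | value


def unpack(word: int) -> tuple:
    """FPGA side: split one U32 element back into (index, value)."""
    return Param((word >> 16) & 0xFFFF), word & 0xFFFF


def apply(memory: list, word: int) -> None:
    """Write the unpacked value into a memory block addressed by the enum."""
    index, value = unpack(word)
    memory[index] = value


memory = [0] * len(Param)
for w in (pack(Param.GAIN, 250), pack(Param.TIMEOUT_MS, 1000)):
    apply(memory, w)
print(memory)  # [0, 250, 1000]
```

Because the enum names the slots, readers of the memory block still see which value they are getting, which is the clarity that globals were providing. The 16/16 split is an arbitrary choice; a different split trades index range for value range.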

-

I'm still working out how to structure an FPGA program versus one on a PC, so I have a more theoretical question.

I have an FPGA program I'm working on that involves two things: 1) a large number of hardware I/O and parameters sent to an R-Series card (specifically a PCI-7813R), and 2) internal calculations that are used in multiple other internal subroutines. I pondered and wound up using global variables to store this glob of internal and external values. I've kept pondering, as I'm accustomed to considering globals to be akin to "demon spawn and should be avoided as the plague they are." I've poked about in example and tutorial code, but everything I've found is too simplistic, in that the entire code is a couple of VIs with no connections between them.

How have you structured code to deal with a large number of values that have to go various places? Are globals so evil in an FPGA? How have you dealt with sending subsets of a large number of values from subVI A to subVIs B, C and F without creating obfuscated or unmanageable code?

Tim

I didn't fail the test, I just found 100 ways to do it wrong. - Benjamin Franklin

-

Calculate average and Max from csv file

Tim_S replied to Electronics Engg's topic in LabVIEW General

You seem to have broken down what you need well. Have you looked through the palettes at what primitives and VIs are present? Looking through the File and Array palettes should make what you need to do obvious. I expect you're going to get limited help until you've shown you've tried to do something, especially as this sounds like a homework assignment. Tim

-

I prefer the 90-90 rule of project scheduling... The first 90% of the project will take 90% of the time. The last 10% of the project will take 90% of the time.

-

Nice! I'm going to have to remember that one.

-

Grab a good Russian novel with that sleeping bag. I typically start that sort of thing before I go home for the night. Tim

-

I'm not familiar with CANopen, but I've implemented CAN with the new card and driver. The major improvements are in transmitting periodic signals and commonality with the DAQmx library. The new card and driver can simulate an entire vehicle, where the old driver would run out of "bandwidth" somewhere (I'm told it was not the card, but the driver). The only way I've seen to do what you're describing is to read the message into a VI and then write the response. Tim
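The read-then-respond approach can be sketched without tying it to a particular driver. This assumes a hypothetical `Frame` type and a made-up request/response ID table; in practice the receive and transmit steps would be the CAN driver's read and write VIs, which are not modelled here.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class Frame:
    """Minimal CAN frame: identifier and payload (8-byte classic CAN limit not enforced)."""
    arbitration_id: int
    data: bytes


# Hypothetical request ID -> response ID table; real IDs come from the device spec.
RESPONSE_IDS: Dict[int, int] = {0x100: 0x101, 0x200: 0x201}


def respond(frame: Frame) -> Optional[Frame]:
    """Build the reply for one received frame, or None if it is not a request we answer."""
    reply_id = RESPONSE_IDS.get(frame.arbitration_id)
    if reply_id is None:
        return None
    return Frame(reply_id, frame.data)  # echoing the payload is a placeholder


def serve(received: Iterable[Frame]) -> List[Frame]:
    """Read each frame, then collect the response that would be written back."""
    replies = []
    for frame in received:
        reply = respond(frame)
        if reply is not None:
            replies.append(reply)
    return replies


print(serve([Frame(0x100, b"\x01"), Frame(0x300, b""), Frame(0x200, b"\x02\x03")]))
```

Frames with IDs not in the table are simply ignored, so the loop only answers the requests it knows about.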

-

I've saved a project, closed LabVIEW, restarted LabVIEW, opened the project and had this. Doesn't seem to be very real to me. Tim

-

Changing the x-axis tick labels for a bar graph

Tim_S replied to MartinMcD's topic in User Interface

My first thought is to use a picture control, but could you use a MathScript node to do what you're looking for? Tim

-

I've built 2010 executables that I've run on my development machine, but I've not deployed them to customers yet. So far the small apps I've created have run well for hours, though admittedly the ones I have so far are service apps, so they sit there doing "nothing" most of the time. Tim

-

NI Tech Support tested this code with their own PC and the same series card I have, without any problems transferring the data across the FIFO. The difference seems to be that they used a multi-core PC and I have a single-core PC. DMA transfer appears to have an exceptionally low priority, so almost any other operation can potentially disrupt the transfer. Their recommendation was to increase the size of the PC-side buffer. Tim

-

I'm not sure what you're listing, but when doing something similar sounding I allowed a NULL option: an empty-string entry, so the list always had at least one entry (one if the directory was empty, two if the directory had one item). Tim
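The NULL-option idea is just "prepend an empty string before the real entries". A minimal sketch in Python, assuming a sorted directory listing is what's being shown; the function names are hypothetical.

```python
import os
import tempfile


def with_null_option(names: list) -> list:
    """Prepend an empty-string 'NULL' choice so the list is never empty."""
    return [""] + sorted(names)


def list_directory(path: str) -> list:
    """Directory entries plus the NULL choice."""
    return with_null_option(os.listdir(path))


with tempfile.TemporaryDirectory() as d:
    print(list_directory(d))  # ['']
    open(os.path.join(d, "a.txt"), "w").close()
    print(list_directory(d))  # ['', 'a.txt']
```

Downstream code can then treat the empty string as "nothing selected" instead of special-casing an empty list.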

-

Assuming you're still having problems... You have a multiport card; have you tried looping the port back to another port on the card? Are you sure you have a good cable? Is it plugged into the port you think it is? Is the connector pushed on all the way? (That last one bit me again a few minutes ago.) Tim

-

The FIFO read appears to poll the memory heavily, causing the high CPU usage. I did try increasing the number of samples read at a time; the CPU usage stayed at 100%, which is why I think the FIFO read is polling and thus pegging the CPU. I have boosted the FIFO depth on the host side to 5,000,000 (i.e., an insane amount) and put in a 100 ms wait. I get ~25,000 samples on each read until I perform my 'minimize-Windows-Task-Manager' check, at which point the samples per read go to ~32,000 and the Timeout check in the FPGA flags. Bumping the FPGA-side FIFO depth from 16k to 32k exceeds the resources on the FPGA card. Tim
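The figures in this thread line up with simple rate arithmetic. A sketch, assuming the FPGA writes one element per sample: at 250 kHz a 100 ms host wait should yield about 25,000 elements per read (matching the ~25,000 reported above), while the 16k (16,383-element) FPGA-side FIFO holds only about 65 ms of data. If that reading is right, a DMA stall longer than ~65 ms would overflow the FPGA side no matter how deep the host buffer is.

```python
def elements_per_read(rate_hz: int, wait_ms: int) -> float:
    """Elements that accumulate between reads when the loop waits wait_ms."""
    return rate_hz * wait_ms / 1000


def fifo_headroom_ms(depth: int, rate_hz: int) -> float:
    """How long a FIFO of `depth` elements lasts if nothing drains it."""
    return depth * 1000 / rate_hz


FPGA_DEPTH = 16_383     # FPGA-side DMA FIFO depth from the thread
HOST_DEPTH = 5_000_000  # host-side buffer depth from the thread

for rate in (250_000, 54_000):
    print(f"{rate} Hz: {elements_per_read(rate, 100):.0f} per 100 ms read, "
          f"FPGA FIFO lasts {fifo_headroom_ms(FPGA_DEPTH, rate):.1f} ms, "
          f"host buffer lasts {fifo_headroom_ms(HOST_DEPTH, rate) / 1000:.1f} s")
```

At 54 kHz the FPGA FIFO covers roughly 300 ms instead of 65 ms, which is consistent with the timeouts going away at the lower rate, though the arithmetic alone doesn't prove DMA stalls are the cause.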

-

I'd suggest not using automatic detection; I've not had good luck with it. You should certainly follow up on Asbo's point that it's not just the duplex settings you need to check. Tim

-

Have you looked into the port settings in MAX? It sounds like you have a half-duplex communication; you will need to set the port up in MAX the same way. Tim

-

ShaunR - I appreciate the replies. At this point I have NI Tech Support performing a lot of the same head-scratching that I've been doing. I like the use of the shift register to read additional elements. The CPU pegged at 100% usage, which isn't surprising since there is no wait in the loop. I opened and closed various windows and was still able to create the timeout condition in the FPGA FIFO. The timeout didn't consistently happen when I minimized or restored the Windows Task Manager as before, but it does seem to be repeatable if I switch to the block diagram of the PC Main VI and then minimize the block diagram window. I charted the elements remaining instead of the data; the backlog had jumped to ~27,800 elements. I dropped the "sample rate" of the FPGA side to 54 kHz from 250 kHz. I was able to run an antivirus scan as well as minimize and restore various windows without the timeout occurring. That is good, though the CPU usage is bad, as there are other loops that have to run in my final system. Tim
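The shift-register idea can be modelled roughly like this: each iteration asks for at least a minimum chunk, or for the backlog reported by the previous read, whichever is larger, so a burst is cleared on the next pass instead of piling up. `SimulatedFifo` is a hypothetical stand-in, not the NI FPGA Interface API; unlike a real FPGA FIFO read, which waits (and can time out) when fewer elements are available than requested, it just returns what it has.

```python
from collections import deque
from typing import Iterable, List, Tuple


class SimulatedFifo:
    """Hypothetical host-side FIFO used only to illustrate the read pattern."""

    def __init__(self) -> None:
        self._buf: deque = deque()

    def write(self, items: Iterable[int]) -> None:
        self._buf.extend(items)

    def read(self, n: int) -> Tuple[List[int], int]:
        """Return up to n elements plus the number still waiting (the backlog)."""
        n = min(n, len(self._buf))
        data = [self._buf.popleft() for _ in range(n)]
        return data, len(self._buf)


def acquire(batch_sizes: List[int], min_read: int) -> List[int]:
    """Simulate a read loop whose request size comes from the previous backlog."""
    fifo = SimulatedFifo()
    remaining = 0  # plays the role of the shift register
    sizes = []
    for count in batch_sizes:
        fifo.write(range(count))  # new data arrives between iterations
        data, remaining = fifo.read(max(min_read, remaining))
        sizes.append(len(data))
    return sizes


print(acquire([100, 100, 300, 100], min_read=100))  # [100, 100, 100, 200]
```

The third iteration leaves a 200-element backlog, and the fourth requests exactly that, so the loop catches up one pass after the burst.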

-

The depth is 16,383 on the FPGA side. I wasn't setting it on the PC side either, but did find it after posting and tried up to 500,000 with no improvement. I was able to avoid the timeout by changing the value of the wait to 0, but that hard-pegged the CPU. I can create the timeout with a wait of 1 msec. Tim