-

Posts

3,183 -

Joined

-

Last visited

-

Days Won

205

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by Aristos Queue

-

I've heard it said that Von Neumann's architecture is the single worst thing ever to happen to computer technology. Von Neumann gave us a system that Moore's Law applied to and so economics pushed every hardware maker into the same basic setup but there were other hardware architectures that would have given us acceleration even faster than Moore's Law. At the very least we would have benefited from more hardware research that broke that mold instead of just making that mold go faster. All research in the alternate structures dried up until we exhausted the Von Neumann setup. Now it is hard to break because of the amount of software that wouldn't upgrade to these alternate architectures. Don't know how true it is, but I like blaming the hardware for yet another software problem. ;-)

-

Actually, there are many ideas of parallel code development that were non-viable with older hardware tech, and so the ideas were declared dead. I'm finding lots of nuggets worth developing in the older CS research. Actors are very old, but there was no compiler that could generate good code for such a setup.

-

A) No, there's no mechanism for forcing the use of property accessors within a class. As Mikael points out, that's a sign of a class that has grown beyond its mandate if you're having problems with that. It's usually pretty trivial to make either a conscious decision to use the accessors internally or not. Commonly, multiple methods all need direct access to the fields, using IPE structures to modify values, so such a restriction would have limited use. B) The property node mechanism is nothing more than syntactic sugar around the subVIs. Since I eliminate the error terminals from my property accessors these days, the property node syntax isn't even an option for me -- the dataflow advantages (not forcing fake serialization of operations that don't need serialization) are obvious... the memory benefits of not having error clusters are *massive* (many many cases of data copies can be eliminated when the data accessors are unconditional). I've been trying to broadcast that far and wide ... it isn't that property nodes are slow or getting slow. It's that the LV compiler is getting lots smarter about optimizing unconditional code, which means that if you can eliminate the error cluster check around the property access LV can often eliminate data copies. This gets even more optimized in LV 2013. That means creating data accessor VIs that do not have error clusters, which rules out the property node syntax.

-

LabVIEW 2013 Favorite features and improvements

Aristos Queue replied to John Lokanis's topic in LabVIEW General

My own rankings, based on both my own perceptions as I work in these versions, on the CARs I was fixing for each version, and the amount of complaints I heard from customers on the forums. Note that these do not really reflect the stability of the modules, just of LabVIEW itself. I realize that for many of you, RT and FPGA are LabVIEW, but for me, they're add-on libraries that are separate products to be evaluated separately. I don't have enough data to evaluate the FPGA module. For RT, I've included comments where I feel it differs from the rating for LabVIEW Core. In general, RT cannot be better than Core simply because the crashes are cumulative. If Core is unstable, RT can't help but be unstable. 6.1 - Average (RT Below Average) 7.0 - Far Above Average (RT Above Average) 7.1 - Far Above Average <<< almost identical to 7.0 except for work in the modules 8.0 - Far Below Average 8.2 - Below Average (RT Far Below Average) <<< RT impacted by lingering 8.0 project issues 8.5 - Below Average but better than 8.2 8.6 - Average 2009 - Average 2010 - Below Average but better than 8.2 2011 - Above Average <<< Many people called this the new 7.0 and it had extremely fast adoption rate 2012 - Above Average 2013 - Above Average (keep in mind that I've been actively developing in 2013 for months longer, in alpha and beta, than most of you, so I feel I have enough time under my belt to evaluate this one) Some folks are likely to chime in that the SP1 (or previously the x.x.1) releases are more stable than any of these and so they never upgrade the software when it first comes out. It's an ok position, but, honestly, the particular set of bugs may shift, but none of the recent versions since 2010 has had any retrograde in overall quality. There's enough code quality checking in the modern era that I don't think users should be generally concerned about upgrading when the new version comes out other than the churn it creates in their own business processes. -

LabVIEW 2013 Favorite features and improvements

Aristos Queue replied to John Lokanis's topic in LabVIEW General

I know mileage may vary, but I question your judgement as a result of this evaluation. 7.0 was the "extra year with nothing but bug fixes". It was rock solid -- 7.1, which you credit as stable, was a release where essentially nothing changed in the core, only in the RT/FPGA modules. Any stability of 7.1 was due entirely to 7.0. And if you're going to classify 8.2 as stable, you have to classify 8.5 as stable if for no other reason than the vast majority of the code changes between those two were stabilization fixes (still cleaning up the disaster that was 8.0). I don't care if you change 8.2 to unstable or if you change 8.5 to stable, but there's a deep and obvious trend in the bug reports from that era. -

There are few things more professionally exciting than having the fastest code developer and prototyper (text, not G) on your team walk up and say, "I'm bored and out of projects. Do you have anything for me to work on?" Oh, boy, do I!!!

-

The only way I know of is to have every window you open monitor for when it is the active window and signal the floating window to hide. The operating system will take care of hiding that window when you click on a non-LV window.

-

Define "sensible". Probably not. You have to close your project, open a project that just has the class in one context, and edit the icon there, then reload your project. I've explained why this lock exists in other posts on forums; I'm not gong to go through it again here. I thought it would be changed by now, but it hasn't been a priority. If you want NI to fix this, please contact your field sales engineer and convince them to feedback just how much this is blocking your development progress.

-

I would say "most". They're all the same use cases that are entirely missed by the XML and binary string prims.

-

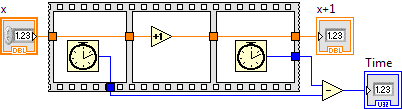

Why would you expect that? Are you are doing your testing on a real time operating system? If not, that's a huge amount of jitter. This code will NOT time how long it takes to do a Subtract (even if we assume that the Tick Count primitive had infinite precision, which it doesn't): It will only tell you how long it takes to do the subtract IF that the whole Flat Sequence executes without being swapped out by the processor. It could be that the first Tick Count executes, then the thread swaps out, then the Subtract executes, then the thread swaps out, then the second Tick Count executes. There could be hours of time between those swaps (ok, probably not in practice, but in theory, yes) unless you're on a deterministic OS and that code is running in the highest priority thread. Even on a real-time OS, then you'll run into additional problems because parallel code can't run in the single highest priority thread, by definition. So you would still have skew across your CPUs as they get serviced in various ways. But it is easier to close down all the other services of the system (like removing any scans of network ports, for example). Timing benchmarks for low level components are not something you can do on your own without lots of training and, at the least, a real-time system. I don't have that training... I leave it to a team of experts here at NI when I need benchmark questions answered.

-

supersecretprivatespecialstuff Basic Object Flags

Aristos Queue replied to Sparkette's topic in VI Scripting

There are various levels of confidential. -

There are many use cases that the new JSON primitives miss. There are a few that they hit, and those few are home runs. Essentially, if the same code base that does the serialization is the same code base that does the deserialization -- i.e., they are chunks of code that rev in lockstep and where there's an agreed schema between the parties -- the prims are great. Step outside that box and you may find that making multiple "attempt to parse this" calls into the JSON prims is more expensive than getting some VIs somewhere and doing the parsing yourself. That was my experience, anyway. For those who are familiar with my ongoing character lineator project (which released a new version on Monday of NIWeek), the new prims are useless for me. I still have to do my own parsing of the JSON in order to handle all the data mutation cases that serialization requires.

-

If you have multiple processors, as most machines do these days, they really are parallel. Bjarne: I've heard -- but have not confirmed -- that some of the browsers have stopped moving the meta information for some reason -- security, I think, with people doing things like, oh, embedding runnable code in the meta data... like LV is doing. ;-) Try saving the PNG to disk and then add it to your diagram.

-

How to display this in UML

Aristos Queue replied to GregFreeman's topic in Object-Oriented Programming

I believe the convention is that you put it hanging out in space on your UML diagram and connect an "instantiates" line from the *method* that creates the instance of the class to your class. A small circle next to the method name with a dotted line from the method to the class. -

The long path problem in Windows sometimes gives some weird error messages back to us, so that is potentially an issue. But personally, I'd be looking more for a cached VI compile for which the source file has moved *after* the cache was loaded into memory. Something like "VI A calls VI B as a subroutine. You load A into memory, which loads B, but since B uses 'separate source...' option, LV loads B from the cache. Then you moved B on disk. Then something happened to make B want to recompile. It went to load its actual source and couldn't find it." That would be my guess. No idea how that's coming about, but the error message seems related to something like that.

-

supersecretprivatespecialstuff Basic Object Flags

Aristos Queue replied to Sparkette's topic in VI Scripting

Each class in the hierarchy is free to reinterpret the flag sets differently and each class may (and frequently does) shift the meanings around between LV versions as we add new features. The flag set is really convenient, so an older, not-used-so-much feature may get bumped out of the flag set and moved to other forms of tracking if a new feature comes along that wants to use the setting (especially since much of the the flag set is saved with the VI and a feature that is a temporary setting in memory may have used the flags since they were easy and available on that particular class of the hierarchy). Tracking what any given flag does is tricky. Even the three flags that haven't changed in any class for the last five versions of LV have very different meanings depending upon the class using the flag. For example, flag 1 on a DDO specifies control vs. indicator, but on a cosmetic it turns off mouse tracking. On structure nodes it indicates "worth analyzing for constant folding", and on the self-reference node it indicates that dynamic dispatching isn't propagated from dyn disp input to dyn disp output. It's pretty much pot luck. You can use Heap Peek to get some translation. -

Durable DAQ USB hardware and software that is easy to use?

Aristos Queue replied to michelle827's topic in Hardware

michelle827: Check out myDAQ: http://www.ni.com/mydaq/ -

Tim_S has the classic pattern that we are investigating. Yair, yours sounds like one I'd like to see a visual of. It sounds distinct from the ones collected so far. We don't have anything in mind to solve your case at this time. We're collecting use cases to see what we can come up with. Tim_S's use case might be solvable with some extensions to the Parallel For Loop, but we're looking into other possibilities.

-

We all know about the pain of having to write two nearly identical VIs, differing only by some single function or some data type and the code maintenance burden that creates. There's lots of discussion about what LV could do about that. Today I'm asking about a different problem entirely. I want to hear about (and if possible, see posted pictures of) VIs where you have multiple blocks of nearly the same code over and over. Maybe its some manual pipelining that you've done, or for some reason you manually unrolled several instances of a loop. Perhaps you have six copies of your UI on the front panel and so you have six identical event structure loops on the block diagram. These are the trivial cases that I can rattle off the top of my head. Do you have examples of places where you had to ctrl+drag blocks of code? They can be the same block of code exactly or code that you duplicated and then tweaked each copy. Regardless, it needs to be on the same block diagram. Why am I looking for these? There are a couple categories of code replication that R&D has identified as a priority to do "something" about to make writing and maintaining such code easier. The current proposals would address some fairly specific niches on FPGAs, but we think we can create a feature that is much more powerful with about the same amount of effort, but we need VIs as fodder for analysis. So, if you have a VI with such replicated code and you don't mind discussing it and/or posting pictures of it, please, load 'em up in this thread.

-

Moral assertions for code

Aristos Queue replied to Aristos Queue's topic in LabVIEW Feature Suggestions

I agree. Damn the DAQ driver with its inheritance hierarchy of functions! Curse the evil VI Server! I say we remove all the property nodes and invoke nodes. GPIB and static user interfaces, the way God intended! -

I have a weird art project in mind involving spinning tops, but I have no idea what the physical requirements are to make it work. The Internet has been no help -- a lot of the sites either describe how to build one particular gyroscope or they're fairly advanced physics books that assume you already know the basics. Here's what I'm hoping someone on LAVA can answer: A spinning disk or top resists being knocked over. Assume that the spin is motor powered so it doesn't stop spinning. Is there some formula that relates the mass of the disk and the rate of its spin to the height of the stand that it will support on its own? Are there other relevant physical quantities that affect height? To some degree, the spinning disk resists being knocked over by a sideways force. How do you compute how much force the disk will resist and then right itself?

-

Cluster Version control Design question

Aristos Queue replied to ritesh024's topic in Application Design & Architecture

Ah, the "versioning data for preservation or communication with older clients" problem. You are not the only one to encounter this. 11 months ago, I released a draft of my solution to this problem. The library is complete and ready to use, although there are features that aren't there yet. I hope a new version is available within the next month or so. https://decibel.ni.com/content/docs/DOC-24015 You may not like my solution -- it relies on classes instead of clusters for various reasons. But the patterns of usage that I establish there are based on lots of research into the data serialization mechanisms of other programming languages/libraries and various file formats. But the short answer is that this approach allows the reading and writing of older formats for arbitrary data. Other libraries: MGI: http://mooregoodideas.com/robustxml/ OpenG also has a very robust solution but I cannot find where to download it -- my Google skills have completely failed this morning. Someone else may post a link to it.