Posts: 68

Days Won: 6
Everything posted by mcduff

-

I have always used this library to prevent the screensaver and Windows lock from occurring. Our IT locks down the computer so the screensaver and lock screen settings cannot be changed. This library basically tells Windows it's in Presentation mode (e.g., a slideshow or watching a movie) so that the screen will not go to the screensaver or lock screen.

-

What about the flat style controls from JKI or Dr Powell? For a graphical programming language, making a nice UI is the hardest thing.

-

DMC has controls that are editable and resemble system controls (enum). You can get it on VIPM or here.

-

Serial Communication Question, Please

mcduff replied to jmltinc's topic in Remote Control, Monitoring and the Internet

Can you try a serial port monitor while the EXE is running? I have no experience with this, but maybe something like it. There is probably a better tool that someone else here can suggest.

-

I don't have extensive experience with PXIe systems, but have made some systems in the past and present. A current system collects data continuously at 20-30MSa/s for 32 channels. At that rate the 15TB RAID array is filled up in a few hours.

Advantages:

Throughput. Unless your modular instruments are attached via Thunderbolt, it is hard to beat PXIe throughput. However, it might not be needed in your case.

Triggering. Simple to implement triggering or advanced triggering through the backplane. Can be done with modular instruments also, but more wires and more hassles. If you need a synchronized start, the PXI backplane is your friend.

Synchronization. Can share reference/sample clocks through the backplane. Can be done with modular instruments also, but more wires and more hassles.

Compact. Somewhat more compact than modular instruments.

Disadvantages:

Expensive. Noted in previous message.

Support. If you have instruments from different vendors and there is a problem, each vendor may blame the other. I had a chassis, a controller, and digitizers from three different companies. When there was an issue with the cards and the slots they could occupy, everyone at first blamed the other. Eventually, it was found that the chassis had an issue with the interrupts. PXI is supposed to be standardized but ...

Future proof. The embedded PXIe controllers seem to always be a generation or two behind current CPU offerings. In addition, their components are difficult to upgrade or have limited upgrade capabilities.

You may want to also purchase an external Thunderbolt (TB) controller card. This allows you to attach the chassis to a computer via the TB port and control it from that computer instead of using an embedded controller.
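As a rough check on the "filled up in a few hours" figure, here is a back-of-the-envelope sketch. It assumes the 20-30MSa/s rate is per channel and that each sample is stored as 2 bytes (e.g., a 16-bit digitizer); neither detail is stated above, and the function name is just for illustration.

```python
def hours_to_fill(capacity_bytes, channels, rate_sa_per_s, bytes_per_sample=2):
    """Hours until a disk array of the given capacity is full.

    bytes_per_sample=2 is an assumption (16-bit samples), not a
    figure from the original post.
    """
    throughput = channels * rate_sa_per_s * bytes_per_sample  # bytes per second
    return capacity_bytes / throughput / 3600.0


CAPACITY_BYTES = 15e12  # 15 TB RAID array
CHANNELS = 32

for rate in (20e6, 30e6):  # assumed to be the per-channel sample rate
    hours = hours_to_fill(CAPACITY_BYTES, CHANNELS, rate)
    print(f"{rate / 1e6:.0f} MSa/s per channel: about {hours:.1f} h to fill")
```

Under those assumptions the sustained write rate is between roughly 1.3 and 1.9 GB/s, which is the kind of throughput where the PXIe backplane matters.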

-

I don't work in industry, I work in an R&D facility, but I am slightly pessimistic. My gut feeling is that Python will take over sometime in the near future. I have seen it before. When I first joined my group about 15 years ago all of the analysis was done using Matlab; now everyone uses Python. NI seems to be pushing solutions such as Flex Logger, Instrument Studio, etc., instead of LabVIEW. (Interestingly, those solutions look like they were built with NXG.) On the plus side, we recently had a presentation by an NI rep who detailed plans for new DAQ equipment that was/is going to be in development. They were looking for feedback. The future is interesting.

-

Excuse my ignorance and stupidity, but I never really understood the following settings in the Compile Settings. I always leave them as the default value. Do those settings remove both Front and Diagram from VIs in the EXE? I know the setting may affect debugging an EXE file and possibly some of the tools to reconstruct VIs from an EXE, but should I be checking or not checking those options in an EXE? Thanks

-

You may not be able to specify the channels in any order you want. If I recall correctly for some DAQs you can only specify them in ascending order. Not sure if this holds true for simulated instruments.

-

Std Deviation and Variance.vi outputs erroneous value in corner cases

mcduff replied to X___'s topic in LabVIEW Bugs

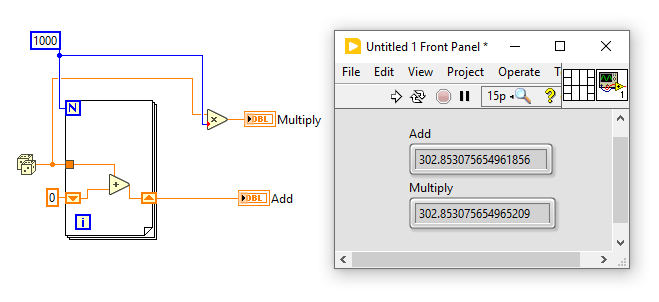

I do not think it is a bug, just floating point math. You don't have infinite precision. See below for another example.

-

Does this token need to be added to the INI file of a compiled exe or does it automatically get included?

-

The curve shown in the XY graph is inconsistent with the actual data

mcduff replied to Oliver_Lei's topic in LabVIEW General

This is why "Export to Excel" is a useless feature. The data is exported using the display format of the plot's axes; since the plot uses SI formatting, it is expressed as 10k, 100k, etc. This does not help if someone wants to plot the data in Excel.

-

Including solicitation of interest from potential acquirers

mcduff replied to gleichman's topic in LAVA Lounge

Good read here, a bit depressing: https://nihistory.com/nis-commitment-to-labview/

-

The LabVIEW Multicore Analysis and Sparse Matrix Toolkit is definitely faster, but it seems to have issues with large numbers of points. For example, on my laptop I cannot do an FFT of 20M points with it, but the built-in FFT does 100M points no problem.

-

Read and copy the file in chunks. No need to open the whole file at once. To increase speed, write in multiples of the disk sector size.
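For illustration, the same idea in text form (in LabVIEW this would be a loop around the file read and write primitives). This is only a sketch: the 512-byte sector size and the 1 MiB chunk size are assumptions, and `copy_in_chunks` is a made-up name.

```python
SECTOR_SIZE = 512                # assumed sector size in bytes; check your disk
CHUNK_SIZE = 2048 * SECTOR_SIZE  # 1 MiB, a whole multiple of the sector size


def copy_in_chunks(src_path, dst_path, chunk_size=CHUNK_SIZE):
    """Copy src_path to dst_path one chunk at a time.

    Only one chunk is held in memory at any moment, so the file size
    does not matter. Returns the number of bytes copied.
    """
    copied = 0
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:  # empty bytes object means end of file
                break
            dst.write(chunk)
            copied += len(chunk)
    return copied
```

The last chunk is usually shorter than `chunk_size`; every write before it is sector-aligned.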

-

Including solicitation of interest from potential acquirers

mcduff replied to gleichman's topic in LAVA Lounge

Is this number greater than the number of COBOL programmers? It seems like it's getting close.

-

Before you convert to a string, why not take the string from the Read function, convert it to a byte array, split it into the fixed lengths you want, and then convert each piece into a string? This can be done in a For Loop: have an array of lengths and use that to split the string.
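In text form, the loop looks roughly like this. It is a sketch only: `split_fixed` is a hypothetical name, and the ASCII encoding and the example field lengths are assumptions, not details from the original question.

```python
def split_fixed(raw, lengths, encoding="ascii"):
    """Split a byte string into consecutive fields of the given lengths.

    Each field is decoded to text only after it has been cut out,
    so the split always happens on byte boundaries.
    """
    fields = []
    offset = 0
    for n in lengths:
        fields.append(raw[offset:offset + n].decode(encoding))
        offset += n
    return fields


# Example: a 13-byte message made of a 5-, a 5- and a 3-byte field.
print(split_fixed(b"ABCDE12345xyz", [5, 5, 3]))
```

Any bytes left over after the last length are ignored, and a message that is too short yields short or empty fields, so a real implementation would probably check the total length first.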

-

You should link this to the original discussion on the darkside (https://forums.ni.com/t5/LabVIEW/Help-issues-with-arrays/m-p/4299181). There you marked the VI by Altenbach that you posted here as the solution to your problem. What array do you want to extend? Your question isn't clear to me. Maybe some of the learning resources at NI would help?

-

To make things really efficient, echoing what was said earlier:

1. Read about 100 ms of data (1/10 of the sample rate), except set the number of samples to the closest even multiple of the disk sector size. Typically the sector size is 512 B, so for that case set the number of samples to 10240.

2. Set the buffer size to an even multiple of (1) that is at least 4 - 8 s worth of data.

3. Use the built-in logging function in DAQmx to save the data; don't roll your own with a queue as in your example.

4. Get rid of the unbounded array in your example; it keeps growing.

I have not taken data for years non-stop, but using 1 & 2 above, I have taken data continuously for 2 weeks on 8 channels at 2MSa/s each, using a laptop and a USB-6366.
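The sizing arithmetic for the read size and the buffer size can be sketched as follows. The 100 kSa/s sample rate is an assumption, picked because it reproduces the 10240 figure; the function names are hypothetical, and "even multiple" is read here as a whole-number multiple.

```python
def samples_per_read(sample_rate, sector_size=512, read_seconds=0.1):
    """Samples per read: about read_seconds of data, rounded to the
    nearest whole multiple of the disk sector size."""
    target = sample_rate * read_seconds
    multiples = max(1, round(target / sector_size))
    return multiples * sector_size


def buffer_size(read_size, sample_rate, min_seconds=4.0):
    """Smallest whole multiple of read_size holding at least
    min_seconds worth of samples."""
    needed = int(sample_rate * min_seconds)
    reads = -(-needed // read_size)  # ceiling division on integers
    return reads * read_size


rate = 100_000  # Sa/s, assumed for this example
n_read = samples_per_read(rate)
n_buf = buffer_size(n_read, rate)
print(n_read, n_buf)
```

These two numbers would then be used as the samples-per-read and the input buffer size when configuring the DAQmx task.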

-

Their "LabVIEW distribution" is nothing more than using the DLL import function for the RSA.DLL. I have not looked at the other sources on the GitHub page; not sure if the source code for the DLL is included on that site or not. I have only downloaded the DLLs.

-

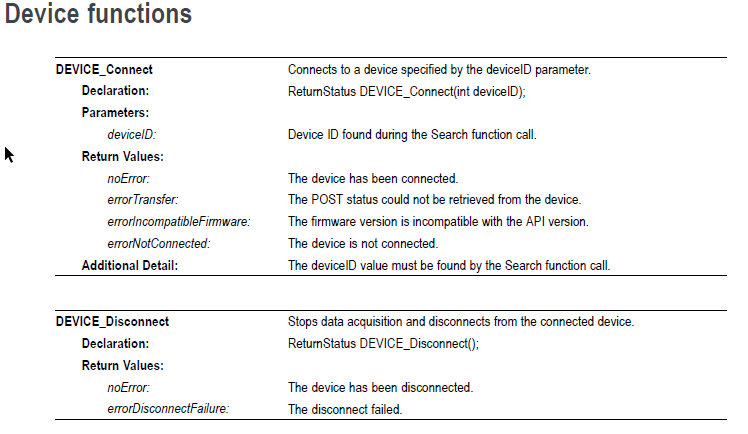

TEK did not make a LabVIEW API. I used the DLL import wizard along with the RSA DLL. Not sure what API Matlab is using but below is a screenshot from the programming manual; no device ID for a disconnect. These are the functions exposed in their DLL. This Spectrum Analyzer has its own DLL and it can continuously stream data up to 56MB/s; as ShaunR said, this type of streaming is not well suited for a COM port. This device is not using SCPI commands with their provided DLL. However, it can use SCPI commands if you install their software (Signal Vu) and make a virtual VISA Port. But if you do that, then no high speed streaming which is needed for this application.