-

Posts

544 -

Joined

-

Last visited

-

Days Won

17

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by PaulL

-

Thanks for creating a CAR! (I have mentioned this to NI Support before but I guess it was always adjunct to a more pressing issue.) I don't have the Desktop Execution Trace Toolkit in the currently installed version of LabVIEW (2010) so I'm afraid I don't know if this applies there or not. Thanks again!

-

I am hoping you can clarify the end goal of this task a bit better. I think you are trying to get a list of suitable objects in the step you show. Later you will load an object of the type the user selects, in a sort of factory (not shown)? So this is an effort to use a plug-in architecture? Or am I missing this entirely? Here are a couple ideas: 1) I show in the paper linked in this thread (http://lavag.org/topic/14213-strategy-pattern-example/page__fromsearch__1) how we use the Factory Method Pattern. 2) Another trick we use to determine a directory at run-time is to create a determinePath method on an object we do load. This method calls the "Current VI's Path" function (and then we can use relative paths from there). Paul

-

I discuss interfaces in this context carefully in Appendix I to the paper linked in this thread: http://lavag.org/topic/14213-strategy-pattern-example/page__fromsearch__1 I hope you find it helpful.

-

We have deployed quite a few Object-Oriented applications on RT on cRIOs now. We did encounter some serious issues early on (see below for what I can remember off the top of my head) but now that we know how to work around these our applications work great (and within the confines of what we do working with objects on RT is very easy to do). Relevant problems and solutions: 1) Performance issue accessing objects within another object. We had a set of individual objects to do specific things and added these objects as attributes inside a larger collection class, using accessor methods to pull off each of these, and then performing operations as appropriate. (That is, one object was a collection of other objects.) Our tests showed that in this circumstance (lots of objects within another object) the execution time to access an object in the collection was prohibitively lengthy for our RT applications. We improved performance (and the API was easier anyway) by putting the objects inside a cluster instead, which is OK since this was strictly a collection and had no behavior itself. (Note that accessor methods otherwise seem to work just fine.) [This is an issue in LabVIEW 2010. We haven't tested it in LabVIEW 2011. I do not know what the root cause of this issue is.] 2) Also in LabVIEW 2010, we encountered many issues with builds. The build times were extraordinarily lengthy and more often than not failed (meaning it would complete on the same code maybe one out of five times). National Instruments helped us around this issue (thanks, NI!) in a couple ways, but most importantly we learned that we could avoid these issues by minimizing source interdependencies in our code. We began implementing interfaces (OK, not Java-style interfaces but our own version of interfaces), which dramatically reduced interdependencies between different sections of our code. This had a huge impact and we haven't had a problem with a build in many months now. (The use of interfaces results in a better application design in any case!) 3) For the record, we also encountered issues with debugging in the earliest release on RT (stepping into a dynamic dispatch VI didn't always work correctly) but we haven't seen this in LabVIEW 2010. 4) Also for the record, the RTETT traces do not show which dynamic dispatch VI is running (as of LabVIEW 2010). This hasn't been a big problem for us in practice. Now that we have "conquered" these issues, working with objects in RT is typically a quite pleasant experience for us. So, go for it, but use interfaces! [i remembered that I had started a similar topic very nearly two years ago here: http://lavag.org/topic/11928-lvoop-on-rt-stories-from-the-field/page__hl__%2Bobjects+%2Bon+%2Breal-time__fromsearch__1, The only comment there is my initial comment.]

-

There is a more recent thread on this topic here: http://lavag.org/topic/15449-lvoop-on-rt-concerns/page__pid__93244#entry93244

-

Re-Designing Multi-Instrument, Multi-UI Executable

PaulL replied to jbjorlie's topic in Application Design & Architecture

On the Windows side we use the name of the computer (e.g., 'DCS') and update the hosts file appropriately. (I think eventually IT will configure the network so we won't have to use the hosts file, but for the moment that is how we do it.) On RT I think we could do more or less the same thing, but in practice we store the IP address in a configuration file, and construct the URL programmatically. This just seems simpler and, yes, removes the mysterious element. To answer your specific question: Currently the control computers do have static IP addresses, yes. -

Re-Designing Multi-Instrument, Multi-UI Executable

PaulL replied to jbjorlie's topic in Application Design & Architecture

OK, just a couple comments: SVE on RT: Our model is as follows: Views run on Windows. They can open and close independently of the controllers running on the cRIOs (RT). The controllers can run indefinitely, but they do require a connection to the SVE hosted on Windows in order to operate normally. (If the Windows machine shuts down or the Ethernet connection breaks the controllers enter a [safe] Fault state.) If you can't count on the Windows machine being up, then I agree you need to host the SVs on RT. I don't think there is any reason to do so otherwise (even though the page on SVs tells us we should). (I can explain that further if anyone is curious.) We haven't seen the issue you see with buffering, but in our applications we don't let listeners fall behind (probably we don't buffer more than 10 items, if that). Yes, what you describe sounds like a serious issue for that use case. For the record, we use NI-PSP and the Shared Variable API for programmatic access to networked shared variables. -

Re-Designing Multi-Instrument, Multi-UI Executable

PaulL replied to jbjorlie's topic in Application Design & Architecture

While I certainly agree there are some areas where SVs can improve (and a few years ago I would have agreed with your assessment when they had much more serious problems) I think SVs now deserve more credit. (I will state upfront that we still see occasional issues with the SVE--mostly having to do with logging, but we have workarounds for these, and I think if there are more users NI will fix these issues more quickly.) 1) Weight. We've never had an issue with an SVE deployed on Windows. We never deploy the SVE on RT. (We don't have any reason to do so.) Our RT applications (on cRIOs) read and write to SVs hosted on Windows without any problem. 2) Black box: It is true that we don't see the internal code of the SVE, but we don't have to maintain it either. At some level everything is a black box (a truism, I know)--including TCP/IP, obviously--it just depends on how much you trust the technology. I understand your concerns. By the way, in my tests I haven't noted a departure from the 10 ms or buffer full rule (plus variations since we are deploying on a nonRT system), but there may be issues I haven't seen. The performance has met our requirements. 3) Buffer overflow: I'm not sure I understand this one. SV reads and writes do have standard error in functionality, so they won't execute if there is an error on the error input. We don't wire the error input terminal for this reason but merge the errors after the node. (I don't think this is what you are saying, though. I think you are saying you get the same warning out of multiple parallel nodes? We haven't encountered this ourselves so I can't help here.) 4) While we don't programmatically deploy SVs on RT, as I mentioned, I agree it would be good (and most appropriate!) if LabVIEW RT supported this functionality. For the record, we do programmatically connect to SVs hosted on Windows in our RT applications, and that works fine. 5) Performance: SVs are pretty fast now, both according to the NI page on SVs, and according to our tests and experience. I'm sure there are applications where greater performance is required, and for these they would not be suitable, but for many, many applications I think their performance is sufficient. 6) Queues, events, and local variables are suitable for many purposes but not, as you note, for networked applications. (We do, of course, use events with SVs.) TCP/IP is a very good approach for networking, but in the absence of a wrapper does not provide a publish-subscribe system. Networked shared variables are one approach (and the only serious contender packaged by NI) to wrapping TCP/IP with a publish-subscribe framework. If someone wants to write such a wrapper, that is admirable (and that may be necessary and a good idea!), but I think for most users it is a much shorter path to use the SV than to work the kinks out their own implementation. I haven't tried making my own, though, and the basics of the Observer Pattern do seem straightforward enough that it could be worth the attempt--it's just not for everybody. (I'd also prefer that we as a community converge on a single robust implementation of a publish-subscribe system, whether this be an NI option or the best offered by and supported by the community. At the present time I think SVs are the best option readily available and supported.) 7) Binding to controls: We do use that feature sometimes, but honestly we tend to do most of the updates ourselves using events. 8) SV events: Yes, I wholeheartedly agree that SV events should be part of the LabVIEW core, and I have said as much many times. By the way, we do generate SV user events on RT without the DSC Module by using the "Read Variable with Timeout" function and generating a user event accordingly. This is straightforward to do and works fine. We have only done this with a single variable (which supports many possible message types via the Command Pattern), but I am guessing it would not be too terribly difficult to extend this to n variables and avoid the use of the DSC Module altogether. (We haven't attempted this to date because we also use the logging capabilities of the DSC Module.) Perhaps the best evidence I can provide is that we have deployed functioning, robust (and quite complex) systems that use networked shared variables effectively for interprocess communication. Hence, at least for the features we use, in the manner in which we use them (which is in the end quite straightforward and simple to implement), we know networked shared variables offer a valid and powerful option. -

Object serialization (XML) and LabVIEW

PaulL replied to PaulL's topic in Object-Oriented Programming

Yes, of course, I would be most interested in it. Yes, I spent a lot of time trying to use the references to work with XML even back in 2009, but they don't give you what you need, at least not in the run-time environment. It would be very interesting to know what methodology the native Flatten To XML and Unflatten From XML methods use and if it is possible for someone outside NI to do the same. -

closed review Suggestions for OpenG LVOOP Data Tools

PaulL replied to drjdpowell's topic in OpenG Developers

I've thought about this, and I wasn't asking for the correct thing--but there is a need that I still don't think LabVIEW fulfills--and that I view as quite important. It's not exactly a new issue. Rather than hijack this thread, I started a new thread here: http://lavag.org/topic/15440-object-serialization-xml-and-labview/ -

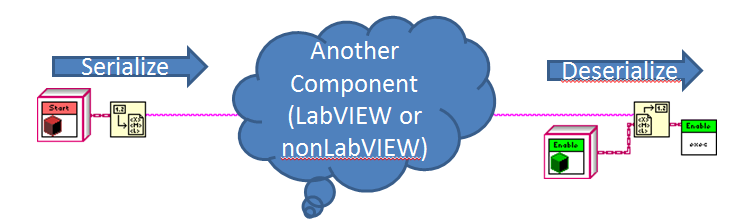

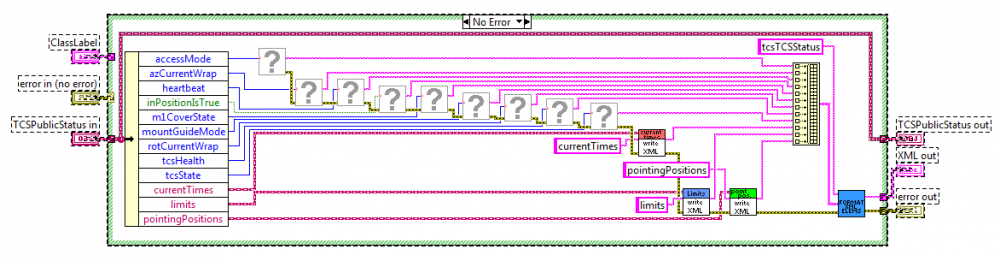

For reference: This thread is in part an off-shoot of post #18 in this thread: http://lavag.org/top...oop-data-tools/. There is however, a thread (and an idea!) here (http://forums.ni.com...m/idi-p/1776294) and the discussion thread there includes links to quite a few related LAVA threads. Top-level goal: I will describe what we want to be able to do at the top-level using the attached diagram: We have an object that we wish to serialize (flatten) [start in the diagram] and send to another system or system component. The other system component needs to process that information, and may itself send us some other serialized object [Enable in the diagram] that we need to deserialize (convert back to an object) for use in our component. Why do this? This is a common use case in many software engineering applications. Our particular use case is to implement the Command Pattern as a way of sending instructions. For instance, Enable is one object of type Command. The system executes Enable::execute() when Enable is the object on the wire. Similarly, it executes MoveToPosition::execute if the object type is MoveToPosition(). Note that the objects may contain attributes suitable for the command type (e.g., MoveToPosition has an attribute for the demand position). [serialization captures the object state information attribute values, not the all information about the object. In particular, the methods form part of the class definition.] Basic Implementation: We serialize and deserialize objects to send between between applications within a component (for instance, between the View application and the Controller application) and between components throughout our system quite effectively. The Flatten To XML and Unflatten From XML methods in the diagram work quite well for this purpose, as do the Flatten To String and Unflatten To String functions on the Data Manipulation palette, with one catch. They only work within LabVIEW, however. Limitations (the problem): We need to handle the general case where we may implement one or more of the components [Another Component in the diagram] in some other development environment. The two components must be able to speak the same language across the interface, of course. XML is, I think, the best suited approach for this, but the native LabVIEW Flatten To XML and Unflatten From XML methods will not work for this purpose. The critical problem is that if an object contains default data the flattened XML indicates that the data is default, which is only useful if the reader has access to the original class (in this case in LabVIEW). Another serious problem is that that the flattened XML format is not in a format that it is appropriate for this sort of data interchange. A much better approach is that of Simple XML (which is the approach JKI’s EasyXML takes). Workaround implemented: We have actually implemented a working interface between LabVIEW and Java components by writing specific methods on each class to break down the data into pieces JKI’s EasyXML can digest. This works but it is quite cumbersome to provide a functionality that I think is super advantageous for effective implementation of interoperable systems and I suggest, not coincidentally, for the future success of LabVIEW. [Aside: the other critical piece that is missing, I think, is the other ML—UML integration.] Other workarounds? We have spent a great deal of time attempting to implement other approaches to serialization (starting in 2009). These have centered on interpreting or transforming the flattened object information (XML or string). We have inevitably run into two road blocks. The “default” issue mentioned above. There is no reasonable way, to my knowledge, to deal with this issue. Access to type information (especially to build an object from XML data). In the thread mentioned above, I sought the default value of the class. This is actually not sufficient (at least not in the form we have it). What we need is access to the class data cluster (i.e., be able to assemble a cluster of the proper type and values)--and then be able to write the cluster value to the object. I don’t think this is currently possible. Possible solutions: All possible solutions involve some action on NI’s part, as far as I can see. Allow for a third party developer to implement an XML framework. This requires resolution of both road blocks I just mentioned. (Note that resolution of road block #2 can be problematic from a security point of view, so NI may not want to do this.) Develop a serialization format suitable for this purpose. This would involve creating a variation of the existing XML framework that already exists (and would be relatively easy to do, I think).

-

Re-Designing Multi-Instrument, Multi-UI Executable

PaulL replied to jbjorlie's topic in Application Design & Architecture

Which state? :-) On subpanels: We are using subpanels more and more. Here are some reasons why: Encapsulation: A set of controls or indicators handles some closely related functionality. We can focus on that one thing without worrying about (or worse, breaking) other functionality. Reusability: We can use this same VI in a subpanel in another view in our system (e.g., for a higher-level component) as appropriate. Grouping: We can make an entire subpanel visible or invisible in certain states. (Certainly this means we need to encapsulate the proper information together!) We have not observed any performance issues with such an approach. In general, we don't see performance issues with updating numeric displays for modern computers at rates we use (up to 62.5 Hz). Of course, users won't be able to see things that fast. (Your 50 ms update rate is barely observable, if at all.) When we do want to limit the updates (e.g., complex graphs or charts that we don't want to scroll at ridiculous rates) we either update at specific intervals (making sure we always display valid data, as mentioned above) or we apply a value deadband. For the record, we use shared variables (a button event writes to a shared variable; a shared variable value change events triggers an update to one or more display elements; shared variable binding for the simplest cases). This works quite well. The event handling deals with initial reads cleanly. We do use the DSC module for the shared variable events. By the way, you are asking a lot of good questions! :-) On communication: As I said, we use shared variables (networked shared variables). We use this approach because we communicate over Ethernet (between various computers and locations and between controllers--often on cRIOs--and views) and because we want to use a publish-subscribe paradigm--that is, multiple subscribers will get the same information (or a demand may come from a view or a higher level component), and shared variables handle both of these needs well. (We also don't need to create or maintain a messaging system.) -

closed review Suggestions for OpenG LVOOP Data Tools

PaulL replied to drjdpowell's topic in OpenG Developers

Yes, we need this in order to make a generic XML parser. Currently (as of LabVIEW 2010) this is only accessible to the NI folks, as I understand it. -

How to get strings from a ring w/o UI thread swap?

PaulL replied to Stobber's topic in LabVIEW General

We recently discovered this VI among the utilities: vi.lib\Utility\VariantDataType\GetNumericInfo.vi that returns all the string values for an Enum. We have found it quite useful. (You can then index the array to get the specific string.) (I just tried it and it doesn't seem to work for a ring control. I always use Enums anyway.) -

I found some time to look at the code in post #9. (One of my colleagues has 2011 installed.) I realize ShaunR and O-o already pointed out why this doesn't work (the event handler processes events in the order it receives them). From a high-level view, if I analyze this as a statemachine, I say there are two triggers, one for addAndMultiply, and one for addAndMultiplyThenDivide. Since the controller always responds to a given trigger in the same way (i.e,, with the same behavior), there is only one state. So we have one state that handles two triggers. There isn't then a need for a statemachine here, but if I did implement it thus, within OneState (for me this is a class but it needn't) be, I would call three model methods. For example OneState::addAndMultiply() would call Calculator::add and Calculator::multiply(). Similarly, OneState::addAndMultiplyThenDivide would call Calculator::add, Calculator::multiply(), and Calculator::divide. I realize that isn't what O_o's plan is, but I think there are a couple things to keep in mind, most importantly that when we are talking about state machines we need to distinguish between triggers (what are asking the system to do) and the state-based behavior (what the system does in response to the triggers) that varies between the various states and is the reason for using a state machine.

-

Would it be possible for you to provide a real example of this? I don't describe state-based behavior quite the same way. When I design a state machine I define states and the behaviors the system has for those states in response to various triggers (external or internal). If the system has different behavior in response to a trigger, then I have a new state. If you describe the behavior that you want then I will show you what I mean....

-

LVClasses in LVLibs: how to organize things

PaulL replied to drjdpowell's topic in Object-Oriented Programming

Just to clarify what I meant: I have a template and in that template I may have a set of classes. I use those classes in the same way in different applications (say, AppA and AppB), but each instance must be unique (because I need to use both pieces in a still larger application, and they link to specific typedefs for A and B, for example, so they are not common code). I can rename each class with an A or B extension to provide unique namespaces, but if there is a group of classes it is simpler to package them together in a library and then rename just the library with an A or B extension to provide a unique namespace. [i'm looking at my actual template and I only see one case where we actually do something like this with classes. More frequently we have libraries for typedefs and shared variables where the libraries have component IDs in the names. The templatized code also has libraries with classes for States and Commands (concrete implementations of abstract classes), since we want to load these into memory as a unit; they form collections.] -

The main point is to separate tasks into separate pieces of code, and in this case, into separate applications. (We actually have separate LabVIEW projects for each of the pieces.) As you pointed out, you can rebuild and redeploy one piece without touching the other(s). With that comes better maintainability (in particular, one doesn't break one thing while fixing another) but I think also better design (we think about what really belongs where). If you want to read more on this, you can search for topics on tight cohesion, loose coupling, encapsulation, open-closed principle, and especially Model-View-Controller (deals directly with this issue, and is super-important, but there are lots of subtleties here).

-

LVClasses in LVLibs: how to organize things

PaulL replied to drjdpowell's topic in Object-Oriented Programming

We went through pretty much the same process of discovery. Short answer: Yes, we put classes that work together to perform some specific tasks (e.g., configuration) in a library (.lvlib). We also put classes (or other files) in a library when a group of these form part of a template but in each instance we need a unique namespace. (The library provides a namespace and we don't have to rename all the individual contents. We can also copy the entire library on disk at once.) Finally, sometimes we have a group of child classes that obviously go together (for examples, we have Commands.lvlib and States.lvlib that contain children of CommandThisComponent.lvclass and State.lvclass, respectively.) In all cases we need all elements of the library in the project anyway, and it is actually quite handy to have everything grouped under a library in the project. (I think this is an especially important practice, by the way.) When these situations do not apply (i.e., we have a class that we may call all on its own) we usually do not put classes in libraries since we do not see an obvious benefit. (Putting disparate classes in a library adds unneeded items to the dependencies, as you say. We could make a library for an individual class, but especially since a class is already a library there doesn't seem to be anything to gain by doing that.) The other thing to keep in mind is that if you open a nested library it will open the parent library and any other libraries in the nest. Paul -

LabVIEW RT does support event structures, at least in the newer versions of LabVIEW. (We use event structures in RT ourselves!) Generally one doesn't have front panels with a deployed RT application. (We don't handle front panel events directly. The way we handle this is to run the View as a separate application on a desktop machine. The View handles front panel user events and then sends messages to the RT application. Each time the RT application receives a message it generates a user event that a controller loop, properly speaking, handles.)

-

DSC Toolkit, does anyone use it?

PaulL replied to Jordan Kuehn's topic in Remote Control, Monitoring and the Internet

We also use DSC for NSV events and Citadel logging and find it to be a powerful tool. The DSM and MAX interfaces are helpful (DSM much more so) but for complex data we still have to write our own methods. We have had issues with Citadel reindexing databases on occasion (which can pretty much bring the computer to a halt temporarily) but we are implementing strategies to avoid this (most notably, archiving and deleting databases every day). (It is worth noting that we are writing a lot of data at relatively high logging rates. The performance here is really pretty good.) For the record, we have separately implemented dynamic events in RT on VxWorks (not supported by DSC) by using the "Read Variable With Timeout" method and generating a user event when we read something. Surprisingly simple. -

Yes, I would agree that it is easier to develop (and especially debug!) code on the real-time side. I am guessing there are no environmental requirements that preclude the use of PXI. (I only ask because that is the primary reason we opted to work with cRIOs--they are specified to work in our environment.)

-

Are you doing the PID on the RT side or the FPGA side? If you do this on the FPGA side, you might be able to achieve your 2 kHz loop rate. If you do this on the RT side, I would recommend a more powerful device. We use a cRIO-9074 with the PID loops on the RT side. The fastest loop, though, runs at 62.5 Hz, which is about as fast as we have been able to achieve with reliable performance with a cRIO-9074. The applications are fairly complex, though, and we the inputs come in over the network via shared variables. I did a test where, as I recall, I was just testing shared variable communication (my memory is not so good here) and 2 kHz was the absolute limit, but 1 or 0.5 kHz was the meaningful limit where there weren't significant buffering delays. I know that's a sloppy answer, but it is something.

-

Dave, The scheme my group developed a couple years ago is very similar to what you did. I have attached an example. The missing VIs are EasyXML VIs--my computer crashed and I haven't reinstalled these just yet. The major difference is that we didn't create an intermediate cluster for each class. Doing so is extra maintenance, doesn't help with writing except to include a version number, and I don't think helps much on reading. (If the data exists with the correct tag and format in the XML, the reader can always find it--even if the order of the data has changed. If the data doesn't exist the reader will throw an exception, unless we write some complicated mutation code within the reader method itself, which, I think, is not a winning proposition. Even then, we would really have a different object as an output. I'm not sure this is even possible.) I must be missing something here, but I don't see what. Paul "In my current project I have a SystemConfig object that is an aggregation of its own data and unique config objects for several subsystems. I did this so I wouldn't have half a dozen different config files. Each config object has it's own serialization implementation, similar to the one above. (It doesn't use JKI's Easy XML. I've been exploring that on my own.) When the SystemConfig.Serialize method is invoked it in turn invokes each object's Serialize method, puts all the serialized strings in an array, adds the version number, and flattens it all to xml so it can be written to disk." OK, we have found it is much easier to have multiple configuration files. Small, specific files are much, much easier to maintain. (If I add an element, just the one small file changes, not everything.) Moreover, we can read the required configuration exactly where we need it, which we have found much simpler than reading everything globally and then distributing the pieces. When each class has only the configuration it needs it is much more coherent as well (which is the most important benefit). The individual configuration files are much smaller (so that we don't have the monstrous files another poster complained about) and the files are much more easily readable as well, since the structures are inherently simpler. We can always read our files! For clarification: Again, within LabVIEW (i.e., for configuration files) we don't use EasyXML. We just use the native serialization tools (flatten to string or XML) with clusters, not objects. This works fine. Yes, there could be an issue between versions, but in practice this is not a problem for us, since when we modify a typedef we generate new XML files anyway.

-

I thought I had posted such an idea to ni.com/ideas, but I couldn't find it. Hence I created a new idea: http://forums.ni.com/t5/LabVIEW-Idea-Exchange/Support-serialization-of-LabVIEW-objects-to-interchangeable-form/idi-p/1776294