-

Posts

1,185 -

Days Won

110

Posts posted by Neil Pate

-

-

@Mahbod Morshedi if you are new to software development then forget about things like OOP and HALs. Just start small and slowly add features.

Pick a single framework (DQMH is probably a good place to start) and go slowly.

OOP is not the only way to write software, and in fact I would say it generally promises far more than it delivers. The implementation in LabVIEW also comes with its share of "interesting pain points".

My advice would be to stick to well-understood imperative concepts (i.e. just code it as you think, forget about trying to force things into an OO model).

-

13 minutes ago, crossrulz said:

This will only affect you if you use custom fonts. If using the default since Windows 7, you will see no change. Neil was using fonts to match XP.

Neil, and his current team, and all the previous teams he has coached.

My problem is with the lack of choice.

-

3 hours ago, hooovahh said:

This idea was implemented.

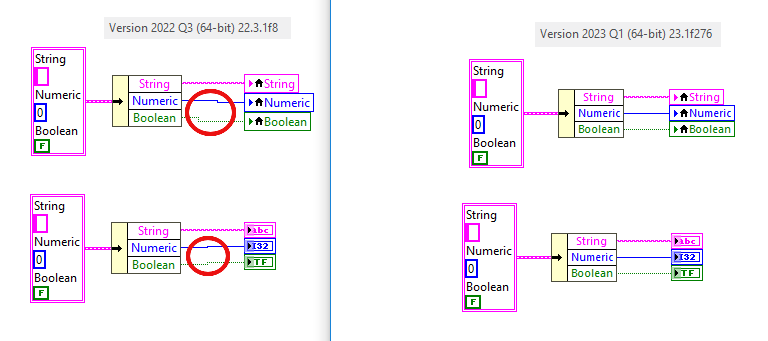

Opening existing code will create bends in wires where there weren't any before.

Thanks @hooovahh. And I hate it. Changes to the editor experience should be opt-in. I cannot imagine the outrage if Visual Studio Code changed some editor setting and then did not make it configurable.

To quote Poppy from Trolls ("I mean, how hard can that be"). You will know it if you have kids under 10.

-

The forced font size change for clusters (and other things) meant it was an immediate uninstall for me.

-

Making a cluster a class is not going to help at all unless you also take some time to restructure your data.

-

2 hours ago, infinitenothing said:

That link is still invalid for me

Weird, sorry about that.

Does this work? https://discord.gg/KaXKg5Jw

-

For now I would prefer to keep the link expiring. Here is the new one https://discord.gg/ZghDZsxZ

-

1 hour ago, Elbek Keskinoglu said:

@Niel Pate sorry for my misexplaining. I tried to mean that I am putting the experimental setup at the Front Panel inside of the Cluster. So when there are graphs and charts they will be transformed to the JSON format to be saved in a file.

I think you need to share some code showing what you are trying to do, as this still does not really make sense to me. When you say "experimental setup" I think you mean some kind of configuration controls on the FP of a VI, but I do not see how that relates to graphs and charts without also looking at the code.

-

5 hours ago, Elbek Keskinoglu said:

At first I wanted to do this through understanding the block diagram. But now, I am doing it by simply putting the block diagram to the input cluster and collect the graph and similar data in the json format.

Sorry, I do not follow you. The block diagram is the code; it is not represented by a cluster that you can wire into your JSON writing code.

-

@Elbek Keskinoglu are you trying to get a textual representation of a data structure or the block diagram itself?

LabVIEW does actually have a way to save a VI as some kind of XML file or something, but it's a bit hidden away and I don't actually know how to make this work. Others in the forum probably will though. See here if you are curious.

-

Thanks, this is gold! I did actually browse to that (via ftp.ni.com which now redirects) but I did not find the `support` directory.

-

Thank you anyway for your attempt @dadreamer, I do appreciate the effort.

-

OK, got it. I got in touch with NI support and they helped me very quickly!

-

Hi,

I am trying to help a colleague restore an old ATE and we are looking for the identical DAQmx driver that was present originally. This is DAQmx 8.0.1.

Does anyone know where I can download this? Unfortunately the DAQmx drivers on ni.com only go back to version 9.0

Thanks!

-

1 hour ago, TENET_JW said:

Hello guys,

Once upon a time, our company stocked up on NI controllers and systems. Due to business reasons, we are getting out of the NI integration game. Lots of goodies we're looking to sell, most of stock is brand new in box or barely been used. Check these out:

[FS] Several PXIe-8880 and PXIe-1082 units, w/ extra RAM

[FS] cRIO-9035, cRIO-9065, cRIO-9034, cRIO-9032

[FS] sbRIO-9606's brand new in box

NI 9697 mezzanine card for sbRIO, $250USD

[FS] NI-9239E (24-bit, 4ch, 50kS/s) $300USD per unit

(The NI-9239E is just a NI-9239 without the enclosure, it functions exactly same as a $2000 NI-9239, see this video from Linkedin)

Oh and if you're looking for NI parts and don't want to get ebay-scammed, here's the NI Trading Post forum. Maybe it should have been a board in LAVA? The group is on linkedin as well: https://www.linkedin.com/groups/14121153/

Cheers,

John

Maybe you can make a channel on the Discord? https://discord.gg/fP3mmBty

-

I seem to recall you do need to start with one of the controls that has 6 pictures. I played around with this years ago but cannot remember how I started. Will try and remember!

Did you try the System style controls? They have 6 pictures and are editable (I think).

Edit 2: Sorry I don't think I read your original post properly...

-

Sure here you go

-

As your application grows I would expect you will need to pass additional state information between the frames of your VI. You could make a "Core Data" type cluster and put whatever you want inside that. Then you just have one wire that gets passed on the shift register.
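For anyone who thinks better in text, here is a rough Python analogue of the idea. It is only a sketch: `CoreData`, its fields and the two state functions are made-up names for illustration, and the `while` loop stands in for the while loop with a shift register. The point is that every state receives and updates the same single bundle.

```python
from dataclasses import dataclass, field


@dataclass
class CoreData:
    """Hypothetical "Core Data" bundle: everything the states need to share."""
    sample_count: int = 0
    readings: list = field(default_factory=list)


def state_init(data: CoreData) -> str:
    # Reset the shared data, then move on to acquiring.
    data.sample_count = 0
    data.readings.clear()
    return "acquire"


def state_acquire(data: CoreData) -> str:
    # Pretend to take a reading; a real state would talk to hardware here.
    data.readings.append(float(data.sample_count))
    data.sample_count += 1
    return "acquire" if data.sample_count < 3 else "stop"


STATES = {"init": state_init, "acquire": state_acquire}


def run() -> CoreData:
    data = CoreData()   # the one "wire" carried from iteration to iteration
    state = "init"
    while state != "stop":
        state = STATES[state](data)
    return data
```

Adding a new piece of shared state then means adding one field to `CoreData` rather than another wire through every frame.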

-

-

-

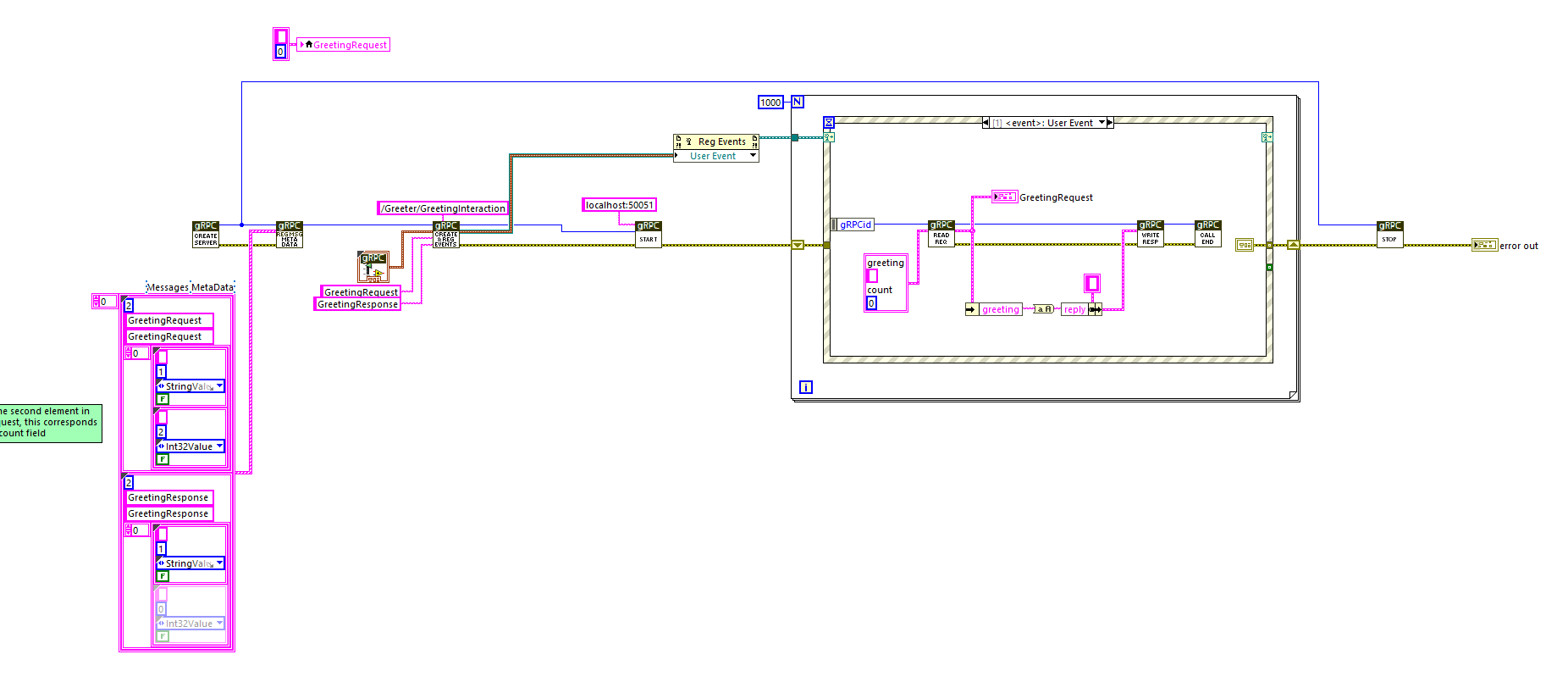

James, I found the NI automatically generated stuff quite hard to understand. I spent time stripping it down to the VIs with the black banners (under those are just the DLL calls) and actually it really is not complicated, it's just packed up in a way that is not at all usable for me.

-

That means you are not reading from the DAQ quickly enough. You can set the DAQ to continuous acquisition, and then read a constant number of samples from the buffer (say 1/10th of your sampling rate) every iteration of your loop. This will give you a nice stable loop period of about 100 ms. Of course this assumes your loop is not doing other stuff that takes more than 100 ms per iteration.
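The arithmetic behind this can be sketched in a few lines of Python. This is not DAQmx code; the function names are invented for illustration, and it assumes the read blocks until the requested number of samples is available.

```python
def samples_per_read(sample_rate_hz: float, reads_per_second: int = 10) -> int:
    """Fixed chunk size to request each loop iteration (1/10th of the rate by default)."""
    return max(1, round(sample_rate_hz / reads_per_second))


def loop_period_s(sample_rate_hz: float, n_samples: int) -> float:
    """Time the hardware needs to produce n_samples, i.e. the natural loop period."""
    return n_samples / sample_rate_hz


def backlog_growth_per_iteration(sample_rate_hz: float, n_samples: int,
                                 other_work_s: float) -> float:
    """Extra samples piling up in the buffer each iteration.

    If the rest of the loop takes longer than the time needed to acquire
    n_samples, the device produces more than is read and the buffer
    eventually overflows.
    """
    return max(0.0, sample_rate_hz * other_work_s - n_samples)
```

At 1 kS/s this gives 100 samples per read and a 0.1 s loop period. If the rest of the loop takes 0.25 s, the buffer gains 150 samples every iteration, which is the situation that produces the error.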

-

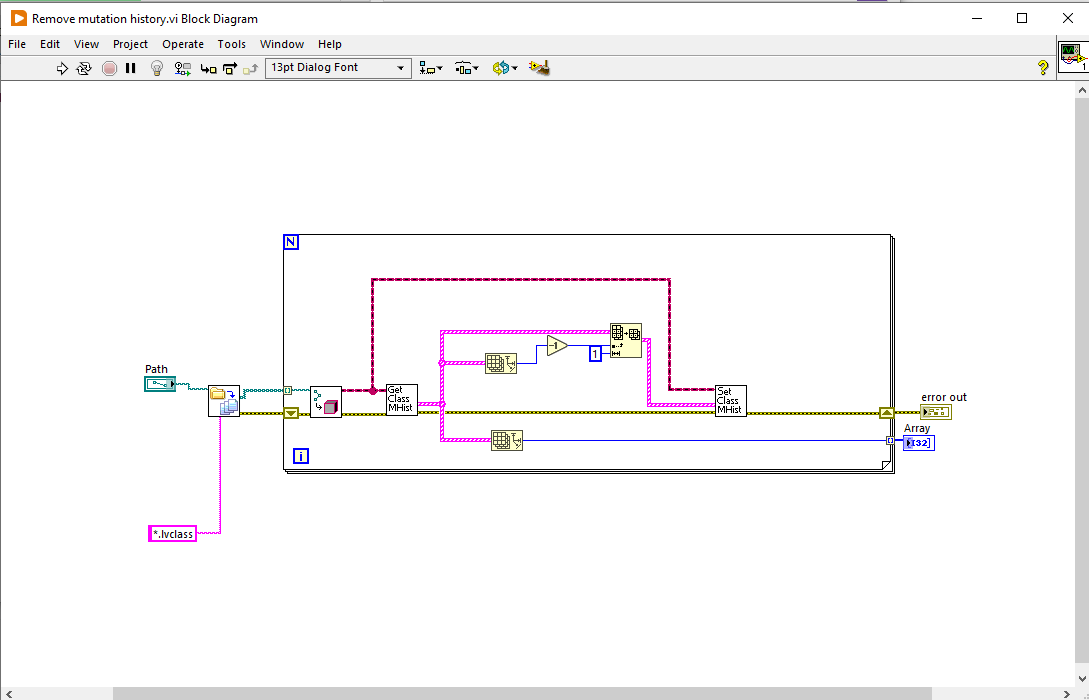

My understanding is that the class mutation history is there in case you try to deserialise from string. It is supposed to "auto-magically" work even if the string holds a previous version of the data.

This is a terrible feature that I wish I could permanently turn off. I have had lots of weird bugs/crashes especially when I rename class private data to the same name as some other field that was previously in it.

This feature also considerably bloats the .lvclass file size on disk.

For those not aware, you can remove the mutation history like this

LabVIEW 2023Q1 experience

in LabVIEW General

Posted

So according to the link it seems it does work as long as you have 2021 installed, right?