Leaderboard

Popular Content

Showing content with the highest reputation on 12/09/2010 in all areas

-

This thread branched from the latter part of this discussion. Visit that thread for the background. I'll start off by responding to Shaun's post here. (Although this started as a discussion between Shaun and myself, others are encouraged to join in.)
The OOP mantra is "traditional LV programmers create highly coupled applications..."? Huh... I didn't realize LV had become so visible within the OO movement.
I think you're extrapolating some meaning in my comments that I didn't intend. You said most LV users would use a typedef and be done with it, implying, as I read it, that it should be a good enough solution for the original poster. My comment above is a reflection on the primary goal of "most" LV programmers, not an assertion that one programming paradigm is universally better than another. "Most" LV programmers are concerned with solving the problem right in front of them as quickly as possible. Making long-term investments by taking the time to plan and build generalized reuse code libraries isn't a priority. Additionally, the pressures of business usually dictate quick-fix hacks rather than properly incorporating new features/bug fixes into the design. Finally, "most" LV programmers don't have a software design background, but are engineers or scientists who learned LV through experimentation.
In essence, I'm refuting the implication that since "most" LV programmers would use a typedef it is therefore a proper solution to his problem. He is considering issues and may have reuse goals "most" LV programmers don't think about. With uncommon requirements, the common solution is not necessarily a solution that works.
My statement can be shown to be true via deductive reasoning:
Premise 1: *Any* software application, regardless of the programmer's skill level or programming paradigm used, will become increasingly co-dependent and brittle over time when the quick fix is chosen over the "correct" fix.
Premise 2: "Most" traditional LV programmers, due to business pressure or lack of design knowledge, implement the quick fix over the "correct" fix most of the time.
Therefore, most traditional LV programmers create applications that limit reusability and become harder to maintain over time.
However, I suspect you're asking about scientific studies that show OOP is superior to structured programming. I read a few that support the claim and a few that refute the claim. Personally I think it's an area of research that doesn't lend itself to controlled scientific study. Software design is a highly complex process with far too many variables to conduct a reliable scientific study. As much as critical thinkers eschew personal experience as valid scientific evidence, it's the best we have to go on right now when addressing this question.
Just to be clear, I don't think an OO approach is always better than a structured approach. If you need a highly optimized process, the additional abstraction layers of an OO approach can cause too much overhead. If you have a very simple process or are prototyping something, the extra time to implement objects may not be worth it. When it comes to reusability and flexibility, I have found the OO approach to be clearly superior to the structured approach.
One final comment on this... my goal in using objects isn't to create an object oriented application. My goal is to create reusable components that can be used to quickly develop new applications, while preserving the ability to extend the component as new requirements arise without breaking prior code. I'm not so much an OOP advocate as a component-based development advocate. It's just that I find OOP to be better than structured programming to meet my goals.
So does a class. Classes also provide many other advantages over typedefs that have been pointed out in other threads. No time to dig them up now, but I will later if you need me to.
I'll bite. Explain.
I'm curious what you have to say about this, but no programming paradigm is suitable for all situations. Hammering on OOP because it's not perfect doesn't seem like a very productive discussion.
1 point

-

Make sure to use a non-blank string for the IP Address. A blank string indicates the local application instance, regardless of the port specified. I've made that mistake, too. Instead, use the string "localhost".
1 point

-

Like most LabVIEWers, I started out in the world using Traditional LabVIEW techniques and design patterns, e.g. as taught in NI Courses etc... Of course, I implemented these rather poorly, and had a limited understanding at the time (hey - I was learning after all!). After a while I discovered LVOOP, and above all, encapsulation saved my apps (I cannot overstate this enough). I then threw myself into the challenge of using LVOOP exclusively, without fail, on every project - for every implementation. This was great in terms of a short learning curve, but what I discovered was that I was creating very complex interactions for every program. (Whilst I quickly admit I am not full bottle on OOP design patterns) I found these implementations were very time consuming. I also saw colleagues put together projects Traditionally much faster than I could, and they were achieving similar results (although IMHO with LVOOP it is much easier to make simple changes and test), but I wanted to weigh up the time involved and answer the question... could I do it better?
Pre-8.2 (aside from RT where we could only start using Classes in 2009), people (some very smart ones at that - who have been around for ages in the LabVIEW community) have been solving problems without LVOOP, successfully. This led me to recently undergo a reassessment of my approach. My aim was to look at the Traditional techniques, now having a better understanding of them (and LabVIEW in general), and reintegrate them with what I was doing in LVOOP etc... - and I am having success (and more importantly fun!). Damn, I have even started to find I like globals.
Anyways, at the end of the day I find using what works and not trying to make something fit is the best and the most flexible approach. With the aim of becoming a better programmer, I hope I continue this iterative approach to my learning (and of course this means I want to keep learning about LVOOP and OOP as part of this too).
JG says enjoy the best of both worlds!
1 point

-

Since I was in on the original thread, I thought I'd weigh in here as well. First, let me say I'm following this thread because I know from all of their contributions to LAVA both ShaunR and Daklu will have something intelligent and interesting to say. Second, I feel like I'm positioned somewhere between you two on the LVOOP question.
I (and my team, since I make them) do all of our development in native LV OOP. I seldom use any class as by-ref as it does break the dataflow paradigm, although as we all know there are times when breaking dataflow is necessary or at least desirable. But I may not be an OOP purist. I use typedefs - I even find occasion to use them in private class data. My typical use is something like this: create a typedef and add it to the class as a public control; place the typedef in the private class data cluster; create a get/set for the typedef in the class.
This is typical of a class that I may write to enable a specific DAQmx functionality. The user may need to select a DAQ channel, sample rate, and assign a name but nothing else. So I create a typedef cluster that just exposes this. Now, the developer can drop the public typedef on the UI, wire the typedef to the set method (or an init method if you really want to minimize the number of VIs), and have a newly defined instance on the wire. Then wire that VI to a method that either does the acquisition or launches the acquisition in a new thread. What I like is that the instance is completely defined when I start the acquisition - I know this because I use dataflow and not by-ref objects - and I know exactly which instance I'm using (the one on the wire). So this leverages data encapsulation and dataflow, both of which make my code more robust, and only adds one or two VIs (the get/set and maybe the init) to the mix. So I don't think by-val LVOOP compromises dataflow, and it doesn't add (to me at least) excessive overhead.
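As a rough text-language analogy for the by-value pattern described above (Python rather than LabVIEW, since a block diagram can't be pasted as text), the sketch below uses an immutable dataclass for the public typedef cluster and a second immutable class whose set method returns a new instance, much like a new value on the wire. All names here (`ChannelConfig`, `Acquisition`, `set_config`, `acquire`) are hypothetical, and the acquisition itself is a placeholder rather than a real DAQmx call.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class ChannelConfig:
    """Stands in for the public typedef cluster (hypothetical fields)."""
    channel: str
    sample_rate_hz: float
    name: str


@dataclass(frozen=True)
class Acquisition:
    """Stands in for the class; `config` plays the role of private class data."""
    config: Optional[ChannelConfig] = None

    def set_config(self, config: ChannelConfig) -> "Acquisition":
        # By-value semantics: return a new object and leave the old one untouched.
        return replace(self, config=config)

    def get_config(self) -> Optional[ChannelConfig]:
        return self.config

    def acquire(self, n_samples: int) -> List[float]:
        if self.config is None:
            raise ValueError("set_config must run before acquire")
        # Placeholder for the real hardware read; this sketch just returns zeros.
        return [0.0] * n_samples
```

Because `set_config` returns a fresh object, the instance passed to `acquire` is exactly the one that was configured upstream, which is the property the post attributes to keeping the object on the wire.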
But, I clearly have not designed the above class as a reuse library since my get/set and init depend on a typedef. If I try to override any of these methods in a child, I'll find it difficult since the typedef can't be replaced so I'm stuck with whatever the parent uses. But that's OK - not everything can (or should) be designed for general reuse. At some point, one has to specialize the code to solve the problem at hand. A completely general re-use library is called a programming language.
But there are real candidates for general classes that should support inheritance and LVOOP gives us the ability to leverage that tool when needed. A recent example was a specialized signal generator class (decaying sines, triangles, etc). Even I could see that if I built a parent signal generator class and specialized through inheritance this would be a good design (even if it took more time to code initially). And it proved to be a good decision as soon as my customer came back and said "I forgot that I need to manipulate a square wave as well" - boom - new SquareWave class in just a few minutes that integrated seamlessly into my app.
I guess my point is that dataflow OOP is a whole new animal (and a powerful one) and one should try to figure out the best ways to use it. I don't claim to know what all of those are yet, but I am finding ways to make my code more robust (not necessarily more efficient, but I seldom find that the most important factor in what I do) and easier to maintain and modify. I do feel that just trying to shoehorn by-val OOP into design patterns created for by-ref languages isn't productive. It reminds me of the LV code I get from C programmers where the diagram is a stacked sequence with all of the controls and indicators in the first frame and then twenty frames where they access them using locals. They've used a dataflow language as an imperative language - not a good use of dataflow!
Mark
1 point
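The signal generator example in the post above could look something like the following in a text language. This is only a sketch in Python under assumed names: `SignalGenerator`, `DecayingSine`, `sample` and `waveform` are hypothetical, and only `SquareWave` is a name taken from the post. The point it illustrates is that a new waveform only has to override one method for the rest of the application to use it unchanged.

```python
import math
from abc import ABC, abstractmethod
from typing import List


class SignalGenerator(ABC):
    """Hypothetical parent class: shared settings plus one overridable method."""

    def __init__(self, frequency_hz: float, amplitude: float = 1.0) -> None:
        self.frequency_hz = frequency_hz
        self.amplitude = amplitude

    @abstractmethod
    def sample(self, t: float) -> float:
        """Return the signal value at time t (seconds)."""

    def waveform(self, sample_rate_hz: float, n_samples: int) -> List[float]:
        # Shared code that works for every child without modification.
        return [self.sample(i / sample_rate_hz) for i in range(n_samples)]


class DecayingSine(SignalGenerator):
    def __init__(self, frequency_hz: float, amplitude: float = 1.0,
                 decay_per_s: float = 1.0) -> None:
        super().__init__(frequency_hz, amplitude)
        self.decay_per_s = decay_per_s

    def sample(self, t: float) -> float:
        envelope = math.exp(-self.decay_per_s * t)
        return self.amplitude * envelope * math.sin(2 * math.pi * self.frequency_hz * t)


class SquareWave(SignalGenerator):
    """The late-arriving requirement: only sample() needs to be written."""

    def sample(self, t: float) -> float:
        phase = (t * self.frequency_hz) % 1.0
        return self.amplitude if phase < 0.5 else -self.amplitude
```

Any code that already calls `waveform` on a `SignalGenerator` accepts a `SquareWave` without changes, which is the "integrated seamlessly" behaviour the post describes.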

-

Only on LAVA
The "Mantra" I was referring to is the comment about re-use and inflexibility.
I disagree vehemently with what you are saying here (maybe because you've switched the subject to "Most LV users/programmers"?). I'm sure it is not your intention (it's certainly out of character), but it comes across as though most LV programmers, and LabVIEW programmers ALONE, are somehow inferior and lack the ability to design, plan and execute programming tasks. I thought that being on LAVA you would realise this is simply not true. There are good and bad programmers (yes, even those with formal training) and not only in LabVIEW. Whether a programmer (in any language) lacks forethought and analysis/problem solving skills is more a function of persona and experience than the programming language they employ. It comes across that you view LabVIEW as an environment that "real" programmers wouldn't use. It's verging on "elitist".
Most traditional LV programmers... OK. Let's qualify this. I consider "traditional" programmers as those who do not employ LVOOP technologies. I also use the term "Classical" on occasion too. Traditional LV programmers would, for example, use an "action engine" (will he bite? I know it's one of your favourites) in preference to a class to achieve similar perceived behaviour.
But. On to my type-def proposal. It's not a "proper" solution? How so? How does the mere fact of using a type-def preclude it being one, or indeed being re-usable? Using a typedef with queues is a well established technique. For example, it's used in the "Asynchronous Message Communication Reference Library".
You are right in one aspect. The OP on the other thread is considering issues and he may have goals that perhaps not even you can predict. But that does not mean it should not be considered. Without the use of OOP, what other methods would you proffer (we are talking about "Most Traditional LabVIEW Programmers" after all)?
For deductive reasoning, the argument must be valid, sound and impossible for the result to be false when the premises are true (which in my mind makes the exercise pointless anyway). The conclusion can be false (it might be possible) since it can be neither proved nor disproved, and premise 2 is not sound since it is an assumption and cannot be proven to be true. Deductive arguments also don't work well for generalisations because "generally" a premise may be true, but not exclusively.
Premise 1: Program complexity adds higher risk and cost to any project.
Premise 2: Most OOP programs are more complex than imperative functional equivalents, favouring future proofing over complexity.
Therefore, most OOP programs are higher risk and more costly than their imperative functional equivalents for any project.
...that works. However,
Premise 1: Program complexity adds higher risk and cost to any project.
Premise 2: All OOP programs are more complex than imperative functional equivalents, favouring future proofing over complexity.
Therefore, all OOP programs are higher risk and more costly than their imperative functional equivalents for any project.
...that doesn't, since it is unknown whether the absolute premise 2 is sound or not, and the conclusion cannot be proven, although we suspect it is false.
That's a no then.
The fact is there is no evidence that code re-use is any more facilitated by OOP than any other programming (I'd love to see some for any language). Yet, it is used as one of (if not the) primary arguments for its superiority over others. There are those who believe that the nature of programs and the components that constitute them tends towards disposable code (diametrically opposite to re-use) and that, in terms of cross project, re-use is a welcome side effect rather than a plannable goal. I'm on the fence on that one (although moving closer to "agree"). My experience is that re-usable code is only obtainable in meaningful measures in a single project or variants on that project.
I have a very powerful "toolkit" which transcends projects and for each company I obtain a high level of re-use due to similarity between products. But from company to company or client to client there is little that can be salvaged without serious modifications (apart from the toolkit).
I don't view it in this way at all. OOP is a tool. OK, a very unwieldy and obese tool (in LabVIEW). But it's there nonetheless. As such it's more about choosing the right tool for the job. I tend to only use OOP in LV for encapsulation (if at all). It's a very nice way of doing things like lists or collections (add, remove, sort etc as opposed to an action engine), but I find the cons far outweigh the pros for projects that I choose to use LabVIEW on. Projects that lend themselves to OOP at the architecture level are better suited to non data-centric tools IMHO.
Heathen
You mean not everything "is a" or "has a"? I see this approach most often (and I think many people think that just because it has a class in it... it is object oriented - I don't think you fit into this category though). Pure OOP is very hard (I think) and gets quite incestuous, requiring detailed knowledge of complex interactions across the entire application.
I can't disagree that encapsulation is one of the stronger arguments in favour of OOP generally. But in terms of LabVIEW... that's about it, and even then marginally.
Indeed. The huge numbers of VIs. The extra tools required to realise it. The bugs in the OOP core. The requirement for initialisation before using it... all big advantages (don't rise to that one, I'm pulling your leg).
OK. Now the discussion starts.
There are a couple of facets to my statement. I'll introduce them as the blood bath discussion continues. LabVIEW has some very, very attractive features. One of those is that, because it is a data-flow paradigm, state information is implicit. A function cannot execute unless all of its inputs are satisfied.
Also, it doesn't matter in what order the data arrives at the function's inputs. Execution automatically proceeds once they have. In non-data-flow (imperative) languages, state has to be managed. Functions have to be called in a certain order and under certain conditions to ensure that all inputs are correct when a particular function is executed. OOP was derived from such imperative languages and is designed to manage state. In fact, much of OOP implementation involves the responsibility of the class to be able to manage its internal state (detail hiding) and managing the state of the class itself (instantiate, initialise, destroy).
In this respect an object instance is synonymous with a dynamically launched VI where an "instance" of the VI is launched. A dynamically launched VI breaks the data-flow since now its inputs and outputs are independent from the main program data-flow and (assuming we want to get the data from the front panel) we are again back to managing when data arrives at each input and when we read the result (although it's not a "classic" use of a dynamically launched VI).
A class is the same. If you query a "get" method, do you specifically know that all the data inputs have been satisfied before calling the method? Do you even know if it has been instantiated or initialised? The onus is on the programmer to ensure that things are called in the correct order to make sure the class is first instantiated and initialised and additionally that all the required "set" parameters have been called before executing the "get" method. In "traditional LabVIEW" the placing of the VI on the diagram instantiates and initialises a VI. And the result cannot be read until all inputs have been satisfied. OOP forces emphasis away from the data driving the program, back to the implementation mechanics.
So what am I saying here?
In a nutshell, I'm suggesting that in LVOOP, the implicit data-flow nature has been broken (it had to be to implement it) and requires re-implementation by the programmer. OK, you may argue at the macro level it is still data flow because it uses wires and LV primitives. But at the class level it isn't. It is a change in paradigm away from data-flow and although there may be benefits to doing this, a lot of the features that "traditional" LV programmers take for granted can be turned into "issues" for "non-traditional" programmers.
A good example of this is the singleton pattern (or some might say anti-pattern). Classic LabVIEW has no issue with this. A VI laid anywhere, in any diagram, any number of times will only access that one VI with its one data store and each instance must wait for the others to have finished. No need for instance counting, mutexes or locking etc. It's in-built. But in an OOP world, we have to write code to do that.
1 point
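To make the singleton point above concrete for readers from text languages, here is a minimal sketch in Python of the code one typically has to write by hand to get "one data store, callers take turns" behaviour. Everything in it is hypothetical (`SharedCounter`, `increment`); it is an analogy for what the post says a single VI gives you for free, not a description of how LabVIEW implements it.

```python
import threading


class SharedCounter:
    """Hypothetical singleton: one shared data store, access serialized by a lock."""

    _instance = None
    _instance_lock = threading.Lock()

    def __new__(cls):
        # Guard creation so two threads cannot each build their own instance.
        with cls._instance_lock:
            if cls._instance is None:
                instance = super().__new__(cls)
                instance._lock = threading.Lock()
                instance._count = 0
                cls._instance = instance
        return cls._instance

    def increment(self) -> int:
        # Each caller waits for the previous one to finish, by explicit locking.
        with self._lock:
            self._count += 1
            return self._count
```

Both locks exist only to reproduce what the post describes as built in: one guards creation of the single instance, the other makes concurrent callers wait their turn.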

-

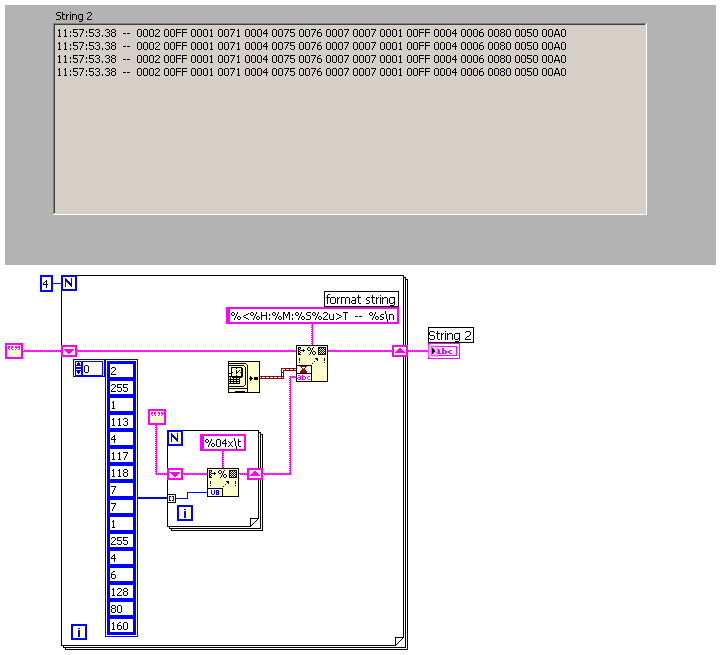

QUOTE (Gavin Burnell @ Apr 21 2008, 02:57 PM)
Hi Gavin,
By default STDIN and STDOUT have no meaning for a GUI-based application (process, to be exact...) like LabVIEW. But you can create a console for a process that does not have one. A use case would be to output debug information.
There is no native LabVIEW way to do this (as far as I know). The System Exec.vi just executes a process on system level that could have a console.
I have been using the following VIs that call the Win32 API to create a console window for the LabVIEW process and write to it. I primarily use it for debug output, so I did not look into reading from the console.
Download File:post-1819-1217341336.vi
Download File:post-1819-1217341345.vi
Tested in LabVIEW 8.5.1 on Windows Vista SP1 32 bit
1 point
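The attached VIs are not reproduced here, and the post does not say which Win32 functions they call. As a guess at the general approach, the sketch below uses Python's `ctypes` to call `AllocConsole`, `GetStdHandle` and `WriteConsoleW` from kernel32, which is one common way to give a GUI process a console and write debug text to it. The function name `write_debug_line` is hypothetical, and the code deliberately does nothing on non-Windows systems.

```python
import ctypes
import sys

STD_OUTPUT_HANDLE = 0xFFFFFFF5  # (DWORD)-11, the Win32 identifier for standard output


def write_debug_line(text: str) -> bool:
    """Create a console for this process if needed and write one line to it.

    Returns False on non-Windows systems or if the write fails.
    """
    if sys.platform != "win32":
        return False

    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    kernel32.GetStdHandle.argtypes = [ctypes.c_ulong]
    kernel32.GetStdHandle.restype = ctypes.c_void_p
    kernel32.WriteConsoleW.argtypes = [
        ctypes.c_void_p, ctypes.c_wchar_p, ctypes.c_ulong,
        ctypes.POINTER(ctypes.c_ulong), ctypes.c_void_p,
    ]
    kernel32.WriteConsoleW.restype = ctypes.c_int

    # AllocConsole returns 0 if the process already has a console; that is fine here.
    kernel32.AllocConsole()

    handle = kernel32.GetStdHandle(STD_OUTPUT_HANDLE)
    if handle is None or handle == ctypes.c_void_p(-1).value:
        return False

    line = text + "\r\n"
    n_units = len(line.encode("utf-16-le")) // 2  # length in UTF-16 code units
    written = ctypes.c_ulong(0)
    ok = kernel32.WriteConsoleW(handle, line, n_units, ctypes.byref(written), None)
    return bool(ok)
```

From LabVIEW the equivalent calls would presumably go through Call Library Function nodes; the sketch covers writing only, matching the post's note that reading from the console was not explored.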