Everything posted by mje

-

If I'm debugging a VI clone, I can easily find the original by using View: Browse Relationships: Reentrant Original. But is there a way to do the opposite? Given an original VI, I'd like to see the list of clones currently in memory.

-

Can't offer direct help right now because I'm on my mobile, but the STA/MTA threading mode issue crops up from time to time. The last discussion of it I remember was in the context of showing the system folder browser dialog. Maybe searching for that will turn up the answer?

-

True, events don't occur in the UI thread, but if you want to display information from them, processing them still involves a context switch to the UI thread. With a synchronous method called from a context that is already running in the UI thread, could that context switch be avoided?

-

Like Shaun said, if you have some kind of application, once you have a callback you can slap a counter on it, then use that knowledge from then on. I'm sure you guys have thought of all of this already, but I have to ask. So events won't work. How about some sort of synchronous method, like an object that calls a virtual method, or a strict VI refnum? Granted, both of those would add some overhead to each call, but I can't imagine VI hierarchies getting too big, maybe 10,000 items? What's the overhead of a dynamic dispatch call or a call by reference in a loop with that many iterations? A second? My largest app is about 2000 VIs and takes just under a minute to load. I'd gladly add a second to that for an easy-to-use method that lets me track progress.
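Here is a minimal sketch of the synchronous "object that calls a virtual method" idea, written in Python rather than LabVIEW. A hypothetical loader calls `item_loaded()` on an observer once per item, and a counting subclass tracks progress. All names are illustrative. Python timings say nothing about the cost of a LabVIEW dynamic dispatch or call-by-reference node. The sketch only shows the shape of the pattern and one way to time 10,000 notifications.

```python
import time


class LoadObserver:
    """Base class: a (hypothetical) loader calls item_loaded() once per VI."""

    def item_loaded(self, name: str) -> None:
        pass  # default implementation ignores the notification


class CountingObserver(LoadObserver):
    """Override that counts items, so a UI could show count / expected."""

    def __init__(self) -> None:
        self.count = 0

    def item_loaded(self, name: str) -> None:
        self.count += 1


def load_hierarchy(names, observer: LoadObserver) -> None:
    # The actual load work is omitted; only the per-item synchronous
    # notification (a dynamically dispatched method call) is modeled.
    for name in names:
        observer.item_loaded(name)


if __name__ == "__main__":
    names = [f"vi_{i}.vi" for i in range(10_000)]
    observer = CountingObserver()
    start = time.perf_counter()
    load_hierarchy(names, observer)
    elapsed = time.perf_counter() - start
    print(f"{observer.count} callbacks in {elapsed * 1000:.2f} ms")
```

The loader only knows the base class, so a progress bar, a logger, or a no-op can be swapped in without touching the load code.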

-

This has become commonplace in my larger application, which also uses classes extensively. I'm not sure if it's the actual use of classes, the proliferation of VIs as you start to accumulate a bunch of read/write accessors, or perhaps the dependency trees that get mapped out between the various classes. Regardless, any simple edit of a VI is followed by a second or so of "busy" cursor during which the IDE locks up for recompiling, etc. I've been dealing with this so long I hardly notice anymore; editing VIs just has a built-in cadence where I make a change, wait, make a change, wait... Build times for this application used to be about 45 minutes, but since 2011 SP1 came out they're down to 15 minutes or so. I have not noticed a large change in the "wait" duration with SP1, though.

-

That makes sense, as I recall the history of the control pretty much followed what you assumed. They should be. The typedef in question is actually the ctl that defines the private members of a class, so I imagine all of the member methods would have been in memory and saved along with the class by the Save All operation, and I had not been playing around with the files outside of the LabVIEW context. This might be another instance of the history information carried around by LabVIEW classes causing unintended problems. For what it's worth, the offending file is attached (without the containing class library). Not sure if you can pull any information out of it. When it's opened on my desktop computer, the Unbundle By Name node picks the ER field, but on every other system I have tested it on, the node picks the DN field. If relevant, I can get you (or anyone at NI) the whole class library if you contact me via PM; I've taken note of the SCC revision number where I captured the problem.

Edit: Scratch that, reverting to the revision I had noted no longer shows the problem. The VI seems to always select the DN field now... argh.

Attachment: Validate Error.vi

-

Indeed, that's what I find scary. Had the type not changed it would have been one hell of a bug to hunt down.

-

I like your idea, but unfortunately I saw no evidence of this. Looking at the paths of files loaded in the Project Explorer showed no hint of things creeping in from unexpected locations. I also expect that, since in this case we had an lvclass file, loading an out-of-date copy would have caused other issues. Clearing the object cache or forcing a recompile had not shown any sign of working either. I also verified the issue on a pair of development virtual machines I run; both had the wrong field selected when opening their copy of the same source code revision. It seems the only instance where the correct field was selected was on my main development machine. After saving the VI on another machine, committing to SCC, and updating my main development copy, the problem seems to have vanished. Yay?

-

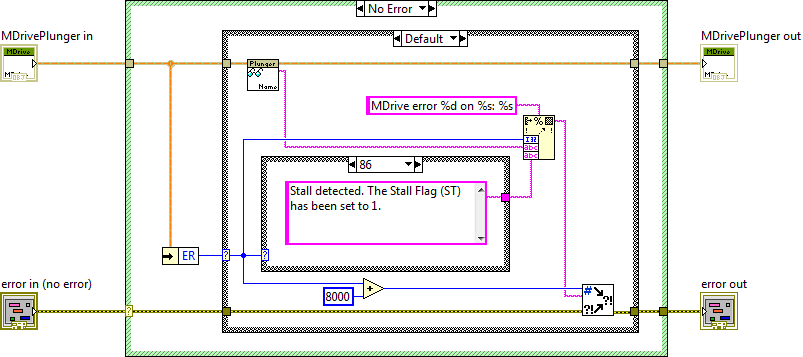

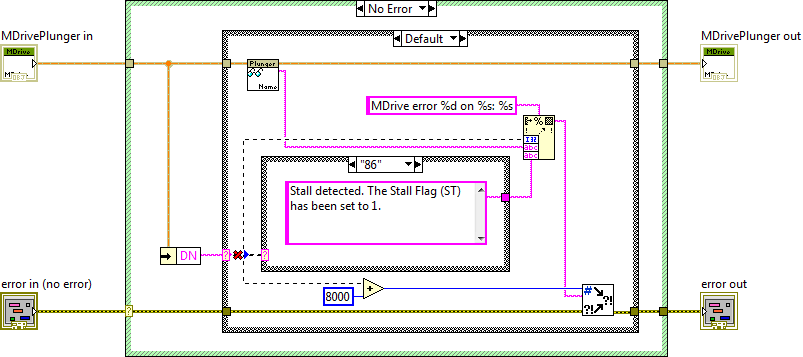

Anyone seen behavior like this before? I've seen things like this happen when mucking about with field names in clusters, but never just by changing which computer I open a VI on. Here's the code for a VI when it's opened on my main development machine. Note that the Unbundle By Name primitive is selecting the ER field. Now here's the same code opened on my laptop. Yeah, somehow the Unbundle By Name primitive is now selecting the DN field, and the corresponding case structure has also mutated its type. Fixing this is dead simple, I just re-select the ER field, but the more important question is: why is my source code changing as a function of which computer opens the VI? This scares me...

-

We only have four devices connected to the bus that are involved in continuous data exchange, plus an optional fifth device that is mostly silent. Over the last few days I've deployed the Win32 solution into my main application and it works beautifully. For what it's worth, I'll attach the library I made as a wrapper around the Win32 API. Consider it public domain; anyone can do what they will with it.

LabVIEW.Win32Serial LV11SP1.zip

Caveats for those downloading it:

- It does exactly what I need it to do; it might not do what you need it to do. That is, it's by no means complete.
- It operates synchronously.
- Read operations work a "line" at a time, that is, by waiting for a line termination sequence. If you want to use other read modes, you'll need to modify the library.
- Behavior is undefined if you do not set a timeout.
- Timeouts don't quite work the same as they do in LabVIEW. As-is, the Set Timeout method configures the port so that Read Line times out only if no data is received for the specified duration. That means Read Line can block indefinitely if data trickles in slowly, as long as each gap between bytes is shorter than the specified timeout.

Have fun with it if you'd like. -m
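The timeout caveat is easy to misread, so here is a small Python simulation of it. It is not the attached library. Each byte arrives at a simulated time in milliseconds. One reader applies an inter-byte (interval) timeout like the one described, and a second applies a total deadline for comparison. Both function names are hypothetical.

```python
from typing import Iterable, Tuple

Event = Tuple[float, bytes]  # (simulated arrival time in ms, one byte)


def read_line_interval(events: Iterable[Event], term: bytes, timeout_ms: float) -> bytes:
    """Times out only if the gap since the previous byte exceeds timeout_ms."""
    buf = b""
    last = 0.0
    for t, byte in events:
        if t - last > timeout_ms:
            raise TimeoutError(f"no data for {t - last:.0f} ms")
        buf += byte
        last = t
        if buf.endswith(term):
            return buf
    raise TimeoutError("stream ended without terminator")


def read_line_deadline(events: Iterable[Event], term: bytes, timeout_ms: float) -> bytes:
    """Times out if the whole line is not complete within timeout_ms."""
    buf = b""
    for t, byte in events:
        if t > timeout_ms:
            raise TimeoutError(f"line not complete after {timeout_ms:.0f} ms")
        buf += byte
        if buf.endswith(term):
            return buf
    raise TimeoutError("stream ended without terminator")


if __name__ == "__main__":
    # One byte every 50 ms: every gap is shorter than a 100 ms timeout.
    trickle = [(50.0 * (i + 1), bytes([b])) for i, b in enumerate(b"HELLO\n")]
    print(read_line_interval(trickle, b"\n", 100.0))  # completes at t = 300 ms
    try:
        read_line_deadline(trickle, b"\n", 100.0)
    except TimeoutError as exc:
        print("deadline reader:", exc)
```

With the interval semantics, a 100 ms timeout does not stop a read that takes 300 ms in total. A slower or endless trickle would keep it waiting indefinitely.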

-

I think the thing that confuses people most about these two primitives is their run-time behavior. You stated the proper behavior in your blog comment, but the way you put it, the distinction is at best very subtle. Furthermore, the help distinguishes them by use case rather than by underlying behavior, and I think the latter would be more helpful. To More Specific Class tests against the compile-time wire type, whereas Preserve Run-Time Class tests against the actual run-time type of the object on the wire, which might not be the same as the wire type. One is essentially a static type cast where you're always testing against a known type, and the other is a dynamic cast that evaluates the type at run time. However, I hesitate to use the static/dynamic language because it carries some legacy baggage from C++.
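The distinction can be mimicked in Python, purely as an analogy. The helper functions below are hypothetical stand-ins, not LabVIEW APIs. The "To More Specific Class" analogue checks against a type fixed at edit time (the wire type). The "Preserve Run-Time Class" analogue checks against the run-time class of the target object, which may be more specific than its wire type.

```python
class Base:
    pass


class Child(Base):
    pass


class GrandChild(Child):
    pass


def to_more_specific(obj, target_wire_type):
    """Analogue of To More Specific Class: the test uses a type known at
    edit time, regardless of what value is on the target wire."""
    if not isinstance(obj, target_wire_type):
        raise TypeError(f"{type(obj).__name__} is not a {target_wire_type.__name__}")
    return obj


def preserve_run_time_class(obj, target_obj):
    """Analogue of Preserve Run-Time Class: the test uses the run-time
    class of target_obj, not the type of the wire it travels on."""
    target_type = type(target_obj)
    if not isinstance(obj, target_type):
        raise TypeError(f"{type(obj).__name__} is not a {target_type.__name__}")
    return obj


if __name__ == "__main__":
    target: Base = GrandChild()  # wire type Base, run-time class GrandChild
    obj = Child()
    to_more_specific(obj, Base)  # passes: a Child is a Base
    try:
        preserve_run_time_class(obj, target)
    except TypeError as exc:
        print("run-time check fails:", exc)
```

The same `obj` passes the check against the wire type but fails the check against the target's actual class. That is the subtle run-time difference described above.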

-

Agreed. This is a very important part of UI design, especially when you start to have multiple UI components that can independently operate on whatever the underlying data model is, and even more so if these operations are performed asynchronously. To me it's the lack of "intelligence" that's the problem. For this reason I usually try to send some sort of context along with an update message: not a global "something changed", but something more along the lines of "DataX has changed". Sometimes a context can't be supplied, in which case a UI is forced to do a full redraw, but in many cases narrowing down what has changed can really help. This does mean that each UI needs to maintain some state information so it can cross-reference the context with what it's displaying. Does that make sense, or am I being too abstract?
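To make the idea less abstract, here is a minimal Python sketch. The message, class, and field names are hypothetical. Each update message optionally names what changed, and each view keeps a record of what it displays. A view redraws only the matching part, ignores unrelated changes, and falls back to a full redraw when no context is supplied.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class UpdateMessage:
    # None means the sender could not say what changed.
    context: Optional[str] = None


@dataclass
class View:
    name: str
    displays: Set[str]  # state: which parts of the data model this view shows
    redraws: List[str] = field(default_factory=list)  # log of redraw work

    def on_update(self, msg: UpdateMessage) -> None:
        if msg.context is None:
            self.redraw_all()  # no context: assume anything may have changed
        elif msg.context in self.displays:
            self.redraw(msg.context)  # redraw only the affected part
        # otherwise the change doesn't concern this view

    def redraw(self, item: str) -> None:
        self.redraws.append(item)

    def redraw_all(self) -> None:
        self.redraws.extend(sorted(self.displays))


if __name__ == "__main__":
    plot = View("plot", {"DataX"})
    table = View("table", {"DataY", "DataZ"})
    for msg in (UpdateMessage("DataX"), UpdateMessage(None)):
        for view in (plot, table):
            view.on_update(msg)
    print(plot.redraws)   # ['DataX', 'DataX']
    print(table.redraws)  # ['DataY', 'DataZ']
```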

-

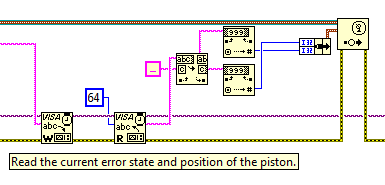

Update: I still have no idea why the VISA calls are so slow. As rolfk implied, I expect some slowdown from the various abstraction layers, but, to be diplomatic, something on the millisecond timescale is a little unexpected. I had a few problems with the software from the link vugie shared, so I rolled my own proof of concept with the Windows API. Using Win32 takes my "frame" duration down to 10 ms. A "frame" involves:

- Writing bytes to the port. This is a simple WriteFile call.
- Reading a "line" from the port. This involves looping on ReadFile and concatenating the returned bytes into a buffer until I find my end-of-line character. The port is configured so that read calls return immediately if there is any data waiting, or wait up to 1 ms for new data if nothing is there.

For this test, my write operation is a constant 13 bytes and my read operation a constant 5 bytes. Transit time for the raw data should be around 5 ms at 38400 baud. I have yet to roll this into my main application, so I can't comment on how well it will scale when I start communicating with the four devices on our bus and have variable-sized replies (up to perhaps 20 bytes), but I expect I'll be fine since I'm initially targeting a 10 Hz control loop. Thanks everyone for the advice, -m
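The read side of that frame can be sketched in Python. This is an assumption about how it might look, not the code from the post. Win32 documents one `COMMTIMEOUTS` combination that gives the "return immediately if data is waiting, otherwise wait briefly" behavior: `ReadIntervalTimeout` and `ReadTotalTimeoutMultiplier` both set to `MAXDWORD`, and `ReadTotalTimeoutConstant` set to the wait in milliseconds. On Windows, that structure would be passed to `SetCommTimeouts`. Here it is only built, so the sketch runs anywhere. `read_line` takes any ReadFile-like callable. The helper names and the simulated chunks are hypothetical.

```python
import ctypes
from ctypes import c_uint32

MAXDWORD = 0xFFFFFFFF


class COMMTIMEOUTS(ctypes.Structure):
    # Layout of the Win32 COMMTIMEOUTS structure: five DWORD fields.
    _fields_ = [
        ("ReadIntervalTimeout", c_uint32),
        ("ReadTotalTimeoutMultiplier", c_uint32),
        ("ReadTotalTimeoutConstant", c_uint32),
        ("WriteTotalTimeoutMultiplier", c_uint32),
        ("WriteTotalTimeoutConstant", c_uint32),
    ]


def return_immediately_or_wait(ms: int) -> COMMTIMEOUTS:
    """ReadFile returns at once with whatever is buffered; if nothing is
    buffered it returns as soon as a byte arrives, or times out after ms."""
    return COMMTIMEOUTS(MAXDWORD, MAXDWORD, ms, 0, 0)


def read_line(read_chunk, term: bytes, max_reads: int = 1000) -> bytes:
    """Loop on a ReadFile-like call, appending bytes until term appears.
    Bytes after the terminator are dropped in this simplified sketch."""
    buf = bytearray()
    for _ in range(max_reads):
        buf += read_chunk()  # may be b"" when the short read timeout expires
        idx = buf.find(term)
        if idx >= 0:
            return bytes(buf[: idx + len(term)])
    raise TimeoutError("terminator not seen")


if __name__ == "__main__":
    timeouts = return_immediately_or_wait(1)
    print(timeouts.ReadTotalTimeoutConstant)  # 1
    chunks = iter([b"", b"12", b"", b"34\r"])  # simulated ReadFile results
    print(read_line(lambda: next(chunks), b"\r"))  # b'1234\r'
```

The `max_reads` cap stands in for an overall timeout. Without one, a reply that never ends would spin this loop forever.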

-

I've never done serial programming in Win32, but I know you can access COM ports using the basic CreateFile/ReadFile/WriteFile/etc. functions. Though this assumes synchronous access, which might interfere with my ability to log data. I'm also looking into asynchronous (overlapped) operations. I found http://msdn.microsoft.com/en-us/library/ms810467.aspx, which seems to be a pretty good (though very old) article. No idea, though, if all that work will get me anything. You're probably right, though. I can live with 30 ms if it's not additive per device. Maybe I'll break apart my bus and just put each device on its own dedicated RS-232 line. I need to hunt down a USB-serial converter; there aren't enough ports to do that at the moment. Oh yeah, and 38400 bps is the limit for these devices when in party mode on an RS-422 bus. If I put each on a dedicated line I can go faster, but there's no real need for that if it's the VISA calls that account for the overhead.

-

Negative, Ghost Rider. No observable change. I'm starting to explore options about busting out the Win32 API...

-

Haha, cool. More numbers. With a single read and a single write, I get a 30 ms frame time. Subtract the 5 ms transit time over the serial bus and that leaves 12.5 ms per VISA operation. Since my test is fixed at 4 bytes received, I broke the read operation down into four single-byte reads, for a total of five VISA operations per frame. Wouldn't you know, that leads to a frame time of 70 ms. Knock 5 ms off that for the communication time, leaving 65 ms: 65 ms / 5 operations = 13 ms. It seems to be a constant 12-13 ms per VISA operation. Those settings don't seem to make a difference on my system. Moving the sliders or unchecking the FIFO option doesn't affect my observed latency. I think my control loop has just hit a brick wall...
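The arithmetic above can be reproduced in a few lines of Python. The function names are illustrative. The first helper is the per-operation estimate used in the post. The second fits `frame = fixed + per_op * ops` through the two measurements, (2 ops, 30 ms) and (5 ops, 70 ms), without assuming the 5 ms transit time.

```python
def per_op_overhead(frame_ms: float, transit_ms: float, ops: int) -> float:
    """Estimate from the post: (frame time - wire transit time) / VISA calls."""
    return (frame_ms - transit_ms) / ops


def two_point_fit(a, b):
    """Fit frame_ms = fixed + per_op * ops through two (ops, frame_ms) points."""
    (n1, t1), (n2, t2) = a, b
    per_op = (t2 - t1) / (n2 - n1)
    fixed = t1 - per_op * n1
    return per_op, fixed


if __name__ == "__main__":
    print(per_op_overhead(30, 5, 2))  # 12.5
    print(per_op_overhead(70, 5, 5))  # 13.0
    per_op, fixed = two_point_fit((2, 30), (5, 70))
    print(f"{per_op:.1f} ms per call, {fixed:.1f} ms fixed")  # 13.3 ms per call, 3.3 ms fixed
```

The fit gives about 13.3 ms per call plus about 3.3 ms of fixed time per frame, close to the ~5 ms transit estimate. That is consistent with a roughly constant per-call cost. With only two points it is a sanity check, not a measurement.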

-

Interesting idea. So I've set things up with a fixed outbound packet size of 13 bytes and a reply of 4 bytes. I can add a delay of up to 5 ms between send/receive primitives without noticing any change in the rate at which data frames arrive. This makes sense since it should take ~4-5 ms to move the 17 bytes. Delays longer than 5 ms add linearly to the observed time between data frames. To me this means my device is responding very quickly. Hmm, needs more exploring... Todd, I'm not aware of any such setting.

-

I think he was referring to the underlying data structure of these composite types. I have no idea how LabVIEW holds path data, but conceptually a path is really just an array of strings. For example, ["c:", "foo", "bar"] only becomes "c:\foo\bar" when it's acted on in a Windows environment. Each OS has its own grammar for how paths are represented and for what's legal in an individual path element. Ideally it would be nice to simply inherit the serializable behavior of the underlying components of these types for "free". Exactly. If we want to treat the array itself as a unit of serializable content, you'd be forced to create an interface for 1D, 2D, 3D, etc. for each supported data type, due to strict typing. I was thinking that arrays could perhaps be a non-issue if the "magic" part of the code first calls a method to record array properties, then delegates data serialization to the individual elements.
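The "path is just an array of strings" view can be sketched in Python. A path is serialized as a component count followed by each component, reusing a plain string serializer. Only rendering to a concrete OS needs a path-specific grammar. The byte format (big-endian 4-byte length prefixes) and all function names are assumptions for illustration. They are not how LabVIEW actually stores paths.

```python
import struct
from typing import List, Sequence


def serialize_string(s: str) -> bytes:
    # Scalar serializer: 4-byte big-endian length prefix, then UTF-8 bytes.
    data = s.encode("utf-8")
    return struct.pack(">I", len(data)) + data


def serialize_path(parts: Sequence[str]) -> bytes:
    # Path = component count + each component via the string serializer.
    return struct.pack(">I", len(parts)) + b"".join(serialize_string(p) for p in parts)


def deserialize_path(blob: bytes) -> List[str]:
    (count,) = struct.unpack_from(">I", blob, 0)
    offset, parts = 4, []
    for _ in range(count):
        (n,) = struct.unpack_from(">I", blob, offset)
        offset += 4
        parts.append(blob[offset:offset + n].decode("utf-8"))
        offset += n
    return parts


def render_path(parts: Sequence[str], separator: str) -> str:
    # Only rendering needs an OS-specific grammar (here, just the separator).
    return separator.join(parts)


if __name__ == "__main__":
    parts = ["c:", "foo", "bar"]
    blob = serialize_path(parts)
    print(deserialize_path(blob))               # ['c:', 'foo', 'bar']
    print(render_path(deserialize_path(blob), "\\"))  # c:\foo\bar
```

Real path grammars involve more than a separator, such as drive letters, UNC prefixes, and illegal characters. That complexity stays in the rendering step rather than in serialization.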

-

Are there any fundamental limitations to the speed of NI-VISA for serial communication? Basically I need to continuously handshake with a series of devices on an RS-422 bus. Each exchange looks something like this: I send a string down the bus and wait for a response. My command is a fixed size of 12 bytes, and the responses vary in size from 3 to maybe 20 bytes. I'm operating at 38400 baud and seeing a cycle time of about 30 ms for this exchange. This definitely won't do for the number of devices we have and how fast we want to run our control loop. I suspect that most of this latency comes from the device I'm talking to. Do any of you have ideas on how I could test the response time of the device to confirm this isn't latency associated with VISA? I imagine (hope) the overhead of the NI-VISA calls is low enough that I should be able to get close to the maximum data transmission rate on this timescale? If I have 38400 bps and 10 bits per byte (8 data bits + 1 start + 1 stop bit), I ought to be able to get close to 3840 bytes/s, I hope?
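The wire-time side of the question is easy to compute. In the Python sketch below, the helper name is illustrative. It assumes 10 bits per byte on the line, as stated above, and ignores inter-byte gaps and the device's turnaround time.

```python
def wire_time_ms(n_bytes: int, baud: int = 38400, bits_per_byte: int = 10) -> float:
    """Time to clock n_bytes onto the line (8 data + 1 start + 1 stop bit)."""
    return n_bytes * bits_per_byte / baud * 1000.0


if __name__ == "__main__":
    print(38400 / 10)  # 3840.0 bytes/s ceiling
    for reply in (3, 20):
        total = 12 + reply  # 12-byte command plus the reply
        print(f"{total} bytes: {wire_time_ms(total):.1f} ms")
    # 15 bytes: 3.9 ms
    # 32 bytes: 8.3 ms
```

Even the longest exchange needs only about 8 ms on the wire. Most of a 30 ms cycle therefore has to come from device turnaround or software overhead, which is exactly what the question is trying to separate.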

-

Indeed, things would be much easier for this application if scalars and composite types like timestamps and paths were objects, but of course that would open such a huge can of worms for practically every other situation that I shudder to think how NI could ever even consider moving away from that legacy. Out of curiosity, I'm wondering if there's a creative way of handling arrays with the scripting magic? Maybe upon coming across an array, some method is called to record info about the array rank (number of dimensions, size of each), then the generated code would loop over each element, using the appropriate scalar methods to handle serialization of each element? This could potentially allow support for arrays of arbitrary rank, but would likely be slow, as it would involve serializing each element individually. Just thinking aloud for now; I don't have time to think it through thoroughly yet.
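Here is a minimal Python sketch of that idea: first record the rank and the size of each dimension, then hand every element, in row-major order, to a scalar serializer. Several things are assumptions. Nested rectangular Python lists stand in for LabVIEW arrays. The byte layout (big-endian U32 rank and sizes) is hypothetical. `write_f64`/`read_f64` are placeholder scalar methods. As the post predicts, one call per element makes this slower than flattening the array in bulk.

```python
import struct
from typing import Any, Callable, List, Tuple


def shape_of(arr: Any) -> List[int]:
    """Dimension sizes of a rectangular nested list, outermost first."""
    dims = []
    while isinstance(arr, list):
        dims.append(len(arr))
        arr = arr[0] if arr else None
    return dims


def flatten(arr: Any) -> List[Any]:
    """Row-major list of the scalar elements."""
    if not isinstance(arr, list):
        return [arr]
    out: List[Any] = []
    for item in arr:
        out.extend(flatten(item))
    return out


def reshape(flat: List[Any], dims: List[int]) -> Any:
    if not dims:
        return flat[0]
    step = len(flat) // dims[0] if dims[0] else 0
    return [reshape(flat[i * step:(i + 1) * step], dims[1:]) for i in range(dims[0])]


def serialize_array(arr: Any, write_scalar: Callable[[Any], bytes]) -> bytes:
    dims = shape_of(arr)  # step 1: record rank and dimension sizes
    header = struct.pack(">I", len(dims)) + b"".join(struct.pack(">I", d) for d in dims)
    # step 2: delegate each element to the scalar serializer
    return header + b"".join(write_scalar(x) for x in flatten(arr))


def deserialize_array(blob: bytes, read_scalar: Callable[[bytes, int], Tuple[Any, int]]) -> Any:
    (rank,) = struct.unpack_from(">I", blob, 0)
    dims = list(struct.unpack_from(f">{rank}I", blob, 4))
    offset = 4 + 4 * rank
    count = 1
    for d in dims:
        count *= d
    flat = []
    for _ in range(count):
        value, offset = read_scalar(blob, offset)
        flat.append(value)
    return reshape(flat, dims)


def write_f64(x: float) -> bytes:
    return struct.pack(">d", x)


def read_f64(blob: bytes, offset: int) -> Tuple[float, int]:
    (x,) = struct.unpack_from(">d", blob, offset)
    return x, offset + 8


if __name__ == "__main__":
    arr = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    blob = serialize_array(arr, write_f64)
    print(shape_of(arr), deserialize_array(blob, read_f64) == arr)  # [2, 3] True
```

The same two functions handle any rank, including a bare scalar (rank 0), because only the header varies with the number of dimensions.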

-

Excellent. This is definitely needed. I'll review it over lunch!

-

It's an instrument control and data analysis program for a mass spectrometer. There are different windows for viewing data in the time domain as opposed to the mass domain, for example, and other windows for instrument control, sample queue management, etc. All the windows interact in various ways, and depending on context they can force each other to the top of the z-stack, but simply activating them never does. Amen to that!

-

Excellent responses, thank you. I never considered MDI, but the behavior is definitely consistent with that UI model. I was asking because this MDI behavior runs counter to another application that my users will often have running side by side. My application brings all its windows to the front as soon as you use any of them, whereas the other does not. The inconsistency is annoying. I personally have no preference between the two models; I just don't like how each application behaves differently.

-

Quick question about behavior of multi-window UIs in a LabVIEW application. If I'm going about my business in another application, then click one of the windows in my application, all of the windows belonging to my application come to the top of the z-stack. Is this behavior part of the OS or part of the LabVIEW RTE? Do we have control over this behavior? This is a Windows app by the way, in case it's OS related and not RTE.