Posts: 1,068 | Days Won: 48

Everything posted by mje

-

Network Messaging and the root loop deadlock

mje replied to John Lokanis's topic in Application Design & Architecture

Of course. You can't cache a connection to a machine you don't yet know exists...

Network Messaging and the root loop deadlock

mje replied to John Lokanis's topic in Application Design & Architecture

How so? I thought you could avoid the root loop when spawning clones, as long as you hold a refnum to build them from for the lifetime of the application. Of course, that implies at some point you need to open the initial target refnum, but if that's done at start-up, no root loop access should be required whenever you need to spin off a new process.

Both of the replies above are definitely true and recommended if you're seeing slow updates. Unfortunately, even observing these practices can leave updates that are still unacceptably slow. If you have decently sized data sets, consider maintaining the list virtually and displaying only the subset of data that's needed. If you search the forum for a thread named Virtual Multicolumn Listbox (or something similar), you ought to find a post I made a while ago demonstrating as much. Hah, Hooovahh beat me to it.
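The core of a virtual list is keeping the full data set in memory and writing only the rows that fit in the control. A minimal Python sketch of that windowing step follows; it is not the LabVIEW implementation from the thread mentioned above, and `visible_window` is a hypothetical name. In LabVIEW the returned slice would be written to the listbox, with a separate scrollbar driving `top_row`.

```python
def visible_window(data, top_row, visible_rows):
    """Return (clamped_top_row, rows_to_display) for a virtual list.

    `data` holds every row in memory; only the returned slice is meant to be
    written to the list control, so an update costs time proportional to the
    number of visible rows rather than the size of the whole data set.
    """
    if visible_rows <= 0:
        return 0, []
    # Clamp so the window never scrolls past either end of the data.
    max_top = max(0, len(data) - visible_rows)
    top = min(max(0, top_row), max_top)
    return top, data[top:top + visible_rows]
```

For example, with 1,000 rows and a 10-row control, asking for `top_row=995` clamps to 990 and returns the last ten rows.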

-

Dynamic UI Elements using HTML & CSS - Thumbnail Grid

mje replied to Ravi Beniwal's topic in User Interface

Re: the clicking sound from navigation: rather than rendering a file via a navigation event, in the past I've directly manipulated the document object model (DOM) from LabVIEW. I'm not sure whether any of the above code does that; just throwing it out there...

Neat. I don't think I've ever thought to use the PRTC primitive in that context. I've never considered using the IPE structure to change type, because the two ideas are incompatible in my mind. But there you have it.

Well, there are other situations in which it can throw an error, but the code you showed makes sense. Your Node List is a LabVIEW Object by inheritance, so the run-time type is preserved, even if the actual type is a descendant of the target. The code above is deceptive, though, because everything inherits from LabVIEW Object. If your DVR were of a class unrelated to Node List (other than the common LabVIEW Object ancestor), then the cast would definitely fail, which would be the expected behavior.

However, there you have it: your example. I guess the DVR can then mutate in type, so long as the mutation results in a more specific run-time object? This seems... odd to me. I too can't help but wonder whether that's intended behavior. I need some time to mull this one over.

Editing doesn't seem to work right now, so I'll clarify: the above statement wasn't meant to stand outside the context of my earlier post. The fact is that the example you showed does work, so I can understand the argument that since any object is a LabVIEW Object, there has been some kind of preservation, in that the resulting value in the DVR is still a LabVIEW Object. I'm just not really convinced this is supposed to be able to happen. I would rather argue that since the type is in fact mutating, there is no preservation. But maybe this is all by design?

-

The PRTC primitive is a confusing beast. To understand what it does, you need to understand the difference between the wire type in your diagram and the actual value type that's riding on that wire at run-time. You might have a wire type of "Pack", but the actual value riding on the wire at run-time could be a Pack object or an instance of any descendant class of Pack, including your PCPV4 class, among others.

The DVR is used to operate in place, which means you need to ensure that the value on that wire is the exact same type as what was originally there. You might be accessing the DVR as a "Pack" wire, but any child class of Pack could actually be riding on that wire at run-time; you just don't know ahead of time. That's why you need to check at run-time which class is on the wire. The PRTC does this, whereas the other casting operators do not. To More Specific Class simply compares a value to whatever wire type is connected to the middle terminal at compile time, and is clueless about other descendant classes that might actually be riding on the wire at run-time.

So having said that... whether it's "OK" depends on what exactly you're expecting from the construct you created. Do you expect to actually mutate the type of object on the wire at run-time? If so, then I expect your code isn't quite doing what you hope. The PRTC primitive is there to enforce that the value type definitely does not mutate at run-time. If the cast fails, then the run-time class from the middle terminal is used (NOT the wire type, but the actual value type riding on the wire). Hence the name of the primitive: it preserves the run-time class. Check the error out terminal to detect when you fail to preserve the type. If you're just looking to put a "new" value on the wire but for whatever reason know that your types will never mutate, then yes, this is pretty much a textbook example of why the PRTC primitive is needed, and it will work.

But the case structure in your code implies you're actually trying to do the former. I don't have time to go into more detail, but I've done things similar to what I believe you're after. Basically, you have a DVR of type "Pack" and want to be able to change the value in the DVR to an arbitrary child class of Pack, correct? In this case, destroy the DVR and create a new one. The two values are different types; it makes no sense to operate on them in place. -m
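The distinction between the wire type and the run-time type can be mimicked with a small Python analogy. This is not LabVIEW code: `to_more_specific_class` and `preserve_run_time_class` are hypothetical stand-ins for the two primitives, `LabVIEWObject` stands in for the root class, and returning a default instance of the target's run-time class on failure is an assumption based on the description above.

```python
class LabVIEWObject:
    """Stand-in for LabVIEW's root class; every class below inherits from it."""


class Pack(LabVIEWObject):
    pass


class PCPV4(Pack):
    pass


class OtherPack(Pack):
    pass


def to_more_specific_class(obj, wire_type):
    """Analogy: checks only against the static wire type fixed at edit time."""
    if isinstance(obj, wire_type):
        return obj, None
    return wire_type(), "cast error"


def preserve_run_time_class(obj, target):
    """Analogy: checks against the class of the value actually on the wire."""
    run_time_class = type(target)
    if isinstance(obj, run_time_class):
        return obj, None
    # Assumed failure behavior: a default instance of the run-time class.
    return run_time_class(), "cast error"
```

With a `PCPV4` value currently in a DVR typed as `Pack`, writing back an `OtherPack` passes the static check against `Pack` but fails `preserve_run_time_class`, which is exactly the silent type mutation the in-place operation must not allow.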

-

Negative: the control, pane, or VI Mouse Leave event does not fire. Argh! Never mind. It does fire. Stupid stupid stupid. (I found a typo in a string constant.)

-

I have an application that has several different data views, any number of which could be open at a time, each in its own window. Many views track mouse movements and display context information by showing small frameless panels and hiding them when they're not relevant. However, I'm having trouble capturing mouse events when the cursor "leaves" a window by moving on top of other windows that are higher in the z-order. Basically, none of the control, pane, or VI Mouse Leave events fire, since the mouse technically never left any of the respective boundaries, so I'm left with an orphaned tooltip window until the mouse happens to stray back to the window. This video quickly demonstrates as much: http://www.screencast.com/t/aRCEDlqWVG Now, I can think of a few ways to fix this, such as having each view broadcast an application-level event to the effect of "Hey, I have the cursor now, so the rest of you hide your tooltips," but that seems inelegant. Surely there's a way to manage this locally without throwing around global broadcasts? We want the tooltips to display even if the window does not have focus, so tracking focus is not really an answer.

-

Perfect, thank you!

-

This is a bit of a cross-post from the dark side, but I'm not seeing much traction there after a few hours... We have a LabVIEW application which we distribute in both 32- and 64-bit versions, and we ran into a show-stopper defect when trying to build the 64-bit installer for our application using the LabVIEW 2011 IDE. To make a long story short, while we work through this with NI engineers, I'm also tasked with exploring third-party installer tools. Basically, I need to create an installer that distributes our application along with three run-times (LabVIEW, .NET, MSVC) for Windows systems. So the question is: how would I determine whether a particular LabVIEW RTE has been installed? The registry subkeys under HKLM\SOFTWARE\National Instruments\LabVIEW Run-Time\ seem to be a good hint, but how do I distinguish between a 32-bit and a 64-bit RTE that might be on the same computer? Is this even the right way to do it? Anyone have experience with this? -m
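One way to probe both registry views is sketched below in Python, using the standard `winreg` module. It rests on assumptions the post does not confirm: that a 32-bit RTE on 64-bit Windows registers under the redirected 32-bit view (`Wow6432Node`) while a 64-bit RTE registers in the native view, and that each installed version appears as a child key of the subkey named above. `list_rte_versions` is a hypothetical helper; a third-party installer tool would use its own registry search with the equivalent 32/64-bit view option.

```python
import sys

# Subkey named in the post; assumed to hold one child key per installed RTE version.
RTE_SUBKEY = r"SOFTWARE\National Instruments\LabVIEW Run-Time"


def list_rte_versions(view_bits):
    """Enumerate child keys of RTE_SUBKEY in the 32- or 64-bit registry view."""
    if view_bits not in (32, 64):
        raise ValueError("view_bits must be 32 or 64")
    if sys.platform != "win32":
        return []  # the registry only exists on Windows
    import winreg

    # KEY_WOW64_32KEY reads the redirected (Wow6432Node) view that 32-bit
    # installers write to on 64-bit Windows; KEY_WOW64_64KEY reads the native view.
    view_flag = winreg.KEY_WOW64_64KEY if view_bits == 64 else winreg.KEY_WOW64_32KEY
    try:
        key = winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, RTE_SUBKEY, 0,
                             winreg.KEY_READ | view_flag)
    except OSError:
        return []  # subkey absent in this view
    versions = []
    with key:
        index = 0
        while True:
            try:
                versions.append(winreg.EnumKey(key, index))
            except OSError:
                break  # no more child keys
            index += 1
    return versions
```

Comparing `list_rte_versions(32)` with `list_rte_versions(64)` would then separate the two installs. On 32-bit Windows the WOW64 view flags are ignored, so both calls would read the same keys.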

-

Thanks, mabe. Indeed, if there's a way to avoid the leak, I have yet to find it. The "good" news is that the leak is not cumulative: you get only one leak for each VI that is operated on, regardless of how many times you call Set Busy.vi on that VI.

-

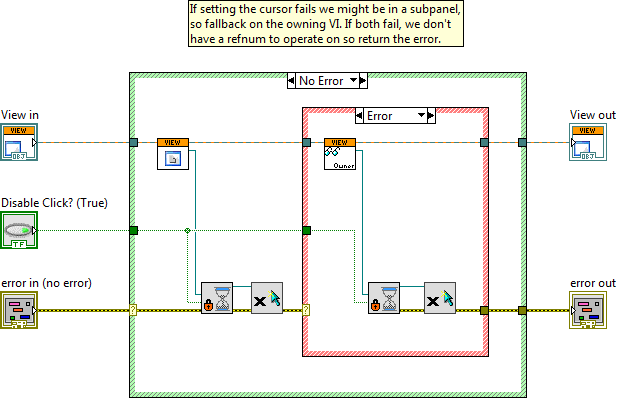

What's the correct way to set the cursor to a busy state? I'm getting this in the Desktop Execution Trace Toolkit when I run my application: My SetBusy.vi looks like this: Originally I didn't have the Destroy Cursor.vi calls in there; they are an (unsuccessful) attempt to clean up the reference leaks. Note the special handling: this method is part of an extensible class representing a view, and there's logic in there to handle whether or not the user interface is in a subpanel, since you can't set the cursor in a subpanel (don't get me started on that one). I'm thinking this might stem from the use of subpanels, because I don't have this issue with the parts of my application that are stand-alone windows without subpanels. Any ideas?

-

I should clarify "kludge", though. I don't mean to imply it's bad code. To reduce jitter, it makes sense that RT FIFOs would have to be typed to a fixed-size element. However, somewhere along the line there must have been a desire or requirement to support the native waveform type, and since waveforms are variable-sized, some form of compromise had to be made. I suspect waveforms are handled as a special case by the primitives, since LabVIEW does not expose constant-sized arrays or waveforms to us.

-

I believe the support for waveforms is a bit of a kludge. You need to specify the waveform size when you create the FIFO, so I suspect that under the hood the primitive is mutating the waveform into a constant-sized element, discarding the attributes variant, and not transmitting the native cluster. Pure conjecture, of course...
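The conjecture above, that the FIFO stores fixed-size elements and squeezes each waveform into one, can be illustrated with a hypothetical Python sketch. Nothing here reflects NI's actual RT FIFO implementation: `FixedElementFifo` and `pack_waveform` are invented names, storage is allocated once so every element occupies the same number of slots, and a waveform is flattened to `[t0, dt, *Y]` with its attributes dropped, which is one way the lost variant could be explained.

```python
from array import array


class FixedElementFifo:
    """Hypothetical FIFO whose elements all have the same, fixed length.

    Storage for `capacity` elements is allocated once in the constructor;
    writes copy into an existing slot instead of growing a container.
    """

    def __init__(self, capacity, element_size):
        if capacity <= 0 or element_size <= 0:
            raise ValueError("capacity and element_size must be positive")
        self.capacity = capacity
        self.element_size = element_size
        self._buf = array("d", [0.0]) * (capacity * element_size)
        self._head = 0   # slot index of the oldest element
        self._count = 0  # number of elements currently stored

    def write(self, samples):
        """Copy one element into the next free slot; return False if full."""
        if len(samples) != self.element_size:
            # A variable-size value (such as a variant) has no slot it can fit.
            raise ValueError("element size is fixed when the FIFO is created")
        if self._count == self.capacity:
            return False
        slot = (self._head + self._count) % self.capacity
        start = slot * self.element_size
        self._buf[start:start + self.element_size] = array("d", samples)
        self._count += 1
        return True

    def read(self):
        """Return the oldest element as a list, or None if the FIFO is empty."""
        if self._count == 0:
            return None
        start = self._head * self.element_size
        element = self._buf[start:start + self.element_size].tolist()
        self._head = (self._head + 1) % self.capacity
        self._count -= 1
        return element


def pack_waveform(t0, dt, y, samples_per_waveform):
    """Flatten a waveform to [t0, dt, *y]; any attributes are simply not carried."""
    if len(y) != samples_per_waveform:
        raise ValueError("waveform size must match the size given at creation")
    return [t0, dt, *y]
```

A FIFO created for 3-sample waveforms would use `element_size=5` (t0, dt, and three samples); anything of a different length is rejected rather than resized.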

-

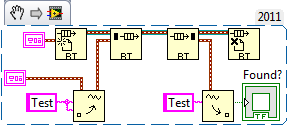

I don't have a citation, but here's a snippet to show the issue. I have very little experience with RT FIFOs, but I expect this arises from their needing constant-sized elements and thus not accepting variants.

-

Graphs and charts plot only your literal data; they have no proper interpolation routines, so you will have to apply your own. There are plenty of options in the Interpolation & Extrapolation palette; of particular interest might be Interpolate 1D, which includes spline among other methods. If you plan to use it on a chart, be careful which method you use: you won't be able to go back and change previous interpolations without clearing the chart, in which case a graph might prove more useful...
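Applying your own interpolation before plotting amounts to computing new y values at a denser set of x values. As a hedged illustration outside LabVIEW, here is plain linear interpolation in Python, swapped in for brevity; it does not reproduce Interpolate 1D's spline or other methods. `interp_linear` is a hypothetical name; it assumes `xs` is sorted ascending and clamps to the end values rather than extrapolating.

```python
from bisect import bisect_right


def interp_linear(xs, ys, x_new):
    """Linearly interpolate ys(xs) at each point in x_new (xs sorted ascending)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need matching xs/ys with at least two points")
    out = []
    for x in x_new:
        # Clamp outside the data range instead of extrapolating.
        if x <= xs[0]:
            out.append(ys[0])
            continue
        if x >= xs[-1]:
            out.append(ys[-1])
            continue
        i = bisect_right(xs, x)  # guarantees xs[i - 1] <= x < xs[i]
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        out.append(y0 + (y1 - y0) * (x - x0) / (x1 - x0))
    return out
```

The densified points are what you would then send to a graph. With a chart, each call could only cover newly arriving points, which is why earlier results can't be revisited without clearing it.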

-

Yeah, I hate regexes because they're so difficult to decipher, but I love them because they're so bloody powerful. Reminds me of Perl code.

-

Storing Initialisation Data in a Strict Typedef

mje replied to Zyl's topic in Application Design & Architecture

I have very little experience with the Flatten To XML and Unflatten From XML primitives, so I'm curious: what happens if the XML doesn't match the typedef? Say your typedef changes, or a user modifies the XML. Do the primitives try their best to match what they can, or is everything lost?

Yes, I'm aware. This is part of the frustration, because I truly don't know why these problems arise, and what little understanding of the matter I have is muddled with contradictions. But I don't wish to derail the thread. With regard to Jack's issue, I can't say I've observed it. However, doesn't it stem from how the password protection mechanism interacts with friendship? I believe this application of friendship is truly one of the defining case studies; I have used it in similar factory patterns. I can't attest to how Jack was trying to distribute his code, but it would seem to me that for him to do so, the password also needs to be available? How is he doing it? Is it wrong? Is there another way? I'm curious to know how to do what Jack is trying to do. Wanting to distribute password-protected VIs is something I understand, and knowing whether coupling VIs via friendship prohibits this belongs in the "good to know" category, if it is indeed true.

-

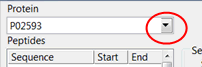

I have a tester for one of our applications who is seeing UI issues like this: You're looking at a system ring control, and the pull-down button is being clipped. This user has non-standard font or display settings, to the point where there are several UI issues I need to address due to poor scaling of the UI, but is there anything that can be done about the clipping shown above?

-

Interesting. We are still on LV2011 and will remain so for another month or so. I've spent quite a bit of time decoupling my application in an effort to make it more editable. Basically, I now have an application-level project which defines the entire scope of the application, bringing in each component such that the run-time entity can be defined, and then a bunch of individual component-level projects for working on each part by itself. If you look at the dependency tree for a component-level project, each has some common framework ancestors as dependencies, the core application-level object, and whatever else it ends up using as it goes about its business. With one exception, no component is aware of other components. In some situations this has helped, but not in others. Some classes remain uneditable in the larger application-level project, and the process for working on these classes is tedious at best: Open the component-level project to perform editing. Save. Close project. Open the application-level project. Run, debug, identify problem. Close project. Open the component-level project to do more editing. Save. Close. Etc... Fortunately, this process only really needs to be done when fundamental changes are being made to the classes. I never try to change the private data cluster of any class from the application-scoped project. Deleting or renaming VIs can (but doesn't always) throw LabVIEW into a tizzy. Generally, editing an existing VI is fine, though you need to settle into a cadence where you make an edit and then wait for the compiler to catch up. What I wouldn't give for an option to have LabVIEW wait to recompile until the UI idles for a few seconds. Yes, it takes me 30 seconds to wire up a VI because I have to wait. After. Every. Wire. Or. Constant. I. Drop. A few minutes of that usually ends with me closing the application project and going back to the component project, only to switch back a few minutes later.
In the end, though, I've actually learned a lot from all of this. I still don't know exactly why it happens, but I have learned a tremendous amount about large application development in LabVIEW. Over the years this project has grown so much, and I am a far better programmer now for having had to tackle these challenges. For all my criticism of LabVIEW, I still love it.
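The wished-for option in the post above, holding off recompiles until the UI has been idle for a few seconds, is the classic debounce pattern. The post implies LV2011 offers no such option, so the following is only a hypothetical Python sketch of the idea: `IdleDebouncer`, `on_edit`, and `poll` are invented names, and time is passed in explicitly so the logic is easy to test.

```python
class IdleDebouncer:
    """Run `action` once input has been idle for `idle_seconds`.

    Every edit restarts the idle timer; `poll` fires the action at most
    once per burst of edits.
    """

    def __init__(self, idle_seconds, action):
        self.idle_seconds = idle_seconds
        self.action = action
        self._last_edit = None  # None means nothing is pending

    def on_edit(self, now):
        """Record an edit (a wire, a dropped constant, ...) at time `now`."""
        self._last_edit = now

    def poll(self, now):
        """Call periodically; return True if the action ran on this call."""
        if self._last_edit is None:
            return False
        if now - self._last_edit < self.idle_seconds:
            return False
        self._last_edit = None
        self.action()
        return True
```

With a 3-second idle window, edits at t=0 and t=1 would produce a single recompile at t=4 rather than one after each edit.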