Posts: 4,999 · Days Won: 311


Posts posted by ShaunR

-

There's no VI that falls into that category. There's no DLL that isn't core to LV itself that falls into that category. In short, everything that currently ships with LV that falls in that category is installed by LV and thus requires no secondary notation from a user. A VI in vi.lib is not part of the runtime engine and so would require separate acknowledgement by any EXE that used that VI.

Well. Tell us which licence IS acceptable, then. Alternatively, NI could create a licence (LabVIEW Open Source Licence?) that doesn't require giving up IP; then everyone will be happy.

-

For goodness sake, Shaun, have you listened at all to my explanation for why the attribution requirement is a problem? It has *nothing* to do with NI and everything to do with the next user downstream. It's an impossible tracking problem.

“You seldom listen to me, and when you do you don't hear, and when you do hear you hear wrong, and even when you hear right you change it so fast that it's never the same.”

Marjorie Kellogg

As the NI run-time engine (for executables) or the development IDE must be distributed to use anything from NI, that aspect is a bit moot. If that weren't the case, and there weren't other examples requiring attribution already shipping, I would probably have agreed with you by now.

-

I was digging into the BSD license and exploring the reason for the attribution requirement.

The reason is credit where credit is due.

If someone wants to use some software without even giving the author credit for it (or even pretends they wrote it themselves), then they really don't deserve to use the software.

You are obviously trying very hard to find a way through, but the real question to ask is "what is it about the Apache licence that allows NI to use software under that licence?". The Apache licence has far more restrictions than BSD (including attribution).

However, I think it is more a case of will than law or technicalities that is the stumbling block. And without a corporate lobotomy, that's going to be hard to overcome.

-

First thing we could use are functions to convert between JSON-format strings and regular LabVIEW Text (escaped characters, encoding, add/remove the quotes). I’m weak on regex.

I've already implemented the removal of quotes. The only escaped chars that I know "must" be escaped are Unicode strings, and I'm not sure what to do about that with LabVIEW not supporting Unicode without using OS-dependent code.
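For what it's worth, RFC 8259 only makes escaping mandatory for the quotation mark, the backslash and the control characters U+0000 to U+001F; any other Unicode character may appear raw as long as the text is UTF-8 encoded, and writing it as `\uXXXX` is optional. Since LabVIEW is graphical, here is a rough Python sketch of the encode side (not the CR library's code; `json_escape` and `ascii_only` are made-up names) showing where the mandatory and the optional escapes fall:

```python
def json_escape(text: str, ascii_only: bool = False) -> str:
    """Return `text` as a quoted JSON string literal (hypothetical helper)."""
    # RFC 8259 only *requires* escaping the quote, the backslash and U+0000..U+001F.
    short = {'"': '\\"', '\\': '\\\\', '\b': '\\b', '\f': '\\f',
             '\n': '\\n', '\r': '\\r', '\t': '\\t'}
    out = []
    for ch in text:
        code = ord(ch)
        if ch in short:
            out.append(short[ch])
        elif code < 0x20:
            out.append(f"\\u{code:04x}")          # remaining control characters
        elif ascii_only and code > 0x7F:
            # Optional: write non-ASCII as \uXXXX so the output is pure ASCII.
            if code > 0xFFFF:                     # outside the BMP: UTF-16 surrogate pair
                code -= 0x10000
                out.append(f"\\u{0xD800 + (code >> 10):04x}")
                out.append(f"\\u{0xDC00 + (code & 0x3FF):04x}")
            else:
                out.append(f"\\u{code:04x}")
        else:
            out.append(ch)                        # everything else passes through as-is
    return '"' + "".join(out) + '"'
```

With `ascii_only=False` the only remaining Unicode concern is getting UTF-8 bytes in and out of LabVIEW strings, which is the OS-dependent part mentioned above.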

-

I’ve added a new version to the CR (sorry Ton, I will need to learn how to use GitHub).

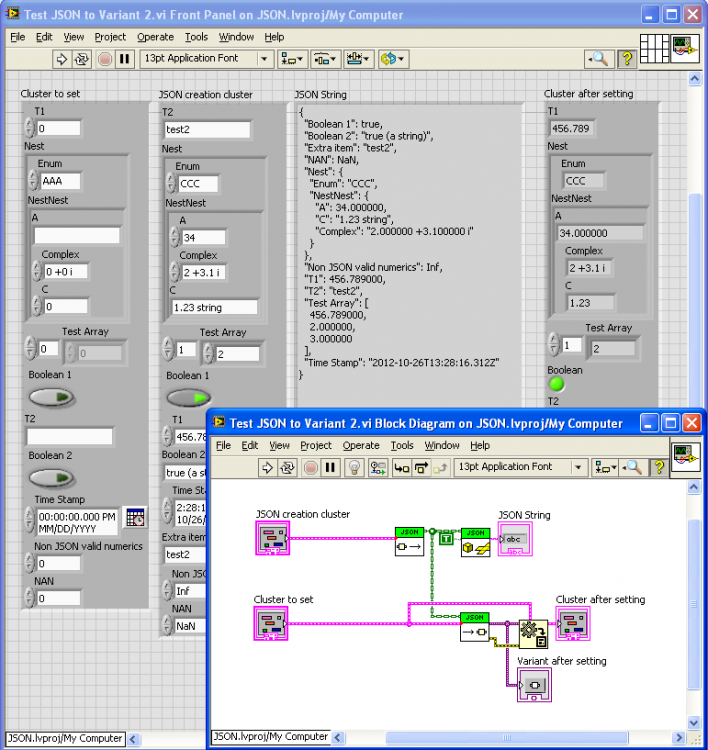

I added a JSON to Variant function. Note that I’m trying to introduce as much loose typing as possible (very natural when going through a string intermediate), so the example below shows conversion between clusters that have many mismatched types, as well as different orders of elements. It would be nice to think about what type conversions should be allowed without throwing an error.

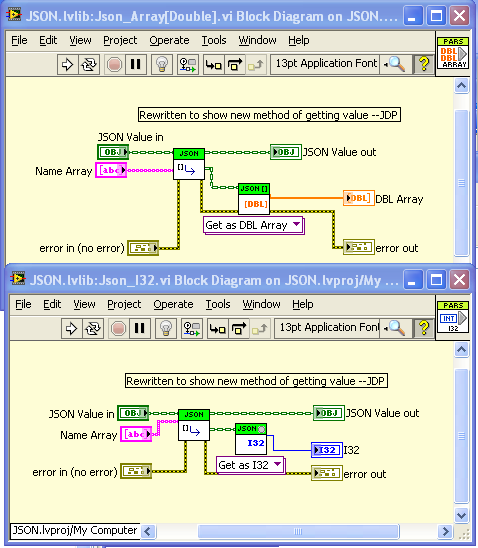
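The loose typing described above (match elements by name rather than position, then coerce each value to the element's type) can be sketched outside LabVIEW. This is a hedged Python approximation, not the CR VI: a dataclass stands in for a cluster, and `coerce`, `json_to_cluster` and `Reading` are hypothetical names chosen for illustration.

```python
import json
from dataclasses import dataclass, fields

def coerce(value, target_type):
    """Best-effort conversion of a JSON scalar to the target element type."""
    if target_type is bool:
        if isinstance(value, str):
            return value.strip().lower() in ("true", "1")
        return bool(value)
    if target_type is int:
        return round(float(value))        # "3", 3.0 and True become ints; ties go to even
    if target_type is float:
        return float(value)               # raises ValueError for non-numeric text
    if target_type is str:
        return value if isinstance(value, str) else json.dumps(value)
    return value                          # unknown types pass through unchanged

def json_to_cluster(text, cluster_type):
    """Fill a dataclass (standing in for a cluster) by element name, not position."""
    obj = json.loads(text)
    kwargs = {f.name: coerce(obj[f.name], f.type)
              for f in fields(cluster_type) if f.name in obj}
    return cluster_type(**kwargs)         # missing elements keep their defaults

@dataclass
class Reading:                            # hypothetical example cluster
    name: str = ""
    count: int = 0
    gain: float = 0.0
    enabled: bool = False

r = json_to_cluster('{"enabled": "true", "gain": "2.5", "count": 3.0, "name": 7}', Reading)
```

Here `r` comes out as `Reading(name='7', count=3, gain=2.5, enabled=True)` even though every element arrived with the "wrong" type and in a different order. Whether something like `"abc"` into a numeric should be an error, a default or a warning is the open question; this sketch simply lets `float()` raise.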

And I’ve started on a low-level set of Get/Set polymorphic VIs for managing the conversion from JSON scalars/arrays to LabVIEW types (very similar to Shaun’s set but without the access-by-name array). I’ve reformatted two of Shaun’s VIs to be based on the new lower-level ones. The idea is to restrict the conversion logic (which at some point will have to deal with escaped characters, special logic for null (==NaN), Inf, UTF-8 conversion, allowed Timestamp formats, etc.) to only one clearly defined place. At some point, I will redo the Variant stuff to work off of these functions rather than relying on the OpenG String Palette as they do now.
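To illustrate the "one clearly defined place" idea for numerics, here is a minimal Python sketch of a single scalar-to-double conversion and its inverse. The null-means-NaN rule is from the post; writing Inf as the quoted strings `"Inf"`/`"-Inf"` is an assumption (JSON has no literal for infinity), and the function names are hypothetical.

```python
import math

# Assumed text spellings for non-finite values; JSON itself has no Inf/NaN literal.
_SPECIAL = {"inf": math.inf, "+inf": math.inf, "-inf": -math.inf, "nan": math.nan}

def json_to_double(value):
    """The one place a decoded JSON scalar becomes a double (hypothetical)."""
    if value is None:                     # JSON null -> NaN
        return math.nan
    if isinstance(value, bool):           # check before int: bool is a subclass of int
        return 1.0 if value else 0.0
    if isinstance(value, (int, float)):
        return float(value)
    if isinstance(value, str):
        key = value.strip().lower()
        return _SPECIAL[key] if key in _SPECIAL else float(key)  # ValueError if not numeric
    raise TypeError(f"cannot convert {type(value).__name__} to double")

def double_to_json(x):
    """Inverse direction: NaN -> null, +/-Inf -> quoted text (an assumption)."""
    if math.isnan(x):
        return "null"
    if math.isinf(x):
        return '"Inf"' if x > 0 else '"-Inf"'
    return repr(x)
```

Every Get/Set variant would then call these two functions instead of carrying its own copy of the special cases.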

Do you want to list out the tasks that need to be done so that we can apportion them between us?

-

Are you dynamically loading the addons using a path?

If so, you should be aware that when the VI is compiled into the EXE, its path changes, so

c:\temp\myfile.vi

becomes

c:\temp\myexecutable.exe\myfile.vi
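If the add-ons live next to the EXE on disk, one workaround is to walk up the path until you are outside the `.exe` component and build the add-on paths from there. A sketch of that logic in Python (the name `strip_exe` is hypothetical; in LabVIEW you'd do the equivalent with the path functions), assuming Windows-style paths like the ones above:

```python
from pathlib import PureWindowsPath

def strip_exe(path: str) -> PureWindowsPath:
    r"""Return the on-disk folder for a VI path that may point inside an EXE.

    c:\temp\myexecutable.exe\myfile.vi -> c:\temp
    c:\temp\myfile.vi                  -> c:\temp
    """
    p = PureWindowsPath(path)
    for part in [p, *p.parents]:
        if part.suffix.lower() == ".exe":  # first component that is the executable
            return part.parent             # folder that actually contains the EXE
    return p.parent                        # running in the IDE: just the VI's folder
```

The same function works in both the IDE and the built application, so the add-on paths don't need a separate code path for each.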

-

No way!

Yup. I think originally they were using it to invert (instead of using the primitive) and during debugging/mods changed the booleans around so it was completely redundant.

-


Problem solved.

There is already a JSON library on NI.com, written by an NI employee, that AQ can use and that can be included in LabVIEW. So we can now broaden the discussion away from the JSON library here to the more general question of Lavag CR-to-NI compatibility without getting bogged down in a specific piece of software.

-

-

The EULA does not require it to be public domain. It requires that you own the copyright or that it is in the public domain, i.e. you are not breaching copyright by posting it.

Well. The latter would be true if we released the next version as "Public Domain" and the former would be true even if we posted it there under a BSD licence. So where's the problem?

-

You can be sued for anything you post to LAVA also if you didn't have the legal right to post it.

That's what the law requires... you're the copyright owner. You have to do something to allow NI to use it. There's nothing NI can do on its own to appropriate the rights -- and you wouldn't want there to be a way to do that.

We've made it as easy as possible to transfer those rights through the ni.com EULA. I'm still arguing for changes to that EULA that would clarify some aspects, but it's the only mechanism I have ever heard of for you to easily state unambiguously, "I don't mind if NI unconditionally uses this, sells this, and redistributes this." If you know of another mechanism, I'd love to hear it.

I'm not sure that's true since rights assignment requires a real signature. What are you going to do about the OpenG toolkit stuff that the API uses?

There is a solution I think, however.

If we release the next version as public domain (which OpenG allows us to do, I think), then it can be posted on NI.com (as their EULA demands). Basically, we give up our rights, but to no one.

-

Do you lose all the Windows title-bar transparency? (i.e. it's dropping out of Aero)

-

Gives a whole new meaning to being "two faced"

-

Uh, wait, what? Can I be sued for stuff I post on NI.com? Sounds like an argument to not post anything on NI.com. Ties back to my previous point about the NI.com Terms of Use containing disclaimers to protect NI but not posters; do I need to add a legal disclaimer to every post and uploaded sample VI?

And if something is adopted into LabVIEW, it becomes NI’s responsibility, surely?

That’s why I like the “1 clause BSD”, dropping the binary requirement. The source code requirement is trivially satisfied by placing the license in the FP or BD or hidden away in the documentation.

BTW to OpenG developers (JG if he’s reading): does OpenG not have some transfer of copyright to OpenG itself? It will be impossible to change licensing terms on OpenG once some of the authors die. I’d like to propose dropping the binary clause.

The only solution that will satisfy NI is ownership, and they seem completely intransigent on that point. It looks like *you* (meaning not NI) will have to jump through all the hoops just so they can use it, and I'm getting to the point where I just think the risks and the hassle outweigh the benefits (not that I see much in the way of benefits to begin with). There are too many unknowns at this point; all the risk is ours and NI are taking none. Everyone else is quite happy with the current state of the licensing, so I suggest we wait and see what happens with another piece of code (like you suggest, the trim whitespace or something similar): find out exactly what the process is, what the Software Freedom Law Center advise and what the implications are. I'm in no rush to a) be a guinea pig and b) see it disappear until next August or even completely! (Yes, I know November is when the new features are defined but, once again, that's NI's constraint, not ours.)

NI have a whole department of lawyers on payroll; get them to show by example how to get around NI's own policies (they wrote them) without the community bending over forwards and dropping their knickers.

-

And as an added bonus, people think it's way creepier when cameras are looking down at them!

Especially the bald ones

-

Hello,

I'm not familiar with the Vision package and I'd like to ask a question.

Do you know if it would be possible to use it to count people entering or exiting through a door?

Thanks

Max

Yup. The trick is to mount the camera above the door looking down. Then you don't suffer from occlusion, you have fairly regular shapes, and you have a constant, uniform background to contrast against. Once you get used to it, you can even start counting prams, pushchairs, wheelchairs, adults/children etc.
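Once the overhead view gives you clean blobs, the counting itself is usually done by tracking each blob's centroid from frame to frame and counting crossings of a virtual line across the doorway. The sketch below shows only that last step in Python; it assumes the Vision side (thresholding against the floor, particle analysis, matching blobs between frames) has already produced per-person tracks, and `count_crossings` and `line_y` are hypothetical names.

```python
def count_crossings(tracks, line_y):
    """Count entries/exits from per-person centroid tracks seen from above.

    Assumes blob detection and frame-to-frame tracking were already done.
    tracks: dict of track id -> list of (x, y) centroids in frame order.
    line_y: image row of a virtual line across the doorway; y grows downward,
            so moving from y < line_y to y >= line_y counts as "entered".
    """
    entered = exited = 0
    for points in tracks.values():
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:
                entered += 1
            elif y1 < line_y <= y0:
                exited += 1
    return entered, exited
```

Using strict and non-strict comparisons on opposite sides means a person hovering on the line is not double-counted; someone who steps in and back out is counted once each way.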

-

Reminds me of Perl code.

That's because it is PCRE (Perl Compatible Regular Expressions).

How about this for graphical programming?

-

Anyone else have any utterly ridiculous code? Not spaghetti, just off the wall, makes no sense but works perfectly kind of code?

-Ian

Yup. Any application I write after 6 months has elapsed

-

Or drjdpowell could make a decision to post his VIs to ni.com.

That's another can of worms. What about my and Ton's contributions in relation to NI's TOCs? I don't think just posting it there solves all of NI's self-inflicted problems.

-

I think the difference is the nature of what is included.

If LabVIEW contains BSD software as part of the LabVIEW environment then we include the attribution in the readme and I guess all is well, this is what many of the third party licenses that distribute with LabVIEW appear to be for.

What I suspect causes the issue here is that if we include libraries under a BSD license as source then that means that everyone that builds an application in LabVIEW using that must:

- Ensure they also have the required legal information distributed with the EXE.

- Ensure they are allowed to use software licensed under the BSD (this is probably NI's biggest concern, I can't talk for the decision makers but it is highly likely we have customers who cannot include BSD source code in their own applications).

Indeed. But as all LabVIEW software must be distributed with either the IDE (for source) or the run-time engine (for executables), this doesn't seem prohibitive. In fact, the shared directory contains a copyright.txt that lists all the attributions.

In fact it states in the NI Forums TOCs that you must warrant

you are the sole owner of the Communications and/or such Communications constitute material in the public domain

So. Public domain stuff can be posted and, by implication, can be used by NI.

Having just re-read the NI TOCs, I saw the liabilities disclaimer for user contributions, which only disclaims NI's liability. Does this extend to the uploader/author via the other disclaimers in different sections? This would be reason enough for me not to post software on there at all. On Lavag we are covered by the Creative Commons disclaimer (apparently). There are so many ambiguities or "open to (lawyer) interpretation" aspects to the NI site. Lavag is simple and straightforward. You are much less likely to have a horde of lawyers bearing down on you (and we all know the deepest pockets win regardless of right/wrong). By posting code here on Lavag, the interests are more aligned with "community" than business.

-


It simply requires that you believe that NI would act in its own best interests and would follow the terms laid out in its own EULA, which seems a pretty "well duh" sort of bet in my book.

Of course. Rather than in the best interests of the author or even its users (although NI is arguably better than most companies in this respect).

Rather than argue about "legal" implications (NI have a team of lawyers, whereas we don't), I suggest the legal issue be passed to the Software Freedom Law Center, and let them advise as to what it means if BSD software is posted on the NI site.

The main issue for me, though, is that I don't believe NI cannot use software if it is under a licence (whatever it is); they just choose not to if they can get away with the author signing all their rights away to them (which makes good business sense). Whether the NI site terms actually mean that or not is a lawyer's question. In my layman's reading, it "looks" like it is just implicit distribution rights, so exactly the same problems as are being proffered here would apply even if it were posted there, with or without a licence. Conversely, if it isn't an issue there, then it should also not be an issue on Lavag, for the same reasons. (Most sites' TOCs are there just to protect the site owner from breach of copyright, should someone else upload copyrighted content, and to allow digital distribution.) I'm reticent about that interpretation, however, since there is an obvious determination that software should be posted there instead of elsewhere, and I can only think of one reason why that would be, especially since other licenced software is distributed with LabVIEW under much stricter conditions than BSD.

-

Depending on the type of noise, a running average or median filter might suffice.
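As a rough guide, a running average smooths broadband, roughly Gaussian noise, while a median filter is better at removing isolated spikes without smearing them into their neighbours. A minimal Python sketch of both (function names are illustrative; only full windows are produced, so the output is `window - 1` samples shorter than the input):

```python
from statistics import median

def moving_average(samples, window):
    """Mean of each full window of `window` consecutive samples."""
    return [sum(samples[i:i + window]) / window
            for i in range(len(samples) - window + 1)]

def median_filter(samples, window):
    """Median of each full window; removes isolated spikes."""
    return [median(samples[i:i + window])
            for i in range(len(samples) - window + 1)]
```

For example, with `[1, 1, 9, 1, 1]` and a window of 3, the median filter removes the spike entirely (`[1, 1, 1]`), while the running average spreads it into every output value.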

-

Late Friday night and almost in sleep mode...

AQ said that to really reach out to all customers we really need to give our code to NI, but since LV 2011 we have the LabVIEW Tools Network built in to LabVIEW and this enables each and every user to easily install features they need (from OpenG, LAVA or LVTN).

LVTN has no restrictions on licensing that I know of, but NI still reviews code and puts it under the NI umbrella, and even publishes some code of their own.

I currently know nothing about JSON, but from reading through the threads here at LAVA it is probably something I will look into in the future. And when I do, I'd like to be able to select the implementation that I want to use: NI version, LAVA version or any other version.

Installing these from LVTN/VIPM mean that new features/versions are available to me directly and I don't have to wait until the next LabVIEW release. Bugs detected in LVTN modules are also likely to be fixed much faster since the fix can be released regardless of the LabVIEW release cycle.

/J

Absolutely. How many bugs are still around from Version 8.x? There's no denying that bug fixing is far better out of the NI release cycle.

Wait a second: that's a little personal, and I think misguided: I don't think Stephen is arguing that NI should own it,

Misguided? (See my previous quote.) AQ's premise is that he can't use the JSON library because it's not NI-owned, and he is writing reams to justify why you should hand your IP over to NI. Most of the time AQ has his engineer's head on. In this instance he has his corporate-employee head on. No one else said "I do think that you should hand the IP over to NI".

That said, based on the licenses Mike found, I don't think that's true - NI already includes BSD-licensed components with LabVIEW. So what gives?

I have a feeling this has more to do with the Data Dashboard than anything else.

[CR] JSON LabVIEW

in Code Repository (Certified)

Posted · Edited by ShaunR

Not throwing an error makes it quite hard to find out what the problem is in even moderately sized streams. However, I'm of the opinion that it should at least try a best guess and raise a warning (preferably identifying where in the stream the problem is). Errors are a double-edged sword, since you can end up halting your program just because someone left off a quote (we are heavily reliant on quotes being in the right place).
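The warning-instead-of-error policy can be sketched with Python's standard `json` module, whose `JSONDecodeError` carries `msg`, `pos`, `lineno` and `colno`. This only shows the "report where, don't halt" half; the best-guess repair is not attempted, and `parse_with_warning` and its `default` parameter are hypothetical.

```python
import json

def parse_with_warning(text, default=None):
    """Parse JSON; on malformed input return `default` plus a located warning
    instead of raising, so the caller's program keeps running.
    Best-guess recovery is NOT attempted here."""
    try:
        return json.loads(text), []
    except json.JSONDecodeError as e:
        # e.lineno, e.colno and e.pos locate the fault within the stream.
        warning = f"{e.msg} at line {e.lineno}, column {e.colno} (char {e.pos})"
        return default, [warning]
```

In LabVIEW terms, the returned list plays the role of a warning on the error cluster (status false, non-zero code), which downstream VIs would pass through rather than skip.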

I'm just waiting for Ton to give me a login to the repo, since I've implemented the decoding of escaped chars (not Unicode, I might add; we need to think about that). It doesn't work for your modified JSON_Double[array], though, since it is utilised in Get Item By Name rather than Get Text. I have, however, made it a VI so you can put it where you think best.
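For reference, decoding the simple JSON escapes while deliberately leaving `\uXXXX` alone (matching the "not Unicode" caveat) might look like this in Python. It is a hypothetical stand-in for the VI, not its actual logic, and it assumes the surrounding quotes have already been stripped.

```python
_SIMPLE_ESCAPES = {'"': '"', '\\': '\\', '/': '/', 'b': '\b',
                   'f': '\f', 'n': '\n', 'r': '\r', 't': '\t'}

def decode_escapes(body):
    """Decode JSON backslash escapes in a string body (quotes already removed).

    Hypothetical helper: \\uXXXX sequences are left untouched for later handling.
    """
    out, i = [], 0
    while i < len(body):
        ch = body[i]
        if ch == '\\' and i + 1 < len(body):
            nxt = body[i + 1]
            if nxt in _SIMPLE_ESCAPES:
                out.append(_SIMPLE_ESCAPES[nxt])
                i += 2
                continue
            # \u or an unknown escape: fall through and keep it verbatim.
        out.append(ch)
        i += 1
    return "".join(out)
```

Consuming escapes as pairs matters: an escaped backslash followed by `u00e9` decodes to a literal `\u00e9`, not to a Unicode escape.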