Everything posted by ShaunR

-

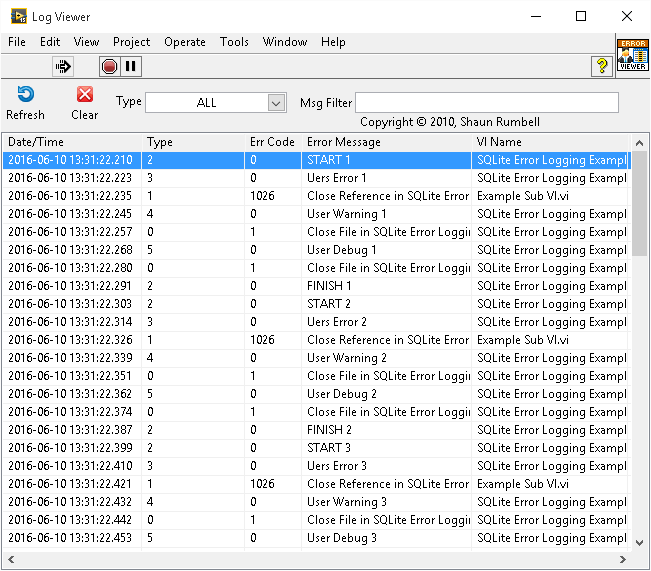

The API distinguishes between user errors and native LabVIEW errors. Since the implementation does not allow you to input user error numbers, the code number for user errors or warnings is moot and defaults to zero. This way it does not interfere with native LabVIEW errors and warnings. If you want to force an error with a specific error number then there is already a primitive to do so (Error Cluster From Error Code.vi) and the API will log it when you pass it in. But don't get hung up on specifics. It's an example of using a SQLite database for error logging, so it isn't meant to be the ultimate silver bullet for error logging (although I don't think it is far off; it works for me in everything so far). If you have some good ideas (like your suggestion of more levels/priorities) then maybe we can improve the example. But at the very least it is a good starting point. Let's see how it fails your use-case and what we can do about it, eh?

-

I think you misunderstand. An API doesn't decide the meaning of a priority as such. It's just another field that you can use to group errors. The developer still decides if it's fatal, recoverable or just nice to know, but he can decide to limit the granularity of written data or filter and sort it (e.g. fatal only). Think of it as categories of errors. Most APIs have a few built-in "categories" for the developer to use.
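
As a rough illustration of a level being "just another field", here is a minimal sketch using Python's built-in `sqlite3` module. The table layout, the level names and the helper functions are all hypothetical; they are not taken from the SQLite API for LabVIEW.

```python
import sqlite3

def open_log(path=":memory:"):
    """Open (or create) a log database with a hypothetical 'errors' table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS errors ("
        " id INTEGER PRIMARY KEY,"
        " level TEXT NOT NULL,"    # category chosen by the developer
        " code INTEGER NOT NULL,"
        " source TEXT NOT NULL)"
    )
    return conn

def log_error(conn, level, code, source):
    """Write one entry; the logger attaches no meaning to 'level'."""
    conn.execute(
        "INSERT INTO errors (level, code, source) VALUES (?, ?, ?)",
        (level, code, source),
    )
    conn.commit()

def fetch_by_level(conn, level):
    """Return (code, source) pairs for a single level, oldest first."""
    rows = conn.execute(
        "SELECT code, source FROM errors WHERE level = ? ORDER BY id",
        (level,),
    )
    return rows.fetchall()

conn = open_log()
log_error(conn, "fatal", 7, "Open File: file not found")
log_error(conn, "info", 0, "Start 1")
log_error(conn, "recoverable", 56, "TCP Read: timeout")

fatal_only = fetch_by_level(conn, "fatal")
print(fatal_only)
```

The level is only a column to filter or sort on; whether "fatal" actually stops the application is still the developer's decision.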

-

Or as Linus Torvalds says: "Never break user space" and "if you change the ABI, I will crush you!" (Shame they don't listen to him.)

-

Umm. No. The numbers in the user source string are just there so you can see which message belongs to which error; otherwise you'd just have a load of starts and finishes and be unable to check which write generated it. The "priority", or "level" as it is called, is the Typedef (Error, Warning, User etc). I've used 4 because that was always enough for me, although I think I will change it from a U8 field to a U64 to give 61 User levels/priorities in total rather than 5. That should be enough, right?
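
The 5 and 61 figures are consistent with a field that spends one bit per level and reserves three bits for built-in levels. That layout is an assumption inferred from the numbers, not something confirmed here, and the function names below are made up for the sketch.

```python
def user_level_count(field_bits, reserved_bits=3):
    """User-defined levels left in a one-bit-per-level field (assumed layout)."""
    return field_bits - reserved_bits

def user_level_mask(index, reserved_bits=3):
    """Bit mask for the index-th user level (0-based), above the reserved bits."""
    return 1 << (reserved_bits + index)

print(user_level_count(8))   # 5 user levels in a U8
print(user_level_count(64))  # 61 user levels in a U64
```

Under that assumption the highest user level in a U64, `user_level_mask(60)`, lands on bit 63 and still fits in the field.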

-

Have a look at the SQLite API for LabVIEW. It has an errors API and an example. I use it in messaging systems with a service that listens to error messages.

-

This is what LavaG is here for. No-one has even seen this new structure yet and we are already trying to figure out how we can abuse it.

-

Sweet. OK. So the default behaviour is no error (accept everything), each case is then tested at design-time in order to make sure it compiles (using normal primitives and preserving coercion and polymorphism) and there is a little bit of manual fnuggery with two new primitives to force a type error so we can implement our own logic. An awesome, outside-of-the-box solution to controlling permissive types. If we have a "To variant (Type Only)", does that mean we can wire it directly to this new structure and use it like an ENUM wired to a case statement but for different types? (I'm thinking outside of macros and at run-time here.)

-

Sweet. Similarly. Many of the primitives have default transformations for type, an example being numerics where we can wire in a double and output an Int (coercion), and this is also apparent on controls and indicators. A case-like feature that detects its terminals has the potential to "explode" that convenience into umpteen subdiagrams where the type must be explicitly defined. Do you have any thoughts on how to keep the generic convenience?

-

What is your solution here to the "polymorphic problem", where we have to define a subVI (or in this case a subdiagram) for every LabVIEW type?

-

Nice. You are only 1 femtometer away from replacing the VIM and removing the old-school named cluster.

-

Well. The first thing I will do is fire up the VIM Hal Demo. If that works without having to replace all the VIMs and has meaningful event names, I will be a very happy bunny.

-

It sounds from the [brief] description that if no cases match then it could break the VI, thus achieving what you desire by adapting to only those types you define in the structure (maybe an optional default case?). Except this is more powerful in that you could also do things like "nearly equal to zero" if it is a double type, for example.
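
A loose text-language analogy, for readers who think in code: Python's `functools.singledispatch` picks an implementation by argument type and can reject anything without a matching case. This is only a sketch of the idea. It dispatches at run time rather than breaking the VI at edit time, and the tolerance is an arbitrary choice.

```python
import math
from functools import singledispatch

@singledispatch
def is_zero(value):
    # No case matches this type: refuse it, roughly like a broken VI.
    raise TypeError(f"unsupported type: {type(value).__name__}")

@is_zero.register
def _(value: int):
    # Integers can be compared exactly.
    return value == 0

@is_zero.register
def _(value: float):
    # "Nearly equal to zero" for doubles; the tolerance is arbitrary.
    return math.isclose(value, 0.0, abs_tol=1e-9)

print(is_zero(0), is_zero(1e-12), is_zero(0.5))
```

Only the types with a registered case are accepted; passing a string raises `TypeError`.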

-

Dealing with multiple DNS entries

ShaunR replied to John Lokanis's topic in Remote Control, Monitoring and the Internet

Oooooh. Never knew it could do that.

-

I don't claim it works under Windows. That's why I suggested the file change notification for Windows.

-

SQLite doesn't support UPSERT.
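
Without a dedicated UPSERT statement, the same effect can be approximated with two statements: an `INSERT OR IGNORE` followed by an `UPDATE`. The sketch below uses Python's `sqlite3` module; the `settings` table and the `upsert` helper are hypothetical names chosen for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE settings (name TEXT PRIMARY KEY, value TEXT)")

def upsert(conn, name, value):
    """Insert the row if the key is new, then set the value either way."""
    # Skipped silently when a row with this primary key already exists.
    conn.execute(
        "INSERT OR IGNORE INTO settings (name, value) VALUES (?, ?)",
        (name, value),
    )
    # Brings an existing row up to date; a no-op change for a fresh row.
    conn.execute("UPDATE settings SET value = ? WHERE name = ?", (value, name))
    conn.commit()

upsert(conn, "port", "8080")
upsert(conn, "port", "9090")
rows = conn.execute("SELECT name, value FROM settings").fetchall()
print(rows)
```

Newer SQLite builds (3.24.0 onwards) do accept an `ON CONFLICT ... DO UPDATE` clause, so this workaround matters mainly for older versions.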

-

You misunderstand. It won't work cross-process because it is not a system-wide hook. It works great in LabVIEW on all platforms but won't tell you when another application has changed it, not even on Linux. Funnily enough, it does work the way you think it does on VxWorks because there is only one process.

-

That's not the function you are looking for. This is.

-

I wasn't hopeful, but it's always worth asking the question. I think there are similar issues with some of the DAQ too. I've only touched the surface with limitations since I've never used Linux in anger, so to speak, just for testing toolkits. It will be interesting to see what NI do with their PXI racks as ETS is Windows 7 (née Windows XP).

-

Interesting. Anything you can share? I've been eyeing Linux with the advent of Windows 10. All my toolkits (except the MDI Toolkit) are tested and work under Linux; it is just the NI licencing toolkit that prevents distribution (for now) on that platform. Vision is a rare requirement for me but, none-the-less, I really want a single platform rather than "choices" dependent on requirements. That used to be Windows but it no longer looks fit-for-purpose. If the holes in support are gradually closed (preferably by NI) then I would make the transition to Linux as the first-choice platform. I'm seriously thinking about formulating a "LabVIEW Linux" virtual machine image and distribution, depending on licencing concerns, since I have been using LabVIEW on VPSs for some time now for testing Websockets. That has given me some experience with Linux MIS, so I have now standardised on LabVIEW, Codeblocks and CodeTyphon as a cross-platform development configuration for Linux as well as Windows (dual boot, VM or VPS). Makulu Linux has a feature whereby you can create a distribution as a clone of your desktop so I'm looking to see how that works 'cos that's brilliant and just the job! At this point I am fairly comfortable about breaking 20-odd years of Windows-only development with a move to Linux for test and automation.

-

Unregistered users can't download (or, apparently, register))

ShaunR replied to Yair's topic in Site Feedback & Support

Yes. But that's not really how it works and it depends a lot on the provider. The service provider will either black-hole your server address for 24-48 hours at a time (so no-one can see it) until it goes away, or you may get stung for exceeding your bandwidth and have to pay extortionate amounts per GB (if you have a limit). The rest of your sentiments I agree with, though. I'm just boggled why Michael's decision is even being questioned because I consider it a black and white choice for the site owner and he's made the decision.

-

Unregistered users can't download (or, apparently, register))

ShaunR replied to Yair's topic in Site Feedback & Support

You said unless there were technical reasons and I gave you a very important one. Now your objection is about DDOS policies and registration? I'm struggling to understand the vehemence of your objection to a standard practice for websites (which is standard because of the reason I outlined) and why it is such an issue for you on LavaG.

-

Unregistered users can't download (or, apparently, register))

ShaunR replied to Yair's topic in Site Feedback & Support

You can't ban malicious people that hog your server resources by downloading every file on your site from just a couple of computers if it's open to everyone. At least if they have to register you increase the work they have to do and can mitigate it somewhat without having to spend huge amounts on DDOS services.

-

You can always use Windows 7 until end of life. Linux is getting some love from NI, and device manufacturers have standardised on TCPIP and USB, so it's becoming a pretty good alternative if you don't need Vision. It seems the time is ripe for Linux RT Desktop.

-

Apparently they can only be deferred by months and security updates cannot be prevented at all.

-

OK. So I decided to evaluate Windows 10, not as a desktop OS, but as a DAQ and automation platform like Windows 7 on PXI racks. My conclusion was that it is no longer a viable platform for test/DAQ and automation. There were 4 main killers for me.

1. Updates are forced by Microsoft when connected to the Internet, which not only means that we no longer have control over versioning of a deployment but we also have no control over bandwidth usage. The only options are for immediate update or deferred (6 months) update. We are no longer able to stage deployments with tested targets that are guaranteed to remain stable and prevent mutation of the customer's system.
2. Resource usage by background Windows processes that cannot be disabled is prohibitive for DAQ and unpredictable in when it occurs (yes, I'm looking at you, "Microsoft Common Language runtime native compiler").
3. It is opaque as to exactly what information is being sent to Microsoft and the system is set for maximum disclosure of private data as default. This means it is difficult or even impossible to guarantee a customer's privacy. Even if a comprehensive assessment is made of leaked information, there is no confidence that settings will not be reverted or measures circumvented by future updates (see Item 1).
4. Bandwidth usage is hijacked in order for M$ to deploy updates to nearby machines using "Background Intelligent Transfer" (basically bittorrent). At present this can be disabled in the services but I am not confident that will always be the case. This has caused a couple of mobile applications to exceed limits, resulting in reduced service speeds and high data usage charges.