Posts: 5,001

Days Won: 311
Everything posted by ShaunR

-

Unregistered users can't download (or, apparently, register))

ShaunR replied to Yair's topic in Site Feedback & Support

You said unless there were technical reasons, and I gave you a very important one. Now your objection is about DDOS policies and registration? I'm struggling to understand the vehemence of your objection to a standard practice for websites (which is standard because of the reason I outlined) and why it is such an issue for you on LavaG.

Unregistered users can't download (or, apparently, register))

ShaunR replied to Yair's topic in Site Feedback & Support

You can't ban malicious people that hog your server resources by downloading every file on your site from just a couple of computers if it's open to everyone. At least if they have to register, you increase the work they have to do and can mitigate it somewhat without having to spend huge amounts on DDOS services.

You can always use Windows 7 until end of life. Linux is getting some love from NI, and device manufacturers have standardised on TCP/IP and USB, so it's becoming a pretty good alternative if you don't need Vision. It seems the time is ripe for Linux RT Desktop.

-

Apparently they can only be deferred by months and security updates cannot be prevented at all.

-

OK. So I decided to evaluate Windows 10 - not as a desktop OS, but as a DAQ and automation platform like Windows 7 on PXI racks. My conclusion was that it is no longer a viable platform for test/DAQ and automation. There were 4 main killers for me.

1. Updates are forced by Microsoft when connected to the Internet, which not only means that we no longer have control over versioning of a deployment but we also have no control over bandwidth usage. The only options are immediate update or deferred (6 months) update. We are no longer able to stage deployments with tested targets that are guaranteed to remain stable and prevent mutation of the customer's system.
2. Resource usage by background Windows processes that cannot be disabled is prohibitive for DAQ, and it is unpredictable when they occur (yes, I'm looking at you, "Microsoft Common Language runtime native compiler").
3. It is opaque as to exactly what information is being sent to Microsoft and the system is set for maximum disclosure of private data as default. This means it is difficult or even impossible to guarantee a customer's privacy. Even if a comprehensive assessment is made of leaked information, there is no confidence that settings will not be reverted or measures circumvented by future updates (see item 1).
4. Bandwidth usage is hijacked in order for M$ to deploy updates to nearby machines using "Background Intelligent Transfer" (basically bittorrent). At present this can be disabled in the services but I am not confident that will always be the case. This has caused a couple of mobile applications to exceed limits and result in reduced service speeds and high data usage charges.

-

If you are sure, then I will bow to your better judgment and familiarity with the API. It's such an awful thing to do in modern APIs that I would have only expected that sort of thing in 1990s 32 bit code to thunk down to 16 bit :D.

It was (is) actually Aristos Queue's signature and it was C++ rather than C. Unless you are a masochist, you don't need to write any C/C++ code in LabVIEW because ..... what's a pointer?

I'm sure Microsoft said exactly the same thing right before their first "Blue Screen of Death" report.

-

There is something wrong here. Constants don't change from 32 to 64 bit. Are you sure you are not looking at a pointer to a structure?

.....later, after some googling....

`#define VIDIOC_S_FMT _IOWR('V', 5, struct v4l2_format)`

It's not a constant, it is a macro expansion to a function. You're inspecting a function pointer.
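Worth checking against the headers, though: as far as I know, on Linux `_IOWR` does not expand to a function call. It packs the direction, the type character, the command number and the `sizeof` of the argument struct into a single integer, which would also explain a value that differs between 32 and 64 bit. A rough Python sketch of that packing, assuming the common asm-generic bit layout (some architectures use a different one) and assuming sizes of 204 and 208 bytes for `struct v4l2_format` (typical values, not taken from this thread):

```python
# Bit layout assumed from the asm-generic ioctl header; not universal.
_IOC_NRSHIFT = 0
_IOC_TYPESHIFT = 8    # after 8 bits of command number
_IOC_SIZESHIFT = 16   # after 8 bits of type character
_IOC_DIRSHIFT = 30    # after 14 bits of argument size
_IOC_WRITE = 1
_IOC_READ = 2


def iowr(type_char, nr, size):
    """Pack a read/write ioctl request number the way _IOWR is assumed to."""
    direction = _IOC_READ | _IOC_WRITE
    return (
        (direction << _IOC_DIRSHIFT)
        | (size << _IOC_SIZESHIFT)
        | (ord(type_char) << _IOC_TYPESHIFT)
        | (nr << _IOC_NRSHIFT)
    )


# Assumed struct sizes: 204 bytes on 32 bit, 208 bytes on 64 bit.
vidioc_s_fmt_32 = iowr("V", 5, 204)
vidioc_s_fmt_64 = iowr("V", 5, 208)
print(hex(vidioc_s_fmt_32), hex(vidioc_s_fmt_64))
```

Under those assumptions only the size field changes, so the two values differ in one byte and nothing else.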

-

Actually you already answered your own question, which was "No it isn't a bug" (the many surprises about typedefs?). Therefore you only have two options: put up with it or write a script. I'm guessing most of us don't encounter it at all (like me, although I know it happens) or it's just a minor and rare annoyance not warranting a script. So you can't really blame us for wandering off and musing on the merits of enums (which would solve your problem).

Good point. Breaking a VI so you know where to modify is definitely an advantage of enums, but are you still writing systems with lots of cases that need to be modified everywhere? I thought we had retired those organic architectures years ago.

-

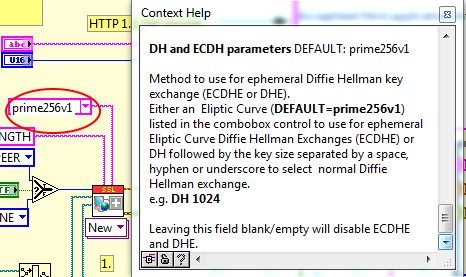

One of the biggest (but not the only) "pros" for enums used to be that case statements created readable case names when wired, and for things like state machines it was a lot easier to see what was happening: readability. That really became a moot point when cases started to support strings. Enums are overrated and overused now IMO and pretty useless in flexible messaging architectures. I was only recently turned on to the text combo box too which, when you consider string case structures, is an excellent enum replacement. I used it for the Encryption Compendium for LabVIEW since there is a predefined list of algorithms to choose from. Not only did it allow for using new algos if the user updates the binaries without having to wait for me to release a new version (they just type in the name) but it enabled parameterisation of the algorithms (like DH 1024 or DH 2048) for advanced uses. Catering for the different methods could have got real messy, and the standard set of algos would have been much harder to use without it.

-

This also has other subtle implications such as you can change the ring strings at run-time whereas you cannot with enums. This means that if you want to translate a UI.......don't use Enums on the FP.

-

How to increase performance on large configuration INIs?

ShaunR replied to flowschi's topic in OpenG General Discussions

Buy an SSD? Only load the sections that are not the same as the LabVIEW in-memory defaults? Only load what you need when you need it (just in time config)? Split out into multiple files? (diminishing returns) Refactor to use smaller configurations?

Let's face it. 15-30 seconds to load 10,000 line inifiles is pretty damned good for a convenience function where you are probably stuffing complex clusters into it. What is your target time?

7 replies

Tagged with: variantconfig, configuration ini (and 2 more)

Well, you are sending a waveform as a block (array) of data which has a fixed dt, so you need to know the start time. Prepend the date/time to your double array and read it at the other end to set your x axis start. You can then compare that value with the time you received it to get a rough latency of transmission.
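In text form, the idea looks roughly like the sketch below. It is Python rather than LabVIEW, and the function names, the big-endian double packing and the seconds-since-epoch timestamp are all assumptions for illustration. The latency figure is only meaningful if both machines have reasonably synchronised clocks.

```python
import struct
import time


def pack_waveform(samples, t0=None):
    """Prepend the start time (seconds since epoch) to the samples as one extra double."""
    if t0 is None:
        t0 = time.time()
    # Big-endian doubles; the byte order is an assumption, match it to your sender.
    return struct.pack(f">{len(samples) + 1}d", t0, *samples)


def unpack_waveform(payload, dt, received_at=None):
    """Split off the start time, rebuild the x axis and estimate a rough latency."""
    if received_at is None:
        received_at = time.time()
    count = len(payload) // 8  # 8 bytes per double
    t0, *samples = struct.unpack(f">{count}d", payload)
    x_axis = [t0 + i * dt for i in range(len(samples))]
    latency = received_at - t0
    return x_axis, samples, latency


payload = pack_waveform([1.0, 2.0, 3.0], t0=100.0)
x_axis, samples, latency = unpack_waveform(payload, dt=0.5, received_at=100.25)
```

Because the timestamp is the start of the block, the latency also includes however long the block took to acquire, so treat it as a rough figure only.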

-

Sharing configuration information between processes

ShaunR replied to Neil Pate's topic in Application Design & Architecture

OK. So you want to "pull" and like ini-files. Hell, there is a whole operating system based on ini files, eh Linux? So why not?

Each "actor" (I hate that terminology) has its own ini-file, whose name is dependent on the actor, process, service or whatever's name. Let's say you have an "Acquire_DC_volts"; then you could have acquire_dc_volts.ini. Your "System Config Engine" broadcasts an "UPDATE" message (event or queue list, up to you and your architecture) that prompts each actor that wants config info to issue a query message to the "System Config Engine" that says "I want this file". The "System Config Engine" then loads the file and sends it to the requester.

Why do it this way instead of relying on each actor to load it? Because you can make an API and handle more than just config files, you can order the requests if there are inter-dependencies, and you can swap out ini-files for a DB later once you have succumbed to the dark side.

You can see this type of messaging in the VIM Hal Demo. There is a FILE.vi [service] that does the actual reading and if you look in MSG.vi you will see the requests (e.g. "MSGPOPUP>FILE>READ>msg.txt" and "MSGPOPUP>FILE>READ>bonnie.txt"). It doesn't happen on config changes because there is no user changeable config, rather, when certain events happen - but it's the same thing.
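A minimal text-language sketch of that broadcast-then-pull flow, in Python rather than LabVIEW. The class and method names are hypothetical, and the ini files are held as in-memory strings instead of being read from disk, purely to keep the example self-contained:

```python
import configparser


class ConfigEngine:
    """Hypothetical 'System Config Engine': broadcasts UPDATE and serves files on request."""

    def __init__(self, files):
        self._files = files        # filename -> ini text (stands in for files on disk)
        self._subscribers = []

    def subscribe(self, actor):
        self._subscribers.append(actor)

    def broadcast_update(self):
        # Only announce that something changed; no config data is pushed.
        for actor in self._subscribers:
            actor.on_message("UPDATE", self)

    def request(self, filename):
        # The single place that knows how config is stored (ini today, a DB later).
        parser = configparser.ConfigParser()
        parser.read_string(self._files[filename])
        return {section: dict(parser[section]) for section in parser.sections()}


class Actor:
    """Hypothetical actor whose ini-file name is derived from its own name."""

    def __init__(self, name):
        self.name = name
        self.config = {}

    def on_message(self, message, engine):
        if message == "UPDATE":
            # Pull: ask the engine for "my" file.
            self.config = engine.request(f"{self.name.lower()}.ini")


engine = ConfigEngine({"acquire_dc_volts.ini": "[channel]\nrange = 10\n"})
actor = Actor("Acquire_DC_volts")
engine.subscribe(actor)
engine.broadcast_update()
```

Since every request goes through `request`, swapping the storage or ordering inter-dependent requests only touches the engine, not the actors.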

Sharing configuration information between processes

ShaunR replied to Neil Pate's topic in Application Design & Architecture

Yes. I use a different topology; I'm at the other end of the spectrum I talked about earlier. I only send UPDATE messages and whoever needs the configuration goes and queries the database for whatever they are interested in. You push; I pull.

Sharing configuration information between processes

ShaunR replied to Neil Pate's topic in Application Design & Architecture

Well, messages are ephemeral and config info is persistent, so at some point it needs to go in a file. This means that messages are great for when the user changes something but a pain for bulk settings. Depending on the storage, your framework and your personal preferences, the emphasis will vary between the extremes of messaging every parameter and just messaging that a change has occurred.

Sharing configuration information between processes

ShaunR replied to Neil Pate's topic in Application Design & Architecture

If you can use a LV2Global to transfer information then it isn't a separate process in the operating system sense. May seem pedantic, but it makes a huge difference to the solutions available. Anyway..... I think most people now use a messaging system of various flavours so it's usually a case of just registering for the message wherever you need it. Usually for me that just means re-reading the database, which can supply you with the sharing like a global but without dependencies.

Find orphan VIs in project hierarchy (on disk)

ShaunR replied to LogMAN's topic in Source Code Control

Commented Out Diagrams? No Specifies whether LabVIEW returns dependencies that LabVIEW does not invoke, such as those in the Disable case of a Diagram Disable structure. Also, if you wire a constant to the selector terminal of a Case structure, LabVIEW considers dependencies in non-executing cases to be commented out and does not invoke them.

Oooh. This is fun. http://www.indeed.com/jobtrends/q-labview-q-programming.html?relative=1 Python is far superior to PHP and IMO is why it is enjoying the attention. http://www.indeed.com/jobtrends/q-php-q-python.html?relative=

-

I have access to all versions from 7 through to 2015 (32 and 64 bit as well as other platforms). Let there be no mistake. I am not calling into question LabVIEW's performance and stability - which has always been exemplary. My jocularity is that people still believe this is a valid excuse for shipping commercial software and proffering it as a feature. We have "software as a service" (SAAS) so I will coin the phrase "excuse as a feature" (EAAF).

Also, to an extent, it's a case of "you have to laugh or else you would cry" to my disappointment that this myth is allowed to perpetuate when it was a single version (2011) that was supposed to be the "Stability and performance" release after the pain of 2010. I laughed then when they came out with it and I'm still laughing 5 years later (you can find it on LavaG.org).

Try charging your customers for a "performance and reliability" version of your software and see how far you get.

-

-

Register for shared variable data value change notifications

ShaunR replied to george seifert's topic in LabVIEW General

It did. It was called "BridgeVIEW". They didn't sell many so decided to make it a toolkit instead.

Register for shared variable data value change notifications

ShaunR replied to george seifert's topic in LabVIEW General

The same way you do from any DLL - PostLVUserEvent. Generating events for LabVIEW is trivial. Maintaining a DLL (especially multi-platform) is a right royal pain in the proverbial, however.

Find orphan VIs in project hierarchy (on disk)

ShaunR replied to LogMAN's topic in Source Code Control

I do this slightly differently (in Project Probe). Yours might be faster if you only look at link info from the file rather than loading the hierarchy but I have to look at lots of other VI stuff that require opening the VI anyway too (like re-entrancy, debugging enabled, icon, description etc). I also use it to sort VI refnums when force compiling hierarchies. I create the file list similarly to you then I open each VI and check if it has dependencies (Get VI Dependencies Method Node). The VI opened and its dependencies are added to a [found] list. If a VI in the file list is already in the list or in memory then it doesn't need to be opened and could be considered already processed (it has a parent that was previously opened). If a VI has no parent and has no dependencies then it is an orphan. It can also find VIs in conditional disables. So I deduce if it is an orphan from the dependencies of all the VIs
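The bookkeeping described above can be sketched in Python as follows. This is not the Project Probe code: `get_dependencies` is a hypothetical stand-in for opening a VI and calling the Get VI Dependencies method, and it is assumed to return the whole hierarchy below that VI.

```python
def find_orphans(file_list, get_dependencies):
    """Return VIs that nothing depends on and that depend on nothing themselves."""
    found = set()      # every VI opened or seen as a dependency so far
    children = set()   # VIs that some opened VI depends on, so they have a parent
    childless = set()  # opened VIs that reported no dependencies
    for vi in file_list:
        if vi in found:
            continue   # already covered by a previously opened parent, no need to open
        deps = set(get_dependencies(vi)) - {vi}
        found.add(vi)
        found |= deps
        children |= deps
        if not deps:
            childless.add(vi)
    # No parent and no dependencies means orphan.
    return sorted(childless - children)


# Hypothetical project: Old.vi is not used by anything and uses nothing.
hierarchy = {
    "Main.vi": ["Sub.vi", "Util.vi"],
    "Sub.vi": ["Util.vi"],
    "Util.vi": [],
    "Old.vi": [],
    "Top2.vi": ["Util.vi"],
}
files = ["Util.vi", "Old.vi", "Main.vi", "Sub.vi", "Top2.vi"]
orphans = find_orphans(files, lambda vi: hierarchy[vi])
```

Note that `Util.vi` is opened first and reports no dependencies, but it is only ruled out as an orphan once `Main.vi` is processed, which is why the decision is made after the whole file list has been walked.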