
Posts: 4,998 | Days Won: 311


Everything posted by ShaunR

-

I only half agree with this. To replicate VIPM, yes. To make an installer, no. (Are you still using the modified OpenG Packager?) As you are probably aware, I use a wizard-style installer for the SQLite API for LabVIEW because VIPM can't handle selective 32- and 64-bit installs. It can also integrate into a global "package manager" which is SQLite-based rather than using the "spec" INI file. In fact, that was the reason I added the ability to import an INI file to the SQLite API for LabVIEW: so I could import the VIPM spec files.

The downside to the wizard is the size of the deployment, but it suffices for isolated installs and it can be retrospectively integrated into the global manager. The upside is that, in addition to the standard pages, you can add custom pages for your install, which makes it much more flexible, if a little more effort. So the wizard has all the bits and pieces needed to install (palette menus, recompiling, etc.), and I did start to make a Wizard Creator where the installer was an example. Just one of my many half-finished projects.

The global manager (which is the other side of VIPM) would take a lot to productionise and I'm not currently interested in stepping on anyone's toes. It works great for me and I don't have to produce help documentation, which takes me longer than writing the software. It will ultimately also handle my own licensing scheme (which we have discussed previously), but even then it will be limited to my own products, of which there are a few now. So yes, it is totally doable (and has been done) with enough effort, but I would do it slightly differently to VIPM.
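The SQLite API itself is LabVIEW, so the following is only a Python sketch of the idea of pulling an INI-style package spec into an SQLite table that a package manager can query instead of the INI file. The single flat table, its column names and the example section/key names are assumptions for illustration, not the VIPM spec layout or the SQLite API's own schema.

```python
import configparser
import sqlite3
from typing import Optional

SCHEMA = """
CREATE TABLE IF NOT EXISTS spec (
    package TEXT NOT NULL,  -- package the entry belongs to
    section TEXT NOT NULL,  -- INI section name
    key     TEXT NOT NULL,  -- INI key
    value   TEXT,           -- INI value, kept as text
    PRIMARY KEY (package, section, key)
)
"""

def import_spec(conn: sqlite3.Connection, package: str, ini_text: str) -> int:
    """Flatten every section/key/value of an INI spec into the spec table.

    Returns the number of rows written. Re-importing overwrites values for
    keys that already exist rather than duplicating them.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case exactly as written in the file
    parser.read_string(ini_text)
    rows = [
        (package, section, key, value)
        for section in parser.sections()
        for key, value in parser.items(section)
    ]
    conn.execute(SCHEMA)
    with conn:  # a single transaction for the whole import
        conn.executemany("INSERT OR REPLACE INTO spec VALUES (?, ?, ?, ?)", rows)
    return len(rows)

def get_value(conn: sqlite3.Connection, package: str,
              section: str, key: str) -> Optional[str]:
    """Look up one spec value, or None if it was never imported."""
    row = conn.execute(
        "SELECT value FROM spec WHERE package = ? AND section = ? AND key = ?",
        (package, section, key),
    ).fetchone()
    return row[0] if row else None
```

Once the spec lives in a table, questions like "which installed packages depend on X?" become a single SQL query rather than a scan over many INI files.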


-

This is really a source code control issue rather than an installer issue. The two are related, in that an installer usually installs a particular version of a dependency, but the goals are different. Given NI's refusal to give us a proper source control system that is fit for purpose, VIPM is the best of a bad situation for simple version control, but it isn't really a version control tool any more than an RPM package installer (on which it is based) is.


-

Question about Write Panel to INI__ogtk.vi

ShaunR replied to kgaliano's topic in OpenG General Discussions

I was emphatically with you right up until the "train tracks" (my OCD prevents your version, and there are genuine optimization reasons for passing data through) and palettes (time-and-motion studies on placing primitives are ridiculous; so you can make mistakes faster?). Nice to see a fellow heathen picking holes in accepted dogma. I was beginning to think I was the only one.

Why not ask on the support thread, so that the developer gets a notification to answer your query?

-

I have no idea which toolkit you are using, but if it is the OpenOffice RGT add-on, then... the support thread is here.

-

Is that still an issue? (It says version 3.3.1 and was last updated on 05/05/2011.)

-

Question regarding Error Wire in some OpenG subVIs

ShaunR replied to kgaliano's topic in OpenG General Discussions

That's a partitioning argument, but more importantly, it's exactly that sort of thinking that means an error is emitted when reading an entire text file, which is a glorious pain in the backside.

Question regarding Error Wire in some OpenG subVIs

ShaunR replied to kgaliano's topic in OpenG General Discussions

Yawn. We are back to the beginning of the circle, then. Every few years someone decides that this or that is a bad idea so as to be "progressive"; then a few years later another person decides the opposite again. It's a non-argument, in the same way as "how many different types of errors should we have?" I use warnings to tell users/developers that data is being dropped; an error is not appropriate for that, because dropping data is a designed feature to protect the server/client.
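In LabVIEW terms, a warning is an error cluster whose status is FALSE but whose code is nonzero, so downstream VIs keep executing while the information still travels with the wire. A minimal Python analogue of that idea follows; the `ErrorCluster` class, the `frame_message` function and the code 5001 are hypothetical stand-ins, not part of any real API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class ErrorCluster:
    """Python stand-in for LabVIEW's error cluster (status, code, source)."""
    status: bool = False  # True means error
    code: int = 0         # a nonzero code with status False is a warning
    source: str = ""

    @property
    def is_warning(self) -> bool:
        return not self.status and self.code != 0

# Hypothetical application-defined code meaning "payload truncated".
TRUNCATED_WARNING = 5001

def frame_message(payload: bytes, max_len: int,
                  error_in: Optional[ErrorCluster] = None
                  ) -> Tuple[bytes, ErrorCluster]:
    """Limit a payload to max_len bytes before it is sent.

    Dropping the excess is a designed protection for the server/client,
    so it is reported as a warning and downstream code keeps running.
    """
    error_in = error_in if error_in is not None else ErrorCluster()
    if error_in.status:
        # Usual error-in convention: do nothing and pass the error on.
        return b"", error_in
    if len(payload) > max_len:
        dropped = len(payload) - max_len
        return payload[:max_len], ErrorCluster(
            False, TRUNCATED_WARNING, f"frame_message: {dropped} bytes dropped")
    return payload, error_in
```

The caller can log or display the warning without having to clear an error just to keep the rest of the chain running.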

Actor Framework settings dialog

ShaunR replied to chrisempson's topic in Application Design & Architecture

Yes. The "advanced" or dependent configuration is not easily catered for by just blindly loading and saving FP controls. It would be a mistake to try to add logic to a generic API to cater for application-specific dependencies. I chose to have the basic load/save behaviour, which is fine 8 times out of 10, and then use a query to update specific controls, so it is a two-step update for corner cases. It is very simple but does require a little thought about naming your controls. The current query function only matches label names partially, so adding "Advanced_" to a control's label, for example, means you can achieve that behaviour. A slightly more complicated query across dialogues would be nice, and in my personal version the default query string can be overridden, but since I never get any feedback about features people want, or bug reports to drive another release, I haven't implemented it yet.
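The two-step update can be sketched in Python, modelling a panel as a dictionary of control label to value. This is an illustration of the idea only: the dictionary model, the function names and the "Mode"/"Expert" rule are hypothetical, and partial match is assumed to mean "label contains the pattern".

```python
from typing import Any, Callable, Dict

Panel = Dict[str, Any]  # hypothetical stand-in: control label -> value

def query_controls(panel: Panel, pattern: str) -> Panel:
    """Return the controls whose label contains `pattern` (partial match)."""
    return {label: value for label, value in panel.items() if pattern in label}

def load_with_fixup(panel: Panel, saved: Panel,
                    fixup: Callable[[Panel, Panel], Panel],
                    pattern: str = "Advanced_") -> Panel:
    # Step 1: generic load; every saved value is written back blindly.
    panel.update(saved)
    # Step 2: application-specific logic sees only the matching controls
    # (plus the whole panel for context) and returns the values to apply.
    panel.update(fixup(query_controls(panel, pattern), panel))
    return panel

# Hypothetical rule: advanced settings only survive in "Expert" mode.
def expert_only(subset: Panel, panel: Panel) -> Panel:
    return subset if panel["Mode"] == "Expert" else {k: 0 for k in subset}
```

The generic loader never needs to know about the dependency; only the small fix-up callback does, which keeps application logic out of the reusable API.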


-

Actor Framework settings dialog

ShaunR replied to chrisempson's topic in Application Design & Architecture

I think if you move to a DB, it will supply the decoupling and still give you all the same capabilities. A while ago I added a "Settings" library to the SQLite API which, I think, does exactly what you're describing; it's an almost direct replacement. You just place the VI on the diagram and you can load and save the FP controls to the DB. It also gives you "Restore to Default" and has a "query" function, so you can return as many or as few parameters as you like (see the Panel Settings Example). I was also thinking about adding an "Update" event broadcast similar to your system, since for data-aware XControls it would be a very nice feature to have them all update on a change, and it would only take a couple of minutes to add.
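As a rough Python illustration of storing panel settings in a DB with save, load, partial-match query and restore-to-default, here is a sketch using the standard `sqlite3` module. The table layout, the `PanelSettings` class and its methods are assumptions made for the example; they are not the Settings library's actual VIs or schema.

```python
import sqlite3
from typing import Any, Dict

class PanelSettings:
    """Hypothetical sketch: persist one panel's control values in SQLite."""

    def __init__(self, conn: sqlite3.Connection, panel: str,
                 defaults: Dict[str, Any]) -> None:
        self.conn, self.panel = conn, panel
        with conn:
            # Untyped value columns keep SQLite's dynamic typing,
            # so ints, floats and strings round-trip unchanged.
            conn.execute(
                "CREATE TABLE IF NOT EXISTS settings ("
                "panel TEXT, name TEXT, value, default_value, "
                "PRIMARY KEY (panel, name))"
            )
            # Register defaults once; values saved earlier are left alone.
            conn.executemany(
                "INSERT OR IGNORE INTO settings VALUES (?, ?, ?, ?)",
                [(panel, n, v, v) for n, v in defaults.items()],
            )

    def save(self, controls: Dict[str, Any]) -> None:
        """Store current values; only controls registered with defaults persist."""
        with self.conn:
            self.conn.executemany(
                "UPDATE settings SET value = ? WHERE panel = ? AND name = ?",
                [(v, self.panel, n) for n, v in controls.items()],
            )

    def load(self, pattern: str = "") -> Dict[str, Any]:
        """Return saved values whose name contains `pattern` ("" = all)."""
        rows = self.conn.execute(
            "SELECT name, value FROM settings WHERE panel = ?", (self.panel,)
        )
        return {name: value for name, value in rows if pattern in name}

    def restore_defaults(self) -> None:
        with self.conn:
            self.conn.execute(
                "UPDATE settings SET value = default_value WHERE panel = ?",
                (self.panel,),
            )
```

Because every dialogue shares the same table, another process or panel can read the same settings, which is where the decoupling comes from.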


-

The issue I have with most unit test frameworks is that they effectively double, triple or even quadruple your code base with non-reusable, disposable code, increasing effort and cost substantially, often for minimal gain; more code means more bugs. You end up debugging a lot of code that isn't required for delivery, for a perceived peace of mind in regression testing. I'm skeptical that this is a good trade-off.

I couldn't install the VIP since it is packaged with VIPM 2014+ (I'm still livid at JKI for that). I did, however, extract the goodness and look at it piecemeal, but couldn't actually run it because of all the missing dependencies. So if I've missed some aspects, I apologise ahead of time. It looks like early days as you find your way through. Some things you might want to look at:

1. Instead of creating a VI for every test with a pass/fail indicator that you then scour, look at "hooking" the actual VI's front panel controls (there is an example on here I posted a long, long time ago) and comparing against expected values and limits. This moves the pass/fail into a single test harness VI, much like a plug-in loader, and for non-iterative tests it will give you results without using a template or writing test-specific code.
2. Consider making "Run Unit Tests" a stand-alone module. This will enable you to call it dynamically from multiple instances to run tests in parallel, if you so desire.
3. Think about adding a TCP/IP interface later on so that you can interact with other programs like TestStand (forget all that crappy special TestStand variable malarkey). This means your test interface is just one of a number of interfaces you can bolt on as the front end, and it doesn't even limit you to LabVIEW (how about a web browser?). With a little bit of thought, this can later be extended into a messaging API to cater for iteration, waiting and command/response behaviour. This, along with #2, is also the gateway to test scripting (think about how I may have tested the Hal Demo both as a system and as individual services).

Edited: 'cos I can
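Point 1 can be illustrated outside LabVIEW with a Python analogue: one generic harness feeds inputs to the unit under test, reads its named outputs, and compares each against an exact value or a limit range, so no per-test pass/fail code is written. Here a function returning a dictionary stands in for reading a VI's indicators; the `Limit`, `Case`, `run_suite` and `scale` names are hypothetical, and nothing here reproduces the front-panel hooking itself.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

@dataclass
class Limit:
    """Inclusive numeric range an output must fall within."""
    low: float
    high: float

    def accepts(self, value: Any) -> bool:
        return isinstance(value, (int, float)) and self.low <= value <= self.high

@dataclass
class Case:
    name: str
    inputs: Dict[str, Any]    # values written to the unit's inputs ("controls")
    expected: Dict[str, Any]  # per output: an exact value or a Limit

def run_case(unit: Callable[..., Dict[str, Any]], case: Case) -> List[str]:
    """Run the unit once and compare every named output; return the failures."""
    outputs = unit(**case.inputs)
    failures = []
    for name, want in case.expected.items():
        got = outputs.get(name)
        ok = want.accepts(got) if isinstance(want, Limit) else got == want
        if not ok:
            failures.append(f"{case.name}: {name} = {got!r}, expected {want}")
    return failures

def run_suite(unit: Callable[..., Dict[str, Any]],
              cases: List[Case]) -> Dict[str, List[str]]:
    """One generic harness for all cases; no per-test pass/fail code."""
    return {case.name: run_case(unit, case) for case in cases}

# Hypothetical unit under test: returns its "indicators" by name.
def scale(raw: float, gain: float) -> Dict[str, Any]:
    value = raw * gain
    return {"value": value, "saturated": value > 10.0}
```

The test cases are pure data, so they could equally be loaded from a file or sent over the TCP/IP interface suggested in point 3.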

-

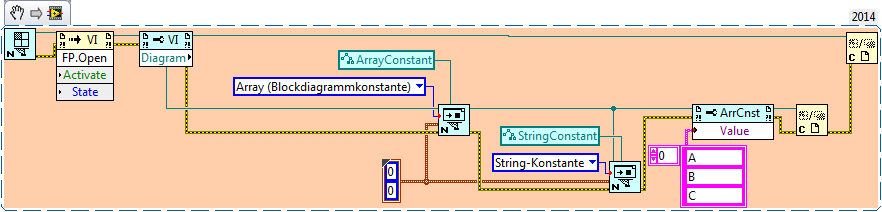

Create an array constant and fill it with n-elements

ShaunR replied to weigsi's topic in VI Scripting

-

Like this

-

I merely meant that scientists and engineers sat in laboratories really don't care about this sort of stuff. They just want their experiment/machine to work. They choose LabVIEW because they don't have to worry about pointers, memory, stacks and registers (all the crap other languages have to deal with), so the mindset of "how do I break/exploit this?" isn't there. With the introduction of the academic licences not that long ago, LabVIEW has been exposed to more people with exactly that mindset, and many are polyglots, not only on Windows but on Linux. I'm not saying you are in academia, just that because of the academic exposure we should see more black, white and grey hats targeting LabVIEW, where previously there were next to zero.

-

d) Nothing to do with LabVIEW. I think this is very valuable work but useless from a LabVIEW-for-engineers point of view. This is really just showcasing a (zero-day?) exploit that will hopefully be addressed in the next update, and the "feature" will disappear. I would have preferred a responsible disclosure of the exploit, and I expect this will get very little love from the community, as I would guess that outside NI R&D the number of people who even understand it, let alone could leverage it, would fit in a small family car. It is, however, a splendid example worthy of DEFCON and CeBIT for demonstrating attacks on the niche language called LabVIEW. Hopefully we will see DEFCON videos of LabVIEW RT and PXI boxes being pwned and machines taking people's arms and legs off. Maybe then the malaise and complacency around security in the LabVIEW community might be eroded somewhat. To be honest, I expected this sort of thing a while ago, but I guess we are only now reaping the benefits of the academic licences. Keep exploiting while I go for some more popcorn. I'm sure there are a couple of eyes on this work just itching to marry it with self-expanding VIs.

-

Oooooh. Memory-mapped files as a DVR?

-

You are not really talking about "style" but "state". There are two basic schools of thought: try to keep state locally in a model, or let the device keep the state. The former is fast but more error-prone (desynchronisation between your model and the actual device), while the latter is slower but more robust and easier to recover. There are also shades in between, which tend to depend on the device itself. If a device has multiple programmable config memories, then a single command to switch recipes and read a value continuously may be needed (e.g. motors with profiles). If you have 1,000 UI controls to turn an LED on, then you may have to selectively check what the user has changed, send the appropriate commands, and hope they didn't press the reset button on the device. My preference is device first, local model second (when performance becomes an issue). This is mainly because with a local model you usually end up re-inventing the wheel, that is, recreating the logic of the device firmware, and some firmware can be very complicated indeed. Some devices even have their own controllers, so it becomes more of a distributed control system. Then I like to chop the hands off operators and leverage advanced features of the device for configuration (recipes), if it has any.
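The two schools of thought can be contrasted in a short Python sketch. The `Device` protocol with `query`/`write` methods is a hypothetical driver interface, not any real instrument API; the point is only that `DeviceFirst` always asks the hardware, while `LocalModel` answers from a cache, sends just the changed values, and needs an explicit `resync()` when the device changes behind its back.

```python
from typing import Any, Dict, Iterable, Protocol

class Device(Protocol):
    """Hypothetical driver interface; the device firmware owns the real state."""
    def query(self, param: str) -> Any: ...
    def write(self, param: str, value: Any) -> None: ...

class DeviceFirst:
    """Ask the device every time: slower, but never out of sync."""
    def __init__(self, device: Device) -> None:
        self.device = device

    def get(self, param: str) -> Any:
        return self.device.query(param)

    def apply(self, settings: Dict[str, Any]) -> None:
        for param, value in settings.items():
            self.device.write(param, value)

class LocalModel:
    """Mirror the device state locally: fast, but it can desynchronise
    (e.g. a reset pressed on the device), so it needs resync()."""
    def __init__(self, device: Device, params: Iterable[str]) -> None:
        self.device = device
        self.params = list(params)
        self.resync()

    def resync(self) -> None:
        self.model = {p: self.device.query(p) for p in self.params}

    def get(self, param: str) -> Any:
        return self.model[param]  # no device round trip; may be stale

    def apply(self, settings: Dict[str, Any]) -> Dict[str, Any]:
        """Send only the values that differ from the model; return them."""
        changed = {p: v for p, v in settings.items() if self.model.get(p) != v}
        for param, value in changed.items():
            self.device.write(param, value)
            self.model[param] = value
        return changed
```

The diffing in `LocalModel.apply` is the "selectively see what the user has changed" case; the price is that every rule the firmware applies to those parameters would have to be duplicated in the model to keep it honest.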

-

The dialogues use the "Root Loop", and the message pump is probably halting before the message is sent once the first dialogue is shown. Use your own dialogue, or set the string control to "Update value while typing" to separate the events. This should give you the result you are expecting.

-

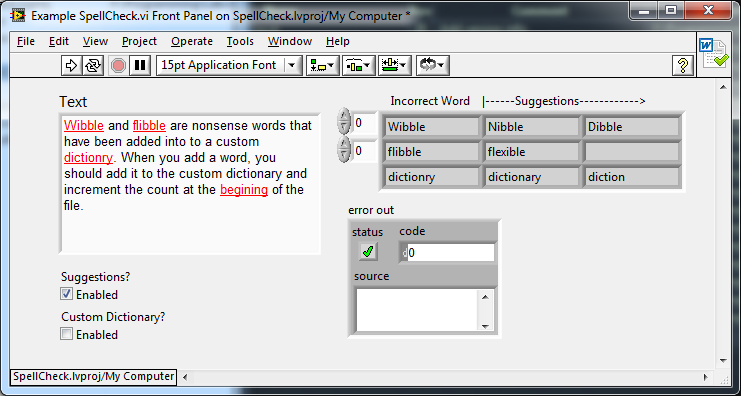

There are probably a few improvements it could benefit from. The Hunspell library only checks individual words, so the regex I used to split the text may need tweaking, for example. As long as there are no show-stoppers, I'll leave it a month for people to play and make suggestions like yours, then I'll revisit.
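Because a word-level checker only ever sees one word, the splitting regex decides what counts as a word. The Python sketch below shows one possible splitter: letters with internal apostrophes or hyphens kept together, digits and punctuation skipped, and offsets kept so misspellings can be highlighted in place. The pattern is an assumption, not the one the API uses, and `is_correct` is a hypothetical stand-in for a real dictionary lookup rather than a Hunspell binding. Whether hyphenated forms should be split further depends on the dictionary.

```python
import re
from typing import Callable, List, Tuple

# Runs of letters, optionally joined by internal apostrophes (straight or
# curly) or hyphens, e.g. "don't", "re-check"; digits and punctuation skipped.
WORD_RE = re.compile(r"[^\W\d_]+(?:['\u2019-][^\W\d_]+)*")

def split_words(text: str) -> List[Tuple[int, str]]:
    """Return (offset, word) pairs so misspellings can be highlighted in place."""
    return [(m.start(), m.group()) for m in WORD_RE.finditer(text)]

def misspelled(text: str,
               is_correct: Callable[[str], bool]) -> List[Tuple[int, str]]:
    """Check each word individually, as a word-level checker requires."""
    return [(pos, word) for pos, word in split_words(text) if not is_correct(word)]
```

For example, `split_words("Don't re-check 42 items!")` yields `(0, "Don't")`, `(6, "re-check")` and `(18, "items")`.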


-

1.0.1 has been released with the dependency removed. You should be good to go!


-

Not really. There isn't much to it. The build environment was imported from a VC++ workspace into Code::Blocks, so I'll go through it so that external dependencies aren't required (I thought that was too easy... lol). In the meantime, you can get a 32-bit binary from here to play with (rename it to libhunspellx32.dll), or with a bit more google-fu you can find others. They are all 32-bit, though. If 64-bit builds were available, I wouldn't have bothered distributing them with the API.
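A process can only load a DLL built for its own bitness, so 32-bit LabVIEW needs the 32-bit DLL even on 64-bit Windows. That is why shipping both builds side by side with a bitness suffix is convenient. The Python sketch below picks the file name from the running process's pointer size; the `libhunspellx<bits>.dll` pattern is extrapolated from the 32-bit name above, so the 64-bit name is hypothetical, as are the function names.

```python
import struct
from typing import Optional

def process_bits() -> int:
    """Bitness of the running process (pointer size), not of the OS."""
    return struct.calcsize("P") * 8

def hunspell_library_name(bits: Optional[int] = None) -> str:
    """Choose the Hunspell binary that matches the calling process.

    The 'libhunspellx<bits>.dll' pattern is an assumption based on the
    32-bit file name; the 64-bit name is hypothetical.
    """
    if bits is None:
        bits = process_bits()
    if bits not in (32, 64):
        raise ValueError(f"unsupported bitness: {bits}")
    return f"libhunspellx{bits}.dll"
```

On Windows the result could then be passed to `ctypes.CDLL` to load whichever binary matches; in LabVIEW the equivalent is wiring the chosen path to a Call Library Function Node.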


-

Mission accomplished!


-

No, it's my bad. The thread is about IDE spell checking through a right-click plugin, so my use case is a superset. It was just the only LAVAG thread about spell checking. There is an external spell-checking library (scroll up for the link). It works very well. The problem is that it's LGPL, GPL or MPL (you have a choice), but they all burden the distribution of binaries with source (for a couple of years). So I'm umming and ahhing about not supplying the binaries and just supplying the LabVIEW code as BSD-3. Then whoever wants to can build their own binaries or find pre-built ones elsewhere. The problem there is that it will make it almost unusable for many LabVIEW people who are using 64-bit if I don't supply them.


-

I have four use cases:

1. Add spell checking to "Project Probe" and integrate the "VI Documenter" with autocorrect.
2. Spell check readme and changelog files.
3. Create an XControl (string control) with "as you type" spell checking built in.
4. Add spell checking to a report generator someone has asked me to create.

So far, looking good.


-

No. I want a spell checker that I can use in applications on any text, not just in the LabVIEW IDE. That's very short-sighted. Time to write a wrapper for Hunspell, I suppose.
