infinitenothing

Everything posted by infinitenothing

-

Lookup or add Variant attribute using IPE example

infinitenothing replied to Michael Aivaliotis's topic in LabVIEW General

The variant on the inside is referencing the child's data. The author wants to add the new attribute to the parent variant.

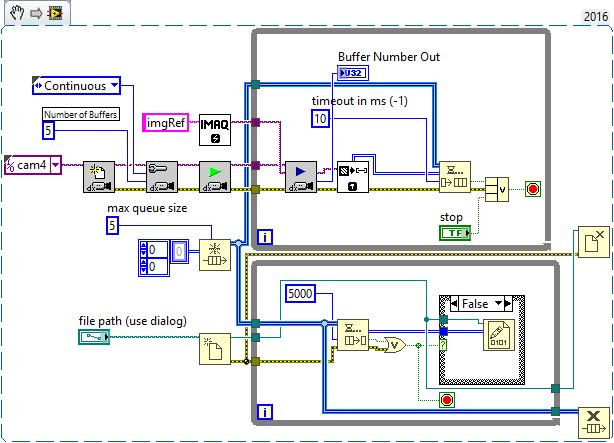

Here's an example using producer/consumer. If you have a solid-state drive or a RAID array, something like this should work. You'll notice there are two places where we're storing images in memory: the "number of buffers" and the "max queue size". In this example, we'd primarily increase the max queue size to store more in RAM. IMAQdx already decouples acquisition for you, so another option (not shown) is to increase the "number of buffers". While you were saving the image (where the enqueue is now), IMAQdx would be filling the next buffer in parallel. The only caveat is that IMAQdx uses a lossy buffer, so you would have to monitor "buffer number out" to make sure you don't miss any frames.
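Since a block diagram doesn't paste as text, here is a rough Python analogue of the same producer/consumer idea. It is only a sketch, not the VI above: `MAX_QUEUE_SIZE`, `acquire_frames`, `save_frames`, and `count_missed` are made-up names, the "frames" are placeholder bytes, and the blocking `put` is a simplification (a real lossy driver buffer would keep overwriting instead of waiting).

```python
import queue
import threading

MAX_QUEUE_SIZE = 8  # hypothetical; larger values hold more frames in RAM


def acquire_frames(frame_queue, n_frames):
    """Producer: stands in for the acquisition loop."""
    for frame_number in range(n_frames):
        frame = bytes([frame_number % 256]) * 16  # placeholder image data
        frame_queue.put((frame_number, frame))    # blocks while the queue is full
    frame_queue.put(None)                         # sentinel: acquisition finished


def save_frames(frame_queue, saved):
    """Consumer: stands in for the disk-writing loop."""
    while True:
        item = frame_queue.get()
        if item is None:
            break
        frame_number, frame = item
        saved.append(frame_number)  # a real consumer would write `frame` to disk


def count_missed(buffer_numbers):
    """Count gaps in an increasing sequence of driver buffer numbers."""
    return sum(b - a - 1 for a, b in zip(buffer_numbers, buffer_numbers[1:]))


def run(n_frames=100):
    frame_queue = queue.Queue(maxsize=MAX_QUEUE_SIZE)
    saved = []
    consumer = threading.Thread(target=save_frames, args=(frame_queue, saved))
    consumer.start()
    acquire_frames(frame_queue, n_frames)
    consumer.join()
    return saved
```

Calling `count_missed` on the buffer numbers you actually received is the text equivalent of watching "buffer number out": any result above zero means the consumer fell behind and frames were overwritten.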

-

Your reference id, 0x496d6167, is hex ASCII for "Imag". That is, it's the first four bytes of your image name. Images are string references, a bit like a named queue. It's too bad Image Refs came before DVRs. It would have been much simpler to understand if you knew the DVR syntax.
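If you want to check the decoding yourself, a couple of lines of Python will do it (the variable names here are just for illustration):

```python
reference_id = 0x496D6167

# Interpret the 32-bit value as four big-endian bytes, then as ASCII text.
first_four_bytes = reference_id.to_bytes(4, byteorder="big")
text = first_four_bytes.decode("ascii")
print(text)  # Imag
```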

-

Custom control request: flat style imaq image display

infinitenothing replied to infinitenothing's topic in User Interface

Good tip! My ROI tools (just the selection and rectangle tool) still look ancient though. It's fixable but I was hoping someone else did it first.

I16 Image into U8 Image only lower byte

infinitenothing replied to @lex's topic in Machine Vision and Imaging

I'm not totally clear. You said IMAQ cast is working. Are you trying to reimplement IMAQ cast?

Looks like it could be a good solution for these ideas: https://forums.ni.com/t5/LabVIEW-Idea-Exchange/Integrate-Start-Asynchronous-Call-with-Static-VI-Reference/idi-p/3307187 http://forums.ni.com/t5/LabVIEW-Idea-Exchange/Allow-Asynchronous-Call-By-Reference-with-Strict-Static-VI/idi-p/1662372

-

I'm not sure why the Idea Exchange mods didn't understand how powerful it is to be able to generate a case structure from a "class". The primary advantage is that you can put terminals, locals, and front-panel-linked property nodes in a case structure but not directly in a subVI. That can make things easier to debug because, from the front panel or from a terminal, you can "find all.." for those. I feel like they are stuck on OOP as a one-size-fits-all solution.

-

TCP write / read problem, disable write buffer ?

infinitenothing replied to Zyl's topic in LabVIEW General

The reader will be using minimal resources while it's waiting for a new message, so there's no need for additional breaths. I still think you need to see where the extra messages are coming from by creating very well-known unique messages. Also, your error handling in the reader terminates at the RT FIFO, so I don't think you're catching errors (which seems like a likely culprit at this point). You can "OR" in the error and the timeout from the read to make sure you don't go processing invalid messages.

TCP write / read problem, disable write buffer ?

infinitenothing replied to Zyl's topic in LabVIEW General

No, from the application level, I don't know of a way to see how many elements are in the buffer. It's unusual to leave anything on the buffer with TCP. Normally, you read as fast as possible without any throttle. You can then implement your own buffer, in a queue for example. Then you'd get to use the queue tools to see what's in the buffer. I think your problem points to an application issue where something's writing faster than you think or your reader isn't really reading. Maybe it's throwing an error or something. What do you think about my "unique data" idea? You could slow the server down to once a second and just send 1, then 2, then 3. You should see that show up on your client. Also, you might want to post your client code.
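To make the "read fast, buffer in your own queue, send unique data" idea concrete, here is a small Python sketch. It is not your LabVIEW code: the 4-byte big-endian counter format (`MESSAGE_SIZE`) and the helper names `recv_exact`, `reader`, `check_sequence`, and `demo` are all assumptions for illustration, and the demo uses a local socket pair instead of a real server.

```python
import queue
import socket
import struct
import threading

MESSAGE_SIZE = 4  # hypothetical: each message is one big-endian unsigned 32-bit counter


def recv_exact(sock, n_bytes):
    """Read exactly n_bytes, or return None if the peer closed first."""
    buffer = bytearray()
    while len(buffer) < n_bytes:
        chunk = sock.recv(n_bytes - len(buffer))
        if not chunk:
            return None
        buffer.extend(chunk)
    return bytes(buffer)


def reader(sock, messages):
    """Drain the socket as fast as possible into an application-level queue."""
    while True:
        try:
            raw = recv_exact(sock, MESSAGE_SIZE)
        except OSError:
            raw = None  # treat any socket error as the end of the stream
        if raw is None:
            messages.put(None)  # sentinel: connection closed or failed
            return
        messages.put(struct.unpack(">I", raw)[0])


def check_sequence(messages):
    """Return (count, problems); problems lists (expected, received) mismatches."""
    count, expected, problems = 0, 1, []
    while True:
        value = messages.get()
        if value is None:
            return count, problems
        count += 1
        if value != expected:
            problems.append((expected, value))
        expected = value + 1


def demo(n_messages=5):
    server, client = socket.socketpair()
    messages = queue.Queue()
    thread = threading.Thread(target=reader, args=(client, messages))
    thread.start()
    for counter in range(1, n_messages + 1):
        server.sendall(struct.pack(">I", counter))  # send 1, then 2, then 3, ...
    server.close()
    thread.join()
    client.close()
    return check_sequence(messages)
```

Because the backlog now lives in your own queue, `messages.qsize()` tells you how far behind the consumer is, and any entry in `problems` points at a duplicated, skipped, or reordered message.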

Quadrature Encoder counter with FPGA

infinitenothing replied to Neville D's topic in LabVIEW General

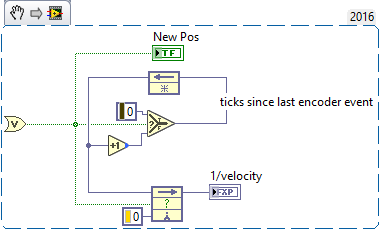

First, calculate how long it's been since the last encoder event. Then you need to latch that number when there's a new event to get the period between the last two events. What you do with the period from there is highly dependent on your application and available resources. If accuracy is of utmost importance, you can store all your periods in a FIFO and then integrate to get times and apply a quadratic fit to get the velocity and acceleration.
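The "integrate, then quadratic fit" step would run on the host side rather than on the FPGA. Here is a minimal Python sketch of it, under some assumptions of mine: each latched period is in seconds, every event is one count in the same direction, and you have at least three events. The names `det3`, `quadratic_fit`, and `fit_motion` are made up, and the fit is a plain least-squares solve of the normal equations.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def quadratic_fit(times, positions):
    """Least-squares fit of position = a + b*t + c*t**2; returns (a, b, c)."""
    s = [sum(t ** n for t in times) for n in range(5)]
    r = [sum(p * t ** n for t, p in zip(times, positions)) for n in range(3)]
    matrix = [[s[0], s[1], s[2]],
              [s[1], s[2], s[3]],
              [s[2], s[3], s[4]]]
    d = det3(matrix)
    coeffs = []
    for col in range(3):  # Cramer's rule: swap one column for the right-hand side
        replaced = [row[:] for row in matrix]
        for i in range(3):
            replaced[i][col] = r[i]
        coeffs.append(det3(replaced) / d)
    return tuple(coeffs)


def fit_motion(periods):
    """periods[k] is the latched time between event k and event k+1, in seconds."""
    times = [0.0]
    for period in periods:
        times.append(times[-1] + period)   # integrate periods into timestamps
    positions = list(range(len(times)))    # one count per event, same direction
    a, b, c = quadratic_fit(times, positions)
    velocity = b + 2.0 * c * times[-1]     # counts per second at the latest event
    acceleration = 2.0 * c                 # counts per second squared
    return velocity, acceleration
```

If direction can change, the positions would need to come from the quadrature count instead of a simple event index.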

TCP write / read problem, disable write buffer ?

infinitenothing replied to Zyl's topic in LabVIEW General

Yeah, what you're describing doesn't make sense. You can't have a server send 82 bytes every 10 ms and have a client receive 82 bytes every 1 ms. Maybe have the server send unique data and verify the messages at the client to see where the extra data is coming from. This won't fix your problem, but I recommend replacing the timed loop with a while loop. TCP code in a timed loop makes no sense to me.

Memory or data Structure corrupt Error 74

infinitenothing replied to Gab's topic in Object-Oriented Programming

Look at the hex. That will show you how they flatten differently. I don't have LV10 so I can't test.
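If you save the two flattened strings to files, you can also compare them outside LabVIEW. This Python sketch only finds where two byte strings first differ and prints a hex view; `first_difference` and `hex_view` are hypothetical helpers, and nothing here knows about LabVIEW's flattened format.

```python
def first_difference(a, b):
    """Return the offset of the first differing byte, or None if identical."""
    for offset, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return offset
    if len(a) != len(b):
        return min(len(a), len(b))  # one string is a prefix of the other
    return None


def hex_view(data, bytes_per_row=16):
    """Format bytes as rows of 'offset  hex bytes' for side-by-side reading."""
    rows = []
    for start in range(0, len(data), bytes_per_row):
        row = data[start:start + bytes_per_row]
        rows.append("%04X  %s" % (start, " ".join("%02X" % byte for byte in row)))
    return "\n".join(rows)
```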

It's weird behavior for a weird use case but it appears to do what it says it's going to do. https://zone.ni.com/reference/en-XX/help/371361M-01/lvhowto/case_selector_values/

-

Memory or data Structure corrupt Error 74

infinitenothing replied to Gab's topic in Object-Oriented Programming

Can you examine the binary flattened string to see the differences between how LV14 would flatten the string vs. LV10? You'll need to change the string indicator type to show hex. I can tell you it's a little unusual to flatten a variant. It's much more common to flatten what would become a variant and then use that as a string in the flattened cluster. For example:

Any difference between application 64 bits vs 32 bits?

infinitenothing replied to ASalcedo's topic in LabVIEW General

I wouldn't say there was a big risk. If you find out 64 bit isn't going to work, you can always just install 32 bit next to it and switch over.

Accessing FPGA Indicator Latency (cRIO)

infinitenothing replied to Yohann's topic in LabVIEW General

What sort of jitter are you seeing? What were you expecting? How much can you tolerate? If you have to have better jitter, try:

- Move the indicators outside the loop. When you write to an indicator in development mode, it needs to use the network. You might also want to move the controls outside too and use something like a single-process, single-element RT FIFO shared variable
- Turn off debug
- Replace the while loop with a timed loop

Area within a curve - Green / Kelvin-Stokes Theorem

infinitenothing replied to gyc's topic in LabVIEW General

Ensegre, don't you need a convex polygon for that? You could convert the vectors to discrete pixels using Bresenham's algorithm.
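For reference, here is a sketch of the integer form of Bresenham's line algorithm in Python. The function name `bresenham` and the tuple output are my own choices; it handles all octants and returns every pixel from one endpoint to the other.

```python
def bresenham(x0, y0, x1, y1):
    """Return the list of integer pixels on the line from (x0, y0) to (x1, y1)."""
    points = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error term shared by both axes
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:   # step in x
            err += dy
            x0 += sx
        if e2 <= dx:   # step in y
            err += dx
            y0 += sy
    return points
```

Running this over each segment of the curve gives a closed pixel boundary, which is what a pixel-based fill would need.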

Area within a curve - Green / Kelvin-Stokes Theorem

infinitenothing replied to gyc's topic in LabVIEW General

How accurate do you have to be? Maybe implement your own flood fill? Otherwise, you have to define some sort of point-to-point interpolation, which could be as simple as defining a polygon.
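If straight-line interpolation between points is good enough, the area follows from the shoelace formula, which is the discrete form of Green's theorem for a polygon. A minimal Python sketch, assuming the points are listed in order around a simple (non-self-intersecting) boundary; `polygon_area` is just an illustrative name:

```python
def polygon_area(vertices):
    """Area of a simple polygon given as an ordered list of (x, y) vertices."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0
```

The absolute value makes the result independent of whether the points run clockwise or counterclockwise.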

Since it's a 1:1 connection, I'm hoping that disconnects are infrequent enough that, in the rare instance there is one, I can just let the user click a "reconnect" button. Of course, to get to that point, I need to rule out as many possible causes of disconnects (application, OS, etc.) as I can, which brought me here. Buffering on the server is something I'm very nervous about. It's a fairly dense data stream and it would pile up quickly or could cause CPU starvation issues if implemented wrong. All this makes me appreciate NI's Network Streams. I just wish there was an open source library for the client.

-

There's a training course: http://sine.ni.com/tacs/app/overview/p/ap/of/lang/en/pg/1/sn/n24:14963/id/1587/

-

I'm debugging an issue where the server and client communicate fine for a long period of time, but then suddenly stop talking. Eventually the server (LabVIEW Linux RT) starts seeing error 56 on send and then, 15 minutes later, the server's read sees error 66. The client is running on Windows (not programmed in LabVIEW) and we can run diagnostics like Wireshark there. It's a 1:1 connection. Is there a way to gather more info as to why a TCP connection fails? For example, to be able to tell whether:

- The client closed the connection (normal disconnect)
- Someone pulled the cable (can blame user)
- The client thread crashed (can blame client developer)
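To illustrate the kind of distinction I'm after, here is a Python sketch of how a plain socket read can end in different ways. It is only a rough classification under my own assumptions, not a diagnosis tool: `classify_disconnect` and its labels are hypothetical, and a silent timeout alone cannot tell a pulled cable from a peer that simply stopped sending.

```python
import errno
import socket


def classify_disconnect(sock, timeout_s=5.0):
    """Attempt one read and return a short label describing how it ended."""
    sock.settimeout(timeout_s)
    try:
        data = sock.recv(4096)
    except (socket.timeout, TimeoutError):
        return "timeout"       # peer silent or link down; indistinguishable here
    except ConnectionResetError:
        return "reset"         # RST received: peer process died or aborted
    except OSError as exc:
        if exc.errno in (errno.EHOSTUNREACH, errno.ENETUNREACH):
            return "unreachable"  # network path lost (cable, switch, routing)
        return "error"
    if data == b"":
        return "closed"        # orderly shutdown: the peer closed its side
    return "data"
```

Logging this label with a timestamp on each side, next to a Wireshark capture, would at least show whether a FIN or RST was ever seen before the errors started.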